Introduction to Explainable AI (XAI)

What is Explainable AI and Why is it Important?

Explainable AI (XAI) is a field focused on developing methods and tools that make it possible to understand how and why AI models make certain decisions. As complex models such as deep neural networks or ensemble learning algorithms become more popular, it is increasingly difficult to explain the basis for a model’s specific decision. XAI aims to make these processes more transparent and understandable for users, developers, and decision-makers.

The importance of XAI is especially evident in sectors where AI decisions have a direct impact on people—such as healthcare, finance, law, or public administration. Model transparency builds trust, facilitates the adoption of new technologies, and helps better manage the risks associated with incorrect or unethical decisions.

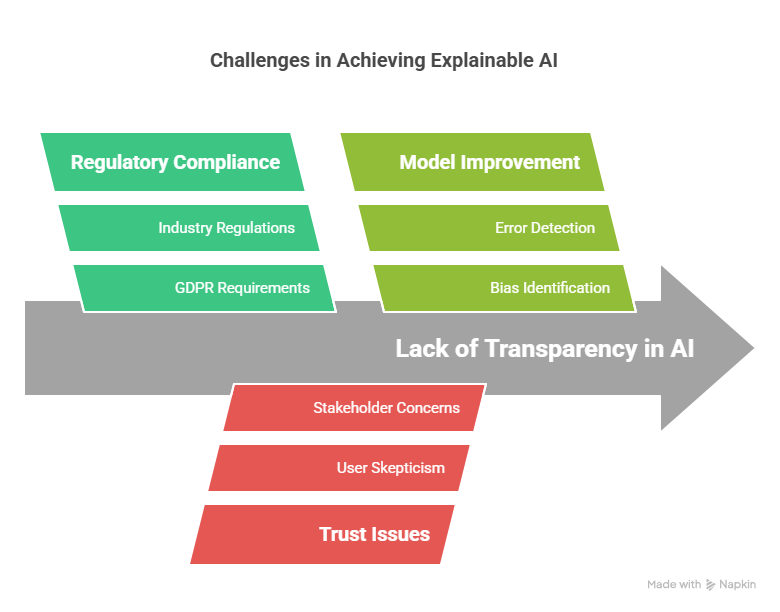

The Need for Transparency in AI

Modern AI models, especially those based on deep learning, often operate as “black boxes”—they generate accurate predictions but cannot explain how they arrived at them. Lack of transparency can lead to serious issues, such as:

Difficulty detecting errors and biases in the model,

Lack of trust from users and stakeholders,

Problems with deploying AI in regulated sectors (e.g., banking, healthcare),

Limited ability to improve and optimize models.

Transparency in AI not only helps us better understand how algorithms work, but also allows for quicker responses to irregularities, ensures regulatory compliance, and builds a positive image for companies using AI.

Benefits of XAI: Trust, Compliance, Model Improvement

Explainable AI brings a range of benefits for both organizations and end users:

Trust – Users are more likely to use AI systems whose decisions are understandable and justified.

Regulatory compliance – Some regulations (e.g., the GDPR in the EU) require organizations to provide meaningful information about automated decisions, and regulated industries often add requirements of their own.

Model improvement – Analyzing explanations helps detect errors, biases, and unexpected model behaviors, making optimization easier.

Better human–AI collaboration – Understanding how a model works enables more effective collaboration and better decision-making supported by AI.

Key Concepts in Explainable AI (XAI)

Interpretability vs. Explainability

In the context of artificial intelligence, two terms often appear: interpretability and explainability. While they are related, they do not mean the same thing.

Interpretability refers to the degree to which a human can understand the internal mechanisms of an AI model. An example of an interpretable model is linear regression, where the influence of each feature on the outcome is clearly defined by its coefficients.

Explainability, on the other hand, is the ability of the model (or supporting tools) to generate human-understandable explanations for specific decisions or predictions. Even if a model is complex and not inherently interpretable (such as a deep neural network), post-hoc methods can be used to explain why the model made a particular decision.

Intrinsic vs. Post-hoc Explainability

The explainability of AI models can be divided into two main types:

Intrinsic explainability – refers to models that are inherently understandable, such as decision trees, linear regression, or logistic regression. In these models, it is easy to trace how individual features affect the outcome.

Post-hoc explainability – refers to techniques applied after the model has been trained, aimed at explaining the behavior of complex “black box” models (e.g., neural networks, random forests). Examples of such methods include LIME, SHAP, or feature importance analysis.

Local vs. Global Explanations

Explanations generated by XAI can be divided into:

Global explanations – describe the general principles of how the model works across the entire dataset. They help understand which features are most important for all predictions and how the model makes decisions in various cases.

Local explanations – concern individual predictions. They answer the question: “Why did the model make this decision for this specific case?” An example could be explaining why a bank customer was denied a loan.
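The difference can be sketched with a toy linear scoring model. The weights, feature names, and applicants below are invented for illustration and do not come from any real system. The local explanation lists each feature's contribution for one applicant, while the global explanation averages the absolute contributions over the whole (tiny) dataset.

```python
# Hypothetical linear scoring model; weights and data are invented for illustration.
WEIGHTS = {"income": 0.5, "active_loans": -1.0, "years_employed": 0.2}


def local_explanation(applicant):
    """Contribution of each feature to this one applicant's score (weight * value)."""
    return {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}


def global_explanation(applicants):
    """Mean absolute contribution of each feature across all applicants."""
    totals = {name: 0.0 for name in WEIGHTS}
    for applicant in applicants:
        for name, contribution in local_explanation(applicant).items():
            totals[name] += abs(contribution)
    return {name: total / len(applicants) for name, total in totals.items()}


applicants = [
    {"income": 4.0, "active_loans": 3, "years_employed": 5},
    {"income": 6.0, "active_loans": 0, "years_employed": 10},
]
print("Local (first applicant):", local_explanation(applicants[0]))
print("Global (all applicants):", global_explanation(applicants))
```

For the first applicant the number of active loans dominates the score, whereas across the dataset income has the largest average effect. That is why a local and a global explanation of the same model can point to different features.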

Methods and Techniques in Explainable AI (XAI)

Rule-Based Systems

Rule-based systems are among the oldest and most interpretable methods in artificial intelligence. They operate based on clearly defined “if–then” rules, which are easy for humans to understand and trace. An example is an expert system in medicine that generates a diagnosis based on symptoms and test results according to established rules. While such systems are highly transparent, they have limited ability to solve complex problems and do not learn from new data.
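A minimal sketch of such a system might look as follows. The rules, thresholds, and conclusions are hypothetical and have no medical validity. The point is only that the rule that fired is itself the explanation.

```python
# Hypothetical triage rules, invented for illustration; not medical advice.
# Each rule is (name, condition, conclusion); rules are checked in order.
RULES = [
    ("fever and cough", lambda p: p["temperature_c"] >= 38.0 and p["cough"], "suspected flu"),
    ("fever only", lambda p: p["temperature_c"] >= 38.0, "unspecified infection"),
]


def diagnose(patient):
    """Return (conclusion, name of the rule that fired)."""
    for name, condition, conclusion in RULES:
        if condition(patient):
            return conclusion, name
    return "no finding", "default"


conclusion, rule = diagnose({"temperature_c": 38.5, "cough": True})
print(f"Conclusion: {conclusion} (because rule '{rule}' matched)")
```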

Linear Models

Linear models, such as linear regression or logistic regression, are inherently interpretable. Each feature is assigned a coefficient that determines its influence on the outcome. This makes it easy to understand which variables are most important to the model and how changing a feature’s value affects the prediction. Linear models are often used as a reference point in AI explainability.
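To illustrate how a coefficient is read, here is a small sketch that fits a one-feature linear regression by ordinary least squares in plain Python. The apartment sizes and prices are made up, and the helper name `fit_line` is an assumption for this example.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Invented data: apartment size (square metres) and price (thousands).
sizes = [30, 50, 70, 90]
prices = [110, 170, 230, 290]

intercept, slope = fit_line(sizes, prices)
print(f"Each extra square metre changes the predicted price by {slope:.1f} thousand")
```

The slope is the whole explanation: it states how much the prediction moves when the feature increases by one unit, with everything else held fixed.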

Decision Trees

Decision trees are another example of highly interpretable models. They make decisions by traversing nodes, where at each stage a condition on one of the features is checked. The path from the root to a leaf node constitutes an explanation of why the model made a particular decision. Decision trees are widely used in classification and regression, and their graphical representation makes model analysis easier.
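The idea that the root-to-leaf path is the explanation can be sketched with a hand-written tree. The features and thresholds below are hypothetical, and the tree is stored as nested dictionaries rather than learned from data.

```python
# Hypothetical hand-written tree; features and thresholds are invented for illustration.
TREE = {
    "feature": "income", "threshold": 3000,
    "left": {"leaf": "deny"},
    "right": {
        "feature": "active_loans", "threshold": 2,
        "left": {"leaf": "approve"},
        "right": {"leaf": "deny"},
    },
}


def predict_with_path(node, sample):
    """Walk from the root to a leaf, recording every condition that was checked."""
    path = []
    while "leaf" not in node:
        feature, threshold = node["feature"], node["threshold"]
        if sample[feature] <= threshold:
            path.append(f"{feature} <= {threshold}")
            node = node["left"]
        else:
            path.append(f"{feature} > {threshold}")
            node = node["right"]
    return node["leaf"], path


decision, path = predict_with_path(TREE, {"income": 5000, "active_loans": 1})
print(decision, "because", " and ".join(path))
```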

Feature Importance

Feature importance is a technique that allows you to determine which input variables have the greatest impact on the model’s decisions. For ensemble models such as random forests or gradient boosting, you can calculate a ranking of features based on their contribution to data splits. Feature importance analysis helps understand what the model bases its predictions on and whether it relies on irrelevant or misleading information.
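Split-based rankings are specific to tree ensembles. A related, model-agnostic variant is permutation importance: shuffle one feature column and measure how much accuracy drops. The sketch below shows that variant, not the split-based calculation, using a toy model and random data invented for the example.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows for which the model's output equals the label."""
    return sum(model(row) == label for row, label in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, n_features, seed=0):
    """Drop in accuracy when a single feature column is shuffled, per feature."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(n_features):
        column = [row[j] for row in rows]
        rng.shuffle(column)
        shuffled = [row[:j] + [value] + row[j + 1:] for row, value in zip(rows, column)]
        importances.append(baseline - accuracy(model, shuffled, labels))
    return importances


def toy_model(row):
    """Toy classifier that only looks at feature 0; feature 1 is ignored."""
    return int(row[0] > 0.5)


data_rng = random.Random(1)
rows = [[data_rng.random(), data_rng.random()] for _ in range(200)]
labels = [toy_model(row) for row in rows]

print(permutation_importance(toy_model, rows, labels, n_features=2))
```

Shuffling the ignored feature leaves accuracy unchanged, so its importance is zero, while shuffling feature 0 causes a large drop. A feature that should be irrelevant but shows a high importance is a hint that the model relies on misleading information.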

LIME (Local Interpretable Model-agnostic Explanations)

LIME is a popular post-hoc method that explains individual predictions of any model (model-agnostic). It works by locally approximating a complex model with a simple linear model around a specific example. This helps to understand which features had the greatest impact on a given decision. LIME is widely used for explaining predictions of classification and regression models.
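The core idea can be sketched in plain Python under simplifying assumptions: sample points around the instance, weight them by proximity, and fit a weighted linear model whose slopes act as the explanation. This is not the `lime` library's actual implementation (which also handles feature discretization, sampling from training statistics, and feature selection). The names `local_surrogate`, `solve`, and `black_box` are hypothetical.

```python
import math
import random


def solve(a, b):
    """Solve the linear system a @ x = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def local_surrogate(predict, instance, n_samples=500, scale=0.1, seed=0):
    """Fit a proximity-weighted linear model to `predict` around `instance`."""
    rng = random.Random(seed)
    d = len(instance)
    xtx = [[0.0] * (d + 1) for _ in range(d + 1)]
    xty = [0.0] * (d + 1)
    for _ in range(n_samples):
        offset = [rng.gauss(0.0, scale) for _ in range(d)]
        sample = [v + o for v, o in zip(instance, offset)]
        # Exponential kernel: samples closer to the instance get more weight.
        weight = math.exp(-sum(o * o for o in offset) / (2 * scale ** 2))
        features = [1.0] + offset  # intercept plus centred features
        y = predict(sample)
        # Accumulate the weighted normal equations (X^T W X) and (X^T W y).
        for i in range(d + 1):
            xty[i] += weight * features[i] * y
            for j in range(d + 1):
                xtx[i][j] += weight * features[i] * features[j]
    coefficients = solve(xtx, xty)
    return coefficients[1:]  # local slope per feature


def black_box(x):
    """Stand-in for a complex model: nonlinear in x[0], linear in x[1]."""
    return x[0] ** 2 + 3 * x[1]


print(local_surrogate(black_box, [1.0, 0.0]))
```

Around the point (1, 0) the surrogate's slopes come out close to 2 and 3, which are the local gradients of the black box there. At a different point the slope for the first feature would change, which is what makes the explanation local.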

Example of using LIME in Python:

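python

import lime
import lime.lime_tabular

# Assumes X_train and X_test are pandas DataFrames and model is a fitted
# classifier that exposes predict_proba.
explainer = lime.lime_tabular.LimeTabularExplainer(
    training_data=X_train.values,
    feature_names=X_train.columns.tolist(),
    class_names=['class_0', 'class_1'],
    mode='classification'
)

# Explain the first test row and render the result in a notebook
exp = explainer.explain_instance(X_test.iloc[0].values, model.predict_proba)
exp.show_in_notebook()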

SHAP (SHapley Additive exPlanations)

SHAP is an advanced method for explaining predictions, based on Shapley values from game theory. SHAP assigns each feature a value that reflects its contribution to a specific prediction. The library offers both model-agnostic explainers (such as KernelExplainer) and faster model-specific ones (such as TreeExplainer), and it can generate both local and global explanations. SHAP is especially valued for its strong theoretical foundations and broad support in Python libraries.
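To make the Shapley idea concrete, the sketch below computes exact Shapley values for a tiny model by enumerating every coalition of features. It assumes that a feature missing from a coalition is replaced by a baseline value, which is one common convention. It is not how the `shap` library computes values internally, and the approach only works for a handful of features because the number of coalitions grows exponentially.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley values; features outside a coalition take their baseline value."""
    n = len(instance)

    def value(coalition):
        mixed = [instance[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(mixed)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                coalition = set(subset)
                phi[i] += weight * (value(coalition | {i}) - value(coalition))
    return phi


def toy_model(x):
    """Additive in x[0], with an interaction between x[1] and x[2]."""
    return 2 * x[0] + x[1] * x[2]


instance, baseline = [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, instance, baseline)
print(phi, "sum:", sum(phi))
```

The additive term is credited entirely to the first feature, and the interaction term is split equally between the other two. The values add up to the difference between the prediction for the instance and the prediction for the baseline, which is the additivity property the method is named after.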

Example of using SHAP in Python:

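python

import shap

# Assumes model is a fitted tree-based model (e.g., XGBoost or a random forest)
explainer = shap.TreeExplainer(model)
shap_values = explainer.shap_values(X_test)

# Summary plot: ranks features by their overall impact across X_test
shap.summary_plot(shap_values, X_test)

Attention Mechanisms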

Attention mechanisms are mainly used in modern natural language processing (NLP) and computer vision models. They allow the model to “focus” on the most important parts of the input data when making decisions. Analyzing attention weights can indicate which words or image regions the model attended to, which makes neural networks more transparent, although attention weights are not always a faithful measure of how much an input influenced the output.
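As a rough illustration, the following sketch computes scaled dot-product attention weights for a single query over three tokens. The two-dimensional embeddings are invented for the example; real models learn them and use many attention heads and layers.

```python
import math


def attention_weights(query, keys):
    """Scaled dot-product attention: softmax(query . key / sqrt(d)) over all keys."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    max_score = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - max_score) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Invented 2-dimensional embeddings for three tokens.
tokens = ["the", "movie", "terrible"]
keys = [[0.1, 0.0], [0.5, 0.2], [1.0, 2.0]]
query = [1.0, 1.5]

weights = attention_weights(query, keys)
for token, weight in zip(tokens, weights):
    print(f"{token}: {weight:.2f}")
```

The weights sum to one, and the token whose key aligns best with the query receives the largest share. Plotting these weights over the input is what an attention map shows.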

Counterfactual Explanations

Counterfactual explanations indicate what minimal changes in the input data would have led to a different model decision. For example, for a client who was denied a loan, a counterfactual explanation might show that increasing their income by a certain amount would have resulted in approval. Such explanations are particularly useful in communicating with users and supporting decision-making.
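The loan example can be sketched as a simple search over one feature. The approval rule and the numbers are hypothetical. Practical counterfactual methods search over several features at once and try to keep the suggested changes realistic.

```python
def approve(applicant):
    """Hypothetical loan rule, invented for illustration."""
    return applicant["income"] - 500 * applicant["active_loans"] >= 3000


def income_counterfactual(applicant, step=100, max_increase=10000):
    """Smallest income increase (in multiples of `step`) that flips a denial."""
    if approve(applicant):
        return 0
    for increase in range(step, max_increase + step, step):
        changed = dict(applicant, income=applicant["income"] + increase)
        if approve(changed):
            return increase
    return None  # no counterfactual found within the search range


applicant = {"income": 3200, "active_loans": 2}
increase = income_counterfactual(applicant)
print(f"The loan would have been approved with an income higher by {increase}.")
```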

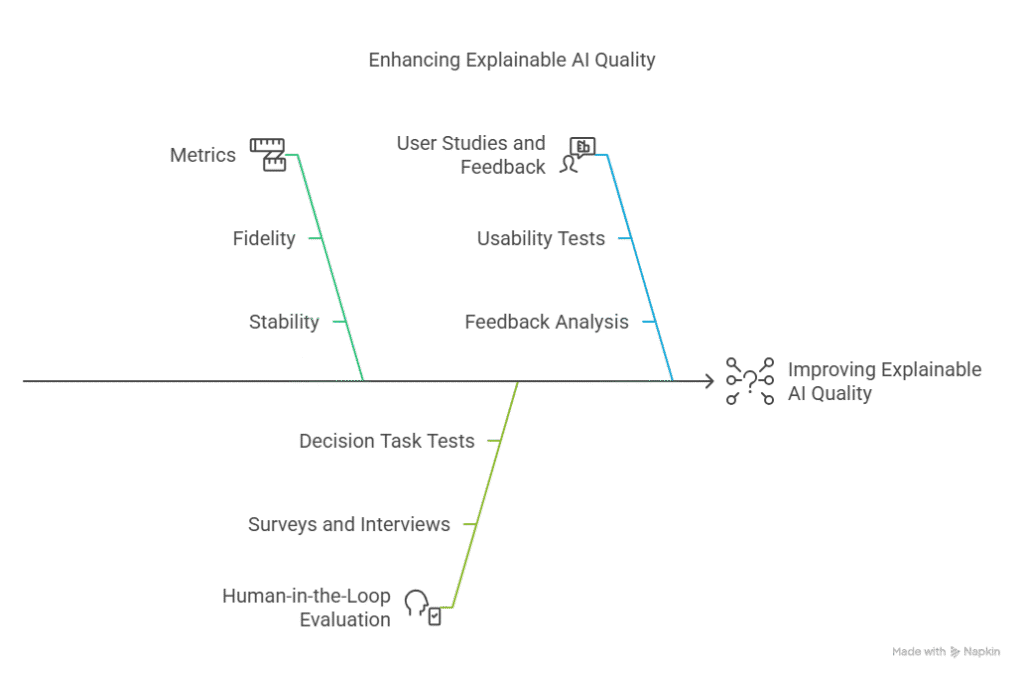

Evaluating Explainability

Metrics for Evaluating Explanations

To effectively implement Explainable AI, it is not enough to simply generate explanations—you also need to be able to assess their quality. Various metrics are used to measure how understandable, accurate, and useful the explanations are for the user. The most important include:

Fidelity – measures how well the explanation reflects the actual behavior of the model. An explanation with high fidelity should be consistent with the model’s predictions.

Stability – measures whether similar cases generate similar explanations. Stability is important so that users can trust the consistency of explanations.

Sparsity – refers to the number of features used in the explanation. The fewer features, the simpler and easier the explanation is to understand.

Human-interpretability – assesses whether the explanation is intuitive and readable for the end user.

In practice, several metrics are often used together to get a more complete picture of explanation quality.
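There is no single agreed formula for these metrics. The sketch below shows one possible way to turn three of them into numbers, under these assumptions: fidelity is measured as agreement between the model and the explanation's surrogate, stability as cosine similarity between the attributions of two similar inputs, and sparsity as the count of non-negligible attributions. All values are invented.

```python
import math


def fidelity(model_outputs, surrogate_outputs):
    """Share of cases where the explanation's surrogate agrees with the model."""
    matches = sum(m == s for m, s in zip(model_outputs, surrogate_outputs))
    return matches / len(model_outputs)


def stability(attribution_a, attribution_b):
    """Cosine similarity between attributions of two similar inputs (non-zero vectors)."""
    dot = sum(a * b for a, b in zip(attribution_a, attribution_b))
    norm_a = math.sqrt(sum(a * a for a in attribution_a))
    norm_b = math.sqrt(sum(b * b for b in attribution_b))
    return dot / (norm_a * norm_b)


def sparsity(attribution, tolerance=1e-6):
    """Number of features with a non-negligible attribution (lower is simpler)."""
    return sum(abs(a) > tolerance for a in attribution)


print("fidelity:", fidelity([1, 0, 1, 1], [1, 0, 0, 1]))
print("stability:", round(stability([0.5, -0.2, 0.0], [0.4, -0.3, 0.0]), 3))
print("sparsity:", sparsity([0.5, -0.2, 0.0]))
```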

Human-in-the-Loop Evaluation

Evaluating the explainability of AI models should not rely solely on quantitative metrics. Human involvement—both from experts and end users—is very important in the evaluation process. Human-in-the-loop means that users test the explanations, assess their usefulness, clarity, and impact on decision-making.

Example methods of human-in-the-loop evaluation include:

Surveys and interviews with users,

Decision task tests (do explanations help users make better decisions?),

Analysis of the time needed to understand the explanation.

Thanks to user feedback, XAI techniques can be better tailored to real needs and expectations.

User Studies and Feedback

Regular user studies are key to developing effective Explainable AI tools. They help identify which types of explanations are most helpful, what information is unclear, and how explanations affect trust in the AI system.

In practice, it is worth:

Conducting usability tests with different user groups,

Collecting and analyzing feedback after deploying the XAI system,

Iteratively improving explanations based on collected opinions.

XAI in Practice: Use Cases and Examples

Healthcare

In healthcare, Explainable AI plays a crucial role because decisions made by AI models can directly impact patients’ health and lives. An example of XAI application is the analysis of medical images, where deep learning models detect cancerous changes in X-rays or CT scans. Thanks to explanations (e.g., attention maps, SHAP, LIME), a doctor can see which parts of the image had the greatest influence on the diagnosis, increasing trust in the system and making it easier to verify results.

Example:

A model detects cancerous changes in an X-ray and generates a heatmap showing which areas of the image were key to the decision. The doctor can then check whether the model missed any important details.
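One simple way to produce such a heatmap is occlusion sensitivity: hide one region at a time and record how much the model's score drops. This is a different technique from attention maps, SHAP, or LIME, and the sketch below uses a 3x3 "image" and a stand-in detector invented purely for illustration.

```python
def occlusion_heatmap(predict, image, fill=0.0):
    """Score drop when each pixel is replaced by `fill`; larger means more influential."""
    base = predict(image)
    heatmap = []
    for r, row in enumerate(image):
        heat_row = []
        for c in range(len(row)):
            occluded = [list(line) for line in image]  # copy so the input is not modified
            occluded[r][c] = fill
            heat_row.append(base - predict(occluded))
        heatmap.append(heat_row)
    return heatmap


def toy_detector(image):
    """Stand-in for a real model: mostly driven by the centre pixel of a 3x3 image."""
    return 0.8 * image[1][1] + 0.2 * image[0][0]


image = [[0.1, 0.2, 0.1], [0.2, 0.9, 0.2], [0.1, 0.2, 0.1]]
heatmap = occlusion_heatmap(toy_detector, image)
for row in heatmap:
    print([round(value, 2) for value in row])
```

The centre cell receives the highest value, so a clinician looking at the heatmap would see that the score depends almost entirely on that region. Real systems occlude patches rather than single pixels to keep the number of model calls manageable.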

Finance

In the financial sector, model explainability is essential due to legal regulations and the need to build customer trust. An example is a credit scoring system, where an AI model decides whether to grant or deny a loan. With XAI, it is possible to explain to the client why they received a particular decision—for example, indicating that the key factors were income, credit history, or the number of active obligations.

Example:

The model denies a loan and generates an explanation: “The decision was made due to insufficient income and a high number of active loans.” Some jurisdictions legally require lenders to state the main reasons for a credit denial.
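A sentence like this can be assembled from per-feature contributions. The sketch below assumes a hypothetical linear score and compares the applicant with a reference applicant. The weights, reference values, and message texts are all invented for the example.

```python
# Hypothetical weights, reference values, and messages; invented for illustration.
WEIGHTS = {"income": 0.001, "active_loans": -0.8, "years_of_history": 0.3}
REFERENCE = {"income": 4000, "active_loans": 1, "years_of_history": 5}
MESSAGES = {
    "income": "insufficient income",
    "active_loans": "a high number of active loans",
    "years_of_history": "a short credit history",
}


def denial_reasons(applicant, top_n=2):
    """Messages for the features that lower the score most versus the reference."""
    contributions = {
        name: WEIGHTS[name] * (applicant[name] - REFERENCE[name]) for name in WEIGHTS
    }
    negative = sorted(
        (item for item in contributions.items() if item[1] < 0),
        key=lambda item: item[1],  # most negative contribution first
    )
    return [MESSAGES[name] for name, _ in negative[:top_n]]


applicant = {"income": 2500, "active_loans": 4, "years_of_history": 6}
reasons = denial_reasons(applicant)
print("The decision was made due to " + " and ".join(reasons) + ".")
```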

Autonomous Vehicles

In autonomous vehicles, Explainable AI helps to understand why the system took certain actions on the road, such as braking or changing lanes. Explanations can be generated based on image analysis from cameras, sensor data, or attention maps in neural networks. This allows engineers to detect errors and improve algorithms more quickly, and users gain greater trust in the technology.

Example:

An autonomous car suddenly brakes—XAI indicates that the reason was a detected obstacle on the road, which the system considered a potential threat.

Criminal Justice

In systems supporting judicial decisions or analyzing the risk of recidivism, Explainable AI is essential to ensure transparency and fairness. AI models can assist judges in risk assessment, but must generate explanations that clarify which factors influenced the evaluation. This helps avoid unconscious bias and ensures compliance with ethical standards.

Example:

A model assesses the risk of recidivism and indicates that the key factors were previous convictions and the defendant’s age. The judge can then verify that the model is not relying on unethical or irrelevant features.

Implementing XAI in Python

Using Libraries like SHAP, LIME, and ELI5

Python offers a rich ecosystem of libraries that make implementing Explainable AI (XAI) techniques accessible and efficient. The most popular tools include:

SHAP (SHapley Additive exPlanations): Based on game theory, SHAP provides both local and global explanations for model predictions. It works with many model types, including tree-based models, neural networks, and linear models.

LIME (Local Interpretable Model-agnostic Explanations): LIME explains individual predictions by approximating the model locally with a simple, interpretable model. It is model-agnostic and works well for tabular, text, and image data.

ELI5: This library offers a unified interface for explaining predictions of various machine learning models, including feature importance, weights, and decision paths. ELI5 integrates with scikit-learn, XGBoost, LightGBM, and others.

Code Examples for Different Techniques

Below are practical code examples showing how to use these libraries to generate explanations for machine learning models.

SHAP Example (Tree-based Model):

python

import shap
import xgboost as xgb
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split

# Load data and train model
data = load_breast_cancer()
X_train, X_test, y_train, y_test = train_test_split(
    data.data, data.target, test_size=0.2, random_state=42
)
model = xgb.XGBClassifier().fit(X_train, y_train)

# Create SHAP explainer and compute SHAP values
explainer = shap.TreeExplainer(model)
shap_values = explainer.shap_values(X_test)

# Visualize feature importance
shap.summary_plot(shap_values, X_test, feature_names=data.feature_names)

LIME Example (Tabular Data):

python

import lime
import lime.lime_tabular

# Reuses X_train, X_test, data, and model from the SHAP example above;
# any classifier with a predict_proba method works here.
explainer = lime.lime_tabular.LimeTabularExplainer(
    training_data=X_train,
    feature_names=list(data.feature_names),
    class_names=['malignant', 'benign'],
    mode='classification'
)

# Explain a single prediction
exp = explainer.explain_instance(X_test[0], model.predict_proba)
exp.show_in_notebook()

ELI5 Example (Feature Importance):

python

import eli5
from eli5.sklearn import PermutationImportance
from sklearn.linear_model import LogisticRegression

# Train a model (e.g., logistic regression) on the data split from the SHAP example above
lr = LogisticRegression(max_iter=1000).fit(X_train, y_train)

# Compute permutation importance on the held-out test set
perm = PermutationImportance(lr, random_state=42).fit(X_test, y_test)
eli5.show_weights(perm, feature_names=list(data.feature_names))

Best Practices for XAI Implementation

Choose the right tool for your model and data type: SHAP is excellent for tree-based models, LIME is flexible for various data types, and ELI5 is great for scikit-learn models.

Combine multiple techniques: Using more than one method can provide a fuller picture of model behavior and increase trust in explanations.

Visualize explanations: Graphical representations (e.g., SHAP summary plots, LIME bar charts) make explanations more accessible to non-technical users.

Validate explanations with domain experts: Always consult with subject matter experts to ensure that explanations are meaningful and actionable.

Integrate XAI into your workflow: Make explanation generation a standard part of model development, validation, and deployment.

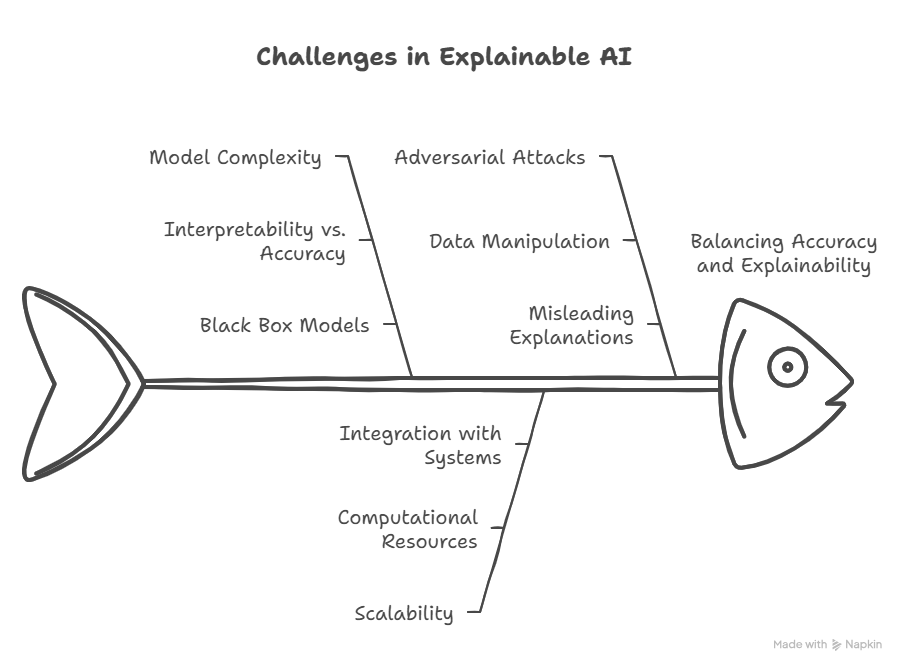

Challenges and Limitations of Explainable AI

Trade-offs Between Accuracy and Explainability

One of the main challenges in Explainable AI is finding the right balance between model accuracy and explainability. Highly interpretable models, such as linear regression or decision trees, are easy to understand but often fall short in predictive performance compared to more complex models like deep neural networks or ensemble methods. On the other hand, these advanced models, while often more accurate, operate as “black boxes” and require additional tools to generate explanations. In practice, the choice of the right model depends on the specifics of the task, regulatory requirements, and user expectations.

Scalability Issues

Implementing XAI at scale comes with both technical and organizational challenges. Generating explanations for thousands or millions of predictions can be time-consuming and require significant computational resources, especially for post-hoc methods like SHAP or LIME. Additionally, integrating XAI tools with existing production systems requires careful architectural design and automation of the explanation generation and presentation process.
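One common way to reduce this cost is to approximate instead of computing exactly. As an example, exact Shapley values need a number of model calls that grows exponentially with the number of features, while a Monte Carlo estimate over random feature orderings needs only about `n_permutations * n_features` calls. The sketch below illustrates that general idea with a toy model. The function name and defaults are assumptions, and it is not the sampling scheme used by any particular library.

```python
import random


def sampled_shapley(predict, instance, baseline, n_permutations=200, seed=0):
    """Monte Carlo Shapley estimate: average marginal contributions over random orderings."""
    rng = random.Random(seed)
    n = len(instance)
    phi = [0.0] * n
    for _ in range(n_permutations):
        order = list(range(n))
        rng.shuffle(order)
        current = list(baseline)
        previous = predict(current)
        for i in order:
            current[i] = instance[i]  # switch feature i from baseline to its actual value
            value = predict(current)
            phi[i] += value - previous
            previous = value
    return [total / n_permutations for total in phi]


def toy_model(x):
    """Two additive terms plus an interaction between x[1] and x[2]."""
    return 2 * x[0] + x[1] * x[2] + x[3]


instance, baseline = [1.0, 2.0, 3.0, 4.0], [0.0, 0.0, 0.0, 0.0]
phi = sampled_shapley(toy_model, instance, baseline)
print([round(value, 2) for value in phi])
```

The estimates for the additive features are exact, and the estimates for the interacting features converge as the number of permutations grows. The permutation count is therefore a direct knob for trading explanation quality against compute.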

Adversarial Attacks on Explanations

A new and increasingly studied problem is adversarial attacks on explanations—attempts to manipulate the model or input data so that the generated explanations are misleading or incorrect. For example, data can be modified so that the model still makes correct decisions, but the explanations point to irrelevant features. Such attacks can lead to a loss of trust in the AI system and incorrect user decisions. Therefore, increasing attention is being paid to testing the robustness of explanations against manipulation and developing methods to detect such attacks.

The Future of Explainable AI

New Techniques and Trends

Explainable AI is a rapidly evolving field, with increasingly advanced techniques and tools emerging. One of the most important trends is integrating explainability at the model design stage, rather than just as an add-on after training. More and more architectures are being developed that are inherently more transparent, such as neural networks with built-in attention mechanisms or hybrid models that combine interpretable rules with deep learning.

There is also growing interest in methods for explaining generative models (e.g., GPT, DALL-E), which can create text, images, or music. Tools are being developed to help understand why a model generated a particular response or image, which is crucial for safety and control over AI.

Integration with AutoML

The automation of machine learning processes (AutoML) increasingly includes aspects of explainability. Modern AutoML platforms not only automatically select the best models and hyperparameters but also generate explanations for the resulting predictions. This allows even people without deep technical knowledge to use AI in an informed and safe way. The integration of XAI with AutoML accelerates AI adoption in business and increases the availability of transparent solutions.

Ethical Considerations

As AI plays a growing role in social and economic life, ethical issues related to explainability are becoming increasingly important. Model transparency is key to ensuring fairness, non-discrimination, and accountability. Many countries are introducing regulations that require algorithmic decisions to be understandable and contestable by users. The development of Explainable AI supports building trust in new technologies and enables their responsible use.

Summary: Key Takeaways and Best Practices

Choosing the Right XAI Method

Choosing the appropriate Explainable AI method depends on several factors: the type of model, the specifics of the task, regulatory requirements, and user expectations. For simple models such as linear regression or decision trees, interpreting coefficients or decision paths may be sufficient. For complex models (e.g., neural networks, random forests), it is worth using post-hoc tools such as SHAP, LIME, or ELI5, which allow you to generate both local and global explanations.

A good practice is to combine several explanation techniques to obtain a more complete picture of the model’s behavior. It is also advisable to regularly consult with domain experts, who can help assess whether the explanations align with industry knowledge and intuition.

Communicating Explanations Effectively

Even the most technically sound explanations will not provide value if they are not understood by users. It is crucial to tailor the form and level of detail of explanations to the audience—data analysts have different needs than bank customers or doctors. It is worth using visualizations (e.g., SHAP plots, attention maps), simple language summaries, and counterfactual examples that show what could change the model’s decision.

It is also good practice to collect user feedback and iteratively improve the way explanations are presented. Effective communication builds trust in the AI system and facilitates its adoption within the organization.

Additional Resources

Links to Articles, Courses, and Libraries

To deepen your knowledge of Explainable AI and stay up to date with the latest techniques, it’s worth using high-quality educational materials and tools. Here are some recommended resources:

Articles and Guides:

A Guide to Explainable AI (O’Reilly)

Explainable AI: Interpreting, Explaining and Visualizing Deep Learning (Interpretable Machine Learning Book)

SHAP Documentation

LIME Documentation

ELI5 Documentation

Online Courses:

Coursera: Explainable AI for Beginners

Udemy: Explainable AI (XAI) – Interpretability for Machine Learning

Google Cloud: Explainable AI

Libraries and Tools:

SHAP (Python)

LIME (Python)

ELI5 (Python)

InterpretML (Microsoft)

Alibi (Seldon)

Knowledge Sources

Books:

“Interpretable Machine Learning” – Christoph Molnar (available online)

“Explainable AI: Interpreting, Explaining and Visualizing Deep Learning” – edited by Wojciech Samek, Grégoire Montavon, Andrea Vedaldi, Lars Kai Hansen, and Klaus-Robert Müller

Communities and Forums:

Stack Overflow – tag explainable-ai

Reddit r/MachineLearning

Kaggle – XAI Discussions
