Introduction to Neuro-Symbolic AI

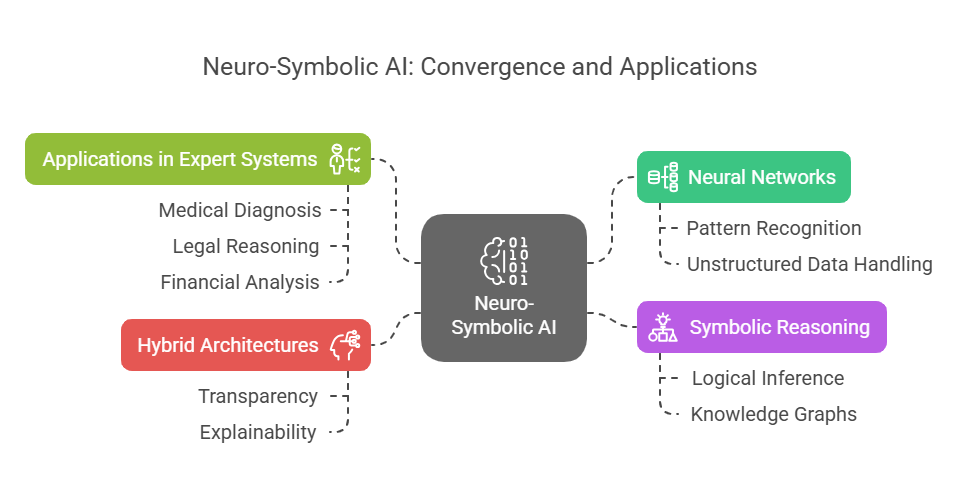

Neuro-symbolic AI is an emerging field that seeks to combine the strengths of neural networks with the capabilities of symbolic reasoning. This hybrid approach aims to bridge the gap between data-driven learning and structured, logic-based knowledge representation, offering new possibilities for building intelligent systems that are both powerful and interpretable.

The Convergence of Neural Networks and Symbolic Reasoning

Traditional neural networks excel at pattern recognition, learning from large datasets, and handling unstructured data such as images, audio, or natural language. However, they often struggle with tasks that require explicit reasoning, logical inference, or the manipulation of abstract concepts. On the other hand, symbolic AI—rooted in logic, rules, and knowledge graphs—enables systems to perform complex reasoning, but typically lacks the flexibility and adaptability of neural approaches.

The convergence of these two paradigms is motivated by the desire to create systems that can learn from data while also reasoning about structured knowledge. By integrating neural and symbolic components, neuro-symbolic AI can, for example, interpret visual scenes (using neural networks) and then answer questions about them using logical reasoning (via symbolic methods). This synergy opens the door to more robust and versatile expert systems.

Benefits of Hybrid Architectures

Hybrid neuro-symbolic architectures offer several key advantages. They can leverage the generalization and learning capabilities of neural networks while maintaining the transparency and explainability of symbolic reasoning. This combination allows for better handling of ambiguous or incomplete data, as the symbolic component can provide context or constraints that guide the neural network’s predictions.

Moreover, hybrid systems are often more interpretable, as symbolic reasoning steps can be traced and understood by humans. This is particularly valuable in domains where trust, accountability, and regulatory compliance are important, such as healthcare or finance. Additionally, the integration of prior knowledge through symbolic representations can reduce the amount of data required for training, making these systems more efficient and adaptable.

Applications in Expert Systems

Expert systems are a natural application area for neuro-symbolic AI. These systems aim to replicate the decision-making abilities of human experts in specific domains, such as medical diagnosis, legal reasoning, or financial analysis. By combining neural networks’ ability to process complex, high-dimensional data with symbolic reasoning’s capacity for explicit logic and domain knowledge, hybrid architectures can deliver more accurate, reliable, and explainable solutions.

Fundamentals of Neural Networks

Neural networks are the foundation of modern artificial intelligence, enabling machines to learn complex patterns and representations from data. Understanding their basic structures, the principles of deep learning, and the methods used for training and optimization is essential for anyone working with hybrid neuro-symbolic AI systems.

Basic Neural Network Architectures

At their core, neural networks are composed of interconnected layers of artificial neurons, inspired by the structure of the human brain. The simplest form is the feedforward neural network, where information flows in one direction from input to output. Each neuron receives inputs, applies a weighted sum, passes the result through an activation function, and forwards the output to the next layer.
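To make the weighted-sum-then-activation step concrete, here is a minimal pure-Python sketch of a tiny feedforward pass. The sigmoid activation, the layer sizes, and all weight values are assumptions chosen for illustration; real systems would use a library such as PyTorch or TensorFlow.

```python
import math

def sigmoid(z):
    """Logistic activation: squashes any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of the inputs, then activation."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

def dense_layer(inputs, weight_rows, biases):
    """A fully connected layer: one neuron per row of weights."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

# Hypothetical network: 2 inputs -> 2 hidden neurons -> 1 output neuron.
hidden = dense_layer([1.0, 0.5], [[0.4, -0.6], [0.3, 0.8]], [0.0, -0.1])
output = dense_layer(hidden, [[1.2, -0.7]], [0.05])
print(hidden, output)
```

Information only moves forward here: the hidden layer's outputs become the inputs of the output layer, which is what "feedforward" means.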

More advanced architectures include convolutional neural networks (CNNs), which are particularly effective for image and spatial data, and recurrent neural networks (RNNs), which are designed to handle sequential data such as time series or natural language. These architectures have specialized layers and connections that allow them to capture spatial or temporal dependencies, making them suitable for a wide range of tasks.

Deep Learning Concepts

Deep learning refers to neural networks with multiple hidden layers, allowing them to learn hierarchical representations of data. Each layer extracts increasingly abstract features, enabling the network to model complex relationships. For example, in image recognition, early layers might detect edges and textures, while deeper layers recognize shapes and objects.

Key concepts in deep learning include backpropagation, the algorithm that computes how much each weight contributed to the error between predicted and actual outputs so that the weights can be updated, and regularization techniques such as dropout or weight decay, which help prevent overfitting and improve generalization. Batch normalization is used mainly to stabilize and speed up training, although it often has a mild regularizing effect as well. The depth and complexity of these networks have led to breakthroughs in fields like computer vision, speech recognition, and natural language processing.

Training and Optimization Techniques

Training a neural network involves finding a set of weights that minimizes the difference between the network’s predictions and the true values, as measured by a loss function. This is typically achieved using gradient descent, an iterative optimization algorithm that repeatedly adjusts the weights in the direction that reduces the loss. For deep networks the result is usually a good solution rather than a provably optimal one.
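As a rough illustration of gradient descent, the sketch below fits a one-parameter model `y_hat = w * x` to toy data. The data, the mean-squared-error loss, the learning rate, and the number of steps are all assumptions picked so the example converges quickly.

```python
# Toy data generated from y = 2x, so the ideal weight is 2.0.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]

def mse_loss(w):
    """Mean squared error of the one-parameter model y_hat = w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def mse_grad(w):
    """Derivative of the loss with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)

w = 0.0               # initial guess
learning_rate = 0.05  # step size (a hyperparameter)
for step in range(100):
    w -= learning_rate * mse_grad(w)  # move against the gradient

print(round(w, 4), round(mse_loss(w), 8))
```

Each update moves `w` a small step in the direction that lowers the loss; with a learning rate that is too large the same loop would overshoot and diverge.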

Several variants of gradient descent exist, such as stochastic gradient descent (SGD), which updates weights using a single example or, in its common mini-batch form, a small batch of data at each step, and adaptive methods like Adam or RMSprop, which adjust per-parameter learning rates dynamically and often converge faster. Hyperparameter tuning, including the selection of learning rates, batch sizes, and network architectures, plays a crucial role in achieving good performance.

Additionally, techniques like early stopping, data augmentation, and transfer learning are commonly used to enhance training efficiency and model robustness. Early stopping prevents overfitting by halting training when performance on a validation set stops improving, while data augmentation increases the diversity of training data. Transfer learning leverages pre-trained models on large datasets, allowing for faster and more effective training on specific tasks.
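Early stopping in particular is easy to sketch. The function below is a simplified, assumed version of the idea: it takes a list of validation losses (made-up numbers here) and reports the epoch at which training would halt once the loss has not improved for `patience` consecutive epochs. The name `early_stopping_epoch` and the `patience` parameter are illustrative.

```python
def early_stopping_epoch(val_losses, patience=2):
    """Return the index of the epoch at which training would stop.

    Training halts once the validation loss has failed to improve
    for `patience` consecutive epochs.
    """
    best = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best = loss
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return len(val_losses) - 1  # never triggered: all epochs were run

# Hypothetical validation losses, one per epoch.
history = [0.90, 0.70, 0.60, 0.62, 0.61, 0.65]
stop_epoch = early_stopping_epoch(history)
print(stop_epoch)
```

In practice one would also keep a copy of the weights from the best epoch and restore them after stopping.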

Symbolic Reasoning and Knowledge Representation

Symbolic reasoning and knowledge representation are fundamental components of artificial intelligence, especially in systems that require explicit logic, structured knowledge, and transparent decision-making. These approaches enable machines to manipulate symbols, apply rules, and reason about relationships, making them essential for expert systems and hybrid neuro-symbolic architectures.

3.1 Logic-Based Systems

Logic-based systems use formal logic to represent knowledge and perform reasoning. The most common forms are propositional logic and first-order logic, which allow the encoding of facts, rules, and relationships in a structured and unambiguous way. These systems can deduce new information from existing knowledge using inference engines, which apply logical rules to derive conclusions. Logic-based approaches are highly interpretable and provide a clear audit trail for decisions, making them valuable in domains where transparency and correctness are critical.
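A very small inference engine can be sketched with forward chaining over propositional rules. This is only an illustration of the idea: the rule format (a tuple of premises plus a conclusion) and the fact names are assumptions, and production systems would use an engine such as Prolog or CLIPS.

```python
def forward_chain(facts, rules):
    """Apply rules (premises -> conclusion) until nothing new is derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and all(p in known for p in premises):
                known.add(conclusion)
                changed = True
    return known

# Illustrative rules: each is (tuple of premises, conclusion).
rules = [
    (("has_fever", "has_cough"), "possible_flu"),
    (("possible_flu",), "recommend_flu_test"),
]
derived = forward_chain({"has_fever", "has_cough"}, rules)
print(sorted(derived))
```

Because every derived fact comes from a named rule, the chain of conclusions doubles as the audit trail mentioned above.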

3.2 Knowledge Graphs and Ontologies

Knowledge graphs and ontologies are powerful tools for organizing and representing complex relationships between entities. A knowledge graph is a network of nodes (representing entities) and edges (representing relationships), enabling machines to understand context and connections within data. Ontologies add an additional layer by defining categories, properties, and constraints, creating a shared vocabulary for a specific domain. These structures support semantic reasoning, data integration, and the discovery of new insights by linking disparate pieces of information in a meaningful way.
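The sketch below stores a toy knowledge graph as a set of (subject, relation, object) triples and follows `is_a` edges to infer class membership that is not stated directly. The entities, relation names, and helper functions are assumptions for illustration; real deployments typically rely on RDF stores or graph databases.

```python
# Triples (subject, relation, object). All entities are illustrative.
triples = {
    ("aspirin", "is_a", "nsaid"),
    ("nsaid", "is_a", "anti_inflammatory"),
    ("anti_inflammatory", "is_a", "drug"),
    ("aspirin", "treats", "headache"),
}

def objects(subject, relation):
    """All objects linked to `subject` by `relation`."""
    return {o for s, r, o in triples if s == subject and r == relation}

def ancestors(entity):
    """All classes reachable by following is_a edges (transitive closure)."""
    found, frontier = set(), [entity]
    while frontier:
        node = frontier.pop()
        for parent in objects(node, "is_a"):
            if parent not in found:
                found.add(parent)
                frontier.append(parent)
    return found

print(sorted(ancestors("aspirin")))
```

The graph never states that aspirin is a drug; that fact is derived by chaining edges, which is a simple form of the semantic reasoning described above.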

3.3 Rule-Based Systems

Rule-based systems encode expert knowledge in the form of if-then rules, allowing for straightforward and interpretable decision-making. Each rule specifies a condition and an action, enabling the system to respond to specific situations based on predefined logic. Rule engines evaluate incoming data against the rule set and trigger appropriate actions or recommendations. This approach is particularly effective in domains with well-defined procedures or regulations, such as medical diagnosis, financial compliance, or industrial automation, where consistency and explainability are paramount.
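A rule engine of this kind can be sketched in a few lines. Here each rule pairs an action label with a condition, and the engine returns the actions of every rule that fires. The rule names, thresholds, and transaction fields are hypothetical and not taken from any real compliance rule set.

```python
# Each rule pairs an action label with a condition over the input record.
RULES = [
    ("flag_large_transfer", lambda t: t["amount"] > 10_000),
    ("require_id_check", lambda t: t["amount"] > 10_000 and not t["verified"]),
    ("flag_foreign", lambda t: t["country"] != "US"),
]

def evaluate(record, rules=RULES):
    """Return the actions of all rules whose condition holds, in rule order."""
    return [action for action, condition in rules if condition(record)]

transaction = {"amount": 25_000, "verified": False, "country": "US"}
fired = evaluate(transaction)
print(fired)
```

Keeping rules as data rather than nested `if` statements makes it easier for domain experts to review, add, or retire individual rules.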

Architectural Patterns for Neuro-Symbolic AI

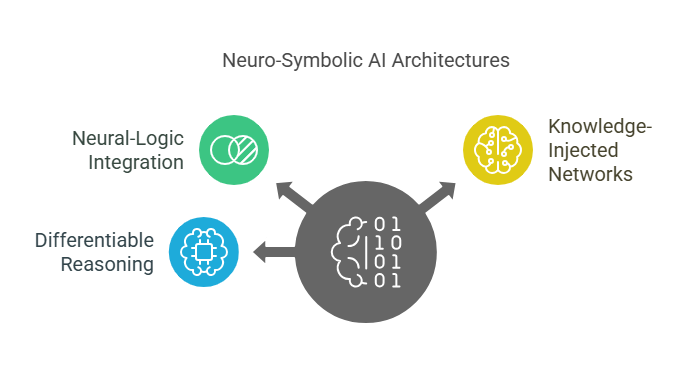

Architectural patterns for neuro-symbolic AI define how neural and symbolic components are integrated to leverage the strengths of both approaches. These patterns are crucial for building systems that can learn from data while also reasoning with structured knowledge, enabling more robust and interpretable expert systems.

4.1 Neural-Logic Integration

Neural-logic integration refers to architectures where neural networks and symbolic logic modules work together, either sequentially or in parallel. In some designs, a neural network processes raw data—such as images or text—and extracts features or predictions, which are then passed to a symbolic reasoning engine for further analysis and decision-making. Alternatively, symbolic logic can guide the neural network by providing constraints or additional context during learning and inference. This integration allows the system to benefit from the adaptability of neural networks and the precision of logical reasoning, making it suitable for tasks that require both perception and explicit rule-following.
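The sequential variant can be sketched as a three-stage pipeline: perceive, convert to facts, reason. In the example below the neural detector is simulated with hard-coded confidences, and the 0.5 threshold, the labels, and the function names are all assumptions for illustration.

```python
def perceive(image_id):
    """Stand-in for a neural detector: returns label -> confidence (made-up values)."""
    simulated = {
        "scene_1": {"red_cube": 0.94, "blue_sphere": 0.88, "green_cone": 0.12},
    }
    return simulated[image_id]

def to_facts(scores, threshold=0.5):
    """Turn confident detections into symbolic facts."""
    return {label for label, p in scores.items() if p >= threshold}

def answer(facts, required):
    """Symbolic step: is every required object present in the scene?"""
    return set(required) <= facts

facts = to_facts(perceive("scene_1"))
print(facts, answer(facts, ["red_cube", "blue_sphere"]))
```

The threshold is where perception hands over to logic; choosing it trades missed facts against spurious ones, which is one of the design decisions in this pattern.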

4.2 Knowledge-Injected Neural Networks

Knowledge-injected neural networks are architectures where symbolic knowledge is embedded directly into the neural network’s structure or training process. This can be achieved by encoding domain rules, ontologies, or prior knowledge as additional features, constraints, or loss functions. For example, a neural network for medical diagnosis might be trained with extra penalties for predictions that violate known medical guidelines. By injecting knowledge, these networks can learn more efficiently, require less data, and produce outputs that are more consistent with established domain expertise. This approach also helps improve interpretability, as the influence of symbolic knowledge can often be traced within the model’s behavior.
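One way to picture the "extra penalty" idea is a loss with two terms: the usual cross-entropy plus a weighted penalty on probability mass assigned to a prediction that a rule forbids. The rule used here ("flu is implausible without fever"), the penalty weight, and the probabilities are invented for illustration and are not medical guidance.

```python
import math

def cross_entropy(probs, target):
    """Standard classification loss for the true label `target`."""
    return -math.log(probs[target])

def guideline_penalty(probs, patient):
    """Probability mass placed on a prediction the assumed rule rules out."""
    # Hypothetical rule: "flu" is implausible when the patient has no fever.
    return probs["flu"] if not patient["fever"] else 0.0

def total_loss(probs, target, patient, weight=2.0):
    """Data-driven loss plus a weighted knowledge-based penalty."""
    return cross_entropy(probs, target) + weight * guideline_penalty(probs, patient)

probs = {"flu": 0.6, "cold": 0.3, "healthy": 0.1}  # simulated model output
print(total_loss(probs, "cold", {"fever": True}))
print(total_loss(probs, "cold", {"fever": False}))
```

For the patient without fever the loss is higher, so gradient-based training would push the model away from predicting flu in that situation even before it has seen many such examples.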

4.3 Differentiable Reasoning

Differentiable reasoning involves designing symbolic reasoning components that are compatible with gradient-based optimization, allowing them to be trained alongside neural networks. This is achieved by making logical operations differentiable, so they can participate in backpropagation and end-to-end learning. Examples include neural theorem provers and differentiable logic layers, which can learn to perform logical inference from data. Differentiable reasoning enables seamless integration of learning and reasoning, allowing the system to adapt its symbolic logic based on experience and feedback. This pattern is particularly promising for complex tasks where both flexible learning and structured reasoning are required.
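A common way to make logic differentiable is to replace Boolean values with truth degrees in [0, 1] and Boolean operators with smooth functions. The sketch below uses the product t-norm as one possible choice and estimates a gradient by finite differences; frameworks in this area use automatic differentiation and their own operator definitions, so treat the function names and the rule as assumptions.

```python
def soft_and(a, b):
    return a * b              # product t-norm

def soft_or(a, b):
    return a + b - a * b      # probabilistic sum

def soft_not(a):
    return 1.0 - a

def soft_implies(a, b):
    return soft_or(soft_not(a), b)

def rule_truth(fever, cough, flu):
    """Soft truth value of the rule: (fever AND cough) -> flu."""
    return soft_implies(soft_and(fever, cough), flu)

def grad_wrt_flu(fever, cough, flu, eps=1e-6):
    """Central finite-difference estimate of d(rule_truth)/d(flu)."""
    up = rule_truth(fever, cough, flu + eps)
    down = rule_truth(fever, cough, flu - eps)
    return (up - down) / (2 * eps)

print(rule_truth(0.9, 0.8, 0.2), grad_wrt_flu(0.9, 0.8, 0.2))
```

On crisp inputs (0 or 1) these operators agree with ordinary Boolean logic, while in between they yield a gradient: here, increasing the predicted `flu` score raises the rule's truth value, which is the signal backpropagation would use.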

Designing Hybrid Architectures

Designing hybrid neuro-symbolic architectures involves thoughtful selection and integration of neural and symbolic components to address specific problem domains. This process requires strategies for choosing the right elements, integrating diverse data sources, and encoding knowledge in a way that both neural networks and symbolic systems can utilize effectively.

5.1 Selecting Appropriate Components

The first step in designing a hybrid architecture is to identify which parts of the problem are best handled by neural networks and which require symbolic reasoning. For example, tasks involving perception—such as image or speech recognition—are typically assigned to neural networks, while tasks that require explicit logic or domain knowledge—such as rule-based decision-making—are managed by symbolic modules. The choice of components depends on the nature of the data, the complexity of the reasoning required, and the need for interpretability.

5.2 Data Integration Strategies

Integrating data from multiple sources is a common challenge in hybrid systems. Neural networks often process unstructured data, while symbolic systems require structured representations. A typical strategy is to use neural networks to extract features or entities from raw data, which are then converted into symbolic representations (such as facts or rules) for further reasoning. Conversely, symbolic knowledge can be used to generate additional features or constraints for neural network training.

Here is a simple Python example that demonstrates how to extract entities from text using a neural network (spaCy), and then represent them as symbolic facts for further processing:

```python
# Requires the model: python -m spacy download en_core_web_sm
import spacy

# Load a pre-trained English model
nlp = spacy.load("en_core_web_sm")

text = "John Smith works at OpenAI in San Francisco."

# Process the text
doc = nlp(text)

# Extract entities and represent them as symbolic facts
facts = []
for ent in doc.ents:
    facts.append(f"Entity({ent.text}, {ent.label_})")

print("Extracted symbolic facts:")
for fact in facts:
    print(fact)
```

This code uses spaCy to extract named entities from a sentence and then formats them as symbolic facts, which could be passed to a rule-based system for further reasoning.

5.3 Knowledge Extraction and Encoding

Encoding domain knowledge in a form usable by both neural and symbolic components is essential for effective hybrid systems. This can involve translating expert rules into machine-readable formats, constructing ontologies, or generating training data that reflects domain constraints. In some cases, knowledge graphs are built from structured data and then used to inform neural network predictions or to validate outputs.

For example, suppose you want to encode a simple rule for a medical expert system: “If a patient has a fever and cough, suggest a flu test.” This can be represented symbolically and used alongside neural predictions:

```python
# Example patient data from a neural network
patient = {"fever": True, "cough": True, "age": 30}

# Symbolic rule
def suggest_flu_test(patient):
    if patient["fever"] and patient["cough"]:
        return "Suggest flu test"
    return "No test needed"

print(suggest_flu_test(patient))
```

This approach allows the system to combine neural predictions (e.g., symptom detection from text or images) with explicit symbolic rules, resulting in more robust and interpretable expert systems.

Implementation and Experimentation

Implementing and experimenting with hybrid neuro-symbolic architectures is a crucial phase in developing effective expert systems. This stage involves selecting the right tools and frameworks, conducting case studies to validate approaches, and using appropriate metrics to evaluate system performance.

Tools and Frameworks

A variety of tools and frameworks support the development of neuro-symbolic systems. For neural network components, popular libraries include TensorFlow and PyTorch, which offer flexibility for building and training deep learning models. For symbolic reasoning, tools such as Prolog and CLIPS are commonly used, along with Python-based libraries like PyKE (a rule engine) and SymPy (a symbolic mathematics library that includes a logic module). Integrating these components often requires custom interfaces or middleware, although some frameworks, such as DeepProbLog or LNN (Logical Neural Networks), are designed specifically for neuro-symbolic integration. Such frameworks reduce the glue code needed between neural and symbolic modules, which makes complex hybrid systems easier to build.

Case Studies

Experimentation with real-world case studies is essential for demonstrating the practical value of hybrid architectures. For example, in healthcare, a hybrid system might use a neural network to analyze medical images and extract features, which are then interpreted by a symbolic reasoning engine that applies medical guidelines to suggest diagnoses. In finance, neural models can process unstructured text from news articles, while symbolic rules assess risk based on extracted information. These case studies highlight how combining neural and symbolic approaches can lead to more accurate, interpretable, and trustworthy expert systems.

Performance Evaluation Metrics

Evaluating the performance of hybrid neuro-symbolic systems requires a combination of traditional machine learning metrics and measures specific to symbolic reasoning. Common metrics include accuracy, precision, recall, and F1-score for classification tasks. For symbolic components, metrics such as rule coverage, inference speed, and explainability are important. Additionally, hybrid systems are often assessed on their ability to generalize to new scenarios, handle ambiguous or incomplete data, and provide transparent justifications for their decisions. Comprehensive evaluation ensures that the system meets both technical and domain-specific requirements.
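The classification metrics are standard and can be computed directly from predictions, as in the sketch below. "Rule coverage" has no single agreed definition; the version here (the fraction of cases for which at least one rule fires) is an assumption, and the labels, cases, and rules are made up.

```python
def classification_metrics(y_true, y_pred):
    """Accuracy, precision, recall and F1 for binary labels (1 = positive)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    correct = sum(1 for t, p in pairs if t == p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": correct / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}

def rule_coverage(cases, rules):
    """Fraction of cases for which at least one rule fires (assumed definition)."""
    covered = sum(1 for case in cases if any(rule(case) for rule in rules))
    return covered / len(cases)

metrics = classification_metrics([1, 1, 1, 0, 0, 0, 1, 0], [1, 1, 0, 0, 1, 0, 1, 0])
cases = [{"fever": True, "cough": True}, {"fever": False, "cough": True},
         {"fever": False, "cough": False}, {"fever": True, "cough": False}]
rules = [lambda c: c["fever"] and c["cough"], lambda c: c["fever"]]
coverage = rule_coverage(cases, rules)
print(metrics, coverage)
```

Low coverage indicates inputs that fall outside the rule set, which are the cases where the system relies on the neural component alone or cannot justify its output.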

Applications in Expert Systems

Hybrid neuro-symbolic architectures are particularly well-suited for expert systems, which require both the ability to process complex, unstructured data and the capacity for explicit, rule-based reasoning. By combining neural and symbolic components, these systems can deliver accurate, interpretable, and reliable solutions across various domains.

7.1 Medical Diagnosis

In medical expert systems, hybrid architectures can analyze patient data from multiple sources. For example, a neural network might process medical images or extract symptoms from clinical notes, while a symbolic reasoning engine applies medical guidelines to suggest possible diagnoses. This approach not only improves diagnostic accuracy but also provides transparent explanations for recommendations.

Here is a simple Python example that demonstrates how a neural network’s output (simulated here) can be combined with symbolic rules for medical decision support:

```python
# Simulated neural network output
patient_data = {
    "fever": True,
    "cough": True,
    "shortness_of_breath": False,
    "age": 45,
}

# Symbolic rules for diagnosis
def diagnose(patient):
    if patient["fever"] and patient["cough"]:
        if patient["shortness_of_breath"]:
            return "Possible pneumonia. Recommend chest X-ray."
        else:
            return "Possible flu. Recommend flu test."
    return "No specific diagnosis. Monitor symptoms."

print(diagnose(patient_data))
```

7.2 Financial Analysis

In finance, hybrid expert systems can process unstructured data such as news articles or social media posts using neural networks, extracting relevant entities and sentiments. Symbolic rules can then assess risk or compliance based on this information. This combination enables more nuanced and explainable financial decision-making.

Example: Extracting sentiment (simulated) and applying a symbolic rule for risk assessment.

```python
# Simulated neural network sentiment analysis
news_sentiment = "negative"
company = "TechCorp"

# Symbolic rule for risk assessment
def assess_risk(sentiment, company):
    if sentiment == "negative":
        return f"Increase risk rating for {company}."
    else:
        return f"No change in risk rating for {company}."

print(assess_risk(news_sentiment, company))
```

7.3 Automated Legal Reasoning

Legal expert systems benefit from hybrid architectures by using neural networks to process and extract information from legal documents, while symbolic reasoning applies statutes and case law to specific scenarios. This enables automated contract analysis, compliance checking, and legal research with clear, auditable logic.

Example: Checking contract compliance with a symbolic rule after extracting contract terms (simulated).

```python
# Simulated extracted contract terms
contract = {
    "payment_due": True,
    "late_fee_applies": False,
}

# Symbolic rule for compliance
def check_compliance(contract):
    if contract["payment_due"] and not contract["late_fee_applies"]:
        return "Send payment reminder. No penalty applied."
    elif contract["payment_due"] and contract["late_fee_applies"]:
        return "Send payment reminder with late fee notice."
    else:
        return "No action needed."

print(check_compliance(contract))
```

Challenges and Limitations

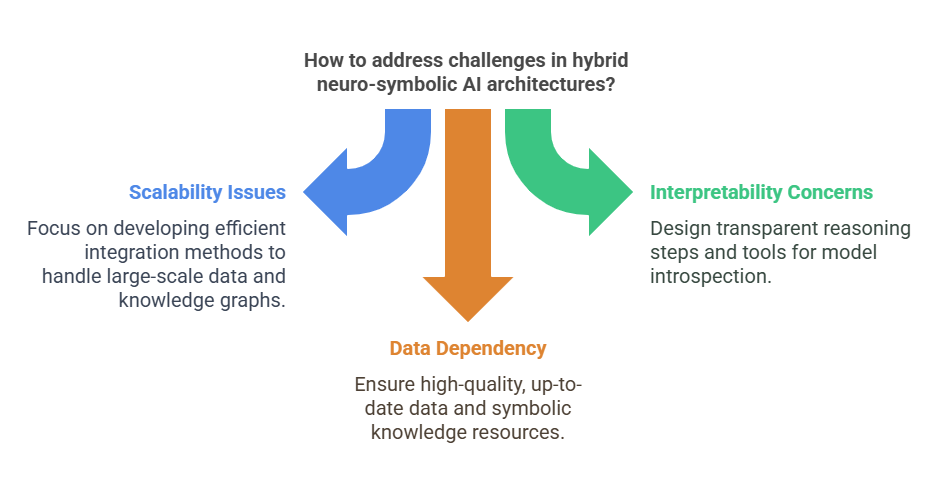

Despite the promise of hybrid neuro-symbolic AI architectures, their development and deployment come with significant challenges and limitations. Understanding these obstacles is crucial for researchers and practitioners aiming to build robust and effective expert systems.

Scalability Issues

One of the primary challenges in hybrid systems is scalability. Neural networks, especially deep learning models, are designed to handle large-scale data and can be efficiently trained on massive datasets using modern hardware. In contrast, symbolic reasoning engines often struggle with scalability, particularly when dealing with extensive rule sets or large knowledge graphs. As the volume and complexity of symbolic knowledge grow, inference can become computationally expensive and slow. Integrating these two paradigms in a way that maintains efficiency at scale remains an open research problem, especially for real-time or high-throughput applications.

Interpretability Concerns

While one of the main motivations for hybrid architectures is to improve interpretability, achieving this goal in practice can be difficult. Neural networks are often considered “black boxes,” making it challenging to trace how specific inputs lead to particular outputs. Although symbolic components can provide transparent reasoning steps, the interaction between neural and symbolic modules can introduce new layers of complexity. Ensuring that the overall system remains explainable, and that users can understand and trust its decisions, requires careful design, documentation, and sometimes the development of new tools for model introspection and visualization.

Data Dependency

Hybrid neuro-symbolic systems can also be highly dependent on the quality and availability of both data and symbolic knowledge. Neural networks require large, well-annotated datasets for effective training, while symbolic reasoning relies on accurate, comprehensive, and up-to-date knowledge bases or rule sets. In many domains, assembling such resources is time-consuming and costly. Furthermore, inconsistencies or gaps in either data or symbolic knowledge can lead to unreliable or biased system behavior. Maintaining and updating both components over time is an ongoing challenge, particularly in rapidly evolving fields.

Future Trends and Research Directions

The field of neuro-symbolic AI is rapidly evolving, with ongoing research and innovation aimed at overcoming current limitations and unlocking new capabilities. Several promising trends and research directions are shaping the future of hybrid expert systems.

Explainable AI (XAI)

Explainability remains a central focus in neuro-symbolic research. As AI systems are increasingly deployed in sensitive domains like healthcare, law, and finance, the demand for transparent and understandable decision-making grows. Future neuro-symbolic architectures are expected to integrate advanced explainability techniques, such as generating human-readable reasoning chains, visualizing the interplay between neural and symbolic components, and providing counterfactual explanations. These advances will help build user trust and facilitate regulatory compliance.

Continual Learning

Another important trend is continual learning, which enables AI systems to adapt to new information and evolving environments without forgetting previously acquired knowledge. In the context of neuro-symbolic AI, this involves not only updating neural network parameters with new data but also dynamically modifying symbolic rules and knowledge bases. Research is focused on developing mechanisms for seamless integration of new knowledge, transfer learning between tasks, and lifelong learning strategies that maintain system performance over time.

Integration with Quantum Computing

Quantum computing is emerging as a potential game-changer for AI, offering the promise of solving certain computational problems much faster than classical computers. Researchers are exploring how quantum algorithms can accelerate both neural and symbolic components of hybrid systems. For example, quantum-enhanced optimization could speed up neural network training, while quantum logic gates might enable more efficient symbolic reasoning. Although still in its early stages, the intersection of neuro-symbolic AI and quantum computing represents an exciting frontier with the potential to revolutionize expert systems.

Conclusion

The development of hybrid neuro-symbolic AI architectures marks a significant step forward in the evolution of expert systems. By combining the strengths of neural networks and symbolic reasoning, these systems are able to tackle complex, real-world problems with a balance of adaptability, accuracy, and interpretability.

Summary of Key Concepts

Throughout this exploration, we have seen how neural networks excel at learning from unstructured data, while symbolic reasoning provides structure, logic, and transparency. Hybrid architectures leverage both approaches, enabling systems to process raw data, extract meaningful features, and apply explicit rules or domain knowledge for decision-making. This synergy is particularly valuable in expert systems, where both data-driven insights and explainable logic are essential.

Best Practices

Building effective neuro-symbolic expert systems requires careful design and integration. Best practices include selecting the right neural and symbolic components for each task, ensuring seamless data flow between modules, and encoding domain knowledge in a way that is both machine-readable and maintainable. Rigorous testing, documentation, and ongoing evaluation are also crucial to ensure reliability, scalability, and user trust. Collaboration between domain experts, data scientists, and engineers is key to capturing the nuances of both data and knowledge.

Final Thoughts on Neuro-Symbolic AI in Expert Systems

Neuro-symbolic AI is still a rapidly developing field, with ongoing research addressing challenges such as scalability, interpretability, and data dependency. As new tools, frameworks, and methodologies emerge, hybrid expert systems are poised to become even more powerful and versatile. Their ability to combine learning and reasoning opens up new possibilities for applications in healthcare, finance, law, and beyond.
