Introduction

What is Reinforcement Learning?

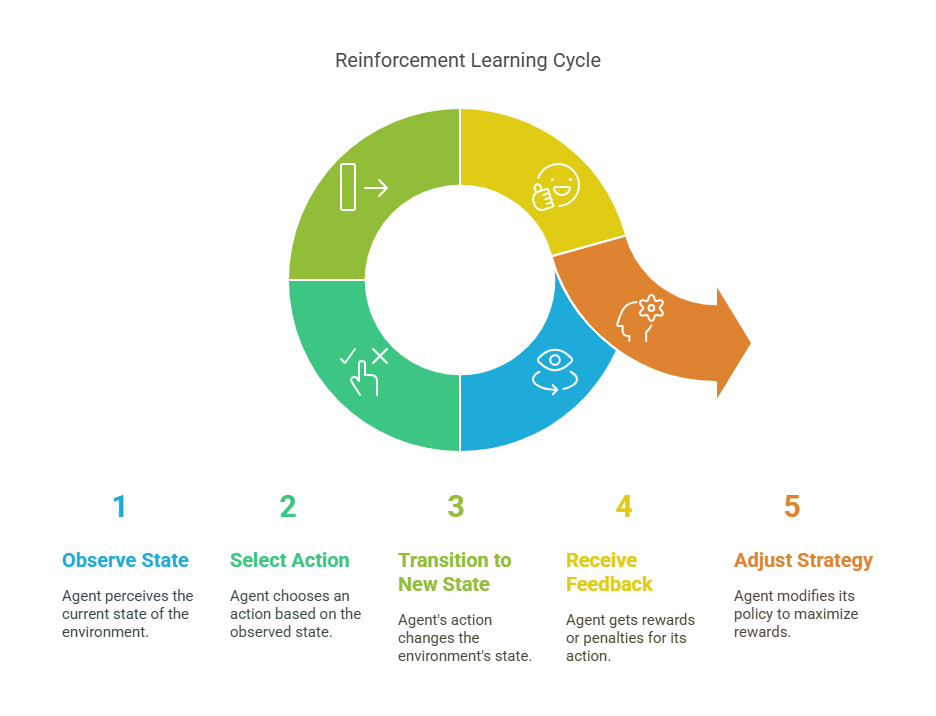

Reinforcement Learning (RL) is a branch of machine learning where an agent learns to make decisions by interacting with an environment. Unlike supervised learning, which relies on labeled data, RL is based on a system of rewards and penalties. The agent takes actions in the environment, receives feedback in the form of rewards or punishments, and adjusts its strategy to maximize cumulative rewards over time.

In RL, the learning problem is usually modeled as a Markov Decision Process (MDP): at each step the agent observes the current state, selects an action, receives a reward, and the environment transitions to a new state. Over many such interactions, the agent learns a policy, a mapping from states to actions; the optimal policy is the one that maximizes the expected cumulative reward.
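To make the observe-act-reward loop concrete, here is a minimal sketch of a tiny MDP written as a transition table. The machine, its states ("ok", "worn", "failed"), the probabilities and the rewards are all hypothetical; the code rolls out one episode under a fixed policy (a plain dict from state to action) and records each (state, action, reward) step.

```python
import random

# Hypothetical MDP: P[state][action] -> list of (probability, next_state, reward)
P = {
    "ok":   {"run":    [(0.9, "ok", 10.0), (0.1, "worn", 10.0)],
             "repair": [(1.0, "ok", -5.0)]},
    "worn": {"run":    [(0.6, "worn", 4.0), (0.4, "failed", -50.0)],
             "repair": [(1.0, "ok", -5.0)]},
}
TERMINAL = {"failed"}

def step(state, action, rng):
    """Sample (next_state, reward) according to the transition probabilities."""
    u, cumulative = rng.random(), 0.0
    for prob, next_state, reward in P[state][action]:
        cumulative += prob
        if u < cumulative:
            return next_state, reward
    _, next_state, reward = P[state][action][-1]  # guard against round-off
    return next_state, reward

def run_episode(policy, max_steps=20, seed=0):
    """Roll out a fixed policy (dict state -> action) and record (s, a, r) tuples."""
    rng = random.Random(seed)
    state, trajectory = "ok", []
    for _ in range(max_steps):
        action = policy[state]
        next_state, reward = step(state, action, rng)
        trajectory.append((state, action, reward))
        if next_state in TERMINAL:
            break
        state = next_state
    return trajectory

policy = {"ok": "run", "worn": "repair"}
for s, a, r in run_episode(policy):
    print(s, a, r)
```

Learning algorithms differ in how they improve such a policy, but they all consume trajectories of this shape.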

The Growing Role of RL in Industry

Reinforcement learning has moved beyond academic research and is now making a significant impact in various industrial sectors. Its ability to handle complex, dynamic, and uncertain environments makes it particularly well-suited for industrial applications where traditional rule-based or supervised approaches may fall short.

Industries such as manufacturing, energy, logistics, and robotics are leveraging RL to optimize processes, improve efficiency, and enable automation. RL is used for tasks like predictive maintenance, process control, resource allocation, and real-time decision-making. As computational power and simulation tools advance, RL is expected to play an even greater role in driving innovation and competitiveness in industrial settings.

Fundamentals of Reinforcement Learning

Key Concepts and Terminology

To understand how RL is applied in industry, it’s important to grasp its foundational concepts:

Agent: The learner or decision-maker (e.g., a robot, a control system).

Environment: The system with which the agent interacts (e.g., a factory floor, a power grid).

State: A representation of the current situation of the environment.

Action: A choice made by the agent that affects the environment.

Reward: Feedback from the environment that evaluates the agent’s action.

Policy: The strategy that the agent uses to determine actions based on states.

Value Function: The expected cumulative reward obtainable from a given state (or state-action pair) when the agent follows a particular policy.

Episode: A sequence of states, actions, and rewards that ends in a terminal state.

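The agent’s goal is to learn a policy that maximizes the expected cumulative reward over time, usually with future rewards discounted by a factor (gamma) between 0 and 1 so that nearer rewards count more.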
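The "cumulative reward" above is usually the discounted return G_t = r_t + gamma * r_{t+1} + gamma^2 * r_{t+2} + ..., and a value function is the expected value of G_t. A minimal sketch of computing it from one episode's rewards (the reward list and gamma values are illustrative):

```python
def discounted_return(rewards, gamma=0.99):
    """G_0 = r_0 + gamma*r_1 + gamma^2*r_2 + ..., accumulated backwards."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g

def returns_to_go(rewards, gamma=0.99):
    """G_t for every time step t of a single episode."""
    out, g = [], 0.0
    for r in reversed(rewards):
        g = r + gamma * g
        out.append(g)
    return out[::-1]

episode_rewards = [0.0, 0.0, 1.0]   # reward arrives only at the final step
print(discounted_return(episode_rewards, gamma=0.5))  # 0.25
print(returns_to_go(episode_rewards, gamma=0.5))      # [0.25, 0.5, 1.0]
```

A smaller gamma makes the agent more short-sighted; values close to 1 make it weigh long-term consequences almost as heavily as immediate ones.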

RL Algorithms Commonly Used in Industry

Several RL algorithms have proven effective in industrial applications. The choice of algorithm depends on the complexity of the environment, the availability of data, and the specific requirements of the task. Commonly used RL algorithms include:

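Q-Learning: A model-free, off-policy, value-based method that learns the optimal action-value function Q(s, a), from which the agent selects the best action for each state. In its basic tabular form it is practical only for small, discrete state and action spaces.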

Deep Q-Networks (DQN): Combines Q-learning with deep neural networks to handle high-dimensional state spaces, making it suitable for complex industrial environments.

Policy Gradient Methods: Directly optimize the policy by adjusting its parameters in the direction that increases expected rewards. Examples include REINFORCE and Proximal Policy Optimization (PPO).

Actor-Critic Methods: Combine value-based and policy-based approaches, learning a policy (actor) and a value function (critic), typically as separate networks or as one network with two output heads. Examples include Advantage Actor-Critic (A2C) and Deep Deterministic Policy Gradient (DDPG).

Model-Based RL: Builds a model of the environment to plan and simulate outcomes, which can be useful when real-world experimentation is costly or risky.

These algorithms form the backbone of RL implementations in industrial settings, enabling agents to learn and adapt to complex, real-world challenges.
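To illustrate the policy-gradient idea from the list above, here is a minimal REINFORCE sketch on a one-step problem (a multi-armed bandit), assuming a softmax policy over action preferences and a running-average reward baseline. The arm means, noise level and learning rate are made up; real industrial use would replace the bandit with a multi-step environment and the preference vector with a neural network.

```python
import math
import random

def softmax(prefs):
    m = max(prefs)                          # subtract max for numerical stability
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]

def reinforce_bandit(true_means, steps=2000, lr=0.1, seed=0):
    """REINFORCE with a running-average baseline on one-step episodes."""
    rng = random.Random(seed)
    theta = [0.0] * len(true_means)         # policy parameters (action preferences)
    baseline = 0.0
    for t in range(1, steps + 1):
        probs = softmax(theta)
        action = rng.choices(range(len(theta)), weights=probs)[0]
        reward = rng.gauss(true_means[action], 1.0)   # noisy reward signal
        baseline += (reward - baseline) / t           # running mean of rewards
        advantage = reward - baseline
        # Gradient of log softmax w.r.t. theta[k] is 1[k == action] - pi(k)
        for k in range(len(theta)):
            grad_log = (1.0 if k == action else 0.0) - probs[k]
            theta[k] += lr * advantage * grad_log
    return softmax(theta)

probs = reinforce_bandit([0.0, 0.5, 1.5])
print([round(p, 3) for p in probs])
```

The parameters move in the direction that makes better-than-average actions more likely, which is the core of REINFORCE; PPO and actor-critic methods refine the same update with a learned critic and step-size control.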

Benefits and Challenges of RL in Industrial Settings

Advantages Over Traditional Methods

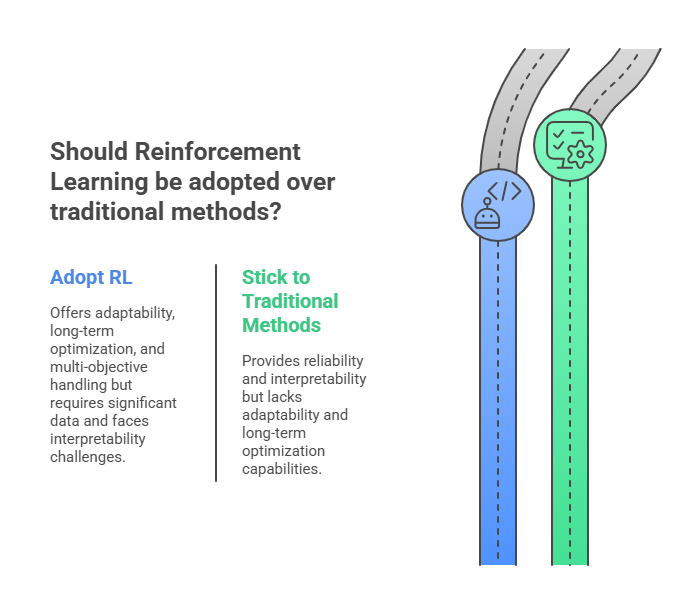

Reinforcement Learning (RL) offers several distinct advantages compared to traditional control and optimization techniques in industrial environments. First, RL excels in dynamic and complex systems where explicit modeling is difficult or impossible. Unlike rule-based systems, RL agents can learn effective strategies directly from data or simulation and, when retrained periodically or updated online, adapt to changing conditions. This adaptability is particularly valuable in industries where processes are non-linear, stochastic, or subject to frequent disturbances.

Another advantage is RL’s ability to optimize for long-term objectives rather than just immediate outcomes. For example, in predictive maintenance, an RL agent can learn to schedule interventions that minimize total downtime and maintenance costs over the equipment’s lifetime, rather than simply reacting to failures as they occur. RL can also handle multi-objective optimization, balancing competing goals such as efficiency, safety, and cost.
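A common way to express such competing goals to a single RL agent is to scalarize them into one reward. The sketch below assumes a hypothetical per-step outcome record and hand-picked weights; choosing those weights is a design decision that shapes which trade-offs the agent learns.

```python
from dataclasses import dataclass

@dataclass
class StepOutcome:
    units_produced: float     # throughput during this control step
    energy_kwh: float         # energy consumed during this control step
    safety_violation: bool    # e.g. a temperature or pressure limit was exceeded

def scalarized_reward(o, w_output=1.0, w_energy=0.5, safety_penalty=100.0):
    """Weighted sum of competing objectives; the weights are hypothetical tuning knobs."""
    reward = w_output * o.units_produced - w_energy * o.energy_kwh
    if o.safety_violation:
        reward -= safety_penalty
    return reward

print(scalarized_reward(StepOutcome(10.0, 8.0, False)))   # 6.0
print(scalarized_reward(StepOutcome(10.0, 8.0, True)))    # -94.0
```

A large penalty discourages violations but does not forbid them, which is why safety-critical limits are usually also enforced outside the reward.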

Practical Challenges and Limitations

Despite its promise, deploying RL in industrial settings comes with significant challenges. One major hurdle is the need for large amounts of high-quality data or realistic simulation environments. Training RL agents through trial and error in the real world can be costly, time-consuming, or even dangerous, especially in critical infrastructure or safety-sensitive applications.

Another challenge is the interpretability and reliability of RL policies. Industrial stakeholders often require clear explanations for automated decisions, especially in regulated sectors. RL models, particularly those based on deep learning, can be seen as “black boxes,” making it difficult to ensure transparency and trust.

Additionally, RL solutions must be robust to unexpected changes and capable of handling rare but critical events. Ensuring safety, stability, and compliance with operational constraints is essential. Finally, integrating RL with existing industrial systems and workflows can require significant engineering effort and domain expertise.

Case Studies of RL in Industrial Applications

Predictive Maintenance and Equipment Optimization

One of the most impactful uses of RL in industry is predictive maintenance. By continuously monitoring sensor data and equipment status, RL agents can learn when to schedule maintenance actions to prevent failures and minimize costs. For example, a manufacturing plant might use RL to optimize the maintenance schedule of its machinery, balancing the risk of breakdowns against the cost of unnecessary servicing.
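The maintenance trade-off can be written as a small MDP. The sketch below assumes a hypothetical machine with five degradation levels (the last one meaning failed), a fixed chance of degrading each step it runs, and made-up rewards for production, preventive maintenance, corrective repair and downtime. Because the model is known here, it is solved with value iteration (a planning method, swapped in for illustration); an RL agent such as Q-learning would learn a similar policy from experience instead.

```python
N_LEVELS = 5            # degradation levels 0 (new) .. 4 (failed); hypothetical
FAILED = N_LEVELS - 1
P_DEGRADE = 0.3         # chance of degrading one level per step while running
GAMMA = 0.95
ACTIONS = ("run", "maintain")

def transitions(state, action):
    """Return a list of (probability, next_state, reward)."""
    if action == "maintain":
        cost = 15.0 if state == FAILED else 3.0   # corrective vs preventive
        return [(1.0, 0, -cost)]
    if state == FAILED:
        return [(1.0, FAILED, -10.0)]              # downtime while broken
    return [(1.0 - P_DEGRADE, state, 1.0), (P_DEGRADE, state + 1, 1.0)]

def q_value(V, s, a):
    return sum(p * (r + GAMMA * V[s2]) for p, s2, r in transitions(s, a))

def value_iteration(tol=1e-9):
    V = [0.0] * N_LEVELS
    while True:
        new_V = [max(q_value(V, s, a) for a in ACTIONS) for s in range(N_LEVELS)]
        if max(abs(x - y) for x, y in zip(new_V, V)) < tol:
            return new_V
        V = new_V

V = value_iteration()
policy = [max(ACTIONS, key=lambda a: q_value(V, s, a)) for s in range(N_LEVELS)]
print(policy)   # action chosen at each degradation level
```

Changing the repair costs or the degradation probability shifts the level at which maintenance becomes worthwhile, which is exactly the balance between breakdown risk and unnecessary servicing described above.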

Process Control in Manufacturing

RL is increasingly used for process control in manufacturing, where it can dynamically adjust parameters such as temperature, pressure, or flow rates to maximize product quality and minimize waste. In chemical plants, for instance, RL agents have been applied to reactor control, and some reported studies find that the learned control strategies outperform conventionally tuned PID controllers.

Energy Management and Smart Grids

In the energy sector, RL is applied to optimize the operation of smart grids, battery storage, and demand response systems. RL agents can learn to balance supply and demand, reduce energy costs, and integrate renewable energy sources more effectively. For example, an RL-based system might control when to charge or discharge batteries in response to fluctuating electricity prices and grid conditions.
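An RL agent for this task needs an environment to interact with. Below is a minimal sketch of a hypothetical battery-arbitrage simulator: the prices, capacity, power limit and the simplification of applying all losses on charging are assumptions. The usage part runs a naive threshold rule rather than a learned policy; it serves as a baseline that a trained agent should match or beat.

```python
from dataclasses import dataclass

@dataclass
class BatteryArbitrageEnv:
    """Hypothetical battery simulator; prices are made-up $/kWh for 1-hour steps."""
    prices: list
    capacity_kwh: float = 10.0
    max_rate_kw: float = 5.0       # max charge/discharge power per step
    efficiency: float = 0.9        # simplification: all losses applied on charging

    def reset(self):
        self.t = 0
        self.soc = 0.0             # state of charge in kWh
        return (self.t, self.soc)

    def step(self, action_kw):
        """action_kw > 0 buys energy to charge, action_kw < 0 discharges and sells."""
        price = self.prices[self.t]
        rate = max(-self.max_rate_kw, min(self.max_rate_kw, action_kw))
        if rate >= 0:
            bought = min(rate, (self.capacity_kwh - self.soc) / self.efficiency)
            self.soc += bought * self.efficiency
            reward = -price * bought
        else:
            sold = min(-rate, self.soc)
            self.soc -= sold
            reward = price * sold
        self.t += 1
        done = self.t >= len(self.prices)
        return (self.t, self.soc), reward, done

env = BatteryArbitrageEnv(prices=[0.10, 0.10, 0.40, 0.40])
state, done, total = env.reset(), False, 0.0
while not done:
    price = env.prices[env.t]
    action = 5.0 if price < 0.25 else -5.0   # naive threshold rule, not a learned policy
    state, reward, done = env.step(action)
    total += reward
print(round(total, 2))   # 2.6
```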

Robotics and Automation

Industrial robotics is a natural fit for RL, as robots often operate in complex, dynamic environments. RL enables robots to learn tasks such as assembly, welding, or material handling through trial and error, improving efficiency and flexibility. In warehouses, RL-powered robots can optimize picking routes and adapt to changing layouts or inventory.

Supply Chain and Logistics Optimization

RL is also making strides in supply chain and logistics, where it helps optimize inventory management, routing, and scheduling. For example, an RL agent can learn to dynamically adjust inventory levels based on demand forecasts, or optimize delivery routes to minimize transportation costs and delivery times.

Implementation Strategies

Data Requirements and Environment Simulation

Implementing reinforcement learning (RL) in industrial settings begins with understanding the data requirements. RL agents learn by interacting with their environment, which means they need access to detailed, high-frequency data about system states, actions, and resulting rewards. In many industrial applications, collecting such data directly from real-world operations can be expensive, risky, or impractical.

To address this, organizations often use environment simulation. By creating digital twins or simulated environments that accurately mimic real-world processes, RL agents can be trained safely and efficiently. These simulations allow for rapid experimentation, safe exploration of edge cases, and the ability to generate large volumes of training data without disrupting actual operations. The quality and fidelity of the simulation are critical: a policy trained on an inaccurate simulation can behave suboptimally or unsafely when deployed in the real world (the so-called sim-to-real gap).
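As a sketch of what such a simulator can look like, the class below models a hypothetical heated tank with a first-order thermal equation. It mimics the reset/step signature used by Gymnasium (reset returns an observation and an info dict; step returns observation, reward, terminated, truncated, info) without depending on that library. All parameters, the overheating threshold and the optional random disturbance used to probe edge cases are assumptions.

```python
import random

class HeatedTankSim:
    """Hypothetical first-order thermal model with a Gymnasium-like reset/step signature."""

    def __init__(self, setpoint=60.0, ambient=20.0, heat_gain=3.0, loss_rate=0.05,
                 max_steps=200, disturbance=0.0):
        self.setpoint = setpoint
        self.ambient = ambient
        self.heat_gain = heat_gain      # degC added per step at full heater power
        self.loss_rate = loss_rate      # fraction of (T - ambient) lost per step
        self.max_steps = max_steps
        self.disturbance = disturbance  # std-dev of random heat disturbances

    def reset(self, seed=None):
        self.rng = random.Random(seed)
        self.temp = self.ambient
        self.t = 0
        return self.temp, {}

    def step(self, heater_power):
        u = min(max(heater_power, 0.0), 1.0)             # actuator limits [0, 1]
        noise = self.rng.gauss(0.0, self.disturbance) if self.disturbance else 0.0
        self.temp += self.heat_gain * u - self.loss_rate * (self.temp - self.ambient) + noise
        self.t += 1
        reward = -abs(self.temp - self.setpoint)          # penalize tracking error
        terminated = self.temp > 95.0                     # unsafe overheating ends the episode
        truncated = self.t >= self.max_steps
        return self.temp, reward, terminated, truncated, {}

sim = HeatedTankSim()
temp, info = sim.reset(seed=0)
done = False
while not done:
    temp, reward, terminated, truncated, info = sim.step(1.0)  # constant full power
    done = terminated or truncated
print(round(temp, 1))   # 80.0 (steady state for these parameters)
```

Keeping the simulator behind the same interface as the real plant adapter makes it easier to swap one for the other during validation.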

Integration with Existing Systems

For RL solutions to deliver value, they must be integrated seamlessly with existing industrial systems such as SCADA (Supervisory Control and Data Acquisition), MES (Manufacturing Execution Systems), or ERP (Enterprise Resource Planning) platforms. This integration enables real-time data exchange, automated decision execution, and continuous feedback for model improvement.

Successful integration often requires collaboration between data scientists, engineers, and domain experts. It may involve developing custom APIs, middleware, or edge computing solutions to ensure that RL agents can interact with legacy equipment and software. Security, reliability, and minimal disruption to ongoing operations are key considerations during this process.

Safety, Reliability, and Real-Time Constraints

Industrial environments demand high standards of safety and reliability. RL agents must be designed to operate within strict safety constraints, ensuring that their actions never endanger people, equipment, or the environment. This can be approached by penalizing unsafe behavior in the reward function, using constrained RL algorithms, or implementing real-time monitoring and override mechanisms. Reward penalties only discourage unsafe actions, so hard limits are usually enforced by an override layer outside the learned policy.
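A minimal sketch of such an override layer (often called a safety shield) is shown below. The limits, the tag names and the "cap power near the limit" rule are hypothetical; the point is that the agent's output is treated as a suggestion and every override is reported so it can be logged.

```python
LIMITS = {
    "power_min": 0.0, "power_max": 1.0,   # actuator range
    "temp_warn": 85.0,                    # above this, heater power is capped
    "temp_trip": 90.0,                    # above this, heater is forced off
    "power_when_warm": 0.2,
}

def shield(proposed_power, temp, limits=LIMITS):
    """Return (action actually applied, reason); hard rules always win over the agent."""
    if temp >= limits["temp_trip"]:
        return 0.0, "override: trip"
    action = min(max(proposed_power, limits["power_min"]), limits["power_max"])
    if temp >= limits["temp_warn"] and action > limits["power_when_warm"]:
        return limits["power_when_warm"], "override: capped near limit"
    if action != proposed_power:
        return action, "override: clipped to actuator range"
    return action, "accepted"

print(shield(0.5, 60.0))   # (0.5, 'accepted')
print(shield(0.9, 91.0))   # (0.0, 'override: trip')
```

Logging how often the shield intervenes is also a useful monitoring signal: a rising override rate suggests the policy is drifting toward unsafe regions.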

Real-time decision-making is another challenge. Many industrial processes require split-second responses, so RL models must be optimized for low-latency inference and robust performance under varying conditions. Testing and validation in both simulated and real-world environments are essential to ensure that RL solutions meet all operational requirements.

Tools and Frameworks for Industrial RL

Popular Libraries and Platforms

A variety of open-source and commercial tools are available to support RL development and deployment in industrial contexts:

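OpenAI Gym: A widely used toolkit for developing and comparing RL algorithms. It provides a standard API and a collection of benchmark environments, making it ideal for prototyping and experimentation. Gym itself is no longer maintained; its actively developed successor, Gymnasium (maintained by the Farama Foundation), keeps the same API.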

Stable Baselines3: A set of reliable implementations of state-of-the-art RL algorithms in Python, built on top of PyTorch. It works with Gymnasium (formerly OpenAI Gym) environments and is suitable for both research and industrial applications.

Ray RLlib: A scalable RL library that supports distributed training and deployment. RLlib is designed for production use and integrates with cloud platforms, making it suitable for large-scale industrial projects.

Microsoft Project Bonsai: A commercial platform focused on industrial control and automation, offering tools for building, training, and deploying RL agents in real-world environments.

TensorFlow Agents (TF-Agents): A flexible library for RL in TensorFlow, supporting a range of algorithms and easy integration with simulation environments.

Example Python Code for RL Implementation

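Below is a simple example of using Q-learning, a foundational RL algorithm, to solve a basic environment using Python and Gymnasium (the maintained successor to OpenAI Gym, with the same reset/step interface as Gym 0.26 and later):

```python
import gymnasium as gym  # maintained successor to OpenAI Gym (same API as gym>=0.26)
import numpy as np

# Deterministic 4x4 FrozenLake; "ansi" mode makes render() return a text grid
env = gym.make("FrozenLake-v1", is_slippery=False, render_mode="ansi")

n_states = env.observation_space.n
n_actions = env.action_space.n
q_table = np.zeros((n_states, n_actions))

alpha = 0.8    # Learning rate
gamma = 0.95   # Discount factor
epsilon = 0.1  # Exploration rate
rng = np.random.default_rng(0)

def greedy(state):
    # Break ties randomly so the untrained agent does not always pick action 0
    q = q_table[state]
    return int(rng.choice(np.flatnonzero(q == q.max())))

for episode in range(1000):
    state, _ = env.reset()
    done = False
    while not done:
        # Epsilon-greedy action selection
        if rng.uniform() < epsilon:
            action = env.action_space.sample()
        else:
            action = greedy(state)

        next_state, reward, terminated, truncated, _ = env.step(action)
        done = terminated or truncated  # episodes also end on the time limit

        # Q-learning update; do not bootstrap from a terminal state
        target = reward if terminated else reward + gamma * np.max(q_table[next_state])
        q_table[state, action] += alpha * (target - q_table[state, action])
        state = next_state

# Test the trained agent with a purely greedy policy
state, _ = env.reset()
print(env.render())
done = False
while not done:
    action = int(np.argmax(q_table[state]))
    state, reward, terminated, truncated, _ = env.step(action)
    done = terminated or truncated
    print(env.render())
```

This code demonstrates the basic workflow of training an RL agent in a simulated environment. In industrial applications, the environment would represent a real process or a high-fidelity simulation, and the RL agent would learn to optimize actions for long-term rewards.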

Best Practices and Lessons Learned

Ensuring Robustness and Scalability

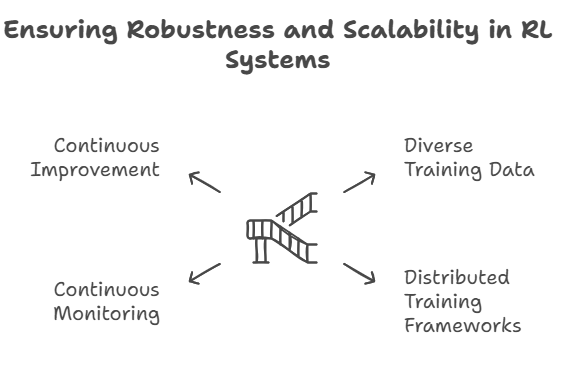

In industrial applications, robustness and scalability are critical for the successful deployment of reinforcement learning (RL) systems. Robustness means that the RL agent can handle a wide range of operating conditions, including unexpected disturbances and rare events, without failure. To achieve this, it is essential to train agents on diverse datasets and simulated scenarios that reflect real-world variability.
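One widely used way to expose an agent to that variability in simulation is domain randomization (named here as a swapped-in technique, not one the text prescribes): each training episode uses simulator parameters drawn from ranges around their nominal values, and a small fraction of episodes inject a rare fault. The parameter names, ranges and fault probability below are hypothetical.

```python
import random
from dataclasses import dataclass

@dataclass(frozen=True)
class ProcessParams:
    heat_gain: float      # degC per step at full power
    loss_rate: float      # fraction of excess temperature lost per step
    sensor_noise: float   # std-dev of measurement noise
    inject_fault: bool    # whether this episode includes a rare fault scenario

# Hypothetical ranges around nominal values, widened to cover plant-to-plant variation
RANGES = {
    "heat_gain": (2.5, 3.5),
    "loss_rate": (0.03, 0.07),
    "sensor_noise": (0.0, 0.5),
}

def sample_params(rng, fault_prob=0.05):
    """Draw one randomized simulator configuration per training episode."""
    values = {name: rng.uniform(lo, hi) for name, (lo, hi) in RANGES.items()}
    return ProcessParams(**values, inject_fault=rng.random() < fault_prob)

rng = random.Random(42)
for episode in range(3):
    params = sample_params(rng)
    # A real training loop would build the simulator from `params` and run one episode here.
    print(episode, params)
```

Ranges that are too narrow leave the agent brittle; ranges that are too wide can make the task needlessly hard, so they are typically tuned against what is observed on the real plant.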

Scalability involves designing RL solutions that can grow with the complexity and size of industrial operations. This includes using distributed training frameworks, cloud computing resources, and modular architectures that allow easy expansion. Continuous monitoring and automated retraining help maintain performance as conditions evolve, ensuring that RL systems remain effective over time.

Monitoring, Evaluation, and Continuous Improvement

Ongoing monitoring and evaluation are vital to maintain the safety and effectiveness of RL agents in production. Key performance indicators (KPIs) should be defined to track the agent’s behavior, efficiency, and impact on operational goals. Automated alerting systems can detect anomalies or performance degradation, triggering investigations or retraining.
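A simple automated check of this kind is sketched below: it compares the rolling mean of a KPI (for example, episode reward or throughput) against a baseline measured during validation and raises an alert when it falls by more than a set fraction. The window size, tolerance and baseline are hypothetical, and the relative-drop rule assumes a positive KPI where higher is better.

```python
from collections import deque

class RewardDriftMonitor:
    """Alert when the rolling mean of a KPI drops below baseline * (1 - tolerance)."""

    def __init__(self, baseline, tolerance=0.1, window=50):
        self.baseline = baseline
        self.tolerance = tolerance            # allowed relative drop, e.g. 0.1 = 10%
        self.values = deque(maxlen=window)

    def update(self, kpi):
        self.values.append(kpi)
        if len(self.values) < self.values.maxlen:
            return False                      # not enough data yet
        rolling_mean = sum(self.values) / len(self.values)
        return rolling_mean < self.baseline * (1.0 - self.tolerance)

monitor = RewardDriftMonitor(baseline=100.0, tolerance=0.1, window=5)
alerts = [monitor.update(v) for v in [100.0] * 5 + [80.0] * 5]
print(alerts)
```

The rolling window trades detection speed for fewer false alarms; an alert would typically trigger an investigation or a retraining job rather than an automatic policy swap.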

Continuous improvement involves incorporating feedback from real-world operations into the training process. This may include updating simulation models, refining reward functions, or integrating new data sources. Collaboration between data scientists, engineers, and domain experts ensures that RL systems evolve in alignment with business objectives and regulatory requirements.

Future Trends and Opportunities

Advances in RL Algorithms for Industry

The field of reinforcement learning is rapidly evolving, with new algorithms designed to address the unique challenges of industrial applications. Techniques such as safe RL, which incorporates safety constraints directly into the learning process, are gaining traction. Meta-learning and transfer learning enable agents to adapt quickly to new tasks or environments by leveraging prior knowledge.

Multi-agent RL, where multiple agents learn and interact within a shared environment, opens opportunities for optimizing complex systems like supply chains or smart grids. These advances promise to make RL more efficient, reliable, and applicable to a broader range of industrial problems.

The Role of AI and IoT Integration

The integration of RL with other Artificial Intelligence (AI) techniques and the Internet of Things (IoT) is creating new possibilities for smart industrial systems. IoT devices provide real-time data streams that RL agents can use to make informed decisions, enabling dynamic optimization of processes and resources.

AI techniques such as computer vision and natural language processing complement RL by enhancing perception and interaction capabilities. Together, these technologies support autonomous systems that can monitor, analyze, and control industrial operations with minimal human intervention, driving efficiency and innovation.

Conclusion

Key Takeaways

Reinforcement Learning (RL) is rapidly becoming a transformative technology in industrial applications. Its ability to learn optimal decision-making policies through interaction with complex and dynamic environments offers significant advantages over traditional control and optimization methods. RL enables industries to improve efficiency, reduce costs, and enhance automation in areas such as predictive maintenance, process control, energy management, robotics, and supply chain optimization.

However, deploying RL in industrial settings also presents challenges, including data requirements, safety concerns, interpretability, and integration with existing systems. Addressing these challenges requires careful planning, collaboration between domain experts and data scientists, and the use of advanced simulation and monitoring tools.

Recommendations for Industrial Adoption

For organizations considering RL adoption, it is essential to start with well-defined problems where RL’s strengths can be leveraged effectively. Investing in high-quality data collection and simulation environments is critical to safe and efficient training. Collaboration across multidisciplinary teams ensures that RL solutions align with operational goals and safety standards.

Implementing robust monitoring, evaluation, and continuous improvement processes helps maintain RL system performance and reliability over time. Finally, staying informed about advances in RL algorithms, tools, and best practices will enable organizations to capitalize on emerging opportunities and maintain a competitive edge.
