Introduction: The Changing Face of Code Review

Code review has always been a cornerstone of software development. It’s the process where developers scrutinize each other’s code, looking for bugs, vulnerabilities, style inconsistencies, and opportunities for improvement. A thorough review can catch errors early, prevent costly mistakes, and ensure that the codebase remains clean, maintainable, and secure.

However, traditional code review is not without its challenges. It can be time-consuming, subjective, and prone to human error. Developers are often overloaded with code to review, leading to fatigue and missed issues. Style debates can consume valuable time, and subtle bugs can slip through the cracks.

That’s where AI agents come in. These tools bring automation, consistency, and deeper insight to code review. They can analyze code at scale, flag potential problems, and suggest improvements far faster than a manual pass. They can enforce coding standards, detect security vulnerabilities, and even estimate the likelihood of bugs based on historical data.

The integration of AI agents into code review is not about replacing human reviewers—it’s about augmenting their abilities. By automating routine checks and surfacing potential issues, AI agents free up developers to focus on the more complex, creative, and strategic aspects of code review. This leads to faster development cycles, higher code quality, and more secure applications.

As we explore the world of AI-powered code review, you’ll discover how these technologies are reshaping the software development landscape—and how you can harness their power to build better, more reliable, and more secure code. The future of code review is here, and it’s driven by AI.

What Are AI Agents? Key Concepts for Developers

AI agents are autonomous software components designed to perceive their environment, make decisions, and take actions to achieve specific goals. In the context of software development, these agents are increasingly being used to support, automate, and enhance various stages of the development lifecycle—including code review, testing, deployment, and monitoring.

At their core, AI agents combine several technologies: machine learning, natural language processing, pattern recognition, and rule-based systems. Unlike traditional scripts or static automation tools, AI agents can learn from data, adapt to new situations, and improve their performance over time. This makes them especially valuable in dynamic environments like modern software projects, where requirements and codebases are constantly evolving.

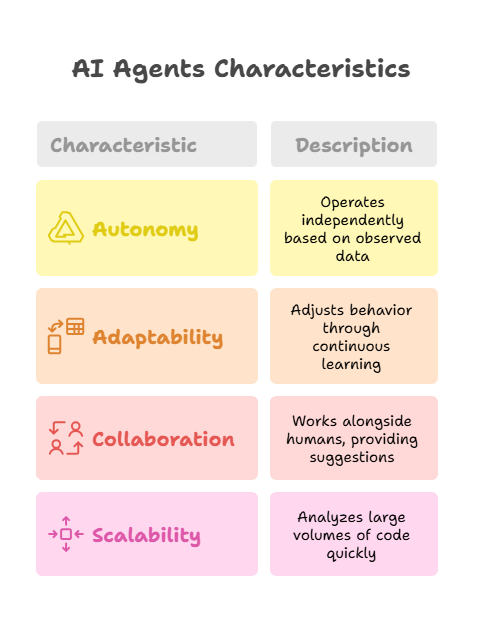

For developers, it’s important to understand a few key characteristics of AI agents:

Autonomy: AI agents operate independently, making decisions based on the data and context they observe. For example, an agent might automatically flag a risky code change or suggest a refactor without explicit human instruction.

Adaptability: Through continuous learning, AI agents can adjust their behavior as they encounter new patterns or feedback. This means their recommendations become more relevant and accurate over time.

Collaboration: AI agents are designed to work alongside humans, not replace them. They provide suggestions, automate repetitive checks, and surface insights, but the final decisions remain in the hands of developers.

Scalability: AI agents can analyze large volumes of code and data much faster than humans, making them ideal for projects with extensive codebases or distributed teams.

In the review process, AI agents might use static code analysis, machine learning models trained on historical bugs, or natural language processing to understand code comments and documentation. They can enforce coding standards, detect security vulnerabilities, and even predict the likelihood of defects before code is merged.

By understanding these key concepts, developers can better leverage AI agents as powerful allies in building high-quality, secure, and maintainable software. The next sections will explore how these agents are applied in practice and the tangible benefits they bring to the code review process.

The Traditional Review Process: Challenges and Limitations

Code review has long been a standard practice in software development teams. Typically, when a developer submits a pull request, peers are assigned to review the changes, check for bugs, ensure adherence to coding standards, and provide feedback. While this process is essential for maintaining code quality and fostering team collaboration, it comes with several inherent challenges and limitations.

Time-Consuming and Resource-Intensive

Manual code review can be slow, especially in large projects or distributed teams. Reviewers often juggle multiple responsibilities, leading to delays in feedback and longer development cycles. When deadlines are tight, reviews may be rushed or skipped altogether, increasing the risk of bugs slipping into production.

Subjectivity and Inconsistency

Human reviewers bring their own experiences, preferences, and biases to the process. This can result in inconsistent feedback, style debates, or overlooked issues. What one reviewer considers a minor problem, another might see as critical. Without clear guidelines and automated checks, maintaining consistency across reviews is difficult.

Cognitive Overload and Fatigue

Reviewing code—especially large or complex changes—requires intense focus and attention to detail. Over time, cognitive fatigue can set in, making it easier to miss subtle bugs or security vulnerabilities. In fast-paced environments, reviewers may only skim through code, further reducing the effectiveness of the process.

Limited Scope and Scalability

Manual reviews are inherently limited by human capacity. As codebases grow and teams scale, it becomes increasingly challenging to thoroughly review every change. Some issues, such as security vulnerabilities or performance bottlenecks, may require specialized knowledge that not all reviewers possess.

Communication Barriers

In distributed or cross-functional teams, communication gaps can arise. Misunderstandings about code intent, unclear feedback, or language barriers can slow down the review process and lead to frustration on both sides.

Summary

While traditional code review is invaluable for knowledge sharing and quality assurance, it is not without its flaws. These challenges highlight the need for tools and approaches that can automate routine checks, provide consistent feedback, and scale with the demands of modern software development. This is where AI agents are beginning to make a significant impact, augmenting human reviewers and transforming the review process for the better.

How AI Agents Support Code Review

AI agents are transforming the code review process by bringing automation, intelligence, and consistency to a traditionally manual and subjective task. Their role is not to replace human reviewers, but to augment their capabilities—making reviews faster, more thorough, and less prone to error.

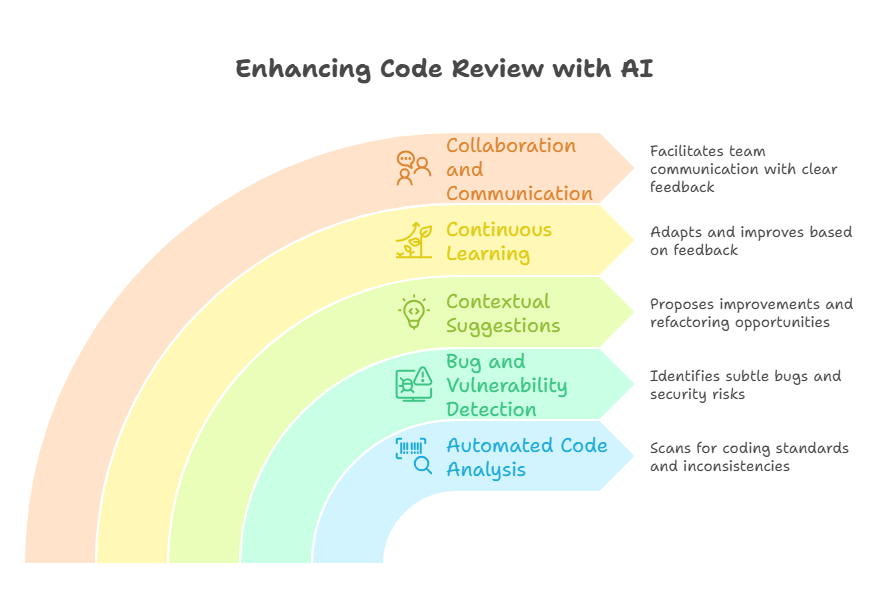

Automated Code Analysis and Style Enforcement

AI agents can automatically scan pull requests for adherence to coding standards, detect code smells, and flag stylistic inconsistencies. Unlike static linters, modern AI agents learn from historical code reviews and adapt their feedback to the evolving style and best practices of a given team or project. This ensures that code quality remains high and that reviews are consistent, regardless of who is on duty.

Bug and Vulnerability Detection

By leveraging machine learning models trained on vast datasets of code and known vulnerabilities, AI agents can identify subtle bugs and security risks that might escape human eyes. They can spot patterns associated with common errors, such as off-by-one mistakes, resource leaks, or insecure API usage, and alert reviewers before the code is merged.

Contextual Suggestions and Refactoring

AI agents don’t just point out problems—they can also suggest concrete improvements. For example, they might recommend more efficient algorithms, highlight redundant code, or propose refactoring opportunities. Some advanced agents can even generate code snippets or automated fixes, streamlining the review process and reducing the burden on developers.

Continuous Learning and Adaptation

The best AI agents improve over time. By learning from past reviews, accepted suggestions, and team feedback, they become more attuned to the specific needs and preferences of a project. This means their recommendations get smarter and more relevant with each iteration.

Collaboration and Communication

AI agents can facilitate better communication between team members by providing clear, objective feedback and documentation. They can summarize changes, highlight areas of concern, and even help prioritize review tasks based on risk or complexity.

Summary

By supporting code review with automation, intelligence, and adaptability, AI agents help teams catch more issues early, maintain higher code quality, and accelerate the path from pull request to deployment. The result is a more efficient, reliable, and enjoyable development process—where humans and AI work together to build better software.

Automating Code Quality Checks with AI

One of the most immediate benefits of AI agents in code review is their ability to automate quality checks that would otherwise require manual inspection. These agents can analyze code structure, detect anti-patterns, enforce coding standards, and even predict potential maintenance issues, all in real time as part of the review process.

Code Quality Metrics and Analysis

AI agents can automatically calculate and track various code quality metrics such as cyclomatic complexity, code coverage, maintainability index, and technical debt. They can flag functions or classes that exceed complexity thresholds, identify areas with insufficient test coverage, and suggest refactoring opportunities.

Pattern Recognition and Anti-Pattern Detection

By training on large codebases, AI agents learn to recognize both good and bad coding patterns. They can detect common anti-patterns like god objects, spaghetti code, or inappropriate use of design patterns, providing specific recommendations for improvement.

Automated Testing and Validation

AI agents can generate test cases, validate existing tests, and even predict which parts of the code are most likely to contain bugs based on historical data and code characteristics.

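Here’s a practical Python example of a code quality checker. It is rule-based, relying on the standard ast and re modules rather than a trained model, and represents the kind of analysis layer an AI agent can build on: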

```python
import ast
import json
import os
import re
import tempfile
from collections import defaultdict
from dataclasses import dataclass
from typing import Any, Dict, List

# Sort rank for severities, most severe first
SEVERITY_ORDER = {'critical': 0, 'high': 1, 'medium': 2, 'low': 3}


@dataclass
class QualityIssue:
    """Represents a code quality issue found by AI analysis"""
    severity: str  # 'low', 'medium', 'high', 'critical'
    category: str  # 'complexity', 'style', 'security', 'performance'
    message: str
    line_number: int
    suggestion: str = ""
    confidence: float = 0.0


class AICodeQualityAgent:
    """
    An AI agent that performs automated code quality checks
    """

    def __init__(self):
        self.complexity_threshold = 10
        self.line_length_limit = 100
        self.function_length_limit = 50
        self.security_patterns = self._load_security_patterns()
        self.quality_history = defaultdict(list)

    def _load_security_patterns(self) -> Dict[str, str]:
        """Load common security vulnerability patterns"""
        return {
            # \b keeps names such as literal_eval() from matching
            r'\beval\s*\(': 'Avoid using eval() - potential code injection vulnerability',
            r'\bexec\s*\(': 'Avoid using exec() - potential code execution vulnerability',
            r'\binput\s*\([^)]*\)': 'Consider using safer input validation methods',
            r'pickle\.loads?\s*\(': 'Pickle can execute arbitrary code - consider safer serialization',
            r'subprocess\.call\s*\([^)]*shell\s*=\s*True': 'Shell=True can be dangerous - validate input carefully'
        }

    def analyze_code_file(self, file_path: str) -> List[QualityIssue]:
        """Analyze a Python file for quality issues"""
        issues = []
        try:
            with open(file_path, 'r', encoding='utf-8') as file:
                content = file.read()
            lines = content.split('\n')

            # Parse AST for structural analysis
            tree = ast.parse(content)

            # Perform various quality checks
            issues.extend(self._check_complexity(tree, lines))
            issues.extend(self._check_style_issues(lines))
            issues.extend(self._check_security_patterns(content, lines))
            issues.extend(self._check_function_length(tree, lines))
            issues.extend(self._check_naming_conventions(tree))
        except Exception as e:
            # Unreadable or syntactically invalid files are reported as one issue
            issues.append(QualityIssue(
                severity='high',
                category='parsing',
                message=f'Failed to parse file: {e}',
                line_number=1
            ))
        return issues

    def _check_complexity(self, tree: ast.AST, lines: List[str]) -> List[QualityIssue]:
        """Check cyclomatic complexity of functions"""
        issues = []
        threshold = self.complexity_threshold

        def calculate_complexity(node: ast.AST) -> int:
            """Simple complexity calculation"""
            complexity = 1  # Base complexity
            for child in ast.walk(node):
                if isinstance(child, (ast.If, ast.While, ast.For, ast.Try, ast.With)):
                    complexity += 1
                elif isinstance(child, ast.BoolOp):
                    complexity += len(child.values) - 1
            return complexity

        class ComplexityVisitor(ast.NodeVisitor):
            def visit_FunctionDef(self, node):
                complexity = calculate_complexity(node)
                if complexity > threshold:
                    confidence = min(1.0, (complexity - threshold) / 10)
                    issues.append(QualityIssue(
                        severity='medium' if complexity < 15 else 'high',
                        category='complexity',
                        message=f'Function "{node.name}" has high complexity ({complexity})',
                        line_number=node.lineno,
                        suggestion='Consider breaking down this function into smaller, more focused functions',
                        confidence=confidence
                    ))
                self.generic_visit(node)

        ComplexityVisitor().visit(tree)
        return issues

    def _check_style_issues(self, lines: List[str]) -> List[QualityIssue]:
        """Check for style and formatting issues"""
        issues = []
        for i, line in enumerate(lines, 1):
            # Check line length
            if len(line) > self.line_length_limit:
                issues.append(QualityIssue(
                    severity='low',
                    category='style',
                    message=f'Line too long ({len(line)} > {self.line_length_limit} characters)',
                    line_number=i,
                    suggestion='Consider breaking long lines for better readability',
                    confidence=0.9
                ))

            # Check for trailing whitespace
            if line.rstrip() != line and line.strip():
                issues.append(QualityIssue(
                    severity='low',
                    category='style',
                    message='Trailing whitespace detected',
                    line_number=i,
                    suggestion='Remove trailing whitespace',
                    confidence=1.0
                ))

            # Check for TODO/FIXME comments
            if re.search(r'#\s*(TODO|FIXME|HACK)', line, re.IGNORECASE):
                issues.append(QualityIssue(
                    severity='low',
                    category='maintenance',
                    message='TODO/FIXME comment found',
                    line_number=i,
                    suggestion='Consider creating a ticket to track this work',
                    confidence=0.8
                ))
        return issues

    def _check_security_patterns(self, content: str, lines: List[str]) -> List[QualityIssue]:
        """Check for potential security vulnerabilities"""
        issues = []
        for pattern, message in self.security_patterns.items():
            for match in re.finditer(pattern, content, re.MULTILINE):
                # Convert the match offset into a 1-based line number
                line_num = content[:match.start()].count('\n') + 1
                issues.append(QualityIssue(
                    severity='high',
                    category='security',
                    message=f'Potential security issue: {message}',
                    line_number=line_num,
                    suggestion='Review this code for security implications',
                    confidence=0.7
                ))
        return issues

    def _check_function_length(self, tree: ast.AST, lines: List[str]) -> List[QualityIssue]:
        """Check for overly long functions"""
        issues = []
        limit = self.function_length_limit

        class FunctionLengthVisitor(ast.NodeVisitor):
            def visit_FunctionDef(self, node):
                # end_lineno is only available on Python 3.8+
                if getattr(node, 'end_lineno', None):
                    length = node.end_lineno - node.lineno + 1
                    if length > limit:
                        issues.append(QualityIssue(
                            severity='medium',
                            category='complexity',
                            message=f'Function "{node.name}" is too long ({length} lines)',
                            line_number=node.lineno,
                            suggestion='Consider breaking this function into smaller functions',
                            confidence=0.8
                        ))
                self.generic_visit(node)

        FunctionLengthVisitor().visit(tree)
        return issues

    def _check_naming_conventions(self, tree: ast.AST) -> List[QualityIssue]:
        """Check naming conventions"""
        issues = []

        class NamingVisitor(ast.NodeVisitor):
            def visit_FunctionDef(self, node):
                if not re.match(r'^[a-z_][a-z0-9_]*$', node.name):
                    issues.append(QualityIssue(
                        severity='low',
                        category='style',
                        message=f'Function name "{node.name}" doesn\'t follow snake_case convention',
                        line_number=node.lineno,
                        suggestion='Use snake_case for function names',
                        confidence=0.9
                    ))
                self.generic_visit(node)

            def visit_ClassDef(self, node):
                if not re.match(r'^[A-Z][a-zA-Z0-9]*$', node.name):
                    issues.append(QualityIssue(
                        severity='low',
                        category='style',
                        message=f'Class name "{node.name}" doesn\'t follow PascalCase convention',
                        line_number=node.lineno,
                        suggestion='Use PascalCase for class names',
                        confidence=0.9
                    ))
                self.generic_visit(node)

        NamingVisitor().visit(tree)
        return issues

    def generate_quality_report(self, issues: List[QualityIssue]) -> Dict[str, Any]:
        """Generate a comprehensive quality report"""
        if not issues:
            return {
                'overall_score': 100,
                'total_issues': 0,
                'issues_by_severity': {},
                'issues_by_category': {},
                'recommendations': ['Code quality looks excellent!'],
                'detailed_issues': []
            }

        # Calculate metrics
        severity_counts = defaultdict(int)
        category_counts = defaultdict(int)
        for issue in issues:
            severity_counts[issue.severity] += 1
            category_counts[issue.category] += 1

        # Calculate overall score (simple scoring system)
        score = 100
        score -= severity_counts['critical'] * 20
        score -= severity_counts['high'] * 10
        score -= severity_counts['medium'] * 5
        score -= severity_counts['low'] * 1
        score = max(0, score)

        # Generate recommendations
        recommendations = []
        if severity_counts['critical'] > 0:
            recommendations.append('Address critical issues immediately before deployment')
        if severity_counts['high'] > 0:
            recommendations.append('Review and fix high-severity issues')
        if category_counts['complexity'] > 3:
            recommendations.append('Consider refactoring complex functions')
        if category_counts['security'] > 0:
            recommendations.append('Review security-related issues carefully')

        return {
            'overall_score': score,
            'total_issues': len(issues),
            'issues_by_severity': dict(severity_counts),
            'issues_by_category': dict(category_counts),
            'recommendations': recommendations,
            'detailed_issues': [
                {
                    'severity': issue.severity,
                    'category': issue.category,
                    'message': issue.message,
                    'line': issue.line_number,
                    'suggestion': issue.suggestion,
                    'confidence': issue.confidence
                }
                # Most severe first, then by line (a plain string sort would be alphabetical)
                for issue in sorted(
                    issues,
                    key=lambda x: (SEVERITY_ORDER.get(x.severity, len(SEVERITY_ORDER)), x.line_number)
                )
            ]
        }

    def analyze_pull_request(self, file_paths: List[str]) -> Dict[str, Any]:
        """Analyze multiple files in a pull request"""
        all_issues = []
        file_reports = {}
        for file_path in file_paths:
            if file_path.endswith('.py'):
                print(f"Analyzing {file_path}...")
                issues = self.analyze_code_file(file_path)
                all_issues.extend(issues)
                file_reports[file_path] = self.generate_quality_report(issues)

        # Generate overall report
        overall_report = self.generate_quality_report(all_issues)
        overall_report['file_reports'] = file_reports
        return overall_report


# Example usage and demonstration
def demonstrate_ai_code_quality():
    """Demonstrate the AI code quality agent"""
    # Create a sample Python file with various quality issues
    sample_code = '''
import os
import pickle

def very_long_function_name_that_violates_conventions():
    # TODO: This function needs refactoring
    x = input("Enter something: ")  # Security issue
    if x == "admin":
        if len(x) > 0:
            if x.isalpha():
                if x.lower() == "admin":
                    if True:  # Unnecessary complexity
                        eval(x)  # Security vulnerability
                        return "admin access granted"
'''
    # The original listing is cut off at this point. The lines below are a
    # minimal assumed completion: write the sample to a temporary file,
    # analyze it, and print the report.
    with tempfile.NamedTemporaryFile('w', suffix='.py', delete=False, encoding='utf-8') as handle:
        handle.write(sample_code)
        sample_path = handle.name
    try:
        report = AICodeQualityAgent().analyze_pull_request([sample_path])
        print(json.dumps(report, indent=2))
    finally:
        os.remove(sample_path)


if __name__ == '__main__':
    demonstrate_ai_code_quality()
```

Detecting Bugs and Vulnerabilities Before Deployment

One of the most valuable contributions of AI agents in the code review process is their ability to detect bugs and security vulnerabilities before code reaches production. By leveraging advanced analysis techniques and machine learning, these agents help teams catch issues early—reducing the risk of costly errors, downtime, or security breaches after deployment.

Static and Dynamic Analysis

AI agents use static code analysis to examine source code without executing it, identifying common bugs such as null pointer dereferences, uninitialized variables, or off-by-one errors. They can also flag risky patterns, like improper input validation or insecure use of external libraries. Some agents go further, performing dynamic analysis by running code in controlled environments to uncover runtime issues that static checks might miss.
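To make the static half of this concrete, here is a minimal sketch that inspects source code without running it, using Python's standard ast module. The function name `find_static_issues` and the two checks chosen (mutable default arguments and bare `except:` clauses) are illustrative assumptions, not the rule set of any particular tool.

```python
import ast
from typing import List, Tuple


def find_static_issues(source: str) -> List[Tuple[int, str]]:
    """Return (line, message) pairs found without executing the code."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            # Mutable defaults are created once and shared between calls.
            defaults = node.args.defaults + [d for d in node.args.kw_defaults if d is not None]
            for default in defaults:
                if isinstance(default, (ast.List, ast.Dict, ast.Set)):
                    findings.append((default.lineno, f"mutable default argument in '{node.name}'"))
        elif isinstance(node, ast.ExceptHandler) and node.type is None:
            # A bare 'except:' also swallows KeyboardInterrupt and SystemExit.
            findings.append((node.lineno, "bare 'except:' clause"))
    return sorted(findings)


sample = """
def add_item(item, bucket=[]):
    try:
        bucket.append(item)
    except:
        pass
    return bucket
"""

for line, message in find_static_issues(sample):
    print(f"line {line}: {message}")
```

Because the sample is only parsed, never executed, the same approach is safe to run on untrusted pull requests.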

Machine Learning for Bug Prediction

Modern AI agents are trained on vast datasets of historical code changes and bug reports. By learning from patterns associated with past defects, they can predict which new code changes are most likely to introduce bugs. This predictive capability allows teams to focus their attention on high-risk areas and prioritize reviews accordingly.
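The idea of ranking changes by predicted risk can be sketched with a small logistic score. Everything specific here is an assumption made for illustration: the three features, the `ChangeStats` and `defect_risk` names, and especially the hand-picked weights, which stand in for values a real model would learn from a project's history.

```python
import math
from dataclasses import dataclass


@dataclass
class ChangeStats:
    lines_changed: int   # size of the diff
    files_touched: int   # how widely the change spreads
    past_bug_fixes: int  # bug-fix commits previously made to the same files


# Illustrative weights only; a trained model would replace these.
WEIGHTS = {"lines_changed": 0.004, "files_touched": 0.15, "past_bug_fixes": 0.3}
BIAS = -3.0


def defect_risk(change: ChangeStats) -> float:
    """Logistic score between 0 and 1; higher means review more carefully."""
    z = BIAS + sum(weight * getattr(change, name) for name, weight in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


changes = {
    "fix typo in README": ChangeStats(lines_changed=2, files_touched=1, past_bug_fixes=0),
    "rewrite payment retry logic": ChangeStats(lines_changed=600, files_touched=9, past_bug_fixes=6),
}

# Review queue ordered from highest to lowest predicted risk
for title, stats in sorted(changes.items(), key=lambda kv: defect_risk(kv[1]), reverse=True):
    print(f"{defect_risk(stats):.2f}  {title}")
```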

Security Vulnerability Detection

Security is a top concern in any deployment pipeline. AI agents can automatically scan for known vulnerability patterns, such as the use of unsafe functions (eval, exec, or insecure deserialization), hardcoded credentials, or improper error handling. They can also cross-reference code with public vulnerability databases (like CVE) to flag dependencies with known security issues.
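As one example of pattern-based scanning, the sketch below looks for hardcoded credentials. The regular expression and the `find_hardcoded_credentials` helper are assumptions for illustration; production scanners use far larger rule sets and entropy checks, and a simple pattern like this will produce both false positives and misses.

```python
import re
from typing import List, Tuple

# Assumed heuristic: a secret-looking name assigned a quoted literal of 4+ characters.
CREDENTIAL_RE = re.compile(
    r"""\b(\w*(?:password|passwd|secret|api_key|token)\w*)\s*=\s*(['"])[^'"]{4,}\2""",
    re.IGNORECASE,
)


def find_hardcoded_credentials(source: str) -> List[Tuple[int, str]]:
    """Return (line number, variable name) for each suspicious assignment."""
    findings = []
    for number, line in enumerate(source.splitlines(), start=1):
        if line.lstrip().startswith("#"):
            continue  # ignore full-line comments
        match = CREDENTIAL_RE.search(line)
        if match:
            findings.append((number, match.group(1)))
    return findings


sample = '''
DB_PASSWORD = "hunter2-prod"
api_key = os.environ["API_KEY"]
timeout = 30
'''

for number, name in find_hardcoded_credentials(sample):
    print(f"line {number}: '{name}' looks like a hardcoded credential")
```

Reading the value from the environment, as the second sample line does, is not flagged because no string literal is assigned directly.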

Automated Feedback and Remediation Suggestions

Beyond just flagging problems, advanced AI agents provide actionable feedback and even suggest code changes to remediate vulnerabilities. For example, if an agent detects the use of an insecure function, it might recommend a safer alternative or provide a code snippet for secure input validation.

Continuous Monitoring and Integration

AI-powered bug and vulnerability detection can be integrated directly into CI/CD pipelines, ensuring that every pull request is automatically checked before merging. This continuous monitoring helps maintain a high standard of code quality and security throughout the development lifecycle.

Summary

By detecting bugs and vulnerabilities before deployment, AI agents act as an intelligent safety net for development teams. They not only reduce the risk of production incidents but also free up human reviewers to focus on more complex, creative, and architectural aspects of code review. The result is more robust, secure, and reliable software—delivered with greater confidence and speed.

AI Agents in Continuous Integration and Continuous Deployment (CI/CD)

The integration of AI agents into CI/CD pipelines is transforming the way modern software is built, tested, and delivered. By automating critical quality and security checks, AI agents help teams maintain high standards while accelerating the path from code submission to deployment.

Automated Quality Gates

AI agents can act as intelligent quality gates within CI/CD workflows. Every time a developer submits a pull request, the agent automatically analyzes the code for style violations, complexity, potential bugs, and security vulnerabilities. If issues are detected, the agent can block the merge, provide detailed feedback, or even suggest automated fixes—ensuring that only high-quality code progresses through the pipeline.
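A quality gate of this kind reduces to comparing findings against a policy and turning the result into a pass or fail signal for the pipeline. The sketch below assumes findings arrive as dictionaries with a `severity` key; the `GATE_POLICY` limits and the `evaluate_gate` name are hypothetical.

```python
from typing import Dict, List

# Assumed policy: maximum number of findings tolerated per severity level.
GATE_POLICY = {"critical": 0, "high": 0, "medium": 5}


def evaluate_gate(findings: List[Dict[str, str]], policy: Dict[str, int]) -> List[str]:
    """Return the reasons the gate fails; an empty list means it passes."""
    counts: Dict[str, int] = {}
    for finding in findings:
        counts[finding["severity"]] = counts.get(finding["severity"], 0) + 1
    return [
        f"{counts[severity]} {severity} finding(s), limit is {limit}"
        for severity, limit in policy.items()
        if counts.get(severity, 0) > limit
    ]


findings = [
    {"severity": "high", "message": "eval() on user input"},
    {"severity": "low", "message": "line too long"},
]

failures = evaluate_gate(findings, GATE_POLICY)
exit_code = 1 if failures else 0  # a CI step would pass this to sys.exit()
print("BLOCKED" if failures else "PASSED", failures)
```

Most CI systems treat a non-zero exit code as a failed step, which is what blocks the merge.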

Smart Test Selection and Prioritization

Running all tests for every code change can be time-consuming and resource-intensive. AI agents can analyze the nature of code changes and historical test results to predict which tests are most relevant or likely to fail. This allows the CI system to prioritize or selectively run tests, speeding up feedback loops and reducing unnecessary computation.
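One simple way to approximate this is to rank tests by how often they failed in earlier builds that touched the same files. The sketch below uses plain co-occurrence counts rather than a trained model, and the `rank_tests` function and the shape of the `history` records are assumptions made for illustration.

```python
from collections import Counter
from typing import Dict, List, Set


def rank_tests(changed_files: Set[str], history: List[Dict], top_n: int = 2) -> List[str]:
    """Rank tests by past failures in builds that touched the same files."""
    scores: Counter = Counter()
    for build in history:
        overlap = changed_files & set(build["changed_files"])
        for test in build["failed_tests"]:
            scores[test] += len(overlap)
    # Tests with no overlap at all are left out of the priority list
    return [test for test, score in scores.most_common(top_n) if score > 0]


# Hypothetical build history
history = [
    {"changed_files": ["billing/invoice.py"], "failed_tests": ["test_invoice_totals"]},
    {
        "changed_files": ["billing/invoice.py", "billing/tax.py"],
        "failed_tests": ["test_invoice_totals", "test_tax_rounding"],
    },
    {"changed_files": ["auth/login.py"], "failed_tests": ["test_login_redirect"]},
]

print(rank_tests({"billing/invoice.py"}, history))
```

A pipeline could run the ranked tests first for fast feedback and still run the full suite before release.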

Anomaly Detection and Rollback Automation

Once code is deployed, AI agents can monitor application behavior in real time, using anomaly detection algorithms to spot unusual patterns that might indicate a bug or performance regression. If a critical issue is detected, the agent can trigger automated rollbacks or alert the team, minimizing downtime and user impact.
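The simplest form of anomaly detection is a z-score test against a recent baseline. The sketch below is one such approach under stated assumptions: the `is_anomalous` helper, the threshold of three standard deviations, and the sample error rates are all illustrative, and real monitoring systems typically account for seasonality and trends as well.

```python
from statistics import mean, stdev
from typing import List


def is_anomalous(baseline: List[float], observed: float, threshold: float = 3.0) -> bool:
    """Flag a value more than `threshold` standard deviations from the baseline mean."""
    spread = stdev(baseline)
    if spread == 0:
        # A perfectly flat baseline: any different value is a deviation
        return observed != baseline[0]
    return abs(observed - mean(baseline)) / spread > threshold


# Hypothetical error rates (%) observed before the deployment
baseline_error_rates = [0.8, 1.1, 0.9, 1.0, 1.2, 0.9, 1.0]

for rate in (1.1, 4.5):
    action = "roll back and alert" if is_anomalous(baseline_error_rates, rate) else "keep serving"
    print(f"error rate {rate}% -> {action}")
```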

Dependency and Security Management

AI agents can continuously scan project dependencies for known vulnerabilities, outdated packages, or license compliance issues. When a risky dependency is found, the agent can recommend or even automate safe upgrades, keeping the application secure and up to date.
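At its core, dependency scanning compares pinned versions against advisory data. The sketch below assumes `name==version` pins with plain dotted versions; the package names and the `ADVISORIES` table are invented for illustration, whereas a real scanner would pull advisories from a vulnerability database and handle the full version syntax.

```python
from typing import Dict, List, Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """Parse a simple dotted version such as '2.4.1' (no pre-release tags)."""
    return tuple(int(part) for part in text.split("."))


# Hypothetical advisory data: package name -> first version containing the fix.
ADVISORIES: Dict[str, str] = {"examplelib": "2.4.1", "othertool": "1.0.3"}


def find_vulnerable(requirements: List[str], advisories: Dict[str, str]) -> List[str]:
    """Report pinned requirements that are older than the fixed version."""
    reports = []
    for line in requirements:
        name, _, pinned = line.strip().partition("==")
        fixed = advisories.get(name.lower())
        if pinned and fixed and parse_version(pinned) < parse_version(fixed):
            reports.append(f"{name} {pinned} -> upgrade to {fixed} or later")
    return reports


requirements = ["examplelib==2.3.0", "othertool==1.0.3", "unlisted==0.1"]
for report in find_vulnerable(requirements, ADVISORIES):
    print(report)
```

Comparing versions as integer tuples avoids the string-comparison trap where "2.10.0" sorts before "2.9.0".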

Continuous Learning and Pipeline Optimization

As AI agents gather more data from each build and deployment, they learn which types of changes are most likely to cause problems. Over time, this allows them to optimize the pipeline—reducing false positives, improving test coverage, and streamlining the overall process.

Summary

By embedding AI agents into CI/CD pipelines, development teams gain a powerful ally that automates quality assurance, accelerates delivery, and reduces the risk of production issues. This not only leads to faster, safer releases but also frees up developers to focus on innovation and problem-solving, rather than repetitive manual checks. The future of DevOps is intelligent, adaptive, and AI-driven.

Best Practices for Integrating AI Agents into Developer Workflows

Successfully integrating AI agents into developer workflows requires more than just plugging in a new tool. To truly benefit from AI-driven automation and insights, teams should follow a set of best practices that ensure smooth adoption, maximize value, and maintain trust in the development process.

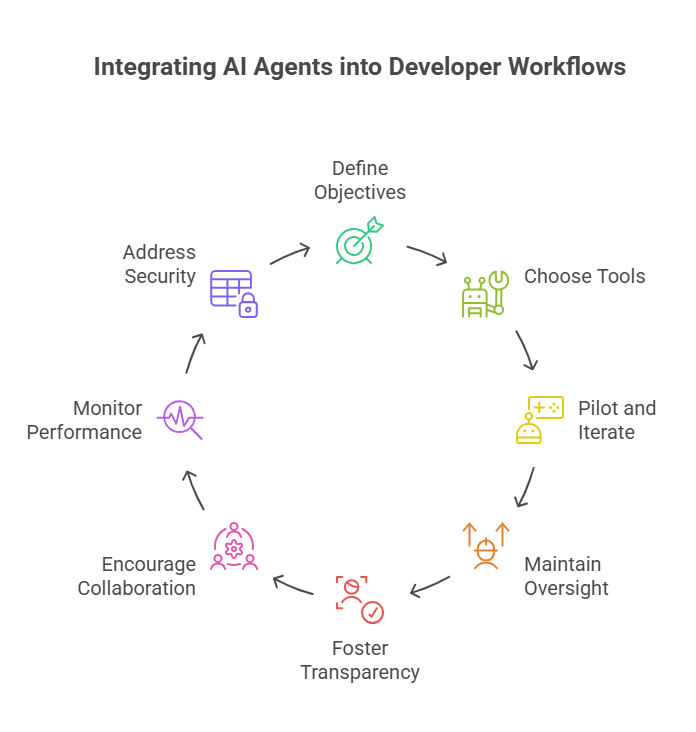

Start with Clear Objectives

Before introducing AI agents, define what you want to achieve. Are you aiming to improve code quality, speed up reviews, catch security issues, or automate repetitive tasks? Clear goals help you choose the right tools and measure their impact.

Choose the Right Tools for Your Stack

Select AI agents and platforms that integrate seamlessly with your existing development environment, version control system, and CI/CD pipeline. Look for solutions that support your programming languages, frameworks, and preferred workflows.

Pilot and Iterate

Begin with a pilot project or a subset of your codebase. Gather feedback from developers, monitor the agent’s recommendations, and adjust configurations as needed. Iterative adoption helps identify pain points early and builds team confidence.

Maintain Human Oversight

AI agents are powerful, but they’re not infallible. Always keep a human in the loop—especially for critical decisions, code merges, or security-related changes. Encourage developers to review, validate, and, if necessary, override AI suggestions.

Foster Transparency and Explainability

Choose AI tools that provide clear explanations for their recommendations. When developers understand why an agent flagged an issue or suggested a change, they’re more likely to trust and adopt its feedback.

Encourage Collaboration and Feedback

Treat AI agents as team members that learn and improve over time. Encourage developers to provide feedback on the agent’s performance, flag false positives, and suggest improvements. Many modern tools allow for this kind of interactive learning.

Monitor Performance and Impact

Track key metrics such as review times, bug rates, and deployment frequency before and after introducing AI agents. Use this data to assess the agent’s effectiveness and identify areas for further optimization.

Address Security and Privacy Concerns

Ensure that any code or data shared with AI agents is handled securely and in compliance with your organization’s policies. Be mindful of privacy, especially when using cloud-based or third-party solutions.

Summary

Integrating AI agents into developer workflows is a journey, not a one-time event. By following best practices—setting clear goals, maintaining oversight, fostering transparency, and encouraging collaboration—teams can harness the full potential of AI to build better, more reliable software, while keeping developers engaged and empowered throughout the process.

Real-World Examples: AI-Powered Review in Action

The adoption of AI agents in code review is no longer just a theoretical concept—it’s a practical reality in many organizations, from startups to tech giants. These real-world examples illustrate how AI-powered review is transforming software development workflows and delivering tangible benefits.

GitHub Copilot and Pull Request Suggestions

GitHub Copilot, powered by OpenAI, is one of the most recognizable AI tools for developers. While it’s best known for code completion, it also assists in code review by suggesting improvements, flagging potential issues, and even generating documentation. In some organizations, Copilot is integrated directly into the pull request process, where it can highlight risky changes or suggest alternative implementations before human reviewers step in.

DeepCode and Static Analysis

DeepCode (now part of Snyk) uses machine learning to analyze millions of open-source repositories and provide real-time feedback on code quality, security, and maintainability. When a developer submits a pull request, DeepCode’s AI agent scans the changes, flags bugs, and recommends fixes—often catching issues that traditional linters or manual reviews might miss.

Facebook’s Sapienz for Automated Bug Detection

Facebook deploys Sapienz, a search-based automated testing tool that originated as a University College London research project, to test and analyze code changes in mobile apps. By generating and executing test cases, Sapienz uncovers bugs and performance regressions before code is merged, significantly reducing the number of issues that reach production.

Microsoft’s Security Risk Detection

Microsoft uses AI agents to scan code for security vulnerabilities as part of its Secure Development Lifecycle. These agents analyze pull requests, cross-reference code with known vulnerability databases, and provide actionable feedback to developers—helping teams address security risks early in the development process.

Custom AI Review Bots in CI/CD Pipelines

Many companies build their own AI-powered bots that integrate with CI/CD pipelines. These bots automatically review pull requests, enforce coding standards, and block merges if critical issues are detected. For example, a fintech company might use an AI agent to ensure that all code handling sensitive data passes strict security checks before deployment.

Summary

These examples show that AI-powered code review is already making a difference in real-world projects. By automating routine checks, surfacing hidden issues, and providing intelligent suggestions, AI agents help teams deliver higher-quality, more secure software—faster and with greater confidence. As adoption grows, we can expect even more innovative applications and deeper integration of AI into the software development lifecycle.

Challenges and Ethical Considerations

While AI agents bring significant benefits to the code review process, their adoption also introduces new challenges and ethical questions that development teams must address to ensure responsible and effective use.

False Positives and Over-Reliance

AI agents, despite their sophistication, are not infallible. They can generate false positives—flagging code as problematic when it’s actually correct—or miss subtle issues that require human intuition. Over-reliance on AI suggestions can lead to complacency, where developers trust the agent’s output without critical evaluation. Maintaining a balance between automation and human oversight is essential to preserve code quality and developer engagement.
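One practical guard against noisy output is to track how often developers accept each rule's findings and revisit the rules that are mostly dismissed. The sketch below assumes feedback is recorded as `(rule, accepted)` pairs; the `noisy_rules` name, the thresholds, and the sample data are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def noisy_rules(
    feedback: Iterable[Tuple[str, bool]],
    min_samples: int = 5,
    min_precision: float = 0.5,
) -> List[str]:
    """Return rules whose findings were accepted less often than `min_precision`."""
    accepted: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for rule, was_accepted in feedback:
        total[rule] += 1
        accepted[rule] += int(was_accepted)
    return sorted(
        rule
        for rule in total
        # Skip rules with too little feedback to judge fairly
        if total[rule] >= min_samples and accepted[rule] / total[rule] < min_precision
    )


# Hypothetical review feedback: True means the developer acted on the finding
feedback = (
    [("todo-comment", False)] * 8
    + [("todo-comment", True)] * 2
    + [("eval-usage", True)] * 6
    + [("eval-usage", False)]
)

print(noisy_rules(feedback))
```

Rules surfaced this way are candidates for retuning or demotion to a lower severity, a decision that stays with the team.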

Bias in Training Data

AI agents learn from historical codebases and review data. If this data contains biases—such as favoring certain coding styles, ignoring edge cases, or reflecting outdated practices—the agent may perpetuate these biases in its recommendations. This can result in unfair or inconsistent feedback, especially in diverse or evolving teams. Regularly updating training data and monitoring agent behavior helps mitigate this risk.

Transparency and Explainability

Developers are more likely to trust and adopt AI-powered tools when they understand how decisions are made. Some AI agents operate as “black boxes,” making it difficult to trace the reasoning behind their suggestions. Lack of transparency can lead to frustration or resistance among team members. Choosing tools that provide clear explanations and actionable feedback fosters trust and encourages adoption.

Security and Privacy Concerns

Integrating AI agents—especially those hosted in the cloud—raises questions about data security and privacy. Source code may contain sensitive business logic, credentials, or proprietary algorithms. Teams must ensure that any code shared with AI agents is protected, complies with organizational policies, and does not expose confidential information to third parties.
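As a rough illustration of protecting code before it leaves the organization, the sketch below masks likely credentials in a source string before it would be sent to a hosted agent. The two patterns are assumptions chosen for the example (quoted values assigned to sensitive-looking names, and strings shaped like AWS access key IDs); a dedicated secret scanner covers far more formats, and the `redact` helper is hypothetical.

```python
import re

# Illustrative patterns only; real secret scanners cover many more formats.
ASSIGNMENT = re.compile(
    r"""(?ix)
    \b(?P<name>\w*(?:password|secret|api_key|token)\w*)  # sensitive-looking name
    (?P<sep>\s*[:=]\s*)                                  # assignment or mapping separator
    (?P<quote>["'])(?P<value>[^"'\n]*)(?P=quote)         # quoted literal value
    """
)
AWS_KEY_ID = re.compile(r"\bAKIA[0-9A-Z]{16}\b")


def redact(source):
    """Return (redacted_source, count) with likely secrets masked."""
    count = 0

    def mask_assignment(match):
        nonlocal count
        count += 1
        quote = match.group("quote")
        return f"{match.group('name')}{match.group('sep')}{quote}[REDACTED]{quote}"

    redacted = ASSIGNMENT.sub(mask_assignment, source)
    redacted, key_hits = AWS_KEY_ID.subn("[REDACTED]", redacted)
    return redacted, count + key_hits
```

A team might run a step like this, or simply refuse to send a file when the count is non-zero, as one layer among several. Pattern matching will miss secrets it has no rule for, so it complements access controls and organizational policy rather than replacing them.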

Intellectual Property and Ownership

When AI agents contribute to code changes or generate new code, questions arise about intellectual property and authorship. Who owns the code produced or modified by an AI agent? How should contributions be attributed? Organizations should establish clear guidelines and policies to address these issues, especially in open-source or collaborative environments.

Ethical Use and Impact on Jobs

The automation of code review and other development tasks can raise concerns about job displacement or the devaluation of human expertise. It’s important to position AI agents as tools that augment, rather than replace, human developers—freeing them to focus on higher-level problem-solving, creativity, and innovation.

Summary

The integration of AI agents into the code review process offers tremendous potential, but it also demands careful consideration of ethical, technical, and organizational challenges. By fostering transparency, maintaining human oversight, and regularly evaluating the impact of AI tools, teams can harness the benefits of automation while upholding the values of fairness, security, and responsible innovation.

The Future of Code Review: Trends and Predictions

The landscape of code review is evolving rapidly, driven by advances in artificial intelligence, automation, and collaborative development practices. As AI agents become more sophisticated and deeply integrated into developer workflows, several key trends and predictions are emerging for the future of code review.

Deeper Human-AI Collaboration

The future will see even tighter collaboration between developers and AI agents. Rather than simply flagging issues, AI will become a true partner in the review process—offering context-aware suggestions, learning from team preferences, and adapting to project-specific standards. Human reviewers will focus more on architectural decisions, creative problem-solving, and mentoring, while AI handles routine checks and pattern recognition.

Proactive and Predictive Review

AI agents will move beyond reactive analysis to proactive and predictive review. By analyzing historical data, code evolution, and team behavior, AI will anticipate potential issues before they arise, suggest improvements during coding (not just at review time), and help teams avoid common pitfalls. This shift will lead to fewer bugs, faster feedback, and a more seamless development experience.

Multi-Modal and Cross-Project Insights

Future AI agents will be able to analyze not just code, but also documentation, test cases, and even user feedback. They’ll provide holistic insights that span multiple projects, repositories, and teams—helping organizations identify systemic issues, share best practices, and maintain consistency at scale.

Personalized and Adaptive Feedback

AI-powered review will become increasingly personalized. Agents will learn individual developer strengths, weaknesses, and preferred coding styles, tailoring their feedback to maximize learning and productivity. This adaptive approach will make code review a more positive and constructive experience for everyone involved.

Integration with Emerging Technologies

As new technologies like quantum computing, blockchain, and edge AI mature, code review tools will evolve to address their unique challenges. AI agents will help developers navigate complex, distributed, and highly secure environments, ensuring that code remains robust and compliant with emerging standards.

Ethical and Responsible AI

With greater power comes greater responsibility. The future of code review will place a strong emphasis on ethical AI—ensuring transparency, fairness, and accountability in automated decision-making. Teams will adopt guidelines and tools to audit AI behavior, mitigate bias, and maintain human oversight.

Summary

The future of code review is intelligent, adaptive, and collaborative. AI agents will not replace human reviewers, but will empower them to work smarter, learn faster, and build better software. By embracing these trends and staying attuned to ethical considerations, development teams can look forward to a code review process that is not only more efficient and reliable, but also more engaging and rewarding for everyone involved.

Conclusion: Towards Smarter, Faster, and Safer Deployments

The integration of AI agents into the code review and deployment process marks a significant leap forward for software development teams. By automating routine checks, surfacing hidden issues, and providing intelligent, context-aware feedback, AI agents are transforming code review from a manual bottleneck into a streamlined, collaborative, and data-driven practice.

This shift brings tangible benefits: faster review cycles, higher code quality, and fewer bugs and vulnerabilities reaching production. Developers can focus on creative problem-solving and architectural decisions, while AI agents handle repetitive tasks and check that every change meets the team’s standards for quality and security.

However, the journey toward smarter, faster, and safer deployments is not just about technology. It’s about building trust between humans and AI, maintaining transparency, and keeping ethical considerations at the forefront. Teams must remain vigilant—reviewing AI recommendations critically, updating tools and practices as the landscape evolves, and ensuring that automation serves to empower, not replace, human expertise.

Looking ahead, the future of code review and deployment will be defined by even deeper collaboration between people and intelligent agents. As AI continues to learn and adapt, and as teams embrace best practices for integration, the software development process will become more resilient, innovative, and inclusive.

In summary, AI agents are not just tools—they are creative partners in the journey from pull request to deployment. By working together, developers and AI can achieve smarter, faster, and safer releases, setting a new standard for excellence in modern software engineering.
