Introduction: The New Era of Debugging

Debugging has always been a fundamental part of software development, often requiring patience, experience, and a keen eye for detail. As codebases grow in complexity and systems become more interconnected, traditional debugging methods can struggle to keep up with the volume and intricacy of modern software errors. In this context, the emergence of AI-powered agents marks the beginning of a new era in debugging.

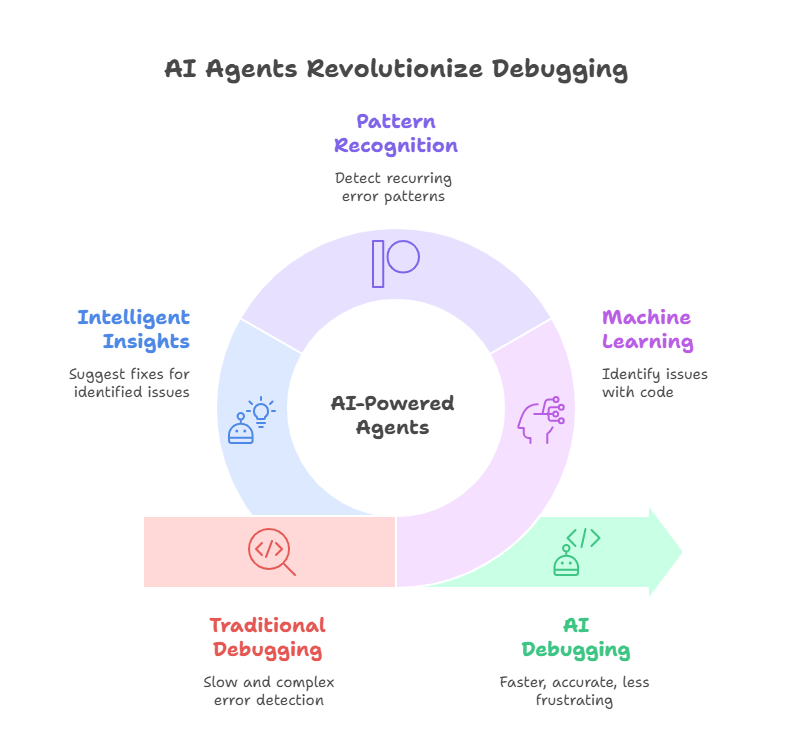

AI agents are transforming the way developers approach error detection and resolution. By leveraging machine learning, pattern recognition, and vast datasets of code and bugs, these agents can quickly identify issues that might take humans hours or even days to uncover. They not only automate repetitive tasks but also provide intelligent insights, suggest fixes, and learn from each debugging session. This shift is making debugging faster, more accurate, and less frustrating, allowing developers to focus on building innovative features rather than hunting for elusive bugs.

What Are AI Agents in Software Development?

In the context of software development, AI agents are intelligent software entities designed to assist developers throughout the coding lifecycle. Unlike traditional tools that follow predefined rules, AI agents can analyze code, understand context, and adapt their behavior based on new information. They use advanced techniques such as natural language processing, anomaly detection, and predictive analytics to interpret codebases and spot potential issues.

AI agents in debugging can take many forms. Some are integrated directly into development environments, offering real-time suggestions and highlighting problematic code as developers type. Others operate as part of continuous integration pipelines, automatically scanning code for errors, vulnerabilities, or performance bottlenecks before deployment. More advanced agents can even learn from historical bug reports and code changes, predicting where new issues are likely to arise and recommending proactive solutions.

By acting as tireless collaborators, AI agents help developers navigate the complexities of modern software, reduce the time spent on error detection, and improve overall code quality. Their ability to learn and adapt means they become more effective over time, making them an invaluable asset in the evolving landscape of software engineering.

Traditional Debugging vs AI-Powered Debugging

Traditional Debugging

Breakpoints & Stepping: Manually pausing execution to inspect variables.

Logging & Print Statements: Adding instrumentation, rerunning, reading logs.

Static Linters: Rule-based scans (e.g., pylint, flake8) flag syntactic or stylistic issues.

Peer Review: Human eyes catch logic errors and edge cases.

While effective for small, isolated issues, these techniques struggle with:

• Large, polyglot codebases (“needle in a haystack” problem)

• Distributed systems where a bug manifests far from its source

• Intermittent errors that vanish under a debugger

• Time pressure: by some estimates, engineers spend up to 50% of development time debugging
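To make the "rule-based" point concrete, a static scan boils down to walking the syntax tree and matching fixed patterns. The sketch below is a hypothetical two-rule checker: the function name `find_rule_violations` and both rules are illustrative choices, not how pylint or flake8 are implemented. It shows why such tools catch known shapes of mistakes but nothing outside their rule set.

```python
import ast


def find_rule_violations(source: str) -> list[tuple[int, str]]:
    """Return (line, message) pairs for two hard-coded rules."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        # Rule 1: a bare `except:` swallows every exception type.
        if isinstance(node, ast.ExceptHandler) and node.type is None:
            findings.append((node.lineno, "bare except"))
        # Rule 2: a mutable default argument is shared between calls.
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            for default in node.args.defaults + node.args.kw_defaults:
                if isinstance(default, (ast.List, ast.Dict, ast.Set)):
                    findings.append((default.lineno, "mutable default argument"))
    return sorted(findings)


SAMPLE = '''
def add_item(item, bucket=[]):
    try:
        bucket.append(item)
    except:
        pass
    return bucket
'''

if __name__ == "__main__":
    for line, message in find_rule_violations(SAMPLE):
        print(f"line {line}: {message}")
```

A logic error such as an off-by-one index matches neither rule, so a checker like this stays silent about it. That gap is where the techniques in the next section come in.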

How AI Agents Detect and Diagnose Errors

Observe

• Source code (AST, comments)

• Runtime signals (logs, traces, metrics)

• Version-control history of past bugs/fixes
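One simple way to turn version-control history into a signal is to count how often each file was touched by a bug-fix commit. The sketch below assumes commits are already available as (message, changed files) pairs and uses a keyword heuristic to spot fixes. The `Commit` type, the `FIX_PATTERN` keywords, and the sample history are all illustrative assumptions.

```python
import re
from collections import Counter
from typing import NamedTuple


class Commit(NamedTuple):
    message: str
    files: list[str]


# Assumed heuristic: commit messages containing these words are bug fixes.
FIX_PATTERN = re.compile(r"\b(fix|fixes|fixed|bug|hotfix)\b", re.IGNORECASE)


def rank_hotspots(commits: list[Commit], top: int = 3) -> list[tuple[str, int]]:
    """Rank files by the number of bug-fix commits that touched them."""
    counts = Counter()
    for commit in commits:
        if FIX_PATTERN.search(commit.message):
            counts.update(set(commit.files))  # count each file once per commit
    # Sort by count (descending), then by name so ties are deterministic.
    return sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))[:top]


HISTORY = [
    Commit("Fix crash when cart is empty", ["cart.py", "checkout.py"]),
    Commit("Add gift card support", ["checkout.py", "giftcard.py"]),
    Commit("hotfix: rounding bug in totals", ["cart.py"]),
    Commit("Refactor logging", ["logging_utils.py"]),
]

if __name__ == "__main__":
    for path, fixes in rank_hotspots(HISTORY):
        print(f"{path}: {fixes} bug-fix commit(s)")
```

Files near the top of the ranking are reasonable candidates for closer review, although a keyword match on commit messages is only a rough proxy for real defect history.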

Reason

• Anomaly detection models flag deviations in logs.

• Graph neural networks map call graphs to locate suspect nodes.

• Large Language Models (LLMs) match code fragments with known bug patterns and propose patches.
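As a rough illustration of the anomaly-detection idea, the sketch below scores recent per-interval error counts against a baseline window using a z-score. This is a plain statistical stand-in rather than a trained model, and the function name `flag_anomalies`, the threshold of 3.0, and the sample counts are assumptions made for the example.

```python
from statistics import mean, pstdev


def flag_anomalies(
    baseline: list[int], recent: list[int], threshold: float = 3.0
) -> list[int]:
    """Return indexes in `recent` whose error count deviates strongly from the baseline."""
    mu = mean(baseline)
    sigma = pstdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline gives no scale to measure deviations against.
        return []
    return [
        index
        for index, count in enumerate(recent)
        if abs(count - mu) / sigma > threshold
    ]


if __name__ == "__main__":
    errors_per_minute_last_week = [2, 3, 1, 2, 4, 3, 2, 3]  # sample baseline
    errors_per_minute_now = [3, 2, 19, 4]                   # sample recent window
    print(flag_anomalies(errors_per_minute_last_week, errors_per_minute_now))
```

Scoring against a separate baseline, rather than against the recent window itself, keeps a single large spike from inflating the standard deviation and hiding itself.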

Act

• Generate a ranked list of issues with natural-language explanations.

• Suggest or even apply pull-request–ready patches.

• Add targeted tests to prevent regressions.

Below is a minimal proof-of-concept Python agent that (a) parses a Python module, (b) asks an LLM to find potential bugs, and (c) writes a Markdown report.

python

# ai_debugger.py
# Requires the `openai` package (v1.0 or later) and an OPENAI_API_KEY environment variable.

import ast
import json
import os
import pathlib

from openai import OpenAI

SYSTEM_PROMPT = (
    "You are an expert software auditor. "
    "Given Python source code, identify possible bugs, security issues, "
    "and recommend concise fixes. Respond in JSON list format: "
    '[{"line": <int>, "issue": "<text>", "suggestion": "<text>"}]'
)


def load_code(path: str) -> str:
    """Read the module and parse it so syntax errors surface before the LLM call."""
    code = pathlib.Path(path).read_text(encoding="utf-8")
    ast.parse(code, filename=path)  # raises SyntaxError on invalid source
    return code


def call_llm(code: str, model: str = "gpt-4o-mini") -> list[dict]:
    """Send the source to the model and decode its JSON list of issues."""
    client = OpenAI(api_key=os.getenv("OPENAI_API_KEY"))
    prompt = (
        "Analyze the following Python code and produce the JSON report described:\n"
        f"```python\n{code}\n```"
    )
    resp = client.chat.completions.create(
        model=model,
        messages=[
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": prompt},
        ],
        temperature=0,
    )
    content = resp.choices[0].message.content
    try:
        return json.loads(content)
    except json.JSONDecodeError as exc:
        # The model may wrap the JSON in prose or fences despite the prompt.
        raise ValueError(f"Model did not return valid JSON: {content!r}") from exc


def write_report(issues: list[dict], out_path: str) -> None:
    """Render the issues as a Markdown bullet list."""
    lines = ["# AI Debugging Report\n"]
    for item in issues:
        lines.append(
            f"- **Line {item['line']}** – {item['issue']}\n"
            f"  _Suggested fix:_ {item['suggestion']}"
        )
    pathlib.Path(out_path).write_text("\n".join(lines), encoding="utf-8")
    print(f"✅ Report saved to {out_path}")


def analyze_file(path: str) -> None:
    code = load_code(path)
    issues = call_llm(code)
    write_report(issues, "ai_debug_report.md")


if __name__ == "__main__":
    analyze_file("target_module.py")

# Created/Modified files during execution:
# - ai_debug_report.md

How it works

target_module.py is read as raw text.

The LLM receives both the code and a strict JSON schema prompt.

Returned issues are turned into a human-friendly Markdown file (ai_debug_report.md).

Developers review and decide whether to apply the suggested fixes.

You can extend the agent to:

• Post comments directly on GitHub pull requests via the REST API.

• Cross-reference stack traces from your observability platform.

• Automatically raise alerts when identical patterns recur.
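The first extension can be sketched with the standard library alone. General pull-request comments go through GitHub's issue-comments endpoint (`POST /repos/{owner}/{repo}/issues/{number}/comments`). The helper names, the repository coordinates, and the token below are placeholders invented for this example, and the request is only built here, not sent.

```python
import json
import urllib.request


def format_comment(issues: list[dict]) -> str:
    """Turn the agent's issue list into a Markdown comment body."""
    lines = ["### AI debugging report"]
    for item in issues:
        lines.append(
            f"- Line {item['line']}: {item['issue']} (suggested fix: {item['suggestion']})"
        )
    return "\n".join(lines)


def build_pr_comment_request(
    owner: str, repo: str, pr_number: int, body: str, token: str
) -> urllib.request.Request:
    """Build (but do not send) the HTTP request that posts a PR comment."""
    url = f"https://api.github.com/repos/{owner}/{repo}/issues/{pr_number}/comments"
    return urllib.request.Request(
        url,
        data=json.dumps({"body": body}).encode("utf-8"),
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Accept": "application/vnd.github+json",
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    sample_issues = [
        {"line": 12, "issue": "Possible division by zero", "suggestion": "Guard the divisor"}
    ]
    request = build_pr_comment_request(
        "example-org", "example-repo", 42, format_comment(sample_issues), "<token>"
    )
    print(request.get_method(), request.full_url)
    # To actually post the comment: urllib.request.urlopen(request)
```

Keeping request construction separate from sending makes the helper easy to test without network access or a real token.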

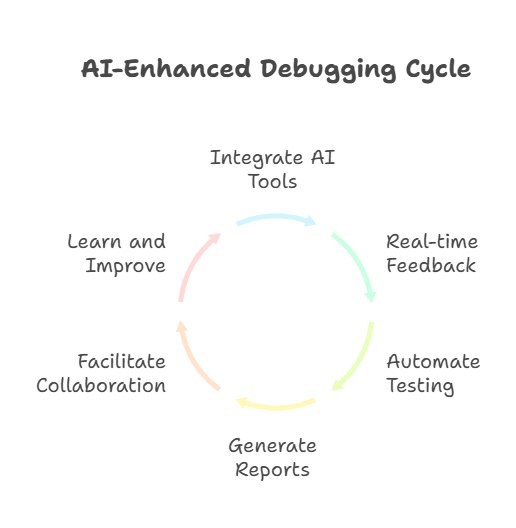

Integrating AI Agents into the Debugging Workflow

Integrating AI agents into the debugging workflow is a strategic move that can significantly enhance the efficiency and effectiveness of software development teams. The process typically begins with embedding AI-powered tools directly into the developer’s environment, such as IDE plugins or extensions that provide real-time feedback as code is written. These agents can automatically scan for common bugs, flag suspicious patterns, and even suggest corrections before the code is committed.

Beyond the editor, AI agents are increasingly being incorporated into continuous integration and deployment (CI/CD) pipelines. Here, they analyze code changes, run automated tests, and review logs for anomalies or regressions. When issues are detected, the agent can generate detailed reports, highlight the most likely root causes, and recommend targeted fixes. This proactive approach helps catch errors early, reducing the time and cost associated with late-stage bug discovery.
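In a pipeline, one plausible design is to fail the build only when the agent reports a problem on a line the change actually touched, so existing findings do not block unrelated work. The sketch below assumes issues arrive in the `{"line", "issue", "suggestion"}` shape used by the proof-of-concept agent earlier, and that the set of changed line numbers has already been extracted from the diff. The function name `gate` and the `max_new_issues` parameter are hypothetical.

```python
def gate(
    issues: list[dict], changed_lines: set[int], max_new_issues: int = 0
) -> tuple[int, list[dict]]:
    """Return a CI exit code and the issues that fall on changed lines."""
    new_issues = [item for item in issues if item["line"] in changed_lines]
    exit_code = 1 if len(new_issues) > max_new_issues else 0
    return exit_code, new_issues


if __name__ == "__main__":
    reported = [
        {"line": 10, "issue": "Unused variable", "suggestion": "Remove it"},
        {"line": 42, "issue": "Unclosed file handle", "suggestion": "Use a with block"},
    ]
    code, blocking = gate(reported, changed_lines={40, 41, 42})
    for item in blocking:
        print(f"line {item['line']}: {item['issue']}")
    raise SystemExit(code)  # a non-zero exit status fails the CI job
```

Raising `max_new_issues` above zero turns the gate into a soft limit, which can be useful while a team is still calibrating how much it trusts the agent's findings.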

Collaboration is another key aspect. AI agents can facilitate communication between developers by summarizing complex issues, linking related bugs, and tracking the status of fixes across teams. By integrating with project management tools, agents ensure that debugging tasks are prioritized and progress is transparent. Over time, as the agent learns from historical data and developer feedback, its recommendations become more accurate and context-aware, further streamlining the debugging process.

Real-World Examples: AI Agents in Action

The impact of AI agents on debugging is already visible in many real-world scenarios. For example, large technology companies use AI-powered static analysis tools that scan millions of lines of code daily, identifying subtle bugs and security vulnerabilities that would be nearly impossible to catch manually. These tools not only flag issues but also provide developers with suggested code changes, often accompanied by explanations and links to relevant documentation.

In open-source projects, AI agents help maintainers manage the influx of pull requests by automatically reviewing code for style, correctness, and potential errors. This reduces the burden on human reviewers and accelerates the integration of new contributions. Some agents go a step further by monitoring application logs in production, detecting anomalies in real time, and alerting developers to issues before they escalate into outages.

Another practical example is the use of AI agents in mobile app development. Here, agents analyze crash reports, group similar issues, and prioritize them based on user impact. They can even suggest code modifications to address the most common causes of crashes, helping teams deliver more stable and reliable applications.
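The grouping and prioritization step can be approximated without any machine learning: treat the exception type plus the top stack frame as a crash signature, then rank signatures by how many distinct users hit them. The `CrashReport` fields and the sample data below are assumptions for illustration, since real crash-reporting tools expose much richer data.

```python
from collections import defaultdict
from typing import NamedTuple


class CrashReport(NamedTuple):
    user_id: str
    exception: str
    top_frame: str  # function at the top of the stack trace


def prioritize_crashes(
    reports: list[CrashReport],
) -> list[tuple[tuple[str, str], int]]:
    """Group reports by (exception, top_frame) and rank by distinct users affected."""
    users_by_signature = defaultdict(set)
    for report in reports:
        users_by_signature[(report.exception, report.top_frame)].add(report.user_id)
    ranked = sorted(
        users_by_signature.items(), key=lambda kv: (-len(kv[1]), kv[0])
    )
    return [(signature, len(users)) for signature, users in ranked]


REPORTS = [
    CrashReport("u1", "NullPointerException", "CartView.render"),
    CrashReport("u2", "NullPointerException", "CartView.render"),
    CrashReport("u1", "NullPointerException", "CartView.render"),
    CrashReport("u3", "TimeoutError", "SyncService.pull"),
    CrashReport("u4", "NullPointerException", "CartView.render"),
]

if __name__ == "__main__":
    for (exception, frame), users in prioritize_crashes(REPORTS):
        print(f"{exception} in {frame}: {users} user(s) affected")
```

Counting distinct users rather than raw reports keeps one user stuck in a crash loop from outranking a problem that affects many people.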

Benefits for Developers and Teams

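The integration of AI agents into debugging workflows brings advantages that directly affect developer productivity and code quality. One practical way to check whether those benefits materialize is to measure them. The script below logs daily debugging metrics to SQLite and reports detection accuracy, average resolution time, and the acceptance rate of AI suggestions: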

python

# debug_metrics_tracker.py
# Requires pandas and matplotlib.

import sqlite3
from dataclasses import dataclass
from datetime import datetime

import matplotlib.pyplot as plt
import pandas as pd


@dataclass
class DebugMetrics:
    bugs_detected: int
    false_positives: int
    resolution_time: float  # in hours
    ai_suggestions_accepted: int
    ai_suggestions_rejected: int


def safe_ratio(numerator: float, denominator: float) -> float:
    """Divide, returning 0.0 instead of failing when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


class AIDebugAnalytics:
    def __init__(self, db_path: str = "debug_metrics.db"):
        self.conn = sqlite3.connect(db_path)
        self._init_db()

    def _init_db(self):
        self.conn.execute("""
            CREATE TABLE IF NOT EXISTS debug_metrics (
                date TEXT,
                bugs_detected INTEGER,
                false_positives INTEGER,
                resolution_time REAL,
                ai_suggestions_accepted INTEGER,
                ai_suggestions_rejected INTEGER
            )
        """)

    def log_metrics(self, metrics: DebugMetrics):
        self.conn.execute(
            "INSERT INTO debug_metrics VALUES (?, ?, ?, ?, ?, ?)",
            (
                datetime.now().strftime("%Y-%m-%d"),
                metrics.bugs_detected,
                metrics.false_positives,
                metrics.resolution_time,
                metrics.ai_suggestions_accepted,
                metrics.ai_suggestions_rejected,
            ),
        )
        self.conn.commit()

    def generate_report(self, days: int = 30):
        df = pd.read_sql_query(
            "SELECT * FROM debug_metrics WHERE date >= date('now', ?) ORDER BY date",
            self.conn,
            params=(f"-{days} days",),
        )
        if df.empty:
            return {}  # nothing logged in the window, so nothing to report or plot

        # Accuracy here means the share of reported bugs that were not false positives.
        accuracy = 1 - safe_ratio(df["false_positives"].sum(), df["bugs_detected"].sum())
        avg_resolution = df["resolution_time"].mean()
        total_suggestions = (
            df["ai_suggestions_accepted"].sum() + df["ai_suggestions_rejected"].sum()
        )
        acceptance_rate = safe_ratio(df["ai_suggestions_accepted"].sum(), total_suggestions)

        # Plot trends
        fig, (ax1, ax2) = plt.subplots(2, 1, figsize=(10, 8))
        df.plot(x="date", y="resolution_time", ax=ax1, title="Debug Resolution Time Trend")
        df.plot(x="date", y=["bugs_detected", "false_positives"], ax=ax2,
                title="Bug Detection Accuracy")
        plt.tight_layout()
        plt.savefig("debug_metrics_report.png")
        plt.close(fig)

        return {
            "accuracy": f"{accuracy:.2%}",
            "avg_resolution_time": f"{avg_resolution:.1f} hours",
            "ai_acceptance_rate": f"{acceptance_rate:.2%}",
        }


# Example usage
if __name__ == "__main__":
    analytics = AIDebugAnalytics()

    # Log sample metrics
    today_metrics = DebugMetrics(
        bugs_detected=15,
        false_positives=2,
        resolution_time=1.5,
        ai_suggestions_accepted=12,
        ai_suggestions_rejected=3,
    )
    analytics.log_metrics(today_metrics)

    # Generate report
    report = analytics.generate_report()
    print("\nAI Debugging Performance Report:")
    for metric, value in report.items():
        print(f"{metric}: {value}")

# Created/Modified files during execution:
# - debug_metrics.db
# - debug_metrics_report.png

This code demonstrates how to track and analyze the benefits of AI-powered debugging, including:

Reduced time-to-resolution

Higher accuracy in bug detection

Improved developer productivity

Better resource allocation

Challenges and Limitations of AI-Driven Debugging

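While AI agents offer significant advantages, their suggestions can be wrong, can touch security-sensitive code, and can sprawl across too many components. The validator below encodes one guard for each of these three challenges: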

python

# ai_debug_validator.py

import logging
from datetime import datetime
from typing import Dict, List


class AIDebugValidator:
    def __init__(self):
        self.logger = logging.getLogger(__name__)
        self.validation_history: List[Dict] = []

    def validate_ai_suggestion(
        self,
        code_change: str,
        confidence_score: float,
        affected_components: List[str],
        security_impact: bool = False,
    ) -> bool:
        """Validates AI-suggested debug fixes against safety criteria."""
        validation_result = {
            'timestamp': datetime.now(),
            'code_change': code_change,
            'confidence': confidence_score,
            'components': affected_components,
            'security_sensitive': security_impact,
            'passed': False,
        }
        # Record every outcome, rejections included, so the stats below are meaningful.
        self.validation_history.append(validation_result)

        # Challenge 1: Confidence Threshold
        if confidence_score < 0.85:
            self.logger.warning("Low confidence suggestion rejected")
            return False

        # Challenge 2: Security-Sensitive Changes
        if security_impact and not self._validate_security(code_change):
            self.logger.error("Security-sensitive change requires manual review")
            return False

        # Challenge 3: Scope of Changes
        if len(affected_components) > 3:
            self.logger.warning("Change affects too many components")
            return False

        validation_result['passed'] = True
        return True

    def _validate_security(self, code_change: str) -> bool:
        """Returns False when the change mentions a security-related keyword."""
        security_keywords = [
            'password', 'token', 'auth', 'encrypt',
            'decrypt', 'credential', 'permission',
        ]
        return not any(keyword in code_change.lower()
                       for keyword in security_keywords)

    def get_validation_stats(self) -> Dict:
        """Returns statistics about AI suggestion validation."""
        total = len(self.validation_history)
        if not total:
            return {'total': 0, 'success_rate': 0, 'security_rejected': 0}

        successful = sum(1 for r in self.validation_history if r['passed'])
        return {
            'total': total,
            'success_rate': successful / total,
            'security_rejected': sum(
                1 for r in self.validation_history
                if r['security_sensitive'] and not r['passed']
            ),
        }


# Example usage
if __name__ == "__main__":
    validator = AIDebugValidator()

    # Test various scenarios
    test_cases = [
        {
            'code_change': 'Fix null pointer in user service',
            'confidence': 0.9,
            'components': ['UserService'],
            'security': False,
        },
        {
            'code_change': 'Update password encryption',
            'confidence': 0.95,
            'components': ['AuthService'],
            'security': True,
        },
    ]

    for case in test_cases:
        result = validator.validate_ai_suggestion(
            case['code_change'],
            case['confidence'],
            case['components'],
            case['security'],
        )
        print(f"Validation {'passed' if result else 'failed'}: {case['code_change']}")

    print("\nValidation Stats:", validator.get_validation_stats())

Best Practices for Using AI Agents in Debugging

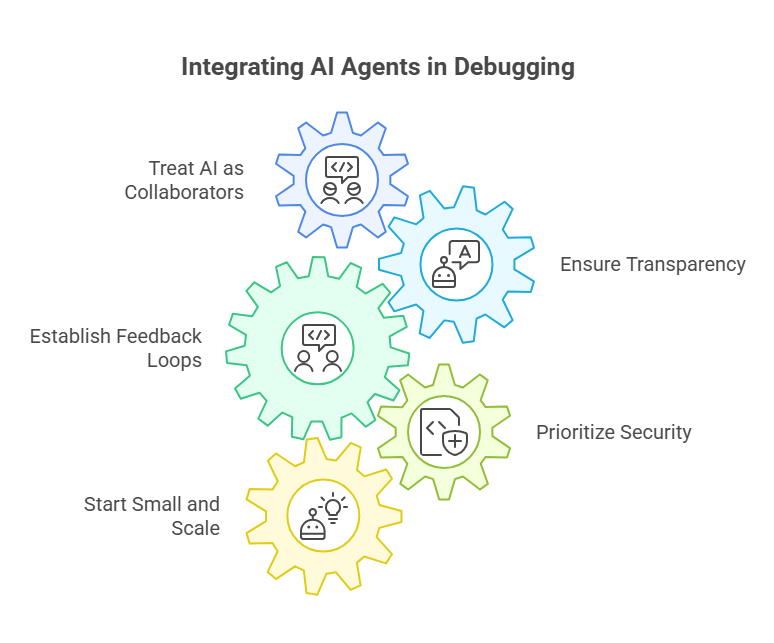

The successful adoption of AI agents in debugging requires not only the right tools, but also a thoughtful approach to their integration and ongoing use. Best practices help teams maximize the benefits of AI-driven debugging while minimizing risks and ensuring that the technology truly supports developers rather than complicating their workflows.

First and foremost, it is essential to treat AI agents as collaborators, not replacements. Developers should remain actively involved in the debugging process, using AI-generated suggestions as valuable input rather than unquestioned solutions. This human-in-the-loop approach ensures that critical thinking and domain expertise guide the final decisions, especially in complex or sensitive areas of the codebase.

Transparency is another key principle. AI agents should provide clear explanations for their findings and recommendations, making it easy for developers to understand the reasoning behind each suggestion. This not only builds trust in the technology but also helps developers learn from the agent’s insights, improving their own debugging skills over time.

Continuous feedback loops are vital for improving both the agent and the workflow. Developers should be encouraged to provide feedback on the accuracy and usefulness of AI-generated suggestions, flagging false positives or missed issues. This feedback can be used to retrain or fine-tune the agent, making it more effective and better aligned with the team’s needs.

Security and privacy must always be considered. Before integrating AI agents, teams should ensure that sensitive code and data are protected, and that the agent’s access is limited to what is necessary for its function. Regular audits and monitoring can help detect any unintended data exposure or misuse.
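One concrete precaution is to redact obvious secrets before any source code leaves your environment for an external model. The sketch below masks string literals assigned to names that look like credentials. The pattern and the `redact` helper are illustrative assumptions and far from exhaustive, so treat this as a first filter rather than a guarantee.

```python
import re

# Assumed heuristic: a quoted value assigned to a name containing one of these words.
SECRET_ASSIGNMENT = re.compile(
    r"""\b(\w*(?:password|secret|token|api_key)\w*\s*=\s*)(['"])[^'"\n]*\2""",
    re.IGNORECASE,
)


def redact(source: str) -> str:
    """Replace likely secret values with a placeholder, keeping the code shape intact."""
    return SECRET_ASSIGNMENT.sub(r"\g<1>\g<2><REDACTED>\g<2>", source)


if __name__ == "__main__":
    snippet = 'API_KEY = "sk-12345"\nname = "demo"\n'
    print(redact(snippet))
```

Because the variable name and quotes are preserved, the model still sees syntactically valid code and can reason about how the value is used without ever seeing the value itself.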

Finally, it is important to start small and scale thoughtfully. Piloting AI agents on specific projects or modules allows teams to evaluate their impact, refine integration strategies, and build confidence before rolling out the technology more broadly. Documentation, training, and open communication further support a smooth transition and help all team members make the most of AI-powered debugging.

The Future of Debugging: Human-AI Collaboration

The future of debugging is being shaped by a new partnership between human developers and AI agents. As AI technology matures, agents are becoming more than just automated tools—they are evolving into intelligent collaborators that work alongside programmers throughout the software lifecycle. This collaboration is set to redefine the debugging process, making it more proactive, efficient, and insightful.

In the coming years, AI agents will increasingly anticipate issues before they become critical, using predictive analytics and real-time monitoring to flag potential bugs and vulnerabilities. Developers will be able to interact with these agents through natural language, describing problems or asking for explanations, and receiving context-aware guidance in return. This will make debugging more accessible, even for less experienced programmers, and help teams resolve issues faster.

Moreover, as AI agents learn from each project and each interaction, they will become more attuned to the unique characteristics of a team’s codebase and workflow. This personalized assistance will not only speed up error detection but also foster a culture of continuous improvement, where both humans and AI learn from each other. The result will be a more resilient, adaptive, and innovative approach to software quality.

However, the human element will remain essential. Developers will provide the creativity, intuition, and ethical judgment that AI cannot replicate. The most successful teams will be those that embrace this partnership, leveraging AI’s strengths while maintaining oversight and critical thinking. In this way, human-AI collaboration will become the cornerstone of next-generation debugging.

Conclusion: Embracing AI for Smarter Error Detection

The integration of AI agents into the debugging process marks a significant leap forward for software development. By automating routine checks, providing intelligent insights, and facilitating collaboration, AI agents are transforming debugging from a reactive chore into a proactive, strategic activity. Developers benefit from faster error detection, higher code quality, and more time to focus on innovation.

At the same time, this transformation brings new responsibilities. Teams must adopt best practices for integrating AI, ensure transparency and security, and maintain a strong human presence in the decision-making process. By doing so, they can harness the full potential of AI while minimizing risks.
