Introduction to Adaptive User Interfaces

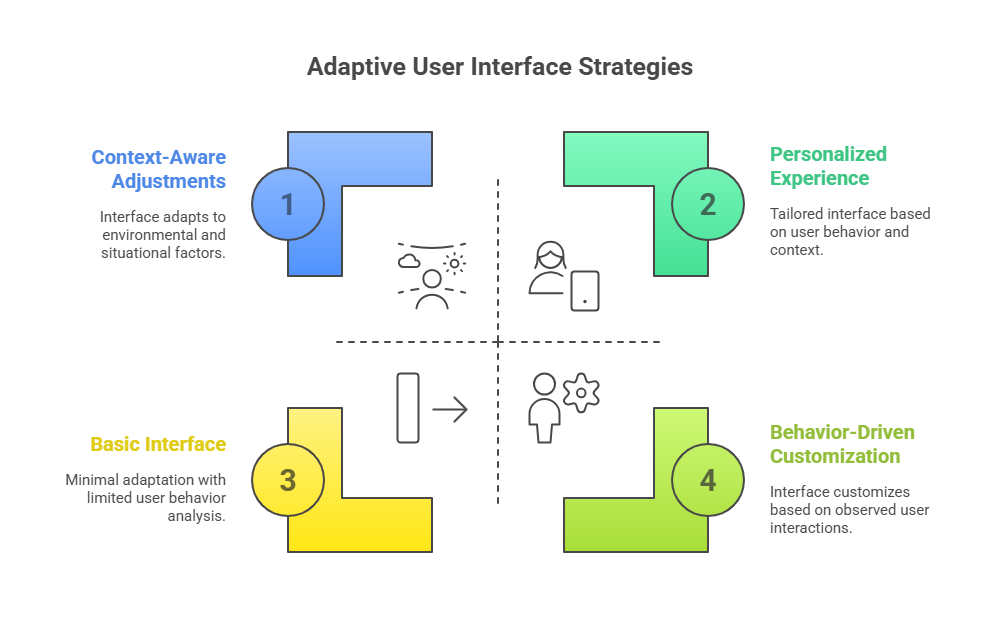

Adaptive user interfaces (UIs) adjust dynamically to the needs, preferences, and context of individual users. Unlike static interfaces, which present the same layout and options to everyone, adaptive UIs aim to personalize the experience in order to improve usability, efficiency, and satisfaction.

The growing diversity of users, devices, and usage scenarios has made adaptive interfaces increasingly important. For example, a mobile app might simplify its layout when used on a small screen or adjust content based on the user’s expertise level. Similarly, enterprise software can tailor dashboards and workflows to match different roles within an organization.

At the core of adaptive UIs is the ability to sense and interpret user behavior and environmental context. This includes tracking interactions, understanding user goals, and recognizing situational factors such as location, device type, or time of day. By leveraging this information, adaptive interfaces can modify visual elements, navigation paths, and available features in real time.

The benefits of adaptive UIs are multifaceted. They can reduce cognitive load by presenting only relevant information, improve accessibility for users with disabilities, and increase engagement by aligning with user preferences. Moreover, adaptive interfaces can support learning and skill development by gradually introducing complexity as users become more proficient.

However, designing effective adaptive UIs requires careful consideration. The system must balance adaptability with predictability to avoid confusing users. Transparency about changes and user control over adaptations are also critical to building trust.

In recent years, advances in artificial intelligence (AI) have significantly expanded the potential of adaptive user interfaces. AI agents can analyze vast amounts of data, detect patterns, and make intelligent decisions about how to tailor the UI dynamically. This integration opens new possibilities for creating highly personalized, context-aware, and responsive user experiences.

Role of AI Agents in User Interface Adaptation

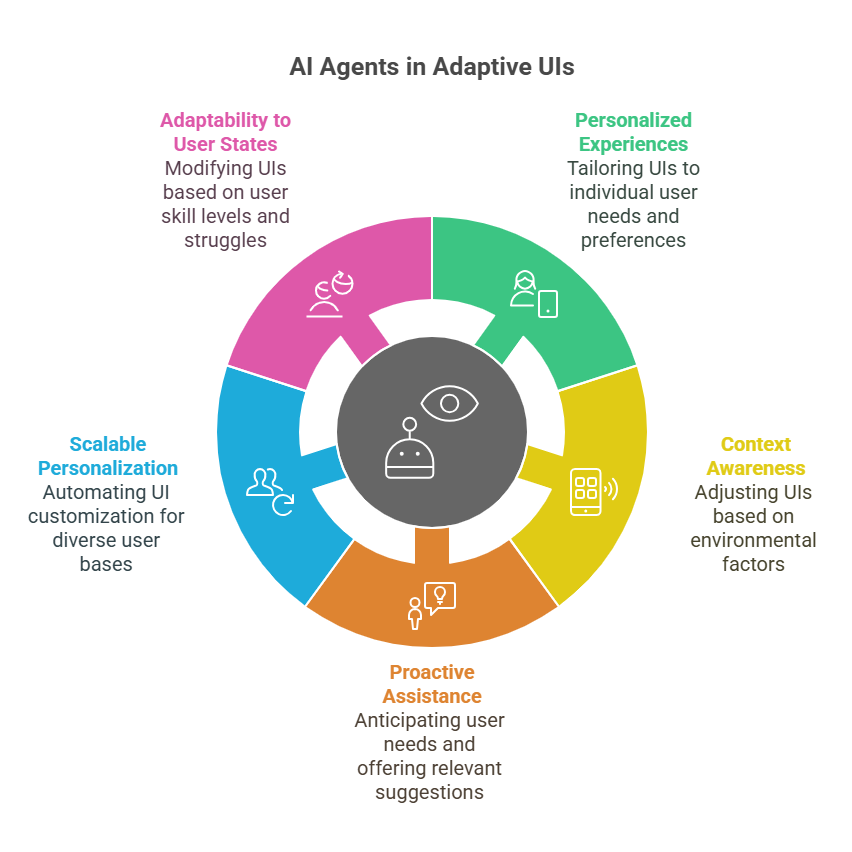

AI agents play a crucial role in enabling adaptive user interfaces by acting as intelligent intermediaries that observe, interpret, and respond to user behavior and context. Unlike traditional static interfaces, AI-powered adaptive UIs leverage these agents to deliver personalized and dynamic experiences tailored to each user’s unique needs.

At their core, AI agents collect and analyze data from user interactions, such as clicks, navigation patterns, time spent on tasks, and even biometric signals in some cases. They use this information to build a model of the user’s preferences, goals, and skill level. This continuous learning process allows the interface to evolve over time, becoming more aligned with the user’s expectations and improving overall usability.

One of the key functions of AI agents in UI adaptation is context awareness. They can detect environmental factors like device type, screen size, location, or network conditions and adjust the interface accordingly. For example, an AI agent might simplify the layout on a smartphone or prioritize offline functionality when connectivity is poor.

AI agents also enable proactive assistance by anticipating user needs. Through predictive analytics and pattern recognition, they can suggest relevant features, shortcuts, or content before the user explicitly requests them. This reduces friction and enhances efficiency, making the interaction smoother and more intuitive.

Moreover, AI agents facilitate personalization at scale. In applications with diverse user bases, manually customizing interfaces for each individual is impractical. AI agents automate this process, delivering tailored experiences that respect user diversity without requiring extensive manual configuration.

Another important aspect is adaptability to changing user states. For instance, an AI agent can recognize when a user is struggling with a task and offer additional guidance or simplify the interface. Conversely, it can introduce advanced features as the user gains expertise, supporting continuous learning and engagement.

Types of AI Agents Used in Adaptive UIs

Adaptive user interfaces rely on various types of AI agents to deliver personalized and context-aware experiences. These agents differ in their design, complexity, and methods of learning, each offering unique advantages depending on the application’s requirements. The most common types include rule-based agents, machine learning agents, and reinforcement learning agents.

Rule-Based Agents

Rule-based agents operate on predefined sets of rules created by developers or domain experts. These rules specify how the interface should adapt based on certain conditions or user actions. For example, a rule might state that if a user repeatedly struggles with a feature, the interface should display additional help tips. Rule-based agents are straightforward to implement and interpret, making them suitable for applications with well-understood adaptation logic. However, their rigidity limits their ability to handle complex or unforeseen user behaviors.
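
As a minimal sketch of the help-tips rule described above, the snippet below evaluates a small rule set against a user state. The `UserState` fields, the rule names, and the thresholds are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class UserState:
    # Hypothetical signals; a real system would define its own.
    failed_attempts: int
    sessions_completed: int


@dataclass
class Rule:
    name: str
    condition: Callable[[UserState], bool]
    adaptation: str


# Illustrative rules and thresholds, chosen arbitrarily for this sketch.
RULES = [
    Rule("show_help", lambda s: s.failed_attempts >= 3, "display_help_tips"),
    Rule("unlock_advanced", lambda s: s.sessions_completed >= 10, "show_advanced_menu"),
]


def evaluate_rules(state: UserState) -> list[str]:
    """Return the adaptations whose conditions hold for this user state."""
    return [rule.adaptation for rule in RULES if rule.condition(state)]


print(evaluate_rules(UserState(failed_attempts=4, sessions_completed=2)))
```

Because every adaptation traces back to a named rule, the behavior is easy to explain to users, but each new situation needs a new hand-written rule.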

Machine Learning Agents

Machine learning (ML) agents use data-driven models to learn patterns from user interactions and make adaptation decisions. Unlike rule-based agents, ML agents can generalize from past data to predict user preferences and behaviors without explicit programming for every scenario. Common techniques include supervised learning, where the agent learns from labeled examples, and unsupervised learning, which identifies hidden patterns in data. ML agents enable more flexible and scalable adaptation, allowing interfaces to evolve as more user data becomes available.

Reinforcement Learning Agents

Reinforcement learning (RL) agents learn optimal adaptation strategies through trial and error by interacting with users and receiving feedback in the form of rewards or penalties. Over time, these agents discover which interface adjustments lead to better user outcomes, such as increased engagement or task completion rates. RL agents are particularly useful in dynamic environments where user preferences may change frequently. However, they require careful design to ensure that learning does not negatively impact the user experience during exploration phases.
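
One of the simplest settings related to reinforcement learning is the multi-armed bandit. The sketch below uses an epsilon-greedy strategy to choose between two layout variants. The variant names, the reward definition (1.0 for a completed task), and the simulated completion rates are assumptions made for this example, not measurements.

```python
import random


class EpsilonGreedyLayoutAgent:
    """Picks a UI variant, exploring at random with probability epsilon."""

    def __init__(self, variants, epsilon=0.1, seed=None):
        self.variants = list(variants)
        self.epsilon = epsilon
        self.counts = {v: 0 for v in self.variants}
        self.values = {v: 0.0 for v in self.variants}  # running mean reward
        self.rng = random.Random(seed)

    def choose(self):
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.variants)  # explore
        return max(self.variants, key=lambda v: self.values[v])  # exploit

    def update(self, variant, reward):
        # Incremental update of the mean reward observed for this variant.
        self.counts[variant] += 1
        n = self.counts[variant]
        self.values[variant] += (reward - self.values[variant]) / n


agent = EpsilonGreedyLayoutAgent(["compact", "detailed"], epsilon=0.1, seed=0)

# Simulated feedback: hypothetical task-completion probabilities per variant.
true_rates = {"compact": 0.7, "detailed": 0.4}
sim = random.Random(1)
for _ in range(500):
    variant = agent.choose()
    agent.update(variant, 1.0 if sim.random() < true_rates[variant] else 0.0)

print(agent.values)
```

Keeping `epsilon` small limits how often users see a variant the agent currently believes is worse, which is one way to contain the cost of exploration.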

Hybrid Agents

In practice, many adaptive UIs employ hybrid agents that combine elements of rule-based systems, machine learning, and reinforcement learning. This approach leverages the strengths of each type, such as the interpretability of rules and the adaptability of learning models, to create more robust and effective interfaces.

Other AI Agent Types

Additional AI agent types, such as natural language processing (NLP) agents and conversational agents, can also contribute to adaptive UIs by enabling more natural and intuitive interactions. These agents interpret user input in natural language and adapt the interface or provide assistance accordingly.

User Behavior Analysis and Context Awareness

A fundamental capability of AI agents in adaptive user interfaces is the ability to analyze user behavior and understand the context in which interactions occur. This insight enables the interface to respond intelligently and tailor itself to meet the user’s current needs, preferences, and environment.

User Behavior Analysis

User behavior analysis involves collecting and interpreting data generated by users as they interact with the interface. This data can include clicks, navigation paths, time spent on specific tasks, error rates, and even more subtle signals like hesitation or repeated attempts. By examining these patterns, AI agents can infer user goals, skill levels, and preferences.

For example, if a user frequently accesses a particular feature, the AI agent might prioritize that feature in the interface or create shortcuts to improve accessibility. Conversely, if a user struggles with certain functions, the agent can offer additional guidance or simplify the interface to reduce cognitive load.

Behavior analysis often employs machine learning techniques to detect trends and predict future actions. This predictive capability allows the interface to proactively adapt, anticipating user needs before they are explicitly expressed.
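
A lightweight way to illustrate action prediction is a first-order transition count: record which action tends to follow which, then suggest the most frequent follow-up. This is a simplified stand-in for the machine learning techniques mentioned above, and the action names are invented for the example.

```python
from collections import Counter, defaultdict


def build_transitions(sessions):
    """Count how often each action is directly followed by each other action."""
    transitions = defaultdict(Counter)
    for actions in sessions:
        for current, nxt in zip(actions, actions[1:]):
            transitions[current][nxt] += 1
    return transitions


def predict_next(transitions, current_action):
    """Return the most frequent follow-up action, or None if unseen."""
    followers = transitions.get(current_action)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical session logs, one list of actions per session.
sessions = [
    ["open_report", "filter", "export"],
    ["open_report", "filter", "share"],
    ["open_report", "filter", "export"],
]
model = build_transitions(sessions)
print(predict_next(model, "filter"))  # prints "export"
```

An interface could use such a prediction to surface the likely next action as a shortcut while leaving the regular navigation unchanged.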

Context Awareness

Context awareness extends beyond user behavior to include environmental and situational factors that influence how the interface should adapt. Key contextual elements include device type (e.g., smartphone, tablet, desktop), screen size, location, time of day, network connectivity, and even ambient conditions like lighting or noise levels.

For instance, an AI agent might adjust the interface layout for a smaller screen, switch to a dark mode in low-light environments, or reduce data usage when network bandwidth is limited. Location-based adaptations could include displaying region-specific content or adjusting language settings automatically.
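
The adjustments above can be sketched as a plain mapping from context readings to UI settings. The `Context` fields and all thresholds here are illustrative assumptions; a real application would read these values from platform APIs and tune the cut-offs.

```python
from dataclasses import dataclass


@dataclass
class Context:
    screen_width_px: int
    ambient_lux: float
    downlink_mbps: float


def adapt_to_context(ctx: Context) -> dict:
    """Map context readings to UI settings; thresholds are illustrative."""
    return {
        "layout": "compact" if ctx.screen_width_px < 600 else "full",
        "theme": "dark" if ctx.ambient_lux < 50 else "light",
        "image_quality": "low" if ctx.downlink_mbps < 1.5 else "high",
    }


print(adapt_to_context(Context(screen_width_px=390, ambient_lux=12.0, downlink_mbps=0.8)))
```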

Context awareness also encompasses the user’s current task or workflow stage. By recognizing what the user is trying to accomplish, the AI agent can present relevant tools, information, or shortcuts to streamline the process.

Combining Behavior and Context

The most effective adaptive interfaces integrate both user behavior analysis and context awareness. This combination allows AI agents to create highly personalized experiences that are sensitive not only to who the user is but also to where, when, and how they are interacting with the system.

Privacy Considerations

Collecting and analyzing user behavior and context data raises important privacy and ethical concerns. Adaptive systems must ensure transparency, obtain user consent, and implement robust data protection measures to maintain trust.

Design Principles for Adaptive User Interfaces

Creating effective adaptive user interfaces requires careful adherence to design principles that ensure the system remains intuitive, user-friendly, and trustworthy. These principles guide how AI agents implement adaptations while maintaining a positive user experience.

User-Centered Adaptation

Adaptations should prioritize the user’s needs, preferences, and goals. Designers must ensure that changes enhance usability rather than complicate it. This involves understanding the target audience and tailoring adaptations to support their specific tasks and skill levels.

Transparency and Feedback

Users should be aware when the interface adapts and understand why changes occur. Providing clear feedback about adaptations helps build trust and reduces confusion. For example, subtle notifications or visual cues can inform users about new features or layout adjustments.

Control and Customization

While AI agents can automate adaptations, users should retain control over the interface. Offering options to customize or override adaptations empowers users and prevents frustration. This balance between automation and user control is essential for acceptance and satisfaction.
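
One possible way to keep users in control is to resolve each setting in a fixed priority order: explicit user choice first, then the agent's suggestion, then the default. The function and setting names below are hypothetical. Recording the source of each value also lets the interface explain why a setting has its current value.

```python
def resolve_settings(defaults, agent_suggestions, user_overrides):
    """Return {setting: (value, source)} where explicit user choices always win."""
    resolved = {}
    for key, default in defaults.items():
        if key in user_overrides:
            resolved[key] = (user_overrides[key], "user")
        elif key in agent_suggestions:
            resolved[key] = (agent_suggestions[key], "agent")
        else:
            resolved[key] = (default, "default")
    return resolved


settings = resolve_settings(
    defaults={"layout": "full", "theme": "light", "tips": "on"},
    agent_suggestions={"layout": "compact", "theme": "dark"},
    user_overrides={"theme": "light"},
)
print(settings)
```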

Consistency and Predictability

Adaptive changes must maintain a consistent look and feel to avoid disorienting users. Predictable behavior helps users build mental models of the interface, making it easier to learn and navigate. Sudden or frequent drastic changes should be avoided.
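
A common technique for avoiding frequent back-and-forth changes is hysteresis: use a higher threshold to switch one way than to switch back. The sketch below assumes a hypothetical skill score between 0 and 1, and the two thresholds are arbitrary.

```python
class StableModeSwitch:
    """Switches between 'simple' and 'advanced' modes with hysteresis."""

    def __init__(self, upgrade_at=0.7, downgrade_at=0.4):
        self.upgrade_at = upgrade_at
        self.downgrade_at = downgrade_at
        self.mode = "simple"

    def update(self, skill_score):
        # The mode changes only when the score crosses the threshold
        # for the opposite mode, so small fluctuations are ignored.
        if self.mode == "simple" and skill_score >= self.upgrade_at:
            self.mode = "advanced"
        elif self.mode == "advanced" and skill_score <= self.downgrade_at:
            self.mode = "simple"
        return self.mode


switch = StableModeSwitch()
print([switch.update(score) for score in [0.5, 0.72, 0.65, 0.55, 0.38]])
```

In this run the interface stays in advanced mode while the score hovers between the two thresholds and only falls back once it drops to 0.38.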

Minimal Intrusiveness

Adaptations should be subtle and non-disruptive. The interface must continue to support the user’s workflow without causing interruptions or distractions. Gradual changes are often more effective than abrupt modifications.

Context Sensitivity

Designers should ensure that adaptations are appropriate to the user’s current context, including device, environment, and task. Context-aware adaptations improve relevance and usability, making the interface more responsive to real-world conditions.

Privacy and Ethical Considerations

Respecting user privacy is paramount. Adaptive systems must handle personal data responsibly, obtain informed consent, and provide options to opt out of data collection or adaptation features.

Evaluation and Iteration

Continuous testing and user feedback are vital to refine adaptive interfaces. Designers should monitor how adaptations impact user experience and make iterative improvements based on real-world usage.

Technologies and Frameworks for Building AI-Driven UIs

Building AI-driven adaptive user interfaces requires a combination of tools, libraries, and platforms that facilitate the integration of artificial intelligence into UI development. These technologies enable developers to implement user behavior analysis, context awareness, machine learning models, and real-time adaptation efficiently.

AI and Machine Learning Frameworks

Popular machine learning frameworks like TensorFlow, PyTorch, and scikit-learn provide the foundation for building AI models that can analyze user data and predict preferences. These frameworks support training, evaluation, and deployment of models that power adaptive behaviors in UIs.

Natural Language Processing (NLP) Libraries

For interfaces involving conversational agents or text-based interactions, NLP libraries such as Hugging Face Transformers, spaCy, and NLTK are essential. They enable understanding and generation of human language, allowing AI agents to interpret user input and respond contextually.

Frontend Frameworks with AI Integration

Modern frontend frameworks like React, Angular, and Vue.js can be combined with AI services to create dynamic and responsive interfaces. Libraries such as TensorFlow.js allow running machine learning models directly in the browser, enabling real-time adaptation without server round-trips.

AI Service Platforms

Cloud platforms like Google Cloud AI, Microsoft Azure AI services (formerly Azure Cognitive Services), and AWS AI Services offer pre-built AI capabilities such as image recognition, speech-to-text, and recommendation engines. These services can be integrated into UIs to add intelligent features quickly.

User Behavior Analytics Tools

Tools like Mixpanel, Amplitude, and Hotjar collect and analyze user interaction data, which can feed AI models for personalization. These platforms provide APIs and SDKs to capture detailed behavioral metrics.

Context Awareness SDKs

To incorporate environmental context, developers can use SDKs that access device sensors, location data, and system information. For example, mobile development kits (Android SDK, iOS SDK) provide APIs for detecting device orientation, connectivity, and other contextual factors.

Adaptive UI Frameworks

Some specialized frameworks focus on adaptive UI design, such as Adaptive Cards by Microsoft, an open JSON-based card format that host applications render natively in their own style. Because a card is plain data, an AI agent can choose or assemble a different card for each user or context to deliver personalized layouts and content.

Example: Simple Python Code for User Behavior Analysis Using Machine Learning

Below is a basic example demonstrating how to use Python with scikit-learn to analyze user behavior data and predict user preferences for UI adaptation. This example uses a decision tree classifier to predict whether a user prefers a simplified or advanced interface based on interaction features.

```python
from sklearn.tree import DecisionTreeClassifier
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score

# Sample user behavior data: [time_on_task, error_rate, clicks_per_session]
# Toy data for illustration only; a real model needs far more samples.
X = [
    [300, 0.05, 20],  # User 1
    [600, 0.01, 50],  # User 2
    [200, 0.10, 15],  # User 3
    [800, 0.00, 70],  # User 4
    [150, 0.15, 10],  # User 5
]

# Labels: 0 = prefers simplified UI, 1 = prefers advanced UI
y = [0, 1, 0, 1, 0]

# Split data into training and testing sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Initialize and train the decision tree classifier
clf = DecisionTreeClassifier()
clf.fit(X_train, y_train)

# Predict on test data
y_pred = clf.predict(X_test)

# Evaluate accuracy (with a single test sample this is only a smoke check)
accuracy = accuracy_score(y_test, y_pred)
print(f"Prediction accuracy: {accuracy:.2f}")

# Example prediction for a new user
new_user = [[400, 0.03, 30]]
preference = clf.predict(new_user)
ui_type = "Advanced UI" if preference[0] == 1 else "Simplified UI"
print(f"Recommended interface: {ui_type}")
```

This code illustrates how AI models can be trained on user interaction data to inform UI adaptation decisions, enabling personalized experiences based on predicted user preferences.

Personalization Strategies Using AI Agents

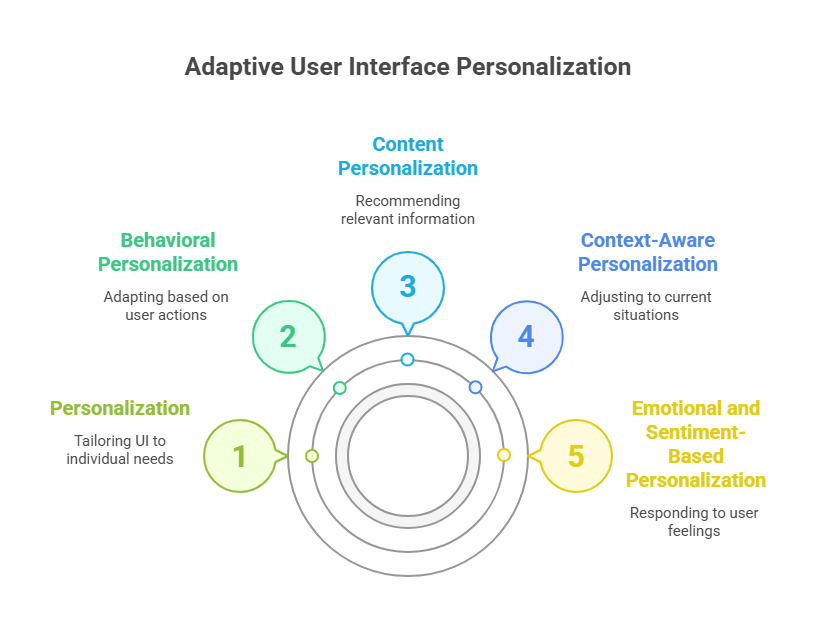

Personalization is at the heart of adaptive user interfaces, aiming to tailor UI elements, content, and workflows to meet the unique needs and preferences of individual users. AI agents enable dynamic and intelligent personalization by continuously learning from user interactions and contextual data. Below are key strategies for achieving effective personalization using AI agents.

Behavioral Personalization

AI agents analyze user behavior such as click patterns, navigation paths, time spent on tasks, and error rates to infer preferences and skill levels. Based on this analysis, the interface can adapt by highlighting frequently used features, simplifying complex workflows for novice users, or offering shortcuts for power users. For example, an AI agent might reorder menu items to prioritize the most accessed functions for a particular user.
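
The menu reordering mentioned above can be sketched with a simple usage count. The menu items, the usage log, and the idea of pinned items that never move are assumptions added for illustration.

```python
from collections import Counter


def reorder_menu(menu_items, usage_log, pinned=()):
    """Order menu items by usage count, keeping pinned items first."""
    counts = Counter(usage_log)
    pinned_items = [item for item in menu_items if item in pinned]
    rest = [item for item in menu_items if item not in pinned]
    # list.sort is stable, so items with equal counts keep their original order.
    rest.sort(key=lambda item: -counts[item])
    return pinned_items + rest


menu = ["File", "Edit", "View", "Export", "Help"]
log = ["Export", "Export", "View", "Export", "Edit", "View"]
print(reorder_menu(menu, log, pinned=("File",)))
```

Pinning a few anchor items is one way to personalize ordering while keeping part of the menu predictable.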

Content Personalization

Personalizing content involves tailoring the information, recommendations, or media presented to the user. AI agents use collaborative filtering, content-based filtering, or hybrid recommendation algorithms to suggest relevant articles, products, or tutorials. This approach enhances user engagement by delivering content that aligns with individual interests and past behavior.
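
As a minimal sketch of content-based filtering, the snippet below averages the feature vectors of items a user liked into a profile and ranks the remaining items by cosine similarity to it. The feature columns and item data are made up for the example.

```python
import numpy as np

# Rows: items; columns: hypothetical topic features [python, design, data]
item_features = np.array([
    [1.0, 0.0, 1.0],  # item 0
    [0.0, 1.0, 0.0],  # item 1
    [1.0, 0.0, 0.0],  # item 2
    [0.0, 1.0, 1.0],  # item 3
])


def recommend_similar_content(liked, item_features, top_n=2):
    """Rank items the user has not liked yet by similarity to their profile."""
    profile = item_features[liked].mean(axis=0)
    norms = np.linalg.norm(item_features, axis=1) * np.linalg.norm(profile)
    # Guard against division by zero for all-zero feature vectors.
    scores = item_features @ profile / np.where(norms == 0, 1.0, norms)
    ranked = np.argsort(scores)[::-1]
    return [int(i) for i in ranked if i not in liked][:top_n]


print(recommend_similar_content([0], item_features))  # prints [2, 3]
```

Unlike collaborative filtering, this approach needs no data from other users, only descriptive features for each item.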

Context-Aware Personalization

By incorporating contextual information such as device type, location, time of day, and environmental conditions, AI agents can adjust the UI to better suit the current situation. For instance, a mobile app might switch to a simplified layout when the user is on the go or adjust brightness and contrast based on ambient lighting.

Adaptive Workflow Personalization

AI agents can personalize entire workflows by identifying the user’s goals and preferred methods of interaction. This might involve automating repetitive tasks, suggesting next steps, or dynamically adjusting the sequence of actions to optimize efficiency. For example, in a design tool, the AI might suggest templates or tools based on the user’s project history.

Emotional and Sentiment-Based Personalization

Advanced AI agents can analyze user sentiment through facial expressions, voice tone, or text input to adapt the interface emotionally. If frustration is detected, the system might offer additional help or simplify the interface to reduce cognitive load.

User Profile and Preference Learning

AI agents build and update detailed user profiles that capture preferences, expertise, and goals. These profiles enable long-term personalization that evolves as the user’s needs change. Machine learning models continuously refine these profiles by integrating new data from ongoing interactions.
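
One simple way to let a profile evolve is an exponential moving average, which blends each new observation into the stored value so that recent behavior counts more than old behavior. The profile keys and the smoothing factor `alpha` below are illustrative assumptions.

```python
def update_profile(profile, observation, alpha=0.2):
    """Blend new observations into the stored profile (exponential moving average)."""
    updated = dict(profile)
    for key, value in observation.items():
        # A key seen for the first time starts at its observed value.
        previous = updated.get(key, value)
        updated[key] = (1 - alpha) * previous + alpha * value
    return updated


profile = {"error_rate": 0.10, "avg_session_minutes": 12.0}
profile = update_profile(profile, {"error_rate": 0.20, "avg_session_minutes": 8.0})
print(profile)
```

A larger `alpha` makes the profile react faster to change, at the cost of more noise from single sessions.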

Multi-Modal Personalization

Combining data from multiple input modalities—such as touch, voice, gaze, and gestures—allows AI agents to create richer user models and deliver more nuanced personalization. For example, gaze tracking can help identify which UI elements attract attention and which are ignored, guiding layout adjustments.

Privacy-Preserving Personalization

Personalization strategies must respect user privacy by implementing data minimization, anonymization, and giving users control over their data. AI agents can perform on-device learning to personalize without transmitting sensitive data to servers.
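
Data minimization can be sketched as a filter applied before an event leaves the device or enters storage. In the example below the event fields and the key are hypothetical. Note that a keyed hash is pseudonymization rather than full anonymization, since anyone holding the key can recompute the token for a known user ID.

```python
import hashlib
import hmac


def pseudonymize_event(event, secret_key):
    """Keep only the fields needed for adaptation and replace the user ID."""
    token = hmac.new(secret_key, event["user_id"].encode(), hashlib.sha256).hexdigest()
    return {
        "user_token": token[:16],
        "feature": event["feature"],
        # Coarsen the timestamp to the hour to reduce identifiability.
        "hour": event["timestamp"] // 3600 * 3600,
    }


raw_event = {
    "user_id": "alice@example.com",
    "feature": "export",
    "timestamp": 1700003599,
    "ip": "203.0.113.7",  # dropped by the filter below
}
print(pseudonymize_event(raw_event, secret_key=b"example-key-not-for-production"))
```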

Challenges in Developing Adaptive UIs with AI

Developing adaptive user interfaces powered by AI presents a range of technical, ethical, and usability challenges. Addressing these challenges is crucial to creating systems that are effective, trustworthy, and user-friendly.

Technical Challenges

One major technical hurdle is the complexity of accurately modeling user behavior and context. User interactions can be highly variable and noisy, making it difficult for AI agents to infer intentions reliably. Additionally, real-time adaptation requires efficient processing and low-latency responses, which can be demanding, especially on resource-constrained devices.

Integrating AI models seamlessly with UI frameworks also poses challenges. Developers must ensure compatibility, maintain performance, and handle updates to both AI components and UI elements without disrupting the user experience. Data collection and management for training AI models require robust infrastructure and careful handling to maintain data quality and relevance.

Ethical Challenges

Privacy concerns are paramount when collecting and analyzing user data for adaptation. Users may be uncomfortable with extensive monitoring or unaware of how their data is used. Ensuring transparency, obtaining informed consent, and implementing strong data protection measures are essential to maintain user trust.

Bias in AI models is another ethical issue. If training data is unrepresentative or biased, the adaptive interface may unfairly favor certain user groups or reinforce stereotypes. Developers must actively work to identify and mitigate bias to create inclusive and equitable interfaces.

Usability Challenges

Adaptive interfaces risk confusing or frustrating users if changes are unpredictable or intrusive. Users may feel a loss of control if the system adapts too aggressively or without clear explanation. Balancing automation with user control is critical to avoid alienating users.

Maintaining consistency while adapting the interface is also challenging. Frequent or drastic changes can disrupt the user’s mental model, increasing cognitive load and reducing efficiency. Designers must carefully calibrate the degree and frequency of adaptations.

Evaluation and Testing Challenges

Measuring the effectiveness of adaptive UIs is complex. Traditional usability metrics may not capture the dynamic nature of adaptation. Longitudinal studies and A/B testing are often required to assess how adaptations impact user satisfaction and performance over time.
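
For an A/B test that compares task completion between a static and an adaptive variant, a two-proportion z-test is one standard way to check whether the observed difference could be due to chance. The counts below are invented for illustration.

```python
import math


def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Return (z, two-sided p-value) for the difference in success rates."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical results: 120 of 200 users completed the task with the static UI,
# 150 of 200 with the adaptive UI.
z, p = two_proportion_z_test(120, 200, 150, 200)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A single test like this captures only a snapshot, so it complements rather than replaces longitudinal measurement.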

Scalability and Maintenance

As AI-driven interfaces evolve, maintaining and scaling the system becomes challenging. Models need regular retraining with fresh data, and adaptations must remain relevant as user needs and contexts change. Ensuring the system can scale to diverse user populations without degradation is essential.

Case Studies of AI-Driven Adaptive Interfaces

AI-driven adaptive interfaces have been successfully implemented across various domains, demonstrating the potential of AI agents to enhance user experience through personalization and context-aware adaptation. Below are some notable case studies illustrating these successes.

Case Study 1: Netflix Personalized Recommendations

Netflix uses sophisticated AI algorithms to analyze viewing history, user ratings, and browsing behavior to personalize the content displayed on the user’s homepage. The system adapts movie and show recommendations in real time, increasing user engagement and satisfaction. This adaptive interface helps users discover relevant content quickly, reducing decision fatigue.

Case Study 2: Adaptive Learning Platforms (Khan Academy)

Khan Academy employs AI to tailor educational content and exercises to individual learners’ skill levels and progress. The platform adapts the difficulty of problems and suggests personalized learning paths, helping students learn at their own pace. AI agents analyze performance data to identify knowledge gaps and recommend targeted resources.

Case Study 3: Smart Home Interfaces (Google Nest)

Google Nest smart thermostats use AI to learn user preferences and daily routines, automatically adjusting temperature settings for comfort and energy efficiency. The interface adapts by providing personalized suggestions and notifications based on learned behavior and environmental context, such as weather changes.

Case Study 4: E-commerce Personalization (Amazon)

Amazon’s adaptive UI leverages AI to personalize product recommendations, search results, and promotional offers. By analyzing purchase history, browsing patterns, and demographic data, the interface dynamically adjusts to highlight products most relevant to each user, enhancing the shopping experience and increasing sales.

Example: Python Code for a Simple Recommendation System Using Collaborative Filtering

Below is a Python example demonstrating a basic collaborative filtering approach to recommend items based on user-item interaction data. This can be part of an AI-driven adaptive interface that personalizes content or product suggestions.

```python
import numpy as np
from sklearn.metrics.pairwise import cosine_similarity

# Sample user-item interaction matrix (rows: users, columns: items)
# Values represent ratings (0 means no rating)
ratings = np.array([
    [5, 3, 0, 1],
    [4, 0, 0, 1],
    [1, 1, 0, 5],
    [0, 0, 5, 4],
    [0, 1, 5, 4],
])

# Compute cosine similarity between users
user_similarity = cosine_similarity(ratings)


def recommend_items(user_index, ratings, similarity, top_n=2):
    # Weighted sum of ratings from similar users
    sim_scores = similarity[user_index]
    weighted_ratings = sim_scores.dot(ratings)
    predicted_ratings = weighted_ratings / np.abs(sim_scores).sum()

    # Exclude items already rated by the user so they are never recommended
    user_rated = ratings[user_index] > 0
    predicted_ratings[user_rated] = -np.inf

    # Recommend up to top N unrated items, highest predicted rating first
    ranked = np.argsort(predicted_ratings)[::-1]
    return [int(i) for i in ranked if not user_rated[i]][:top_n]


# Example: Recommend items for user 0
user_id = 0
recommended = recommend_items(user_id, ratings, user_similarity)
print(f"Recommended items for user {user_id}: {recommended}")
```

This code calculates user similarity based on rating patterns and recommends items that similar users liked but the target user has not yet rated. Such recommendations can be integrated into adaptive UIs to personalize content dynamically.

Future Trends in AI-Powered Adaptive User Interfaces

The field of AI-powered adaptive user interfaces is rapidly evolving, driven by advances in artificial intelligence, machine learning, and human-computer interaction. Several emerging technologies and research directions are shaping the future of adaptive UIs, promising more intelligent, intuitive, and personalized experiences.

Multimodal Interaction and Fusion

Future adaptive interfaces will increasingly leverage multimodal inputs such as voice, gesture, gaze, facial expressions, and physiological signals. Combining these diverse data streams through AI enables richer understanding of user intent and emotional state, allowing interfaces to adapt more naturally and responsively.

Explainable and Transparent AI

As AI agents become more integral to UI adaptation, there is growing emphasis on explainability. Users will demand clear, understandable explanations for why the interface adapts in certain ways. Research into explainable AI (XAI) aims to make adaptive behaviors transparent, building user trust and facilitating better human-AI collaboration.

On-Device and Edge AI

To address privacy and latency concerns, more AI processing will move from the cloud to on-device or edge computing environments. This shift enables real-time adaptation without compromising user data security, making adaptive UIs more responsive and privacy-preserving.

Personalized AI Models and Federated Learning

Future systems will employ personalized AI models that learn and evolve uniquely for each user. Federated learning techniques will allow these models to improve collaboratively across users without sharing raw data, enhancing personalization while protecting privacy.
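
The aggregation step at the heart of federated learning can be sketched as a weighted average of model weights trained locally on each device. This is a simplified illustration that assumes each client's model is a flat weight vector; the weights and sample counts are made up.

```python
import numpy as np


def federated_average(client_weights, client_sizes):
    """Average client weight vectors, weighted by local sample counts."""
    sizes = np.asarray(client_sizes, dtype=float)
    stacked = np.stack(client_weights)  # shape: (n_clients, n_weights)
    return (stacked * sizes[:, None]).sum(axis=0) / sizes.sum()


# Two hypothetical clients: only weight vectors and sample counts are shared,
# never the raw interaction data.
client_weights = [np.array([1.0, 2.0]), np.array([3.0, 4.0])]
client_sizes = [1, 3]
print(federated_average(client_weights, client_sizes))  # prints [2.5 3.5]
```

In a full system this step would repeat over many rounds, with the averaged weights sent back to clients for further local training.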

Contextual and Situational Awareness

Advances in sensor technology and AI will enable interfaces to better understand complex contextual factors such as social environment, physical activity, and cognitive load. Adaptive UIs will proactively adjust to these situational variables, optimizing usability and user comfort.

Emotional and Affective Computing

Integrating affective computing, AI agents will detect and respond to users’ emotional states more accurately. This will enable interfaces that not only adapt functionally but also provide empathetic support, improving user satisfaction and engagement.

Adaptive Interfaces for Augmented and Virtual Reality

As AR and VR technologies mature, AI-driven adaptive interfaces will play a crucial role in creating immersive, personalized experiences. AI will tailor virtual environments and interactions based on user preferences, behaviors, and physiological responses.

Ethical AI and Inclusive Design

Future adaptive UIs will increasingly incorporate ethical considerations and inclusive design principles. AI agents will be designed to avoid bias, respect user autonomy, and accommodate diverse user needs, ensuring equitable access and positive experiences for all.

Integration with Conversational AI and Digital Assistants

The convergence of adaptive UIs with conversational AI will lead to more seamless, natural interactions. AI agents will not only adapt visual elements but also engage users through dialogue, guiding workflows and providing personalized assistance.

Conclusion and Best Practices

AI-powered adaptive user interfaces represent a transformative approach to designing more personalized, efficient, and engaging user experiences. By leveraging AI agents that learn from user behavior and context, these interfaces can dynamically adjust to meet individual needs, improving usability and satisfaction.

Summary of Key Points

Adaptive UIs rely on AI techniques such as machine learning, user modeling, and context awareness to tailor content, layout, and interaction patterns. Successful implementations span diverse domains including entertainment, education, smart homes, and e-commerce. However, developing adaptive interfaces involves technical challenges like accurate user intent inference and real-time processing, as well as ethical concerns around privacy, transparency, and bias.

Future trends point toward richer multimodal interactions, explainable AI, on-device processing, and emotionally intelligent systems that better understand and respond to users. Ensuring inclusivity and ethical design remains critical as these technologies evolve.

Best Practices for Developers and Designers

To create effective AI-driven adaptive interfaces, practitioners should:

Prioritize User Privacy and Consent: Collect and use data transparently, with clear user consent and robust security measures.

Balance Adaptation and Control: Allow users to understand and override adaptations to maintain a sense of control and avoid frustration.

Design for Explainability: Provide clear explanations for adaptive behaviors to build trust and facilitate user acceptance.

Mitigate Bias: Use diverse, representative data and regularly audit AI models to prevent unfair or discriminatory outcomes.

Ensure Consistency: Adapt interfaces gradually and predictably to avoid disrupting users’ mental models.

Test Extensively: Employ longitudinal studies, A/B testing, and user feedback to evaluate the impact of adaptations on usability and satisfaction.

Leverage Multimodal Inputs: Incorporate multiple data sources to improve context awareness and adaptation accuracy.

Plan for Scalability and Maintenance: Design systems that can evolve with changing user needs and technological advances.
