Introduction to AI Microservices

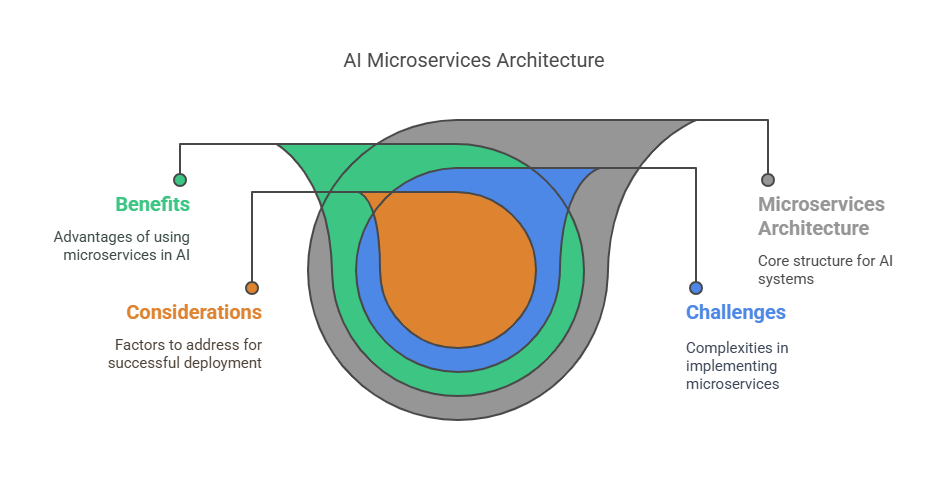

The rapid evolution of artificial intelligence has driven organizations to seek scalable, flexible, and maintainable ways to deploy machine learning (ML) solutions. One of the most effective architectural paradigms to address these needs is the microservices approach. In this section, we explore what microservices are, why they are particularly beneficial for AI and ML systems, and what unique challenges and considerations arise when applying this architecture to AI workloads.

What are Microservices?

Microservices are an architectural style that structures an application as a collection of small, independent services, each responsible for a specific business capability. Unlike monolithic architectures, where all components are tightly coupled and deployed as a single unit, microservices are loosely coupled and communicate over well-defined APIs, often using lightweight protocols such as HTTP or gRPC. Each microservice can be developed, deployed, and scaled independently, allowing teams to iterate quickly and adopt the best technology for each service.

In the context of AI, microservices might include components such as data preprocessing, feature extraction, model inference, monitoring, and result aggregation. By breaking down complex AI systems into manageable services, organizations can improve agility and resilience.

Benefits of Using Microservices for AI

Adopting microservices for AI and ML systems brings several significant advantages. First, scalability is greatly enhanced, as individual services can be scaled horizontally based on demand. For example, a model inference service can be replicated independently of a data ingestion service, ensuring efficient resource utilization.

Second, microservices foster flexibility and technology diversity. Teams can choose the most suitable programming languages, frameworks, or hardware accelerators for each service without being constrained by a monolithic codebase. This is particularly valuable in AI, where rapid innovation and experimentation are common.

Third, microservices improve maintainability and fault isolation. If a particular service fails or requires an update, it can be addressed without impacting the entire system. This modularity also supports continuous integration and deployment practices, enabling faster delivery of new features and bug fixes.

Finally, microservices facilitate collaboration among cross-functional teams. Data engineers, ML researchers, and DevOps specialists can work on different services simultaneously, streamlining the development lifecycle.

Challenges and Considerations

Despite their advantages, microservices introduce new complexities, especially in AI systems. One major challenge is managing inter-service communication and data consistency. AI workflows often require the transfer of large datasets or model artifacts between services, which can strain network resources and introduce latency.

Another consideration is orchestration and deployment. Coordinating the lifecycle of multiple services, ensuring compatibility, and managing dependencies can be daunting without robust automation tools and container orchestration platforms like Kubernetes.

Monitoring and debugging distributed AI microservices also require specialized tools for tracing, logging, and performance analysis. Ensuring security across multiple endpoints, handling authentication, and protecting sensitive data are additional concerns that must be addressed.

Core Principles of AI Microservices Architecture

Designing AI systems with a microservices architecture requires a solid understanding of several foundational principles. These principles ensure that the resulting system is scalable, maintainable, and robust, while also supporting the unique demands of machine learning workflows. In this section, we discuss the most important concepts: decoupling and independence, the single responsibility principle, API-first design, and statelessness with scalability.

Decoupling and Independence

A core tenet of microservices is that each service should be as independent as possible from the others. Decoupling means that services can be developed, deployed, and scaled without being tightly bound to the internal workings of other components. In AI systems, this might mean separating data preprocessing, model training, and inference into distinct services. Such independence allows teams to update or replace one service—such as deploying a new version of a model—without disrupting the rest of the system. Decoupling also makes it easier to isolate and resolve failures, as issues in one service are less likely to cascade throughout the architecture.

Single Responsibility Principle

The single responsibility principle states that each microservice should focus on one well-defined task or business capability. For AI microservices, this could translate to having a dedicated service for feature extraction, another for model inference, and another for monitoring predictions. By limiting the scope of each service, the architecture becomes easier to understand, test, and maintain. This principle also supports reusability, as a well-designed service can be leveraged in multiple workflows or projects without modification.

API-First Design

API-first design is the practice of defining clear, consistent, and well-documented interfaces for each microservice before implementation begins. In AI microservices, APIs are the contracts that govern how services interact, exchange data, and trigger workflows. By prioritizing API design, teams ensure that services can communicate reliably, regardless of the underlying technology stack. This approach also facilitates collaboration, as different teams can work on their respective services in parallel, guided by the agreed-upon API specifications. Well-designed APIs are essential for integrating AI microservices with external systems, such as data sources, user interfaces, or third-party platforms.

Statelessness and Scalability

Statelessness refers to the idea that each microservice should not rely on stored information from previous requests; instead, all necessary context should be provided with each interaction. Stateless services are easier to scale horizontally, as any instance can handle any request without requiring access to shared session data. In AI systems, statelessness is particularly important for services like model inference, where requests can be distributed across multiple replicas to handle high volumes of predictions. While some AI workflows, such as training or batch processing, may require stateful components, the overall architecture should strive for statelessness wherever possible to maximize scalability and resilience.

Designing AI Microservices

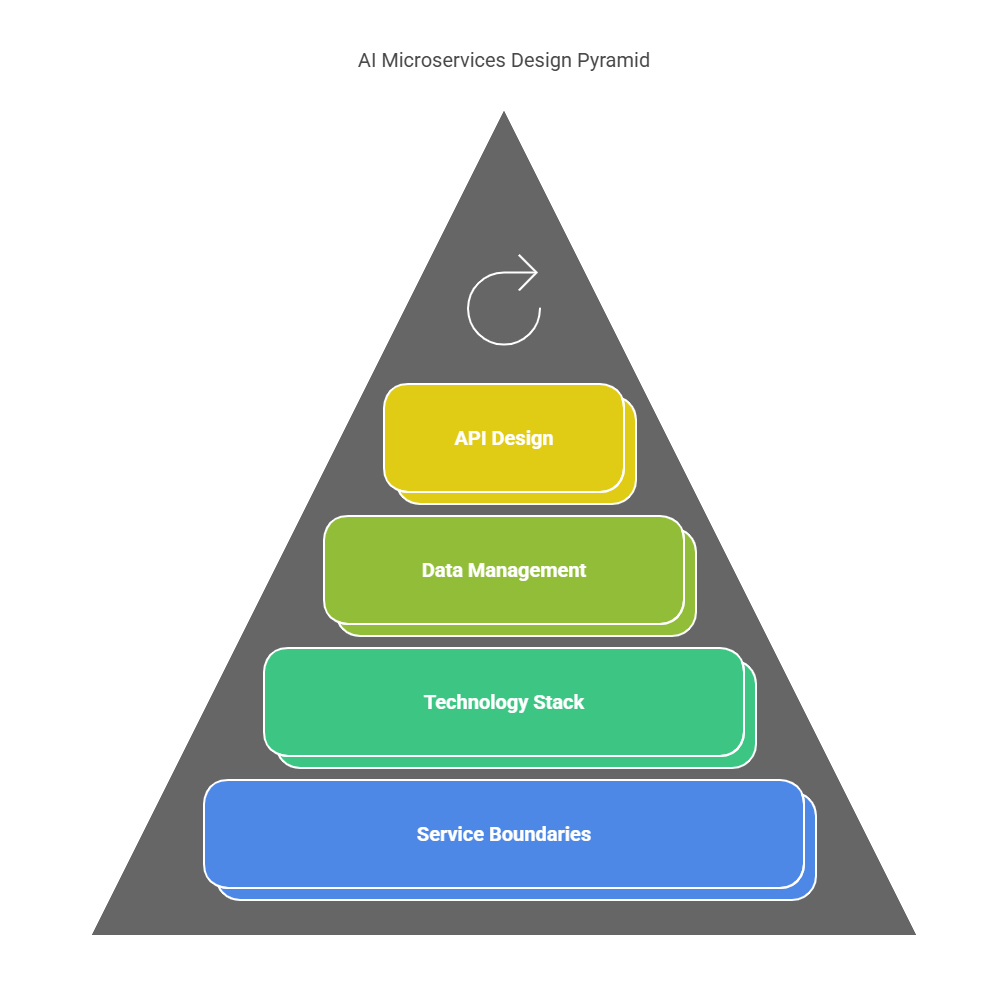

Designing effective AI microservices requires thoughtful planning and a clear understanding of both the technical and business requirements. The process involves identifying logical service boundaries, selecting appropriate technologies, managing data efficiently, and ensuring robust and future-proof API design. Each of these aspects plays a crucial role in building scalable and maintainable AI systems.

Identifying Service Boundaries

The first step in designing AI microservices is to define clear boundaries for each service. In practice, this means breaking down the overall AI workflow into discrete, manageable components. For example, data ingestion, preprocessing, feature engineering, model training, inference, and monitoring can each be implemented as separate services. The goal is to ensure that each microservice encapsulates a single, well-defined responsibility, which simplifies development, testing, and maintenance. Properly defined boundaries also help teams avoid unnecessary dependencies and make it easier to scale or update individual services as requirements evolve.

Choosing the Right Technology Stack

Selecting the appropriate technology stack for each microservice is a key design decision. AI microservices often have diverse requirements: a data preprocessing service might benefit from Python and libraries like Pandas, while a high-performance inference service could be implemented in C++ or Go for speed. The microservices approach allows teams to choose the best tool for each job, rather than being locked into a single language or framework. It is also important to consider factors such as hardware compatibility (e.g., GPU support for deep learning), ease of deployment, and the availability of community support and documentation. The right technology stack can significantly impact the performance, maintainability, and scalability of each service.

Data Management Strategies

Efficient data management is critical in AI microservices architectures. Each service may need to access, process, or store large volumes of data, and the way data flows between services can affect both performance and reliability. Common strategies include using shared data stores, message queues, or event-driven architectures to facilitate communication. For example, a data ingestion service might write raw data to a distributed storage system, which is then read by a preprocessing service. It is essential to ensure data consistency, minimize duplication, and implement robust error handling. Additionally, considerations around data privacy, security, and compliance must be addressed, especially when handling sensitive information.

API Design and Versioning

Well-designed APIs are the backbone of any microservices architecture. In AI systems, APIs define how services interact, exchange data, and trigger workflows. Good API design emphasizes clarity, consistency, and simplicity, making it easier for teams to integrate and extend services. It is also important to plan for API versioning from the outset, as changes to service interfaces are inevitable over time. Versioning allows teams to introduce new features or improvements without breaking existing clients or workflows. Documentation is equally important, as it ensures that all stakeholders understand how to use and interact with each service.

Building and Deploying AI Microservices

Translating a well-designed AI microservices architecture into a functioning, production-ready system involves several key engineering practices. These include containerization, orchestration, continuous integration and deployment (CI/CD), and infrastructure as code (IaC). Each of these practices helps ensure that AI microservices are reliable, scalable, and easy to manage throughout their lifecycle.

Containerization with Docker

Containerization is a foundational technology for microservices, and Docker is the most widely used tool for this purpose. By packaging each AI microservice and its dependencies into a lightweight, portable container, teams can ensure consistent behavior across different environments—from development to production. For AI workloads, containers can encapsulate everything from Python environments and ML libraries to pre-trained models and custom code. This approach simplifies deployment, reduces compatibility issues, and enables rapid scaling by allowing multiple instances of a service to run in parallel on the same infrastructure.

Orchestration with Kubernetes

While containers make it easy to package and run individual services, orchestrating large numbers of containers across a distributed system requires a more advanced solution. Kubernetes has become the industry standard for container orchestration. It automates the deployment, scaling, and management of containerized microservices, ensuring high availability and efficient resource utilization. In the context of AI, Kubernetes can manage GPU resources for model inference, handle rolling updates for new model versions, and automatically restart failed services. Its declarative configuration model also makes it easier to define and maintain complex AI workflows.

CI/CD Pipelines for AI Microservices

Continuous integration and continuous deployment (CI/CD) pipelines are essential for maintaining agility and quality in AI microservices development. CI/CD automates the process of building, testing, and deploying code changes, reducing the risk of human error and enabling rapid iteration. For AI projects, CI/CD pipelines can include steps such as running unit tests, validating model performance, building Docker images, and deploying updated services to staging or production environments. Automated pipelines also support reproducibility, as every deployment is tracked and can be rolled back if issues arise.

Infrastructure as Code (IaC)

Managing the infrastructure required for AI microservices—such as servers, storage, and networking—can be complex and error-prone if done manually. Infrastructure as code (IaC) addresses this challenge by allowing teams to define and provision infrastructure using machine-readable configuration files. Tools like Terraform or AWS CloudFormation enable the automated, repeatable setup of cloud or on-premises resources. For AI systems, IaC ensures that environments are consistent, scalable, and easy to update as requirements change. It also supports disaster recovery and compliance by making infrastructure changes auditable and reversible.

Communication and Integration

Effective communication and seamless integration are at the heart of any successful AI microservices architecture. As microservices interact to form complex AI workflows, the choice of communication patterns, integration tools, and data exchange mechanisms becomes critical for system performance, reliability, and scalability.

Synchronous vs. Asynchronous Communication

Microservices can communicate synchronously or asynchronously. Synchronous communication, such as RESTful APIs or gRPC, is straightforward and suitable for real-time interactions like model inference requests. However, it can introduce latency and tight coupling between services. Asynchronous communication, using message queues or event streams, decouples services and improves resilience. For example, a data preprocessing service can publish processed data to a queue, which is then consumed by a model inference service at its own pace.

Python Example: Asynchronous Communication with RabbitMQ

Here’s a simple example using the pika library to send and receive messages between microservices via RabbitMQ:

python

# Producer: sends a message to the queue

import pika

connection = pika.BlockingConnection(pika.ConnectionParameters('localhost'))

channel = connection.channel()

channel.queue_declare(queue='ai_tasks')

channel.basic_publish(exchange='',

routing_key='ai_tasks',

body='{"task": "run_inference", "data": [1,2,3]}')

print(" [x] Sent task to queue")

connection.close()python

# Consumer: receives messages from the queue

import pika

def callback(ch, method, properties, body):

print(f" [x] Received {body}")

connection = pika.BlockingConnection(pika.ConnectionParameters('localhost'))

channel = connection.channel()

channel.queue_declare(queue='ai_tasks')

channel.basic_consume(queue='ai_tasks',

on_message_callback=callback,

auto_ack=True)

print(' [*] Waiting for messages. To exit press CTRL+C')

channel.start_consuming()API Gateways and Service Meshes

As the number of microservices grows, managing communication, security, and monitoring becomes more complex. API gateways act as a single entry point for client requests, handling routing, authentication, and rate limiting. Service meshes, such as Istio or Linkerd, provide advanced features like traffic management, service discovery, and observability at the network level, without requiring changes to application code. These tools are especially valuable in AI systems where multiple services must interact securely and efficiently.

Event-Driven Architectures

Event-driven architectures enable microservices to react to changes or events in real time. For AI, this might mean triggering model retraining when new data arrives or updating downstream services when inference results are available. Event brokers like Apache Kafka or AWS SNS/SQS facilitate this pattern, allowing services to publish and subscribe to events asynchronously. This approach enhances scalability and decouples service lifecycles.

Message Queues (e.g., Kafka, RabbitMQ)

Message queues are essential for buffering and reliably delivering messages between microservices. They help smooth out spikes in workload, ensure that no data is lost if a service is temporarily unavailable, and support complex workflows such as batch processing or distributed training. In AI systems, message queues can coordinate tasks like data ingestion, preprocessing, and inference, ensuring that each stage operates efficiently and independently.

Scalability and Performance Optimization

Scalability and performance optimization are essential for AI microservices, especially as demand grows and workloads fluctuate. Ensuring that each service can handle increased traffic, process data efficiently, and maintain low latency is critical for delivering reliable AI solutions. This section explores key strategies such as horizontal scaling, load balancing, caching, and monitoring.

Horizontal Scaling

Horizontal scaling involves adding more instances of a microservice to distribute the workload. In AI systems, this is particularly useful for stateless services like model inference, where multiple replicas can process requests in parallel. Container orchestration platforms like Kubernetes make horizontal scaling straightforward by automatically launching or terminating service instances based on demand. This elasticity ensures that the system can handle traffic spikes without over-provisioning resources during quieter periods. For example, if a surge in user requests occurs, Kubernetes can spin up additional inference service pods to maintain performance.

Load Balancing

Load balancing is the process of distributing incoming requests evenly across multiple service instances. This prevents any single instance from becoming a bottleneck and helps maintain consistent response times. In AI microservices, load balancers can route requests to the healthiest and least busy instances, improving both reliability and throughput. Solutions like NGINX, HAProxy, or cloud-native load balancers (such as AWS Elastic Load Balancer) are commonly used. In Kubernetes, built-in services like kube-proxy and Ingress controllers provide robust load balancing for containerized microservices.

Caching Strategies

Caching is a powerful technique for reducing latency and improving throughput in AI microservices. Frequently accessed data, such as model predictions or preprocessed features, can be stored in fast, in-memory caches like Redis or Memcached. By serving repeated requests from the cache instead of recomputing results, services can respond more quickly and reduce the load on backend systems. In AI workflows, caching is especially useful for scenarios where the same input data is processed multiple times or when inference results are reused across different services.

Monitoring and Alerting

Continuous monitoring is vital for maintaining the health and performance of AI microservices. Monitoring tools collect metrics such as response times, error rates, resource utilization, and throughput, providing real-time visibility into system behavior. Solutions like Prometheus, Grafana, and ELK Stack (Elasticsearch, Logstash, Kibana) are widely used for this purpose. Alerting mechanisms can notify teams of anomalies, such as increased latency or service failures, enabling rapid response to issues before they impact users. In AI systems, monitoring can also track model-specific metrics, such as prediction accuracy or drift, ensuring that deployed models continue to perform as expected.

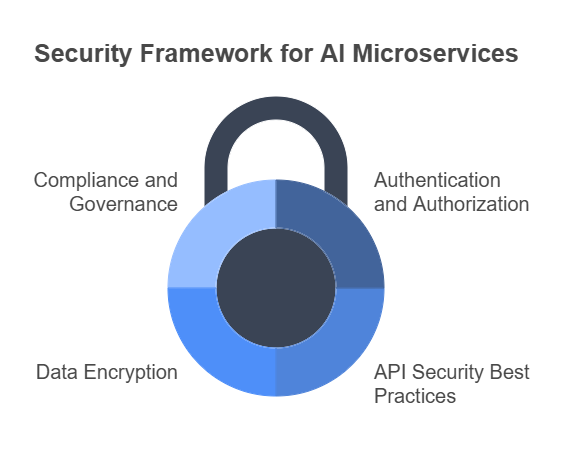

Security in AI Microservices

Security is a fundamental concern in any distributed system, and AI microservices are no exception. As these systems often handle sensitive data, proprietary models, and critical business logic, robust security measures are essential to protect against threats, ensure compliance, and maintain user trust. This section explores key aspects of security in AI microservices, including authentication and authorization, API security best practices, data encryption, and compliance and governance.

Authentication and Authorization

Authentication verifies the identity of users or services attempting to access a microservice, while authorization determines what actions they are permitted to perform. In AI microservices, strong authentication mechanisms such as OAuth 2.0, OpenID Connect, or mutual TLS are commonly used to ensure that only trusted entities can interact with services. Role-based access control (RBAC) or attribute-based access control (ABAC) can further restrict access to sensitive endpoints, such as those handling model management or data ingestion. Implementing centralized identity providers and single sign-on (SSO) solutions can simplify user management and enhance security across the microservices ecosystem.

API Security Best Practices

APIs are the primary interface for communication between microservices and with external clients, making them a frequent target for attacks. Securing APIs involves several best practices: validating all incoming data to prevent injection attacks, rate limiting to protect against denial-of-service (DoS) attacks, and using API gateways to centralize security policies. It is also important to keep API endpoints private unless public access is necessary, and to use secure protocols such as HTTPS for all communications. Regularly updating and patching dependencies, as well as conducting security audits and penetration testing, helps identify and mitigate vulnerabilities before they can be exploited.

Data Encryption

Data encryption is critical for protecting sensitive information both in transit and at rest. In AI microservices, data transmitted between services should always be encrypted using protocols like TLS to prevent interception or tampering. For data at rest, such as stored model parameters, training datasets, or logs, encryption mechanisms provided by cloud providers or open-source tools should be employed. Proper key management is essential to ensure that only authorized services and users can decrypt and access protected data. Additionally, encrypting sensitive environment variables and configuration files adds another layer of defense.

Compliance and Governance

Many AI applications operate in regulated industries, such as healthcare, finance, or government, where compliance with data protection laws and industry standards is mandatory. Ensuring compliance involves implementing policies for data retention, audit logging, and user consent, as well as maintaining detailed records of data access and processing activities. Governance frameworks help organizations define roles, responsibilities, and processes for managing security risks. Regular training and awareness programs for development and operations teams are also important to foster a culture of security and compliance.

Monitoring and Management

Effective monitoring and management are essential for maintaining the health, performance, and reliability of AI microservices in production. As these systems grow in complexity, proactive monitoring and robust management practices help teams quickly detect issues, trace problems, and ensure that services operate smoothly. This section covers the most important aspects: centralized logging, distributed tracing, health checks and self-healing, and performance monitoring.

Centralized Logging

Centralized logging involves collecting logs from all microservices into a single, searchable location. This approach simplifies troubleshooting by providing a unified view of system activity, making it easier to correlate events across services. In AI microservices, logs can capture everything from data processing steps and model predictions to error messages and system warnings. Tools like the ELK Stack (Elasticsearch, Logstash, Kibana) or cloud-native solutions such as AWS CloudWatch and Google Cloud Logging are commonly used. Centralized logging not only aids in debugging but also supports compliance and audit requirements by maintaining detailed records of system behavior.

Distributed Tracing

Distributed tracing is a technique for tracking requests as they flow through multiple microservices. In AI systems, a single user request might trigger a chain of actions—data retrieval, preprocessing, inference, and result aggregation—across several services. Distributed tracing tools like Jaeger or Zipkin assign unique identifiers to each request, allowing teams to visualize the entire journey and pinpoint bottlenecks or failures. This visibility is crucial for diagnosing latency issues, understanding dependencies, and optimizing the performance of complex AI workflows.

Health Checks and Self-Healing

Health checks are automated tests that verify whether a microservice is functioning correctly. These checks can be as simple as confirming that a service is running or as advanced as testing connectivity to databases or external APIs. Orchestration platforms like Kubernetes use health checks to monitor service status and can automatically restart or replace unhealthy instances—a process known as self-healing. In AI microservices, health checks might also include verifying that models are loaded correctly or that data pipelines are operational. Self-healing mechanisms reduce downtime and ensure that the system remains resilient in the face of failures.

Performance Monitoring

Performance monitoring involves tracking key metrics such as response times, throughput, error rates, and resource utilization (CPU, memory, GPU). In AI microservices, it is also important to monitor model-specific metrics like prediction latency, accuracy, and drift. Tools like Prometheus and Grafana provide real-time dashboards and alerting capabilities, enabling teams to detect anomalies and respond quickly to performance degradation. Continuous monitoring helps maintain service-level objectives (SLOs) and ensures that AI systems deliver consistent, reliable results to end users.

Case Studies and Real-World Examples

Examining real-world case studies provides valuable insights into how AI microservices architectures are applied across industries, the challenges teams encounter, and the lessons learned from deploying these systems at scale. This section highlights practical examples from e-commerce, finance, and healthcare, and distills key takeaways from their experiences.

AI Microservices in E-commerce

In the e-commerce sector, AI microservices are often used to power recommendation engines, dynamic pricing, and personalized marketing. For example, a large online retailer might implement separate microservices for user behavior tracking, product recommendation, and inventory management. Each service can be developed and scaled independently, allowing the retailer to quickly adapt to changing customer preferences or seasonal demand spikes. By decoupling these components, the company can update its recommendation algorithms without disrupting the checkout process or inventory updates. This modularity also enables rapid experimentation with new models and features, leading to improved customer engagement and increased sales.

AI Microservices in Finance

Financial institutions leverage AI microservices for tasks such as fraud detection, credit scoring, and risk assessment. A typical architecture might include microservices for real-time transaction monitoring, anomaly detection, and customer profiling. For instance, a bank could deploy a fraud detection service that analyzes transaction patterns and flags suspicious activity, while a separate credit scoring service evaluates loan applications. This separation of concerns enhances security and compliance, as sensitive data can be isolated and access tightly controlled. Additionally, the ability to independently update or retrain models ensures that the system remains effective as new fraud patterns emerge or regulatory requirements change.

AI Microservices in Healthcare

Healthcare organizations use AI microservices to support diagnostics, patient monitoring, and personalized treatment recommendations. For example, a hospital might implement microservices for medical image analysis, electronic health record (EHR) integration, and alerting clinicians to critical changes in patient status. By breaking down complex workflows into specialized services, healthcare providers can more easily comply with data privacy regulations and integrate new AI capabilities as they become available. This approach also facilitates collaboration between data scientists, clinicians, and IT teams, leading to more accurate diagnoses and better patient outcomes.

Lessons Learned

Across these industries, several common lessons emerge. First, clear service boundaries and robust APIs are essential for enabling independent development and deployment. Second, investing in monitoring, security, and automation pays dividends in reliability and compliance. Third, organizations must be prepared to address challenges such as data consistency, inter-service communication, and operational complexity. Finally, the flexibility of microservices allows teams to rapidly iterate, experiment, and respond to evolving business needs—making AI microservices a powerful approach for delivering real-world value.

Future Trends and Challenges

The landscape of AI microservices is rapidly evolving, driven by advances in technology, changing business needs, and new opportunities at the edge and in the cloud. As organizations continue to adopt and scale AI microservices, several emerging trends and challenges are shaping the future of this architectural approach. This section explores serverless AI microservices, edge AI microservices, AI-powered observability, and ethical considerations.

Serverless AI Microservices

Serverless computing is transforming how microservices are deployed and managed. In a serverless model, cloud providers automatically handle infrastructure provisioning, scaling, and maintenance, allowing developers to focus solely on writing code. For AI microservices, this means that inference, data processing, or even model training tasks can be executed as lightweight, event-driven functions that scale instantly with demand. Serverless platforms such as AWS Lambda, Google Cloud Functions, and Azure Functions are increasingly supporting AI workloads, including integration with GPU resources and managed ML services. The main benefits are reduced operational overhead, cost efficiency, and the ability to respond quickly to fluctuating workloads. However, challenges remain around cold start latency, resource limits, and the need for stateless design.

Edge AI Microservices

As AI applications move closer to where data is generated, edge AI microservices are gaining traction. Deploying microservices on edge devices—such as IoT sensors, smartphones, or autonomous vehicles—enables real-time inference and decision-making with minimal latency. This is particularly valuable in scenarios where connectivity to the cloud is limited or where privacy concerns require data to remain local. Edge AI microservices must be lightweight, efficient, and capable of running on resource-constrained hardware. Advances in model compression, quantization, and hardware acceleration are making it increasingly feasible to deploy sophisticated AI models at the edge. The main challenges include managing updates, ensuring security, and orchestrating distributed deployments across thousands of devices.

AI-Powered Observability

Observability is critical for understanding the behavior and performance of complex microservices systems. The next wave of observability tools is leveraging AI to provide deeper insights, automate anomaly detection, and predict potential failures before they impact users. AI-powered observability platforms can analyze vast amounts of telemetry data—logs, metrics, traces—and surface actionable insights for operations teams. For example, machine learning models can identify subtle patterns that indicate emerging issues, recommend remediation steps, or even trigger automated responses. As AI microservices architectures grow in scale and complexity, these intelligent observability tools will become essential for maintaining reliability and performance.

Ethical Considerations

As AI systems become more pervasive, ethical considerations are taking center stage. Microservices architectures introduce new challenges around transparency, accountability, and fairness. For example, when decision-making is distributed across multiple services, it can be difficult to trace how a particular outcome was reached or to audit the data and models involved. Organizations must implement robust governance frameworks, ensure explainability, and monitor for bias or unintended consequences. Privacy and data protection are also critical, especially when handling sensitive information in regulated industries. Addressing these ethical challenges requires collaboration between technologists, ethicists, and policymakers, as well as a commitment to responsible AI development and deployment.

Conclusion

The journey through AI microservices architecture reveals a landscape rich with opportunity, innovation, and complexity. As organizations strive to deliver intelligent, scalable, and reliable AI solutions, the principles and practices discussed throughout this guide become essential building blocks for success. In this final section, we summarize the key concepts, highlight best practices, and offer final thoughts and recommendations for teams embarking on or refining their AI microservices journey.

Summary of Key Concepts

AI microservices architecture is defined by its modularity, flexibility, and scalability. By decomposing complex AI systems into independent, focused services, organizations can accelerate development, improve maintainability, and respond more effectively to changing business needs. Core principles such as decoupling, single responsibility, API-first design, and statelessness provide a strong foundation for building robust systems. The adoption of modern engineering practices—containerization, orchestration, CI/CD, and infrastructure as code—enables teams to deploy and manage AI microservices efficiently at scale. Effective communication and integration, whether synchronous or asynchronous, ensure that services work together seamlessly, while strategies for scalability, performance, and security safeguard the system’s reliability and integrity.

Best Practices for AI Microservices

Success with AI microservices depends on a commitment to best practices at every stage of the lifecycle. Teams should invest in clear service boundaries, well-documented APIs, and automated testing to ensure quality and consistency. Security must be integrated from the outset, with strong authentication, encrypted data flows, and regular audits. Monitoring and management are equally important, providing the visibility needed to detect issues early and maintain high availability. Embracing automation—through CI/CD pipelines, self-healing infrastructure, and AI-powered observability—frees teams to focus on innovation rather than manual operations. Finally, fostering a culture of collaboration, continuous learning, and ethical responsibility ensures that AI systems remain trustworthy and aligned with organizational values.

Final Thoughts and Recommendations

AI microservices architecture is not a one-size-fits-all solution, but rather a flexible approach that can be tailored to the unique needs of each organization and project. Teams should start small, iteratively refine their designs, and leverage the wealth of open-source tools and cloud services available today. It is important to remain vigilant about emerging trends—such as serverless and edge computing—and to proactively address challenges around security, compliance, and ethics. By building on a solid architectural foundation and embracing best practices, organizations can unlock the full potential of AI microservices, delivering intelligent solutions that scale, adapt, and create lasting value.

AI Agents: Potential in Projects

Integration of AI agents with microservices

Next-Generation Programming: Working Side by Side with AI Agents