Introduction to AI Agents in Cloud Management

As cloud computing continues to grow in complexity and scale, managing costs and resources efficiently has become a critical challenge for organizations. Traditional manual approaches to cloud management often fall short due to the dynamic nature of cloud environments, fluctuating workloads, and the vast array of available services and pricing models. This is where AI agents come into play, offering intelligent automation and optimization capabilities that can significantly improve cloud cost management and resource utilization.

AI agents are software entities designed to autonomously perform tasks by perceiving their environment, making decisions, and learning from outcomes. In the context of cloud management, these agents continuously monitor cloud usage patterns, analyze performance metrics, and predict future demands. By leveraging machine learning, reinforcement learning, and other AI techniques, they can identify inefficiencies, recommend or implement cost-saving measures, and dynamically adjust resource allocation to match workload requirements.

The adoption of AI agents in cloud management brings several advantages. They enable real-time, data-driven decision-making that adapts to changing conditions without human intervention. This leads to more precise scaling of resources, avoidance of overprovisioning, and better alignment of cloud spending with actual business needs. Moreover, AI agents can uncover hidden cost drivers and optimize complex multi-cloud or hybrid cloud environments, which are often difficult to manage manually.

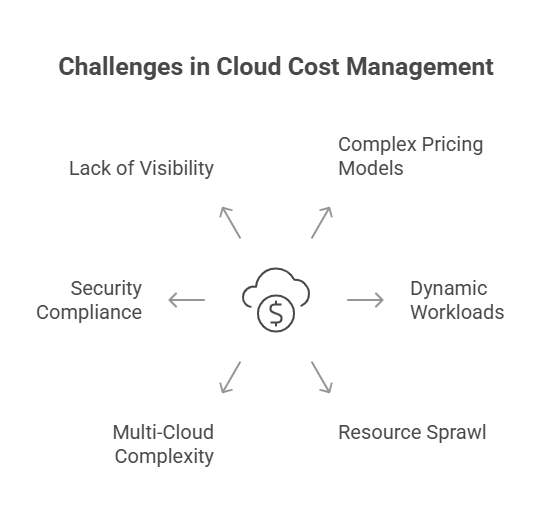

Challenges in Cloud Cost and Resource Management

Managing costs and resources in cloud environments presents a unique set of challenges that can hinder organizations from fully optimizing their cloud investments. Understanding these challenges is essential for appreciating the value that AI agents bring to cloud cost and resource optimization.

One of the primary challenges is the complexity and variability of cloud pricing models. Cloud providers offer a wide range of services with different pricing structures, including on-demand, reserved, and spot instances, each with its own cost implications. Navigating these options to select the most cost-effective combination requires deep expertise and continuous analysis.

Another significant issue is dynamic and unpredictable workloads. Cloud resource demands can fluctuate rapidly due to seasonal trends, marketing campaigns, or unexpected spikes in user activity. Without real-time monitoring and adaptive scaling, organizations risk either overprovisioning—leading to unnecessary expenses—or underprovisioning, which can degrade performance and user experience.

Resource sprawl and underutilization also contribute to inefficiencies. As organizations deploy numerous cloud services across multiple teams or departments, unused or idle resources often accumulate unnoticed, driving up costs without delivering value.

Additionally, multi-cloud and hybrid cloud environments add layers of complexity. Managing resources and costs across different platforms with varying tools, APIs, and billing systems can be overwhelming, making it difficult to maintain a unified optimization strategy.

Security and compliance requirements further complicate resource management. Ensuring that cost-saving measures do not compromise data protection or regulatory adherence demands careful balancing.

Finally, the lack of real-time visibility and actionable insights into cloud usage hampers timely decision-making. Manual analysis of billing reports and logs is often too slow and error-prone to keep pace with the dynamic cloud landscape.

Types of AI Agents Used for Optimization

In the realm of cloud cost and resource optimization, various types of AI agents are employed to address different aspects of the problem. These agents differ in their design, learning capabilities, and decision-making approaches, enabling organizations to choose or combine solutions that best fit their cloud environments and optimization goals.

Rule-Based Agents are the simplest form of AI agents. They operate based on predefined rules and policies set by cloud administrators. For example, a rule might specify shutting down idle virtual machines after a certain period or switching to cheaper instance types during low-demand hours. While rule-based agents are straightforward to implement and understand, their effectiveness is limited by the quality and completeness of the rules. They lack adaptability and cannot learn from new data or changing conditions.
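
As a minimal sketch of such a rule, the snippet below flags virtual machines whose CPU has stayed under a threshold for longer than an allowed idle window. The `Instance` record, its field names, and the 5% and 60-minute values are illustrative assumptions, not settings from any particular cloud provider.

```python
from dataclasses import dataclass


@dataclass
class Instance:
    """Hypothetical snapshot of one VM, as a monitoring system might report it."""
    instance_id: str
    avg_cpu_percent: float   # average CPU over the observation window
    idle_minutes: int        # minutes since CPU last exceeded the idle threshold


def instances_to_stop(instances, cpu_threshold=5.0, max_idle_minutes=60):
    """Apply a fixed rule: stop VMs that are both low-CPU and idle too long."""
    return [
        inst.instance_id
        for inst in instances
        if inst.avg_cpu_percent < cpu_threshold
        and inst.idle_minutes >= max_idle_minutes
    ]


# Made-up fleet used only to exercise the rule.
fleet = [
    Instance("vm-a", avg_cpu_percent=2.0, idle_minutes=180),
    Instance("vm-b", avg_cpu_percent=45.0, idle_minutes=0),
    Instance("vm-c", avg_cpu_percent=3.5, idle_minutes=20),
]
to_stop = instances_to_stop(fleet)
print(to_stop)
```

The rule never changes unless an administrator edits the thresholds, which is exactly the limitation described above.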

Machine Learning (ML) Agents leverage historical cloud usage data to identify patterns and make predictions. These agents use supervised or unsupervised learning techniques to forecast resource demand, detect anomalies, or classify workloads. For instance, an ML agent might predict peak usage periods to proactively scale resources or identify underutilized assets for rightsizing. Unlike rule-based agents, ML agents can improve over time as they are retrained on more data, enabling more accurate and nuanced optimization decisions.

Reinforcement Learning (RL) Agents represent a more advanced class of AI agents that learn optimal strategies through trial-and-error interaction with the cloud environment. RL agents receive feedback in the form of rewards or penalties based on the outcomes of their actions, such as cost savings or performance improvements. Over time, they develop policies that balance competing objectives, such as minimizing costs while maintaining service quality. RL agents are particularly suited to dynamic and complex cloud environments where explicit rules are hard to define.
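
The reward-and-penalty loop can be illustrated with an epsilon-greedy bandit, which is a deliberately simplified stand-in for full reinforcement learning (there is no state and there are no transitions). The simulated environment, the cost and penalty constants, and the replica options below are all assumptions made for the sketch.

```python
import random

REPLICA_OPTIONS = [1, 2, 3, 4]   # actions: how many replicas to run
DEMAND_UNITS = 3                 # assumed steady demand; one replica serves one unit
COST_PER_REPLICA = 1.0           # hypothetical hourly cost per replica
SLA_PENALTY = 10.0               # hypothetical penalty when capacity < demand


def reward(replicas, rng):
    """Negative cost, minus a penalty if demand is unmet, plus small bounded noise."""
    penalty = SLA_PENALTY if replicas < DEMAND_UNITS else 0.0
    noise = rng.uniform(-0.4, 0.4)
    return -(replicas * COST_PER_REPLICA) - penalty + noise


def train(steps=500, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    # Estimates start at 0, which is optimistic here because every reward is
    # negative, so greedy selection tries each untried action first.
    q = {a: 0.0 for a in REPLICA_OPTIONS}
    counts = {a: 0 for a in REPLICA_OPTIONS}
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.choice(REPLICA_OPTIONS)       # explore
        else:
            action = max(q, key=q.get)                 # exploit best estimate
        r = reward(action, rng)
        counts[action] += 1
        q[action] += (r - q[action]) / counts[action]  # running mean of rewards
    return q


q_values = train()
best_replicas = max(q_values, key=q_values.get)
print(best_replicas)
```

The agent is never told that three replicas is the cheapest configuration that meets demand; it infers this from the rewards it observes. A production agent would face changing demand and would need a state-aware method rather than a bandit.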

Hybrid Agents combine elements of rule-based, ML, and RL approaches to leverage the strengths of each. For example, a hybrid agent might use rules to enforce critical compliance constraints, ML models to predict workload trends, and RL to optimize resource allocation dynamically. This combination allows for flexible, robust, and context-aware optimization.

In addition to these core types, AI agents may incorporate natural language processing (NLP) to interpret user commands or integrate with cloud management platforms via APIs for seamless automation.

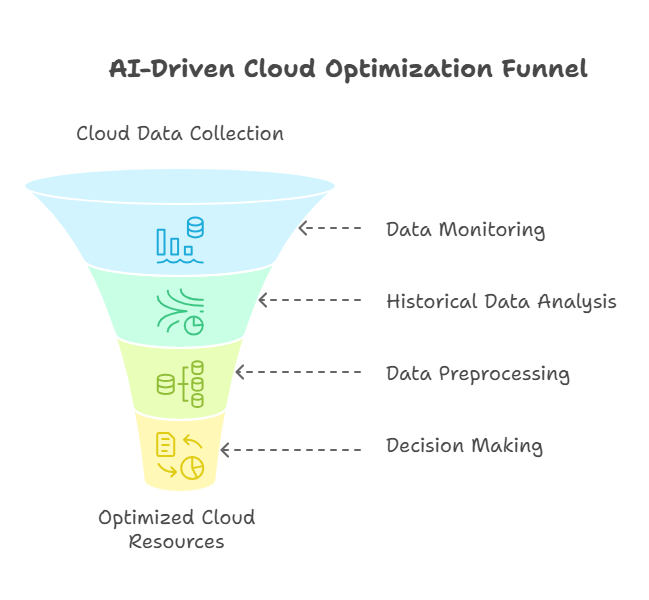

Data Collection and Monitoring for Optimization

Effective cloud cost and resource optimization by AI agents relies heavily on comprehensive data collection and continuous monitoring. Gathering accurate, timely, and relevant data is the foundation that enables AI agents to analyze usage patterns, detect inefficiencies, and make informed decisions.

Cloud environments generate vast amounts of data from various sources, including virtual machines, containers, storage systems, network components, and application logs. Key data points collected typically include resource utilization metrics (CPU, memory, disk I/O), network traffic, instance uptime, workload characteristics, and billing information. Additionally, metadata such as instance types, regions, and service configurations provide context necessary for meaningful analysis.

Monitoring tools and cloud provider APIs play a crucial role in data acquisition. Many cloud platforms offer native monitoring services, such as AWS CloudWatch, Azure Monitor, or Google Cloud Operations Suite, that provide near-real-time metrics and alerts. AI agents integrate with these services to continuously ingest data streams, ensuring up-to-date visibility into the cloud environment.

Beyond raw metrics, AI agents often collect historical data to identify trends and seasonal patterns. This historical perspective is essential for building predictive models that forecast future resource demands and costs.

Data quality and granularity are important considerations. High-resolution data enables more precise optimization but may increase storage and processing overhead. AI agents must balance these factors to maintain efficiency.

Once collected, data is preprocessed to handle missing values, normalize scales, and extract relevant features. This step prepares the data for machine learning algorithms or rule-based analysis.
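
The three preprocessing steps can be sketched in plain Python as follows. The forward-fill strategy, min-max scaling, and trailing-mean feature are one reasonable set of choices among many, and the sample values are made up. The sketch assumes the series starts with a known value.

```python
def forward_fill(values):
    """Replace None with the most recent known value (leading gaps stay None)."""
    filled, last = [], None
    for v in values:
        if v is not None:
            last = v
        filled.append(last)
    return filled


def min_max_normalize(values):
    """Rescale values to [0, 1]; a constant series maps to all zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def rolling_mean(values, window):
    """Trailing mean over up to `window` points, a simple trend feature."""
    out = []
    for i in range(len(values)):
        chunk = values[max(0, i - window + 1): i + 1]
        out.append(sum(chunk) / len(chunk))
    return out


raw_cpu = [20.0, None, 40.0, 60.0, None, 100.0]   # made-up samples with gaps
filled = forward_fill(raw_cpu)
normalized = min_max_normalize(filled)
trend = rolling_mean(filled, window=2)
print(filled, normalized, trend)
```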

Optimization Techniques Employed by AI Agents

AI agents use a variety of optimization techniques to improve cloud cost efficiency and resource utilization. These techniques leverage data insights and intelligent algorithms to make decisions that balance performance, cost, and reliability.

One common approach is predictive analytics, where AI agents use historical data and machine learning models to forecast future resource demands. By anticipating workload spikes or lulls, agents can proactively scale resources up or down, avoiding overprovisioning and reducing unnecessary expenses.

Resource rightsizing is another key technique. AI agents analyze usage patterns to identify underutilized or oversized resources and recommend or automatically implement adjustments, such as switching to smaller instance types or consolidating workloads. This helps eliminate waste and optimize capacity.
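
One simple way to frame a rightsizing recommendation is to size for a high percentile of observed demand plus some headroom. The catalog, prices, 95th-percentile choice, and 20% headroom below are hypothetical values picked for illustration.

```python
import math

# Hypothetical catalog: (name, vCPUs, hourly price in USD), sorted by size.
CATALOG = [
    ("small", 2, 0.05),
    ("medium", 4, 0.10),
    ("large", 8, 0.20),
    ("xlarge", 16, 0.40),
]


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def recommend_size(cpu_percent_samples, current_vcpus, headroom=1.2):
    """Pick the smallest catalog entry that covers p95 vCPU demand plus headroom."""
    p95 = percentile(cpu_percent_samples, 95)
    needed_vcpus = current_vcpus * (p95 / 100) * headroom
    for name, vcpus, price in CATALOG:
        if vcpus >= needed_vcpus:
            return name, vcpus, price
    return CATALOG[-1]   # nothing is big enough; fall back to the largest


# An 8-vCPU instance whose CPU utilization never exceeds 30%.
samples = [12, 15, 18, 20, 22, 25, 25, 28, 30, 30]
recommendation = recommend_size(samples, current_vcpus=8)
print(recommendation)
```

Sizing on a high percentile rather than the mean keeps short bursts from being starved, at the price of a slightly larger recommendation.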

Dynamic scaling and auto-scaling mechanisms enable AI agents to adjust resource allocation in real time based on current demand. Unlike static scaling policies, AI-driven scaling can respond more precisely and quickly to workload changes, improving both cost efficiency and application performance.

Workload scheduling and placement optimization involves intelligently distributing workloads across available resources, regions, or cloud providers to minimize costs while meeting performance and compliance requirements. AI agents can evaluate factors like latency, pricing differences, and resource availability to make optimal placement decisions.
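
A placement decision of this kind can be sketched as a constrained minimum: filter out regions that violate latency or compliance limits, then take the cheapest of what remains. The `Region` record, region names, prices, and latencies are invented for the example.

```python
from dataclasses import dataclass


@dataclass
class Region:
    name: str
    hourly_price: float      # hypothetical price for the required capacity
    latency_ms: float        # hypothetical latency to the main user base
    compliant: bool          # whether data-residency rules allow this region


def choose_region(regions, max_latency_ms):
    """Return the cheapest region that satisfies latency and compliance, or None."""
    eligible = [r for r in regions if r.compliant and r.latency_ms <= max_latency_ms]
    return min(eligible, key=lambda r: r.hourly_price, default=None)


candidates = [
    Region("region-a", hourly_price=0.12, latency_ms=40, compliant=True),
    Region("region-b", hourly_price=0.08, latency_ms=140, compliant=True),
    Region("region-c", hourly_price=0.07, latency_ms=35, compliant=False),
    Region("region-d", hourly_price=0.10, latency_ms=60, compliant=True),
]
best = choose_region(candidates, max_latency_ms=100)
print(best.name if best else "no eligible region")
```

Treating latency and compliance as hard constraints rather than weighted terms means a low price can never buy its way past a policy limit.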

Spot instance and reserved instance management is another area where AI agents can help. They can estimate which workloads tolerate the interruption risk that always comes with cheaper spot instances, and when steady usage justifies purchasing reserved instances for long-term savings, balancing cost and reliability.
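
The reserved-instance side of this decision reduces to a break-even calculation. A reservation is paid for every hour whether or not it is used, while on-demand capacity is paid only for hours used, so the reservation wins once expected utilization exceeds the ratio of the two hourly rates. The prices below are hypothetical, and the sketch ignores upfront-payment options and discount tiers.

```python
def break_even_utilization(on_demand_hourly, reserved_effective_hourly):
    """Fraction of hours a workload must run for a reservation to pay off."""
    return reserved_effective_hourly / on_demand_hourly


def cheaper_option(expected_utilization, on_demand_hourly, reserved_effective_hourly):
    """Compare paying on demand for used hours with paying reserved for all hours."""
    threshold = break_even_utilization(on_demand_hourly, reserved_effective_hourly)
    return "reserved" if expected_utilization > threshold else "on-demand"


ON_DEMAND = 0.10   # hypothetical USD per hour
RESERVED = 0.06    # hypothetical effective USD per hour over the commitment

threshold = break_even_utilization(ON_DEMAND, RESERVED)
always_on = cheaper_option(0.90, ON_DEMAND, RESERVED)
occasional = cheaper_option(0.30, ON_DEMAND, RESERVED)
print(f"break-even utilization: {threshold:.0%}", always_on, occasional)
```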

Some AI agents employ reinforcement learning to continuously improve optimization strategies by learning from the outcomes of their actions, adapting to changing cloud environments and business needs.

Finally, anomaly detection helps identify unusual usage patterns or cost spikes that may indicate misconfigurations, security issues, or inefficiencies, enabling timely corrective actions.
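
A basic form of cost anomaly detection compares each day's spend with the mean and standard deviation of the days just before it. The window length, the z-score threshold of 3, and the cost figures are assumptions chosen for the sketch; real systems usually also account for weekly seasonality.

```python
from statistics import mean, pstdev


def find_cost_anomalies(daily_costs, window=5, z_threshold=3.0):
    """Return indices whose cost deviates strongly from the preceding window."""
    anomalies = []
    for i in range(window, len(daily_costs)):
        history = daily_costs[i - window:i]
        mu, sigma = mean(history), pstdev(history)
        if sigma == 0:
            # Flat history: any change at all is treated as anomalous.
            if daily_costs[i] != mu:
                anomalies.append(i)
            continue
        if abs(daily_costs[i] - mu) / sigma > z_threshold:
            anomalies.append(i)
    return anomalies


costs = [100, 102, 98, 101, 99, 250, 100]   # made-up daily spend in USD
anomalous_days = find_cost_anomalies(costs)
print(anomalous_days)
```

Only the spike on day index 5 is flagged. The following day is not, because the spike itself inflates the baseline's standard deviation, which is a known weakness of this simple approach.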

Dynamic Resource Allocation and Scaling

Dynamic resource allocation and scaling are critical capabilities in cloud environments, enabling organizations to efficiently match resource supply with fluctuating demand. AI agents play a pivotal role in automating this process by continuously monitoring workloads and adjusting resources in real time to optimize performance and cost.

Traditional scaling methods often rely on static thresholds or simple rules, which can lead to delayed responses or inefficient resource usage. In contrast, AI-driven dynamic scaling uses predictive analytics and machine learning models to anticipate workload changes before they occur. This proactive approach allows cloud resources to be scaled up or down smoothly, minimizing latency and avoiding overprovisioning.

AI agents analyze multiple factors such as historical usage patterns, time of day, application behavior, and external events to forecast demand. Based on these predictions, they can trigger scaling actions like launching additional instances, resizing virtual machines, or reallocating storage and network bandwidth.

Moreover, AI agents can optimize scaling decisions by considering cost implications, service-level agreements (SLAs), and performance metrics simultaneously. This multi-objective optimization ensures that resources are allocated not only to meet demand but also to minimize expenses and maintain compliance.

The benefits of AI-driven dynamic resource allocation include improved application responsiveness, reduced operational costs, and enhanced agility in adapting to changing business needs.

Below is a simple Python example demonstrating how an AI agent might use a predictive model to decide on scaling actions based on forecasted CPU utilization:

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Sample historical CPU utilization data (percentage) over time
time_steps = np.array([[1], [2], [3], [4], [5], [6], [7], [8], [9], [10]])
cpu_usage = np.array([30, 35, 40, 45, 50, 55, 60, 65, 70, 75])

# Train a simple linear regression model to predict future CPU usage
model = LinearRegression()
model.fit(time_steps, cpu_usage)

# Predict CPU usage for the next time step
next_time_step = np.array([[11]])
predicted_cpu = model.predict(next_time_step)[0]

# Define scaling thresholds
scale_up_threshold = 70
scale_down_threshold = 40

# Decide scaling action based on predicted CPU usage
if predicted_cpu > scale_up_threshold:
    action = "Scale Up: Add more resources"
elif predicted_cpu < scale_down_threshold:
    action = "Scale Down: Reduce resources"
else:
    action = "Maintain current resource levels"

print(f"Predicted CPU Usage: {predicted_cpu:.2f}%")
print(f"Scaling Decision: {action}")
```

This example illustrates a basic predictive scaling decision. In real-world scenarios, AI agents use more sophisticated models and integrate with cloud APIs to automate scaling actions seamlessly.

Automated Cost Reduction Strategies

AI agents employ a variety of automated cost reduction strategies to help organizations optimize their cloud spending without compromising performance or reliability. These strategies leverage data-driven insights and intelligent automation to identify inefficiencies and implement cost-saving measures proactively.

One of the most effective strategies is rightsizing, where AI agents analyze resource utilization patterns to recommend or automatically adjust the size and type of cloud instances. By identifying underutilized or oversized resources, rightsizing helps eliminate waste and ensures that workloads run on appropriately sized infrastructure, reducing unnecessary expenses.

Another key approach is spot instance utilization. Spot instances are spare cloud compute capacity offered at significantly lower prices but with the risk of interruption. AI agents can predict workload tolerance for interruptions and dynamically schedule non-critical or flexible tasks on spot instances, maximizing cost savings while maintaining service continuity.

Workload scheduling is also crucial for cost reduction. AI agents optimize the timing and placement of workloads to take advantage of lower-cost regions, off-peak hours, or reserved instance availability. By intelligently shifting workloads, organizations can benefit from pricing variations and discounts.

Additionally, AI-driven automated shutdown of idle resources prevents costs from accumulating on unused or forgotten assets. Agents continuously monitor resource activity and can power down or decommission idle instances, storage volumes, or databases.

Some AI agents incorporate budget forecasting and anomaly detection to alert teams about unexpected cost spikes or trends, enabling timely interventions before costs escalate.
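
The simplest budget forecast is a linear run-rate projection: take the average daily spend so far this month and extend it to the end of the month. The function names, spend figure, and budget below are illustrative assumptions. The projection ignores weekly patterns and planned changes, so it should be read as a rough early warning.

```python
import calendar
from datetime import date


def project_month_end_spend(month_to_date_spend, today):
    """Linear run-rate projection: average daily spend so far times days in month."""
    days_in_month = calendar.monthrange(today.year, today.month)[1]
    daily_rate = month_to_date_spend / today.day
    return daily_rate * days_in_month


def budget_alert(month_to_date_spend, today, monthly_budget):
    """Return (is_over_budget, projected_spend) for the current month."""
    projected = project_month_end_spend(month_to_date_spend, today)
    return projected > monthly_budget, projected


# Hypothetical figures: 6,000 USD spent by April 10 against a 15,000 USD budget.
over_budget, projected = budget_alert(6000.0, date(2024, 4, 10), monthly_budget=15000.0)
print(over_budget, round(projected, 2))
```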

Together, these automated cost reduction strategies empower organizations to maintain efficient cloud operations, reduce waste, and achieve significant savings with minimal manual effort.

Integration with Cloud Platforms and Tools

AI agents designed for cloud cost and resource optimization must seamlessly integrate with major cloud platforms and management tools to access real-time data, execute optimization actions, and provide actionable insights. This integration enables AI agents to operate effectively within the cloud ecosystem, leveraging native APIs, monitoring services, and automation frameworks.

Most leading cloud providers—such as Amazon Web Services (AWS), Microsoft Azure, and Google Cloud Platform (GCP)—offer comprehensive APIs and SDKs that allow AI agents to collect metrics, manage resources, and trigger scaling or configuration changes programmatically. AI agents use these interfaces to gather data on resource utilization, billing, and performance, which forms the basis for optimization decisions.

Integration with cloud-native monitoring tools like AWS CloudWatch, Azure Monitor, and Google Cloud Operations Suite provides AI agents with continuous visibility into the health and usage of cloud assets. These tools supply metrics, logs, and alerts that AI agents analyze to detect inefficiencies or anomalies.

Furthermore, AI agents often connect with infrastructure-as-code (IaC) and automation tools such as Terraform, Ansible, or Kubernetes operators. This enables automated deployment, configuration, and scaling of resources based on AI-driven recommendations, ensuring that optimization actions are executed reliably and consistently.

Security and access management are critical in these integrations. AI agents typically operate with least-privilege roles and use secure authentication methods to interact with cloud services, maintaining compliance and protecting sensitive data.

Below is a simple Python example demonstrating how an AI agent might use the AWS SDK (boto3) to retrieve CPU utilization metrics from CloudWatch and make a basic scaling decision:

```python
from datetime import datetime, timedelta, timezone

import boto3

# Initialize CloudWatch client
cloudwatch = boto3.client('cloudwatch', region_name='us-east-1')

# Define parameters
instance_id = 'i-0123456789abcdef0'  # placeholder instance ID
metric_name = 'CPUUtilization'
namespace = 'AWS/EC2'
period = 300  # 5 minutes
statistics = ['Average']

# Get CPU utilization metrics for the last 15 minutes (timezone-aware UTC)
end_time = datetime.now(timezone.utc)
start_time = end_time - timedelta(minutes=15)

response = cloudwatch.get_metric_statistics(
    Namespace=namespace,
    MetricName=metric_name,
    Dimensions=[{'Name': 'InstanceId', 'Value': instance_id}],
    StartTime=start_time,
    EndTime=end_time,
    Period=period,
    Statistics=statistics
)

# Extract average CPU utilization (None when CloudWatch returned no datapoints)
datapoints = response['Datapoints']
if datapoints:
    avg_cpu = sum(dp['Average'] for dp in datapoints) / len(datapoints)
else:
    avg_cpu = None

# Define scaling thresholds
scale_up_threshold = 70
scale_down_threshold = 30

# Decide scaling action; with no data, hold capacity rather than scale down
if avg_cpu is None:
    action = "Maintain current capacity (no metric data)"
elif avg_cpu > scale_up_threshold:
    action = "Scale Up: Add more instances"
elif avg_cpu < scale_down_threshold:
    action = "Scale Down: Remove instances"
else:
    action = "Maintain current capacity"

if avg_cpu is not None:
    print(f"Average CPU Utilization: {avg_cpu:.2f}%")
print(f"Scaling Decision: {action}")
```

This example shows how AI agents can integrate with cloud platform APIs to monitor resource metrics and derive a scaling decision. It only prints the decision; acting on it would require a further call to a scaling API.

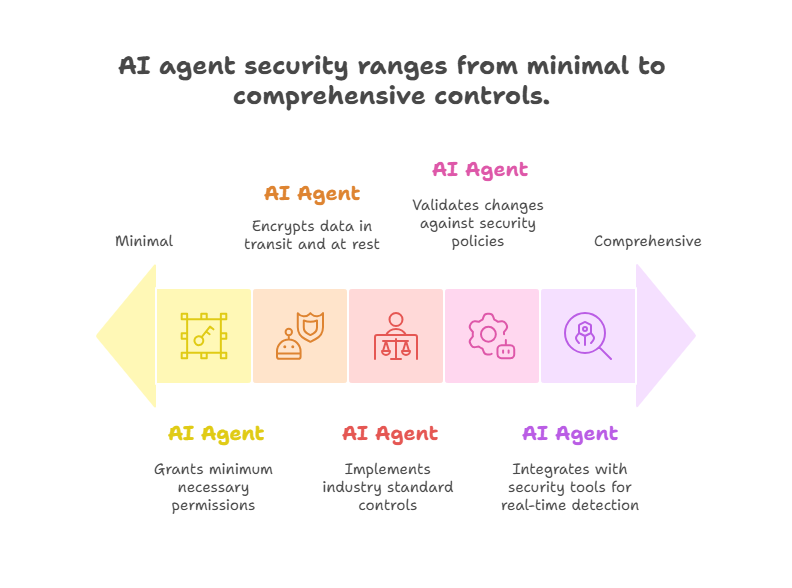

Security and Compliance Considerations

When deploying AI agents for cloud cost and resource optimization, ensuring security and compliance is paramount. These agents often require access to sensitive cloud infrastructure and data, so their design and operation must align with organizational security policies and regulatory requirements.

First, AI agents should operate under the principle of least privilege, meaning they are granted only the minimum permissions necessary to perform their tasks. This reduces the risk of accidental or malicious misuse of cloud resources. Role-based access control (RBAC) and fine-grained identity and access management (IAM) policies are essential to enforce this principle.
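
As an illustration of least privilege, the sketch below builds an AWS IAM policy document for a monitoring-and-scaling agent: read-only access to metrics and inventory, plus permission to change the desired capacity of a single Auto Scaling group. The account ID, region, and group name `web-asg` are placeholders, and the `has_wildcard_action` helper is a hypothetical lint check, not part of any AWS SDK.

```python
import json

policy = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "ReadMetricsAndInventory",
            "Effect": "Allow",
            "Action": [
                "cloudwatch:GetMetricStatistics",
                "ec2:DescribeInstances",
                "autoscaling:DescribeAutoScalingGroups",
            ],
            "Resource": "*",
        },
        {
            "Sid": "ScaleOneGroupOnly",
            "Effect": "Allow",
            "Action": ["autoscaling:SetDesiredCapacity"],
            # Placeholder account ID and group name.
            "Resource": (
                "arn:aws:autoscaling:us-east-1:123456789012:"
                "autoScalingGroup:*:autoScalingGroupName/web-asg"
            ),
        },
    ],
}


def has_wildcard_action(policy_doc):
    """Return True if any statement grants '*' or 'service:*' actions."""
    for statement in policy_doc["Statement"]:
        actions = statement["Action"]
        if isinstance(actions, str):
            actions = [actions]
        if any(a == "*" or a.endswith(":*") for a in actions):
            return True
    return False


policy_json = json.dumps(policy, indent=2)
print(policy_json)
print("wildcard actions present:", has_wildcard_action(policy))
```

The only write permission is scoped to one named resource, so a faulty or compromised agent cannot resize other groups.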

Data privacy is another critical concern. AI agents process usage metrics, billing information, and sometimes application data, which may contain sensitive or personally identifiable information (PII). Ensuring that data is encrypted both in transit and at rest, and that access is logged and monitored, helps maintain confidentiality and integrity.

Compliance with industry standards and regulations—such as GDPR, HIPAA, PCI DSS, or SOC 2—requires that AI agents and their supporting infrastructure implement appropriate controls. This includes audit trails, data retention policies, and secure handling of credentials and secrets.

AI-driven automation must also be carefully controlled to prevent unintended disruptions or security vulnerabilities. For example, automated scaling or configuration changes should be validated against security policies to avoid exposing resources to unauthorized access or creating compliance gaps.
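
One way to apply such validation is a guardrail function that every proposed change must pass before it is executed. The `ProposedChange` record, the forbidden-action list, and the minimum replica count below are hypothetical examples of what an organization might define.

```python
from dataclasses import dataclass


@dataclass
class ProposedChange:
    resource_id: str
    action: str          # e.g. "resize", "stop", "open_port"
    environment: str     # e.g. "prod" or "dev"
    new_replicas: int = 0


# Hypothetical guardrails an organization might define.
FORBIDDEN_ACTIONS = {"open_port", "disable_encryption"}
MIN_PROD_REPLICAS = 2


def validate(change):
    """Return a list of violations; an empty list means the change may proceed."""
    violations = []
    if change.action in FORBIDDEN_ACTIONS:
        violations.append(f"action '{change.action}' is not allowed for automation")
    if (change.action == "resize" and change.environment == "prod"
            and change.new_replicas < MIN_PROD_REPLICAS):
        violations.append(f"prod must keep at least {MIN_PROD_REPLICAS} replicas")
    return violations


ok_change = ProposedChange("svc-a", "resize", "prod", new_replicas=3)
bad_change = ProposedChange("svc-a", "resize", "prod", new_replicas=1)
print(validate(ok_change))
print(validate(bad_change))
```

Returning the list of violations, rather than a bare true or false, gives operators an audit trail explaining why an automated action was blocked.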

Regular security assessments, vulnerability scanning, and penetration testing of AI agent components help identify and mitigate risks. Additionally, integrating AI agents with cloud security tools and monitoring services enables real-time detection of suspicious activities or policy violations.

Case Studies and Industry Applications

AI agents for cloud cost and resource optimization have been successfully adopted across various industries, demonstrating significant improvements in efficiency, cost savings, and operational agility. These real-world examples highlight how organizations leverage AI-driven automation to address complex cloud management challenges.

In the e-commerce sector, a leading online retailer implemented AI agents to dynamically scale their cloud infrastructure during peak shopping seasons. By predicting traffic surges using machine learning models, the AI system automatically provisioned additional resources ahead of demand, ensuring smooth user experiences while avoiding overprovisioning. This approach reduced cloud costs by 25% compared to previous manual scaling methods.

A financial services company used AI agents to optimize their batch processing workloads by scheduling non-critical jobs during off-peak hours and utilizing spot instances where possible. The AI-driven workload scheduling and rightsizing led to a 30% reduction in compute expenses while maintaining compliance with strict security and regulatory requirements.

In the media and entertainment industry, a streaming service integrated AI agents with their Kubernetes clusters to monitor resource utilization and automatically adjust container sizes and replica counts. This continuous optimization improved application performance and reduced infrastructure costs by 20%, enabling the company to scale efficiently during new content releases.

A healthcare provider employed AI agents to enforce security and compliance policies while optimizing cloud resources. The agents monitored resource configurations and usage patterns, automatically remediating non-compliant settings and recommending cost-saving adjustments. This dual focus on security and cost optimization helped the provider maintain HIPAA compliance and reduce cloud spending by 15%.

These case studies illustrate the versatility and impact of AI agents in diverse environments. By combining predictive analytics, automation, and integration with cloud platforms, organizations can achieve smarter resource management, enhanced security, and substantial cost savings.

Future Trends and Emerging Technologies

The landscape of AI agents for cloud cost and resource optimization is rapidly evolving, driven by advances in artificial intelligence, cloud computing, and automation technologies. Looking ahead, several key trends and emerging innovations are poised to shape the future of this field.

One major trend is the increasing use of reinforcement learning and self-learning AI agents that continuously improve their optimization strategies based on real-time feedback. Unlike rule-based systems, these agents can adapt dynamically to changing workloads, pricing models, and infrastructure conditions, achieving more efficient and nuanced cost management.

Multi-cloud and hybrid cloud optimization is becoming more prominent as organizations distribute workloads across multiple cloud providers and on-premises environments. AI agents will evolve to provide unified visibility and optimization across heterogeneous infrastructures, enabling seamless workload migration, cost balancing, and compliance enforcement.

The integration of explainable AI (XAI) techniques will enhance transparency and trust in AI-driven decisions. Users will be able to understand why certain optimization actions are recommended or executed, facilitating better collaboration between human operators and AI agents.

Advances in edge computing and serverless architectures will introduce new optimization challenges and opportunities. AI agents will need to manage highly distributed, ephemeral resources with fine-grained control, optimizing costs while maintaining performance and reliability at the edge.

Finally, the growing emphasis on sustainability and green computing will drive AI agents to incorporate environmental impact metrics alongside cost and performance. Optimizing for energy efficiency and carbon footprint reduction will become integral to cloud resource management strategies.
