Introduction: The Role of AI Agents in Performance Monitoring

In today’s fast-paced digital landscape, ensuring optimal application performance is critical for delivering seamless user experiences and maintaining business continuity. Application Performance Monitoring (APM) traditionally involves tracking key metrics such as response times, error rates, and resource utilization to detect and resolve issues. However, as applications grow increasingly complex—often distributed across cloud environments, microservices architectures, and diverse platforms—traditional monitoring approaches face significant challenges in managing the volume, variety, and velocity of performance data.

This is where AI agents come into play. AI agents are autonomous software entities equipped with artificial intelligence capabilities that enable them to analyze vast amounts of performance data, detect anomalies, and diagnose problems with minimal human intervention. Unlike conventional rule-based monitoring systems, AI agents learn from historical data, adapt to changing conditions, and can uncover subtle patterns that might elude human operators.

Integrating AI agents into performance monitoring can shift the process from reactive troubleshooting to proactive management. These agents continuously observe application behavior, attempt to predict failures before they affect users, and provide actionable insights to development and operations teams. When it works well, this shift reduces downtime and operational costs and shortens the time needed to identify and resolve performance bottlenecks.

In this article series, we will explore how AI agents enhance application performance monitoring by improving detection accuracy, enabling root cause analysis, and supporting real-time alerting. We will also discuss practical considerations for deploying AI agents, their integration with existing tools, and future trends shaping this evolving field. Understanding the role of AI agents in performance monitoring is essential for organizations aiming to maintain high service quality in increasingly complex software environments.

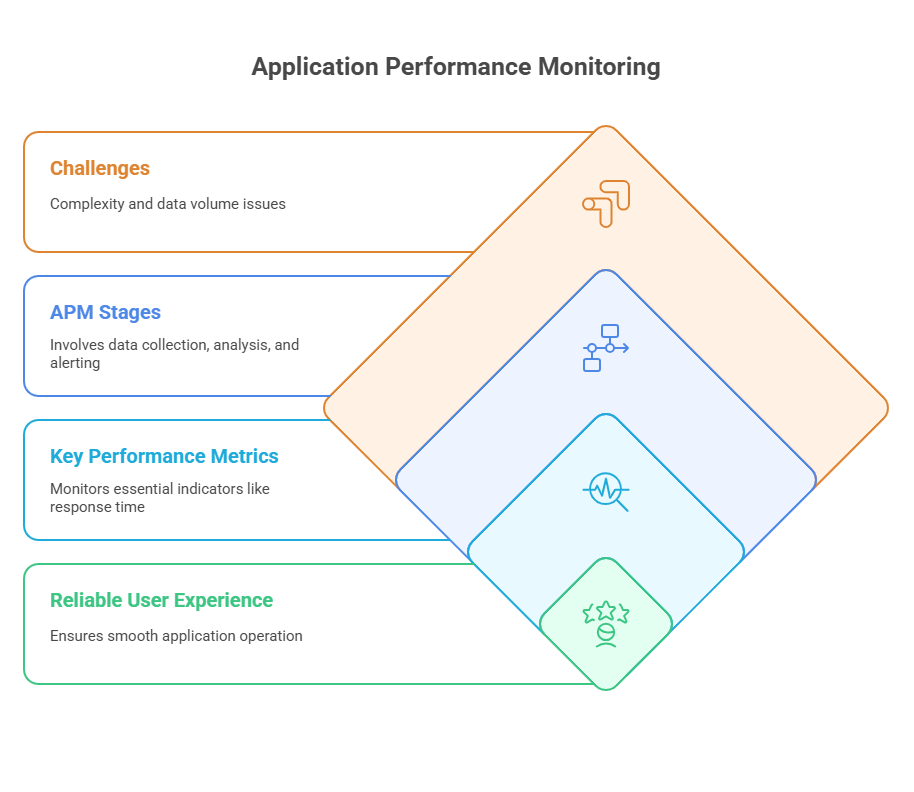

Fundamentals of Application Performance Monitoring (APM)

Application Performance Monitoring (APM) is a critical practice that involves tracking and managing the performance and availability of software applications. The primary goal of APM is to ensure that applications run smoothly, deliver fast response times, and provide a reliable user experience. To achieve this, APM focuses on collecting, analyzing, and visualizing key performance metrics.

At its core, APM monitors several essential indicators, including response time, throughput, error rates, and resource utilization (CPU, memory, and network usage). These metrics help identify performance bottlenecks, failures, or degradation in real time. Traditional APM tools gather this data from servers, databases, external services, and other application components using techniques such as code instrumentation, logging, distributed tracing, and synthetic transactions (scripted requests that simulate user activity).

APM typically involves three main stages: data collection, data analysis, and alerting. Data collection gathers raw performance data from multiple sources. Data analysis processes this information to detect anomalies or deviations from expected behavior. Alerting mechanisms notify operations teams when performance issues arise, enabling timely intervention.

Despite its effectiveness, traditional APM faces challenges in modern software environments. The rise of distributed systems, microservices, and cloud-native applications has increased the complexity and volume of performance data, making manual analysis difficult and error-prone. Moreover, static thresholds and rule-based alerts often generate false positives or miss subtle issues.
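To make the three stages and the static-threshold weakness concrete, here is a minimal Python sketch. The sample data, the 200 ms threshold, and the function names (`collect`, `analyze`, `alert`) are hypothetical. A real APM tool would collect from instrumented services rather than from a hard-coded dictionary.

```python
from statistics import mean

# Hypothetical latency samples (ms) for two services, one per minute.
SAMPLES = {
    # Heavy report endpoint: ~250 ms is normal for it, but above the global threshold.
    "reports": [248, 252, 250, 251, 249],
    # Normally ~60 ms, now drifting upward: degrading, yet still below the threshold.
    "checkout": [60, 75, 95, 120, 150],
}

STATIC_THRESHOLD_MS = 200  # One fixed rule applied to every service


def collect():
    """Stage 1: data collection (here, just return the hard-coded samples)."""
    return SAMPLES


def analyze(samples, threshold=STATIC_THRESHOLD_MS):
    """Stage 2: rule-based analysis; flag services whose mean latency exceeds the threshold."""
    return [name for name, values in samples.items() if mean(values) > threshold]


def alert(flagged):
    """Stage 3: alerting; build the messages that would be sent to the on-call team."""
    return [f"ALERT: {name} latency above {STATIC_THRESHOLD_MS} ms" for name in flagged]


messages = alert(analyze(collect()))
print(messages)
```

The rule fires on `reports`, which is behaving normally for that endpoint (a false positive). It stays silent on `checkout`, whose latency has risen from 60 ms to 150 ms (a missed issue).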

Understanding these fundamentals sets the stage for appreciating how AI agents can augment APM by automating data analysis, improving anomaly detection, and providing deeper insights into application behavior. In the following sections, we will delve into how AI agents leverage advanced techniques to overcome the limitations of traditional monitoring approaches.

AI Agents: Definition and Capabilities

AI agents are autonomous software entities designed to perceive their environment, process information, and take actions to achieve specific goals. In the context of application performance monitoring (APM), AI agents act as intelligent assistants that continuously analyze performance data, detect anomalies, and support decision-making processes without requiring constant human oversight.

Unlike traditional monitoring tools that rely on predefined rules and static thresholds, AI agents leverage machine learning, pattern recognition, and statistical analysis to understand normal application behavior and identify deviations that may indicate performance issues. Their ability to learn from historical data enables them to adapt to evolving system dynamics, reducing false alarms and improving detection accuracy.

Key capabilities of AI agents in APM include:

Anomaly Detection: AI agents can identify unusual patterns in metrics such as response times, error rates, or resource usage, even when these anomalies are subtle or previously unseen.

Root Cause Analysis: By correlating data from multiple sources and analyzing dependencies, AI agents help pinpoint the underlying causes of performance problems, accelerating troubleshooting.

Predictive Analytics: Some AI agents can forecast potential performance degradations or failures before they occur, enabling proactive maintenance.

Automation: AI agents can automate routine monitoring tasks, such as data collection, alert generation, and report creation, freeing up human resources for more complex activities.

Continuous Learning: Through ongoing data ingestion and feedback, AI agents refine their models to maintain effectiveness in dynamic environments.
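The sketch below illustrates "learning normal behavior" and "continuous learning" in the simplest possible terms. It maintains an exponentially weighted moving average (EWMA) baseline that is updated with every new sample. This is a statistical stand-in, not a machine-learning model. The `EwmaBaseline` class and its parameter values are illustrative assumptions.

```python
import math


class EwmaBaseline:
    """Adaptive baseline using an exponentially weighted mean and variance.

    alpha controls how quickly old data is forgotten (higher = adapts faster).
    """

    def __init__(self, alpha=0.1, threshold=3.0, warmup=5):
        self.alpha = alpha
        self.threshold = threshold  # deviation, in standard deviations, that counts as anomalous
        self.warmup = warmup        # samples to observe before flagging anything
        self.mean = None
        self.var = 0.0
        self.count = 0

    def update(self, x):
        """Score x against the current baseline, then fold x into the baseline.

        Returns True if x is anomalous relative to what has been learned so far.
        """
        self.count += 1
        if self.mean is None:
            self.mean = x
            return False
        std = math.sqrt(self.var)
        is_anomaly = (
            self.count > self.warmup and std > 0 and abs(x - self.mean) / std > self.threshold
        )
        # Incremental EWMA updates for mean and variance (every sample is learned from).
        diff = x - self.mean
        incr = self.alpha * diff
        self.mean += incr
        self.var = (1 - self.alpha) * (self.var + diff * incr)
        return is_anomaly


baseline = EwmaBaseline(alpha=0.1, threshold=3.0)
series = [100, 102, 98, 101, 99, 100, 101, 99] + [130] * 30  # level shift at index 8
flags = [baseline.update(x) for x in series]
print(flags.index(True), flags[-1])
```

Because every sample updates the baseline, the first shifted sample is flagged. The baseline then absorbs the new level of about 130 as the new normal. A production agent might instead exclude flagged points from the update so that a long-running incident does not become the baseline. Which behavior is preferable depends on whether level shifts are expected.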

Data Collection and Preprocessing for AI Agents

Effective application performance monitoring with AI agents begins with robust data collection and preprocessing. The quality and relevance of the data directly impact the accuracy of anomaly detection, diagnostics, and predictive analytics performed by AI agents.

Data Collection:

Modern applications generate vast amounts of performance data from various sources, including application logs, server metrics, network traffic, user interactions, and third-party services. AI agents rely on continuous streams of this data to build a comprehensive view of system health. Data can be collected through instrumentation (embedding monitoring code within the application), agent-based monitoring (deploying lightweight software agents on servers), or agentless methods (using APIs and external probes).
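Instrumentation can be as simple as wrapping functions so that every call records its latency and any errors. This is a minimal sketch that assumes an in-process store. The `instrumented` decorator, the metric names, and the `checkout` function are hypothetical. Production systems would typically use an instrumentation library, such as an OpenTelemetry SDK, and export the data instead of keeping it in memory.

```python
import functools
import time
from collections import defaultdict

# In-process stores of latency samples and error counts per operation
# (a real agent would export these to a monitoring backend).
LATENCIES_MS = defaultdict(list)
ERRORS = defaultdict(int)


def instrumented(name):
    """Decorator that records wall-clock latency and error counts for a function."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return func(*args, **kwargs)
            except Exception:
                ERRORS[name] += 1
                raise
            finally:
                # Runs on success and on failure, so every call is timed.
                LATENCIES_MS[name].append((time.perf_counter() - start) * 1000)
        return wrapper
    return decorator


@instrumented("checkout")
def checkout(cart_total):
    # Hypothetical business logic being monitored.
    if cart_total < 0:
        raise ValueError("negative total")
    time.sleep(0.01)  # simulate ~10 ms of work
    return round(cart_total * 1.2, 2)


checkout(50)
try:
    checkout(-1)
except ValueError:
    pass
print(len(LATENCIES_MS["checkout"]), ERRORS["checkout"])  # 2 1
```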

Preprocessing:

Raw performance data is often noisy, incomplete, or inconsistent. Preprocessing is essential to transform this data into a usable format for AI analysis. Key preprocessing steps include:

Data Cleaning: Removing duplicates, handling missing values, and filtering out irrelevant information to ensure data integrity.

Normalization: Scaling metrics to a common range, which helps AI models compare and analyze different types of data effectively.

Aggregation: Summarizing data over specific time intervals (e.g., averages, percentiles) to reduce volume and highlight trends.

Feature Engineering: Creating new features or metrics that capture important aspects of application behavior, such as error rates per user session or resource usage per transaction.
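The sketch below applies the four preprocessing steps to a handful of hypothetical raw samples. The record layout, the 60-second window, and the choice of min-max scaling are assumptions for illustration. Real pipelines usually run these steps in a stream processor or time-series database.

```python
import numpy as np

# Hypothetical raw samples: (timestamp_s, latency_ms or None, is_error)
raw = [
    (0, 100.0, False), (10, 120.0, False), (10, 120.0, False),  # duplicate row
    (20, None, False),                                          # missing value
    (30, 110.0, True), (65, 300.0, True), (70, 280.0, False),
    (90, 310.0, True), (100, 290.0, False), (125, 150.0, False), (130, 160.0, False),
]

# 1. Cleaning: drop exact duplicates (keep the first) and rows with a missing latency.
seen, clean = set(), []
for row in raw:
    if row in seen or row[1] is None:
        continue
    seen.add(row)
    clean.append(row)

# 2. Aggregation: group samples into 60-second windows.
windows = {}
for ts, latency, is_error in clean:
    windows.setdefault(ts // 60, []).append((latency, is_error))

# 3. Feature engineering: mean latency, p95 latency, and error rate per window.
features = []
for window in sorted(windows):
    lats = np.array([lat for lat, _ in windows[window]])
    errs = [err for _, err in windows[window]]
    features.append([lats.mean(), np.percentile(lats, 95), sum(errs) / len(errs)])
features = np.array(features)

# 4. Normalization: min-max scale each column to [0, 1] so metrics share a common range.
col_min, col_max = features.min(axis=0), features.max(axis=0)
span = np.where(col_max > col_min, col_max - col_min, 1.0)  # avoid division by zero
normalized = (features - col_min) / span
print(normalized.round(3))
```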

Challenges:

Data collection and preprocessing in distributed, cloud-native environments can be complex. Ensuring data consistency across multiple sources, dealing with high data velocity, and maintaining privacy and security are ongoing challenges. Additionally, the preprocessing pipeline must be efficient to support real-time analysis and alerting.

Anomaly Detection and Diagnosis with AI Agents

Anomaly detection is a core function of AI agents in application performance monitoring (APM). It involves identifying deviations from normal behavior that may indicate performance issues, failures, or security threats. Traditional monitoring systems often rely on static thresholds, which can produce false positives or miss anomalies in dynamic environments. AI agents instead use machine learning techniques to learn what normal behavior looks like. This can let them detect subtle and complex anomalies more accurately.

AI agents analyze historical and real-time data to learn patterns of normal application behavior. When incoming data deviates significantly from these learned patterns, the agents flag it as an anomaly. This process can involve statistical methods, clustering, classification, or deep learning models, depending on the complexity of the system and the nature of the data.

Once an anomaly is detected, diagnosis is the next step. AI agents correlate anomalies across multiple metrics and components to identify the root cause. For example, a spike in response time might be linked to increased CPU usage on a specific server or a database query slowdown. By automating this correlation, AI agents reduce the time and effort required for troubleshooting.
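A very simple form of this correlation step ranks candidate metrics by how closely they track the symptom. The sketch below uses Pearson correlation on hypothetical, time-aligned series; the metric names are made up. Correlation only suggests where to look and does not prove causation. Real root cause analysis typically also draws on service dependency graphs and distributed traces.

```python
import numpy as np

# Hypothetical per-minute metrics around an incident (all on the same time axis).
response_time_ms = np.array([100, 102, 101, 140, 210, 260, 250, 120, 104, 101])
candidates = {
    "db_query_ms": np.array([20, 21, 20, 45, 90, 120, 115, 30, 22, 21]),
    "server_a_cpu_pct": np.array([40, 42, 41, 43, 40, 42, 41, 40, 43, 42]),
    "cache_miss_pct": np.array([5, 5, 6, 5, 6, 5, 6, 5, 5, 6]),
}


def rank_suspects(target, metrics):
    """Rank candidate metrics by the absolute Pearson correlation with the target."""
    scores = {
        name: float(np.corrcoef(target, series)[0, 1]) for name, series in metrics.items()
    }
    return sorted(scores.items(), key=lambda item: abs(item[1]), reverse=True)


for name, r in rank_suspects(response_time_ms, candidates):
    print(f"{name}: r = {r:+.2f}")
```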

Below is a simple Python example demonstrating anomaly detection using the Isolation Forest algorithm, a popular method for identifying outliers in performance metrics data:

```python
import numpy as np
from sklearn.ensemble import IsolationForest

# Sample performance data: response times in milliseconds
response_times = np.array([100, 102, 98, 105, 110, 500, 108, 99, 101, 103]).reshape(-1, 1)

# Initialize the Isolation Forest model.
# contamination=0.1 tells the model to expect roughly 10% outliers (one of these ten points).
model = IsolationForest(contamination=0.1, random_state=42)

# Fit the model on the data
model.fit(response_times)

# Predict anomalies: -1 for anomaly, 1 for normal
predictions = model.predict(response_times)

for i, (rt, pred) in enumerate(zip(response_times.flatten(), predictions)):
    status = "Anomaly" if pred == -1 else "Normal"
    print(f"Data point {i}: Response time = {rt} ms - {status}")
```

This code trains an Isolation Forest model on a small dataset of response times and flags the outlier (the unusually high 500 ms value) as an anomaly. For simplicity, it fits and predicts on the same data. In real-world scenarios, AI agents would train on much larger historical datasets, score new data as it arrives, and update their models continuously to adapt to changing application behavior.

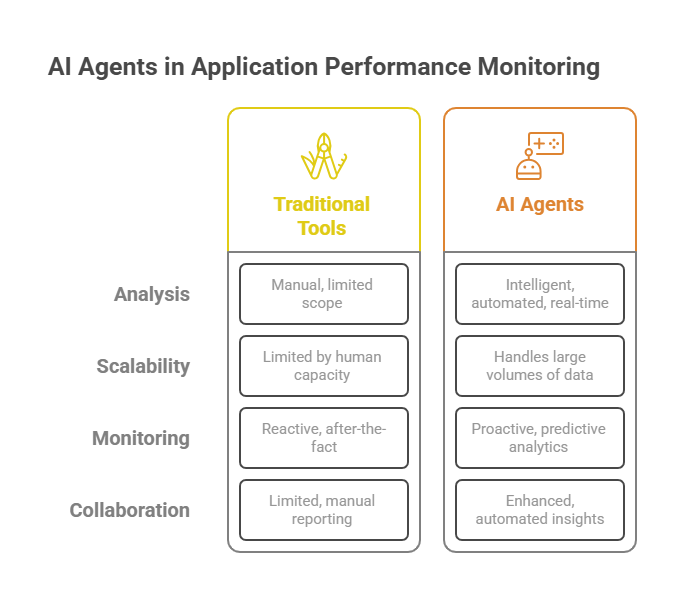

Integration of AI Agents with Modern Monitoring Architectures

The integration of AI agents into modern application performance monitoring (APM) architectures marks a significant advancement in how organizations manage and optimize their software systems. Contemporary monitoring environments are increasingly complex, often involving distributed microservices, cloud-native infrastructures, and hybrid deployments. AI agents enhance these architectures by adding intelligent, automated analysis and decision-making capabilities that static, rule-based tools lack.

Modern monitoring architectures typically consist of multiple layers, including data collection, storage, processing, visualization, and alerting. AI agents can be embedded at various points within this stack to augment functionality. For example, they can be deployed alongside data collectors to preprocess and filter data, reducing noise and focusing on relevant signals. Within the processing layer, AI agents apply machine learning models to detect anomalies, predict failures, and perform root cause analysis in real time.
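The sketch below shows a minimal example of filtering at the collector. It implements a deadband (report-by-exception) filter: a sample is forwarded only when it changes meaningfully, plus a periodic heartbeat so downstream consumers know the source is alive. This is a simple rule rather than a learned model; an AI agent might instead learn which signals matter. The function names, the 5% deadband, and the heartbeat interval are assumptions.

```python
def significant_change(previous, current, min_change_pct):
    """True if current differs from previous by more than min_change_pct percent."""
    if previous == 0:
        return current != 0
    return abs(current - previous) / abs(previous) * 100 > min_change_pct


def deadband_filter(samples, min_change_pct=5.0, max_silence=10):
    """Return the (index, value) pairs that would be forwarded downstream.

    A sample is forwarded if it is the first one, if it changed significantly
    since the last forwarded value, or if max_silence samples were suppressed
    in a row (a heartbeat).
    """
    forwarded = []
    last_sent = None
    suppressed = 0
    for index, value in enumerate(samples):
        if (
            last_sent is None
            or significant_change(last_sent, value, min_change_pct)
            or suppressed >= max_silence
        ):
            forwarded.append((index, value))
            last_sent = value
            suppressed = 0
        else:
            suppressed += 1
    return forwarded


cpu = [50, 51, 50, 52, 49, 50, 51, 50, 75, 76, 74, 50, 50, 51]
kept = deadband_filter(cpu)
print(f"forwarded {len(kept)} of {len(cpu)} samples: {kept}")
```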

One key advantage of integrating AI agents is scalability. As systems grow in size and complexity, manual monitoring becomes impractical. AI agents can handle large volumes of data continuously, adapting to changes in system behavior without requiring constant human intervention. This capability is especially valuable in cloud environments where resources and workloads fluctuate dynamically.

Furthermore, AI agents facilitate proactive monitoring by enabling predictive analytics. By analyzing historical trends and current metrics, they can forecast potential issues before they impact users, allowing teams to take preventive actions. This shift from reactive to proactive monitoring improves system reliability and reduces downtime.
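One of the simplest forms of forecasting fits a straight line to recent samples and estimates when it will cross a limit. The disk-usage data and the 90% limit below are hypothetical. Linear extrapolation is an assumption chosen for clarity; workloads with daily or weekly cycles usually need seasonal models.

```python
import numpy as np

# Hypothetical disk usage samples (percent), one per hour.
hours = np.arange(12)
disk_pct = np.array([61.0, 62.1, 62.9, 64.2, 65.0, 66.1, 66.8, 68.0, 69.1, 69.9, 71.0, 72.1])


def hours_until(threshold, t, y):
    """Fit y = slope * t + intercept and return the hours from the last sample
    until the fitted line reaches `threshold` (None if the trend is flat or falling)."""
    slope, intercept = np.polyfit(t, y, 1)
    if slope <= 0:
        return None
    t_cross = (threshold - intercept) / slope
    return max(0.0, float(t_cross - t[-1]))


eta = hours_until(90.0, hours, disk_pct)
print(f"Projected to reach 90% in about {eta:.1f} hours")
```

An agent could raise a low-priority warning when the projected time drops below a lead time the team needs to act, for example a day.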

Integration also supports enhanced collaboration between AI agents and human operators. AI-generated insights and recommendations can be presented through dashboards and alerting systems, empowering teams to make informed decisions quickly. Additionally, AI agents can automate routine tasks such as report generation and incident ticketing, freeing up human resources for strategic activities.

Despite these benefits, successful integration requires careful consideration of system architecture, data privacy, and security. AI agents must be designed to work seamlessly with existing tools and workflows, ensuring interoperability and minimal disruption.

Real-Time Monitoring and Alerting Systems

Real-time monitoring and alerting are critical components of effective application performance monitoring (APM). AI-driven detection and notification mechanisms help organizations identify and respond to performance issues as they occur, minimizing downtime and improving user experience.

Traditional monitoring systems often rely on static thresholds and periodic checks, which can delay the detection of anomalies or generate excessive false alarms. In contrast, AI agents continuously analyze streaming data from applications and infrastructure. They use machine learning models to detect subtle deviations from normal behavior as the data arrives, which allows for more accurate and timely identification of potential problems.

AI-driven real-time monitoring involves several key elements. First, data ingestion pipelines must support high-throughput, low-latency processing to handle continuous streams of metrics, logs, and traces. AI agents preprocess this data to filter noise and extract meaningful features. Next, anomaly detection algorithms evaluate incoming data against learned patterns, flagging unusual events or trends.
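The score-then-learn loop for streaming data can be sketched with a bounded sliding window. Here a rolling z-score stands in for a learned model. The generator `stream()` is a hypothetical stand-in for a real metrics feed such as a message-queue consumer. The window size and threshold are illustrative.

```python
from collections import deque
from statistics import mean, pstdev


class SlidingWindowDetector:
    """Flags a sample that deviates from the recent window by more than `threshold`
    standard deviations. Only the last `window` samples are kept, so memory is bounded."""

    def __init__(self, window=30, threshold=3.0, min_samples=10):
        self.values = deque(maxlen=window)
        self.threshold = threshold
        self.min_samples = min_samples

    def observe(self, value):
        anomalous = False
        if len(self.values) >= self.min_samples:
            mu, sigma = mean(self.values), pstdev(self.values)
            anomalous = sigma > 0 and abs(value - mu) / sigma > self.threshold
        self.values.append(value)  # learn from the sample after scoring it
        return anomalous


def stream():
    # Hypothetical metrics feed: normal oscillation between 100 and 104, then a spike.
    for i in range(40):
        yield 100 + (i % 5)
    yield 400


detector = SlidingWindowDetector()
alerts = [i for i, v in enumerate(stream()) if detector.observe(v)]
print(alerts)
```

Each sample is scored against the window before being added to it, so memory stays bounded however long the stream runs.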

Once an anomaly is detected, alerting systems notify relevant stakeholders through various channels such as email, SMS, chat platforms, or integrated incident management tools. AI agents can also prioritize alerts based on severity and potential impact, reducing alert fatigue and helping teams focus on critical issues.
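Prioritization and deduplication can be sketched as follows. The `Alert` fields, the ranking key (anomaly score multiplied by affected users), and the paging cutoff are assumptions for illustration, not a standard formula.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    service: str
    signal: str
    anomaly_score: float  # e.g. |z-score| reported by the detector
    users_affected: int   # hypothetical impact estimate


def prioritize(alerts, max_pages=2):
    """Deduplicate alerts per (service, signal), keeping the strongest, then rank by
    severity x impact. The top `max_pages` are paged; the rest go to a ticket queue."""
    strongest = {}
    for alert in alerts:
        key = (alert.service, alert.signal)
        if key not in strongest or alert.anomaly_score > strongest[key].anomaly_score:
            strongest[key] = alert
    ranked = sorted(
        strongest.values(),
        key=lambda a: a.anomaly_score * a.users_affected,
        reverse=True,
    )
    return ranked[:max_pages], ranked[max_pages:]


incoming = [
    Alert("checkout", "latency", 6.0, 5000),
    Alert("checkout", "latency", 4.5, 5000),  # duplicate of the same condition
    Alert("reports", "latency", 8.0, 20),
    Alert("search", "error_rate", 3.5, 3000),
]
page, ticket = prioritize(incoming)
print([a.service for a in page], [a.service for a in ticket])
```

The duplicate `checkout` alert collapses into one. The `reports` anomaly has the highest score but drops to the ticket queue because few users are affected.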

Moreover, some AI agents incorporate automated remediation capabilities, triggering predefined actions like scaling resources, restarting services, or rolling back deployments to mitigate problems without human intervention. This level of automation accelerates response times and improves system resilience.
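Automated remediation is usually built around a fixed playbook plus safety guards. The sketch below maps hypothetical condition names to hypothetical actions. It adds a cooldown so that a flapping signal cannot trigger repeated restarts. The actions are only logged, not executed; a real system would call an orchestrator or deployment tool at that point.

```python
import time

# Hypothetical mapping from a detected condition to a predefined remediation.
PLAYBOOK = {
    "high_cpu": "scale_out",
    "service_unresponsive": "restart_service",
    "error_spike_after_deploy": "rollback_deployment",
}


class Remediator:
    """Runs the playbook action for a condition at most once per `cooldown_s`
    for each (service, action) pair."""

    def __init__(self, cooldown_s=600, clock=time.monotonic):
        self.cooldown_s = cooldown_s
        self.clock = clock  # injectable clock, so the behavior can be tested
        self.last_run = {}
        self.log = []

    def handle(self, service, condition):
        action = PLAYBOOK.get(condition)
        if action is None:
            return "escalate_to_human"  # unknown condition: no automatic action
        key = (service, action)
        now = self.clock()
        if key in self.last_run and now - self.last_run[key] < self.cooldown_s:
            return "suppressed_by_cooldown"
        self.last_run[key] = now
        self.log.append((service, action))  # placeholder for the real remediation call
        return action


fake_now = [0.0]
r = Remediator(cooldown_s=600, clock=lambda: fake_now[0])
print(r.handle("api", "service_unresponsive"))  # restart_service
fake_now[0] = 60
print(r.handle("api", "service_unresponsive"))  # suppressed_by_cooldown
print(r.handle("api", "disk_full"))             # escalate_to_human
```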

Implementing AI-driven real-time monitoring requires careful design to ensure scalability, reliability, and security. It is essential to balance sensitivity and specificity in detection algorithms to minimize false positives and negatives. Additionally, integrating alerting mechanisms with existing workflows and communication tools enhances operational efficiency.
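One way to check this balance is to measure sensitivity and specificity at several candidate thresholds. Sensitivity is the share of real incidents that are caught; specificity is the share of normal periods that are left alone. The scores and incident labels below are made up for illustration.

```python
import numpy as np

# Hypothetical detector scores per time window, with ground truth from past incidents.
scores = np.array([0.2, 0.4, 0.9, 0.35, 0.8, 0.1, 0.65, 0.95, 0.3, 0.55])
is_incident = np.array([0, 0, 1, 0, 1, 0, 0, 1, 0, 1], dtype=bool)


def sensitivity_specificity(threshold):
    flagged = scores >= threshold
    tp = np.sum(flagged & is_incident)    # real incidents caught
    fn = np.sum(~flagged & is_incident)   # real incidents missed (false negatives)
    tn = np.sum(~flagged & ~is_incident)  # normal windows left alone
    fp = np.sum(flagged & ~is_incident)   # false alarms (false positives)
    return float(tp / (tp + fn)), float(tn / (tn + fp))


for t in (0.3, 0.5, 0.7, 0.9):
    sens, spec = sensitivity_specificity(t)
    print(f"threshold={t:.1f}  sensitivity={sens:.2f}  specificity={spec:.2f}")
```

A low threshold catches every incident but raises more false alarms. A high threshold removes false alarms at the cost of missed incidents. The right operating point depends on how costly each kind of error is for the team.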

Integration of AI Agents with Existing Monitoring Tools

Integrating AI agents with traditional application performance monitoring (APM) tools allows organizations to leverage the strengths of both approaches. While conventional monitoring solutions provide comprehensive data collection, visualization, and alerting capabilities, AI agents add intelligent analysis, anomaly detection, and predictive insights. Combining these technologies creates a more powerful and adaptive monitoring ecosystem.

Strategies for Integration

One common strategy is to deploy AI agents as an additional analytics layer on top of existing monitoring platforms. AI agents can consume data exported from tools like Prometheus, Nagios, or Datadog via APIs, log files, or message queues. This approach avoids disrupting established workflows while enhancing detection accuracy and reducing noise.

Another approach involves embedding AI capabilities directly into monitoring pipelines. For example, AI agents can be integrated with log collectors or metric exporters to preprocess data in real time, enabling faster anomaly detection and alerting. This tight coupling improves responsiveness and scalability.

Interoperability is key. AI agents should support standard data formats and protocols (e.g., JSON, the OpenTelemetry Protocol (OTLP), HTTP, gRPC) to communicate seamlessly with existing tools. Additionally, integration with incident management systems (e.g., PagerDuty, Opsgenie) ensures that AI-generated alerts fit naturally into operational processes.
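The sketch below shows one way to fit AI-generated alerts into an incident management system. It builds a "trigger" event in the shape used by the PagerDuty Events API v2: `routing_key`, `event_action`, `dedup_key`, and a `payload` with `summary`, `source`, and `severity`. The routing key is a placeholder. The dedup-key scheme and the score-to-severity mapping are assumptions. Check PagerDuty's documentation before relying on the exact fields.

```python
import json
import urllib.request

PAGERDUTY_EVENTS_URL = "https://events.pagerduty.com/v2/enqueue"


def build_trigger_event(routing_key, service, summary, anomaly_score):
    """Build an Events API v2 'trigger' event for an AI-detected anomaly."""
    # Hypothetical mapping from anomaly score to PagerDuty severity.
    if anomaly_score >= 6:
        severity = "critical"
    elif anomaly_score >= 4:
        severity = "error"
    else:
        severity = "warning"
    return {
        "routing_key": routing_key,
        "event_action": "trigger",
        # Events sharing a dedup_key are deduplicated into the same alert.
        "dedup_key": f"ai-agent/{service}/latency",
        "payload": {
            "summary": summary,
            "source": service,
            "severity": severity,
            "custom_details": {"anomaly_score": anomaly_score},
        },
    }


def send_event(event):
    """POST the event to PagerDuty (a network call; not executed below)."""
    request = urllib.request.Request(
        PAGERDUTY_EVENTS_URL,
        data=json.dumps(event).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


event = build_trigger_event("YOUR_ROUTING_KEY", "checkout", "p95 latency 3x above baseline", 6.2)
print(json.dumps(event, indent=2))
```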

Example: Integrating an AI Anomaly Detector with Prometheus Metrics

Below is a simplified Python example demonstrating how an AI agent might fetch metrics from a Prometheus server, perform anomaly detection using a simple statistical method, and print alerts for detected anomalies. This illustrates the concept of augmenting existing monitoring data with AI-driven analysis.

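```python
import time

import numpy as np
import requests

# Range-query endpoint: returns a series of samples over a time window.
# (The instant-query endpoint /api/v1/query returns only one sample per series.)
PROMETHEUS_URL = "http://localhost:9090/api/v1/query_range"

# Example metric: per-second HTTP request rate averaged over 5-minute windows,
# summed across all series so the result is a single time series.
QUERY = 'sum(rate(http_requests_total[5m]))'


def fetch_prometheus_metric(query, lookback_seconds=3600, step="60s"):
    """Return the metric's sample values over the last `lookback_seconds`."""
    end = time.time()
    params = {"query": query, "start": end - lookback_seconds, "end": end, "step": step}
    response = requests.get(PROMETHEUS_URL, params=params, timeout=10)
    response.raise_for_status()
    result = response.json()

    values = []
    if result.get("status") == "success":
        for series in result["data"]["result"]:
            # Each sample has the format [timestamp, "value-as-string"]
            for _timestamp, metric_value in series["values"]:
                values.append(float(metric_value))
    return values


def detect_anomalies(data, threshold=3.0):
    """Flag points whose z-score magnitude exceeds `threshold`."""
    data = np.asarray(data, dtype=float)
    mean, std = data.mean(), data.std()
    if std == 0:
        return []  # A constant series has no outliers by this measure.
    anomalies = []
    for i, val in enumerate(data):
        z_score = (val - mean) / std
        if abs(z_score) > threshold:
            anomalies.append((i, val, z_score))
    return anomalies


def main():
    data = fetch_prometheus_metric(QUERY)
    if not data:
        print("No data retrieved from Prometheus.")
        return

    anomalies = detect_anomalies(data)
    if anomalies:
        print("Anomalies detected:")
        for idx, val, z in anomalies:
            print(f"  Index {idx}: Value={val:.2f}, Z-score={z:.2f}")
    else:
        print("No anomalies detected.")


if __name__ == "__main__":
    main()
```

How This Works:

The script calls the Prometheus range-query API for the per-second HTTP request rate. Each point is averaged over a 5-minute window and summed across all series. The query covers the past hour at one-minute resolution.

It collects the returned sample values into a single list.

Using a simple z-score method, it flags data points more than three standard deviations from the mean.

Detected anomalies are printed as alerts.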

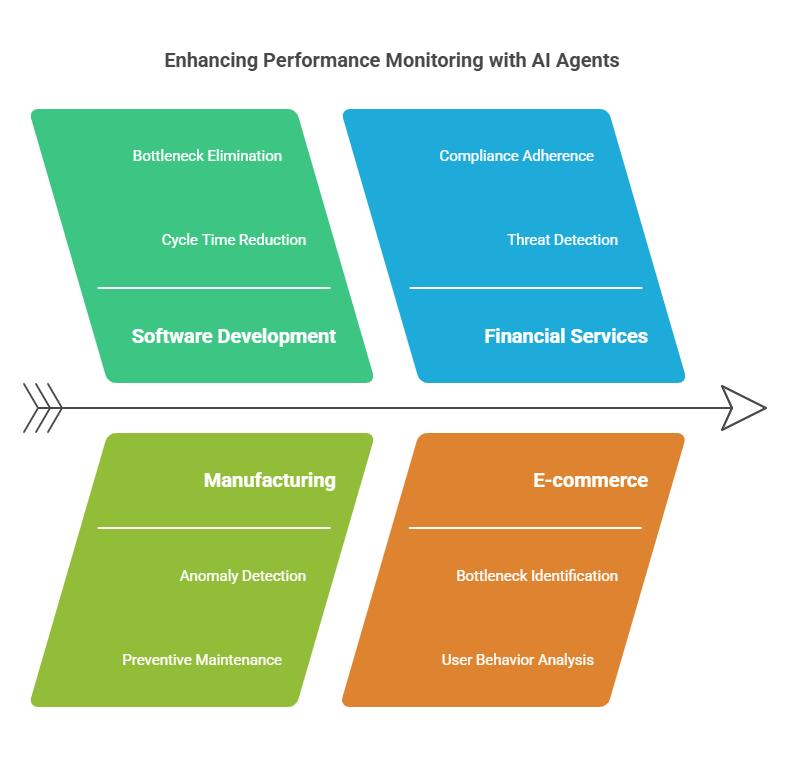

Case Studies: AI Agents in Action

AI agents have been successfully deployed in performance monitoring across various industries, demonstrating their ability to enhance system reliability, reduce downtime, and improve operational efficiency.

Case Study 1: Global Tech Company

A leading technology firm implemented AI agents to monitor software development team performance. By automating the measurement of cycle times and review processes, the agents provided real-time insights that helped streamline workflows and reduce bottlenecks. This resulted in a significant increase in development velocity and product quality.

Case Study 2: Manufacturing Industry

In a manufacturing setting, AI agents were deployed to monitor equipment performance and predict failures. Using sensor data and machine learning models, the agents detected anomalies early, enabling preventive maintenance that reduced unplanned downtime by 30%.

Case Study 3: Financial Services

A financial institution integrated AI agents into its application monitoring systems to detect performance degradations and security threats. The agents analyzed transaction patterns and system logs, providing alerts and diagnostics that improved incident response times and compliance adherence.

Case Study 4: E-commerce Platform

An e-commerce company used AI agents to analyze user behavior and system metrics in real time. The agents identified performance bottlenecks during peak traffic and suggested optimizations that enhanced user experience and increased conversion rates.

These examples illustrate the versatility and impact of AI agents in performance monitoring, showcasing their role in proactive issue detection, operational optimization, and strategic decision-making.

Challenges and Limitations

Despite their benefits, AI agents in performance monitoring face challenges such as data quality issues, false positives, and scalability concerns. Ensuring accurate, timely, and actionable insights requires robust data pipelines, continuous model training, and effective integration with existing systems.

Future Trends and Research Directions

Emerging trends include the use of explainable AI for transparency, edge computing for low-latency monitoring, and advanced anomaly detection algorithms. Ongoing research focuses on improving model robustness, reducing false alarms, and enhancing multi-agent collaboration for comprehensive system oversight.
