Introduction to Adaptive Testing and AI Agents

Adaptive testing is an innovative approach in software quality assurance that dynamically adjusts test scenarios based on the system’s current behavior, performance, and feedback. Unlike traditional static testing, where test cases are predefined and executed uniformly, adaptive testing aims to optimize the testing process by focusing resources on the most relevant and high-risk areas of the software under test.

AI agents play a crucial role in enabling adaptive testing by acting as intelligent entities capable of monitoring system responses, analyzing test results, and modifying test scenarios in real time. These agents leverage artificial intelligence techniques such as machine learning, pattern recognition, and decision-making algorithms to continuously learn from testing outcomes and adapt their strategies accordingly.

The integration of AI agents into adaptive testing offers several advantages. It enhances test efficiency by reducing redundant or irrelevant test executions, improves defect detection by targeting critical functionalities, and accelerates the testing cycle through automation and intelligent decision-making. Moreover, AI agents can handle complex and dynamic software environments where traditional testing methods struggle to keep pace.

In this context, AI agents serve as autonomous testers that not only execute tests but also evolve the testing process itself. They can identify emerging patterns of failure, predict potential problem areas, and dynamically generate or modify test cases to address these insights. This capability is particularly valuable in agile and continuous integration/continuous deployment (CI/CD) environments, where rapid feedback and adaptability are essential.

Fundamentals of AI Agents in Software Testing

AI agents in software testing are autonomous or semi-autonomous software entities designed to perform testing tasks intelligently and adaptively. Their fundamental purpose is to enhance the testing process by automating decision-making, learning from test outcomes, and dynamically adjusting test activities to improve efficiency and effectiveness.

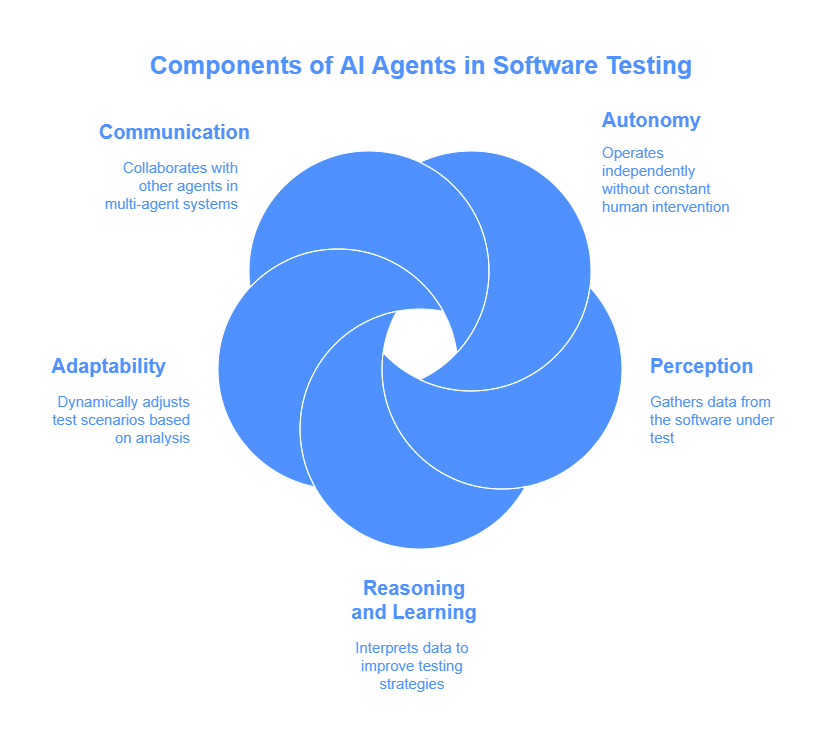

At their core, AI agents possess several key characteristics that make them suitable for adaptive testing:

Autonomy: AI agents operate independently without constant human intervention. They can initiate tests, analyze results, and modify test plans based on predefined goals and learned experiences.

Perception: Agents gather data from the software under test, including logs, performance metrics, error reports, and user interactions. This sensory input allows them to understand the current state of the system.

Reasoning and Learning: Using machine learning algorithms and rule-based systems, AI agents interpret collected data to identify patterns, anomalies, or failures. They learn from past test executions to improve future testing strategies.

Adaptability: Based on their analysis, agents can dynamically adjust test scenarios—adding, removing, or modifying test cases—to better target areas of concern or optimize resource usage.

Communication: In multi-agent systems, AI agents can collaborate by sharing information and coordinating testing efforts, which is especially useful for complex or distributed software systems.

In software testing, AI agents can be implemented using various AI techniques such as supervised learning for defect prediction, reinforcement learning for test case prioritization, and natural language processing for understanding requirements or test documentation.
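The perceive, reason, and adapt cycle described by these characteristics can be sketched as a small loop. This is a minimal illustration under stated assumptions, not a reference design: `run_test` is a hypothetical stand-in for a real test runner, `ASSUMED_FAILURE_PROB` is invented simulation data, and the agent's "learning" is nothing more than per-test failure counts that re-rank the next round's plan.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple

# Assumed per-test failure probabilities used only by the simulated runner below
ASSUMED_FAILURE_PROB = {"test_payment": 0.4, "test_checkout": 0.3}


def run_test(name: str, rng: random.Random) -> bool:
    """Hypothetical stand-in for a real test runner; returns True when the test passes."""
    return rng.random() >= ASSUMED_FAILURE_PROB.get(name, 0.05)


def agent_loop(tests: List[str], rounds: int = 5, budget: int = 3,
               seed: int = 0) -> Tuple[Dict[str, int], Dict[str, int]]:
    """Run `rounds` cycles, each executing at most `budget` tests; return (runs, failures)."""
    rng = random.Random(seed)
    runs: Dict[str, int] = defaultdict(int)
    failures: Dict[str, int] = defaultdict(int)
    for _ in range(rounds):
        # Reason: untried tests score 1.0 so they get explored; others use their failure rate
        plan = sorted(
            tests,
            key=lambda t: 1.0 if runs[t] == 0 else failures[t] / runs[t],
            reverse=True,
        )[:budget]
        # Act and perceive: execute the plan and record each outcome
        for t in plan:
            runs[t] += 1
            if not run_test(t, rng):
                failures[t] += 1
        # Adapt: the next round's ranking is recomputed from the updated counts
    return dict(runs), dict(failures)
```

Giving untried tests the maximum score ensures every test is executed at least once before the agent starts concentrating on failure-prone ones; a production agent would replace this counting heuristic with a trained model.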

Designing Adaptive Test Scenarios

Designing adaptive test scenarios is a critical step in leveraging AI agents to create flexible and responsive testing processes. Unlike fixed test cases, adaptive scenarios evolve based on real-time feedback from the system under test, enabling more targeted and efficient validation.

The design process typically involves several key elements:

Defining Test Objectives and Metrics

Before creating adaptive scenarios, it is essential to establish clear testing goals, such as maximizing coverage, detecting specific types of defects, or validating performance under varying conditions. Metrics like code coverage, fault detection rate, and execution time guide the adaptation process.

Modular Test Case Structure

Adaptive test scenarios are often built from modular components or test fragments that can be combined, modified, or reordered dynamically. This modularity allows AI agents to tailor test sequences based on observed system behavior.
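A minimal sketch of such fragments, assuming each fragment is a function that reads and writes a shared context dictionary. The fragment names, the `FRAGMENTS` registry, and the context keys are hypothetical; real fragments would drive a UI or API. Because the fragments are independent units, an agent can reorder, repeat, or drop them simply by changing the list of step names.

```python
from typing import Callable, Dict, List

Context = Dict[str, object]
Fragment = Callable[[Context], None]


def login(ctx: Context) -> None:
    ctx["user"] = "alice"  # hypothetical: a real fragment would drive the UI or API


def add_to_cart(ctx: Context) -> None:
    assert "user" in ctx, "add_to_cart requires a logged-in user"
    ctx.setdefault("cart", []).append("sku-123")


def checkout(ctx: Context) -> None:
    assert ctx.get("cart"), "checkout requires a non-empty cart"
    ctx["order_placed"] = True


# Registry the agent draws from when assembling scenarios
FRAGMENTS: Dict[str, Fragment] = {
    "login": login,
    "add_to_cart": add_to_cart,
    "checkout": checkout,
}


def build_scenario(steps: List[str]) -> Callable[[], Context]:
    """Compose named fragments, in the given order, into one runnable scenario."""
    def scenario() -> Context:
        ctx: Context = {}
        for name in steps:
            FRAGMENTS[name](ctx)
        return ctx
    return scenario
```

For example, `build_scenario(["login", "add_to_cart", "add_to_cart", "checkout"])()` places an order with two cart items, while a scenario consisting only of `checkout` fails its precondition check.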

Incorporating Decision Points

Test scenarios should include decision points where AI agents evaluate system responses and decide the next steps. For example, if a test uncovers a failure, the agent might trigger additional exploratory tests focusing on the affected module.
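A decision point can be modelled as a function that inspects the latest outcome and returns the updated plan. In this sketch, `Outcome`, `next_steps`, and the module-to-test mapping `EXPLORATORY_TESTS` are illustrative assumptions; the rule itself is the one just described, where a failure moves exploratory tests for the affected module to the front of the queue.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Outcome:
    name: str
    module: str
    passed: bool


# Hypothetical mapping from a module to deeper exploratory tests for it
EXPLORATORY_TESTS: Dict[str, List[str]] = {
    "payments": ["test_refund_edge_cases", "test_currency_rounding", "test_declined_card"],
    "search": ["test_empty_query", "test_unicode_query"],
}


def next_steps(result: Outcome, planned: List[str]) -> List[str]:
    """Decision point: on failure, schedule exploratory tests for the affected module first."""
    if result.passed:
        return planned
    extra = [t for t in EXPLORATORY_TESTS.get(result.module, []) if t not in planned]
    return extra + planned
```

A failure in a module with no registered exploratory tests leaves the plan unchanged; a real agent might instead fall back to generating new tests for that module.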

Parameterization and Variability

Designing tests with variable inputs and configurations enables agents to explore a broader range of conditions. Parameters can be adjusted dynamically to stress-test the system or validate edge cases.
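One way to provide this variability is to declare each parameter's domain once and let the agent expand or narrow the combinations it runs. The sketch below uses `itertools.product` from the standard library; the upload-related parameter names and values, and the `focus` helper, are hypothetical.

```python
import itertools
from typing import Dict, Iterator, List

# Hypothetical parameter space for a file-upload feature
PARAMETERS: Dict[str, List[object]] = {
    "file_size_mb": [0, 1, 50, 100],  # 0 and 100 are assumed edge values
    "file_type": ["pdf", "png", "exe"],
    "network": ["fast", "slow"],
}


def configurations(params: Dict[str, List[object]]) -> Iterator[Dict[str, object]]:
    """Yield every combination of parameter values as a dict."""
    keys = list(params)
    for values in itertools.product(*(params[k] for k in keys)):
        yield dict(zip(keys, values))


def focus(params: Dict[str, List[object]], name: str,
          values: List[object]) -> Dict[str, List[object]]:
    """Return a copy of the space with one parameter narrowed, e.g. after a failure."""
    narrowed = dict(params)
    narrowed[name] = values
    return narrowed
```

Running the full grid yields 24 configurations; after a failure involving large files, an agent could call `focus(PARAMETERS, "file_size_mb", [100])` to concentrate on the six configurations at the upper size limit.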

Feedback Loops for Continuous Adaptation

Effective adaptive scenarios incorporate feedback mechanisms where test results influence subsequent test design. AI agents analyze outcomes to refine test paths, prioritize critical areas, and eliminate redundant checks.

Risk-Based Prioritization

Adaptive testing often focuses on high-risk components identified through historical data, code complexity, or recent changes. Agents use this information to allocate testing effort where it is most needed.
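Historical failures, code complexity, and recent changes are often combined into a single weighted risk score. The weights, the normalization by the largest complexity, and the `Component` fields below are assumptions chosen for illustration, not calibrated values.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Component:
    name: str
    failure_rate: float     # share of recent runs that failed, 0..1
    complexity: int         # e.g. cyclomatic complexity
    changed_recently: bool  # touched by the current change set


# Assumed weights; in practice these would be tuned or learned
W_FAILURES, W_COMPLEXITY, W_CHANGE = 0.5, 0.3, 0.2


def risk_score(c: Component, max_complexity: int) -> float:
    normalized_complexity = c.complexity / max_complexity if max_complexity else 0.0
    return (W_FAILURES * c.failure_rate
            + W_COMPLEXITY * normalized_complexity
            + W_CHANGE * (1.0 if c.changed_recently else 0.0))


def prioritize(components: List[Component]) -> List[str]:
    """Return component names ordered from highest to lowest risk."""
    max_cx = max((c.complexity for c in components), default=0)
    ranked = sorted(components, key=lambda c: risk_score(c, max_cx), reverse=True)
    return [c.name for c in ranked]
```

With `payment` (failure rate 0.4, complexity 40, changed), `profile` (0.2, 20, unchanged), and `search` (0.05, 10, unchanged), the scores are 0.7, 0.25, and 0.1, so `prioritize` returns `["payment", "profile", "search"]`.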

Integration with Test Management Systems

Adaptive scenarios should be compatible with existing test management tools to facilitate tracking, reporting, and collaboration among development and QA teams.

Techniques for Dynamic Test Adjustment

Dynamic test adjustment is the core capability that enables AI agents to modify test scenarios in real time based on system feedback and evolving conditions. This adaptability ensures that testing remains relevant, efficient, and focused on areas with the highest potential for defects or failures. Several techniques empower AI agents to perform dynamic adjustments effectively:

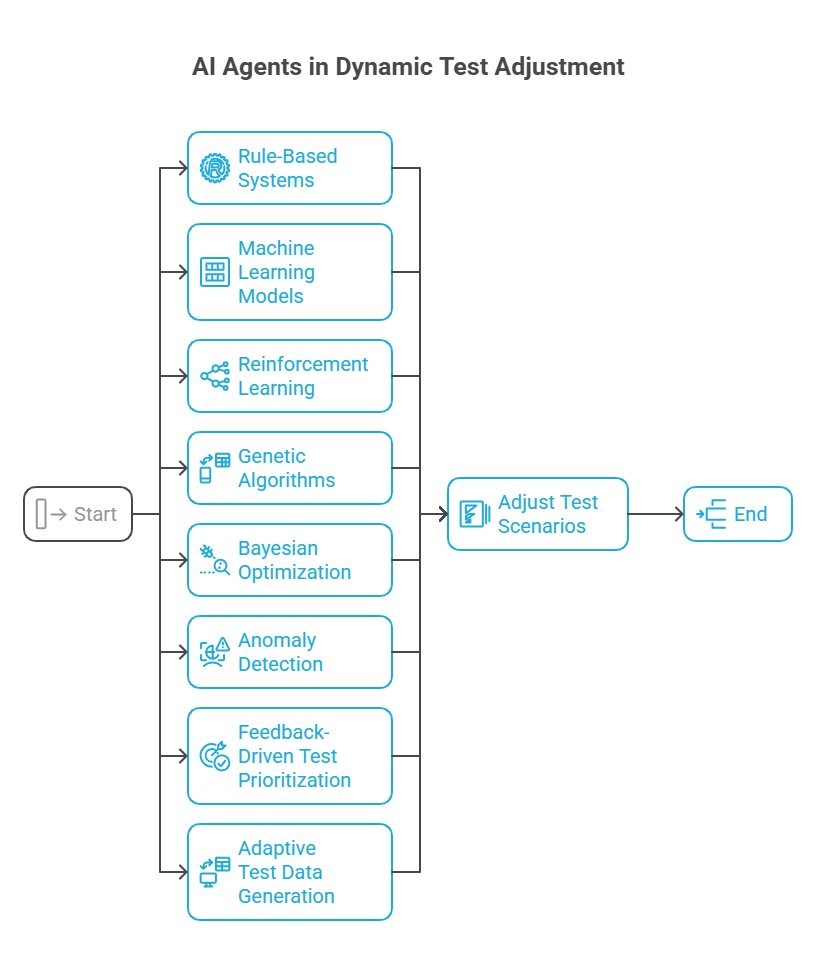

Rule-Based Systems

AI agents can use predefined rules to trigger changes in test scenarios. For example, if a particular test case fails, the agent might automatically add related tests or increase the frequency of testing in that area. Rule-based approaches are straightforward and interpretable but may lack flexibility in complex environments.
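A rule-based agent can be as simple as a list of condition/action pairs evaluated against every result. The two rules, the 10-second threshold, and the `RELATED` mapping below are hypothetical examples; the list of fired rules returned by `apply_rules` is what keeps this approach easy to audit.

```python
from typing import Callable, Dict, List, NamedTuple


class Result(NamedTuple):
    test: str
    area: str
    passed: bool
    duration_s: float


Plan = Dict[str, List[str]]


class Rule(NamedTuple):
    description: str
    condition: Callable[[Result], bool]
    action: Callable[[Result, Plan], None]


# Hypothetical related tests per functional area
RELATED: Dict[str, List[str]] = {"payments": ["test_refund", "test_partial_capture"]}


def add_related(r: Result, plan: Plan) -> None:
    for t in RELATED.get(r.area, []):
        if t not in plan["queue"]:
            plan["queue"].append(t)


def flag_slow(r: Result, plan: Plan) -> None:
    plan["performance_review"].append(r.test)


RULES = [
    Rule("failure -> add related tests", lambda r: not r.passed, add_related),
    Rule("slow test -> flag for review", lambda r: r.duration_s > 10.0, flag_slow),
]


def apply_rules(result: Result, plan: Plan) -> List[str]:
    """Apply every matching rule to the plan; return descriptions of the rules that fired."""
    fired = []
    for rule in RULES:
        if rule.condition(result):
            rule.action(result, plan)
            fired.append(rule.description)
    return fired
```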

Machine Learning Models

Supervised and unsupervised learning algorithms help agents identify patterns in test results and system behavior. For instance, classification models can predict which components are more likely to fail, guiding the agent to prioritize tests accordingly. Clustering techniques can group similar failures to focus testing efforts.

Reinforcement Learning (RL)

RL enables agents to learn optimal testing strategies through trial and error by receiving feedback in the form of rewards or penalties. Agents iteratively improve their test selection and sequencing policies to maximize defect detection or coverage over time.
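Full RL formulations treat test selection and ordering as a sequential decision problem; a common simplification is a multi-armed bandit, in which each test is an arm and the reward is 1 when the test exposes a failure. The sketch below uses epsilon-greedy selection with incremental mean estimates. `TRUE_FAILURE_PROB` is simulated data standing in for real executions, and epsilon = 0.1 is an assumed setting.

```python
import random
from typing import Dict, List


class EpsilonGreedySelector:
    """Bandit-style test selector: reward 1.0 if a test exposes a failure, else 0.0."""

    def __init__(self, tests: List[str], epsilon: float = 0.1, seed: int = 0):
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.values: Dict[str, float] = {t: 0.0 for t in tests}  # estimated reward per test
        self.counts: Dict[str, int] = {t: 0 for t in tests}

    def select(self) -> str:
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.values))    # explore: random test
        return max(self.values, key=self.values.get)      # exploit: best estimate so far

    def update(self, test: str, reward: float) -> None:
        self.counts[test] += 1
        # Incremental mean: move the estimate toward the observed reward
        self.values[test] += (reward - self.values[test]) / self.counts[test]


# Simulated environment: assumed probability that each test exposes a defect
TRUE_FAILURE_PROB = {"test_login": 0.05, "test_payment": 0.6, "test_search": 0.1}


def simulate(steps: int = 2000, seed: int = 0) -> EpsilonGreedySelector:
    agent = EpsilonGreedySelector(list(TRUE_FAILURE_PROB), epsilon=0.1, seed=seed)
    env = random.Random(seed + 1)
    for _ in range(steps):
        test = agent.select()
        reward = 1.0 if env.random() < TRUE_FAILURE_PROB[test] else 0.0
        agent.update(test, reward)
    return agent
```

Because the estimates start at zero, the agent relies on its random exploration steps to discover that `test_payment` fails often; optimistic initial values or a decaying epsilon are common refinements.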

Genetic Algorithms and Evolutionary Strategies

These optimization techniques allow agents to evolve test scenarios by combining and mutating test cases to discover the most effective test suites. They are particularly useful for exploring large and complex test spaces.
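The sketch below encodes a candidate test suite as a bit string (one bit per test), scores it by the number of distinct code locations it covers, and evolves a population using truncation selection, single-point crossover, and bit-flip mutation. The `COVERAGE` map and the three-test `BUDGET` are hypothetical; in practice the coverage data would come from a coverage tool.

```python
import random
from typing import Dict, List, Set, Tuple

# Hypothetical coverage data: test name -> code locations it covers
COVERAGE: Dict[str, Set[str]] = {
    "t1": {"a", "b"},
    "t2": {"b", "c", "d"},
    "t3": {"e"},
    "t4": {"a", "e", "f"},
    "t5": {"c"},
    "t6": {"g", "h"},
}
TESTS = list(COVERAGE)
BUDGET = 3  # assumed limit on suite size

Genome = List[int]  # one bit per test: 1 = include the test in the suite


def fitness(genome: Genome) -> int:
    """Distinct locations covered; suites over budget are infeasible and score 0."""
    chosen = [t for t, bit in zip(TESTS, genome) if bit]
    if len(chosen) > BUDGET:
        return 0
    return len(set().union(*(COVERAGE[t] for t in chosen)))


def crossover(a: Genome, b: Genome, rng: random.Random) -> Genome:
    cut = rng.randrange(1, len(a))  # single-point crossover
    return a[:cut] + b[cut:]


def mutate(genome: Genome, rng: random.Random, rate: float = 0.1) -> Genome:
    return [1 - g if rng.random() < rate else g for g in genome]  # bit-flip mutation


def evolve(generations: int = 50, pop_size: int = 20, seed: int = 0) -> Tuple[List[str], int]:
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in TESTS] for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        parents = population[: pop_size // 2]  # truncation selection; parents survive (elitism)
        children = [
            mutate(crossover(rng.choice(parents), rng.choice(parents), rng), rng)
            for _ in range(pop_size - len(parents))
        ]
        population = parents + children
    best = max(population, key=fitness)
    return [t for t, bit in zip(TESTS, best) if bit], fitness(best)
```

In this toy data the only suite within budget that covers all eight locations is `t2`, `t4`, `t6`. Genetic algorithms do not guarantee finding such an optimum, which is why they are usually reserved for search spaces too large to enumerate.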

Bayesian Optimization

Bayesian methods help agents make informed decisions about which tests to run next by modeling uncertainty and balancing exploration (testing new areas) and exploitation (focusing on known risky areas).
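A lightweight Bayesian way to make this trade-off is Thompson sampling. Each test keeps a Beta posterior over its probability of exposing a failure; the agent draws one sample per posterior and runs the tests with the highest draws, so tests with little history (wide posteriors) are still chosen some of the time. Note that this is a Bayesian bandit method rather than Gaussian-process Bayesian optimization; the uniform Beta(1, 1) prior is an assumption.

```python
import random
from typing import Dict, List


class BetaPosterior:
    """Beta belief over the probability that a test exposes a failure."""

    def __init__(self, alpha: float = 1.0, beta: float = 1.0):
        self.alpha = alpha  # pseudo-count of failures (Beta(1, 1) is a uniform prior)
        self.beta = beta    # pseudo-count of passes

    def update(self, failed: bool) -> None:
        if failed:
            self.alpha += 1
        else:
            self.beta += 1

    def mean(self) -> float:
        return self.alpha / (self.alpha + self.beta)


def choose_tests(posteriors: Dict[str, BetaPosterior], k: int,
                 rng: random.Random) -> List[str]:
    """Thompson sampling: draw once from each posterior and pick the k highest draws."""
    draws = {name: rng.betavariate(p.alpha, p.beta) for name, p in posteriors.items()}
    return sorted(draws, key=draws.get, reverse=True)[:k]
```

For example, after 20 passes `test_login` has posterior mean 1/22, while `test_payment` with 6 failures in 10 runs has mean 7/12; an untried test keeps the uniform prior and therefore still wins draws fairly often.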

Anomaly Detection

AI agents use anomaly detection algorithms to identify unusual system behaviors or outputs during testing. When anomalies are detected, agents can adjust test scenarios to investigate these irregularities more thoroughly.
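One of the simplest detectors flags measurements that lie many standard deviations from a baseline (a z-score test). The sketch applies it to response times; the 3-standard-deviation threshold and the sample data in the usage note are assumptions, and real systems often use more robust detectors.

```python
import statistics
from typing import List


def find_anomalies(baseline: List[float], observed: List[float],
                   threshold: float = 3.0) -> List[int]:
    """Return indices of observed values whose z-score against the baseline exceeds threshold."""
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)  # sample standard deviation; needs >= 2 values
    if stdev == 0:
        # Constant baseline: any deviation at all counts as anomalous
        return [i for i, x in enumerate(observed) if x != mean]
    return [i for i, x in enumerate(observed) if abs(x - mean) / stdev > threshold]
```

With a baseline of `[120, 118, 125, 122, 119, 121, 123, 117]` ms, the observations `[121, 124, 310, 119, 95]` are flagged at indices 2 and 4; an agent could respond by re-running those requests under load or adding targeted performance tests.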

Feedback-Driven Test Prioritization

Agents continuously analyze test outcomes and system metrics to reorder or select test cases dynamically, ensuring that the most critical or failure-prone tests are executed earlier.
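A sketch of feedback-driven reordering: after each run the agent records outcomes in a per-test `History` and re-sorts the queue so that tests that failed on their last run come first, then tests with a higher failure rate, then shorter tests. Both the ordering policy and the `History` fields are assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class History:
    runs: int = 0
    failures: int = 0
    last_failed: bool = False
    avg_duration_s: float = 0.0

    def record(self, passed: bool, duration_s: float) -> None:
        """Update the history with the outcome of one run."""
        self.runs += 1
        if not passed:
            self.failures += 1
        self.last_failed = not passed
        self.avg_duration_s += (duration_s - self.avg_duration_s) / self.runs  # running mean

    @property
    def failure_rate(self) -> float:
        return self.failures / self.runs if self.runs else 0.0


def reorder_queue(history: Dict[str, History]) -> List[str]:
    """Recently failed tests first, then higher failure rate, then shorter duration."""
    return sorted(
        history,
        key=lambda t: (not history[t].last_failed,
                       -history[t].failure_rate,
                       history[t].avg_duration_s),
    )
```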

Adaptive Test Data Generation

AI agents generate or modify test inputs on the fly to cover new scenarios or stress system boundaries, improving the robustness of testing.
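Two common generation strategies are sketched below: boundary-value analysis for a numeric range, and random mutation of existing inputs (character insertions, deletions, and replacements). The mutation alphabet and the seed inputs are hypothetical; an adaptive agent would typically add any input that exposes a failure back into its pool of seeds.

```python
import random
from typing import List

# Assumed alphabet mixing ordinary characters with ones that often break parsers
ALPHABET = "abcXYZ019 '\"<>%;"


def boundary_values(low: int, high: int) -> List[int]:
    """Classic boundary-value analysis for an inclusive integer range [low, high]."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


def mutate_string(s: str, rng: random.Random, n_mutations: int = 3) -> str:
    """Randomly insert, delete, or replace characters to derive a nearby input."""
    chars = list(s)
    for _ in range(n_mutations):
        op = rng.choice(["insert", "delete", "replace"])
        if op == "insert" or not chars:
            chars.insert(rng.randrange(len(chars) + 1), rng.choice(ALPHABET))
        elif op == "delete":
            del chars[rng.randrange(len(chars))]
        else:
            chars[rng.randrange(len(chars))] = rng.choice(ALPHABET)
    return "".join(chars)


def generate_inputs(seed_inputs: List[str], count: int, seed: int = 0) -> List[str]:
    """Derive `count` new inputs by mutating randomly chosen seed inputs."""
    rng = random.Random(seed)
    return [mutate_string(rng.choice(seed_inputs), rng) for _ in range(count)]
```

For example, `boundary_values(1, 100)` returns `[0, 1, 2, 99, 100, 101]`, covering both sides of each limit.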

Benefits of Adaptive Testing with AI Agents

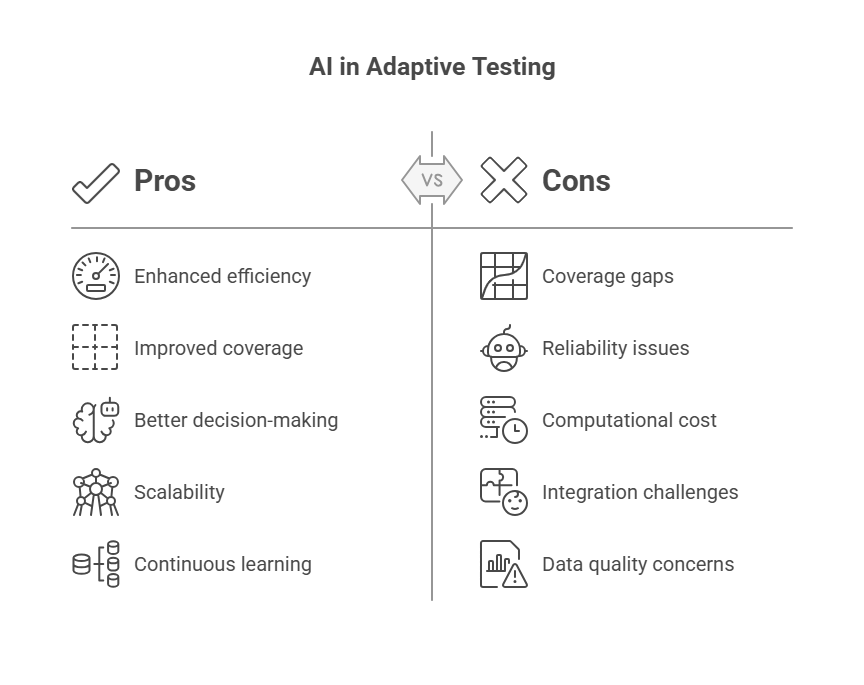

Adaptive testing powered by AI agents offers significant advantages over traditional static testing approaches, transforming how software quality assurance is conducted. The key benefits include:

Increased Testing Efficiency

AI agents dynamically focus testing efforts on the most critical and high-risk areas, reducing redundant or low-value test executions. This targeted approach saves time and computational resources, enabling faster test cycles.

Improved Defect Detection

By continuously analyzing system behavior and adapting test scenarios, AI agents can uncover defects that might be missed by static test suites. They can explore edge cases, unexpected interactions, and evolving system states more effectively.

Faster Feedback Loops

Adaptive testing accelerates the delivery of actionable insights to developers by prioritizing tests that are most likely to reveal issues. This rapid feedback supports agile development and continuous integration/continuous deployment (CI/CD) practices.

Enhanced Test Coverage

AI agents can generate diverse and variable test inputs, increasing the breadth and depth of testing. This adaptability helps ensure that different usage scenarios and configurations are adequately tested.

Scalability in Complex Systems

As software systems grow in complexity and scale, manual test design becomes impractical. AI agents can manage and adapt large test suites autonomously, maintaining effectiveness without proportional increases in human effort.

Reduced Human Error and Bias

Automated adaptation minimizes reliance on manual test planning, which can be prone to oversight or bias. AI agents base their decisions on recorded test and system data rather than intuition, although their models can still inherit biases present in that data.

Continuous Learning and Improvement

AI agents learn from past test executions and system changes, continuously refining their strategies. This ongoing improvement leads to progressively more effective testing over time.

Support for Dynamic and Evolving Software

In environments where software changes frequently, such as agile or DevOps workflows, adaptive testing ensures that test scenarios remain relevant and aligned with the current state of the system.

Cost Savings

By optimizing test execution and reducing unnecessary tests, organizations can lower the costs associated with testing infrastructure, personnel, and time-to-market delays.

Machine Learning Models for Test Scenario Optimization

Machine learning (ML) plays a pivotal role in optimizing test scenarios by enabling AI agents to learn from data, predict outcomes, and make informed decisions about which tests to run, modify, or discard. Different ML paradigms—supervised, unsupervised, and reinforcement learning—offer unique advantages in adaptive testing:

Supervised Learning

In supervised learning, models are trained on labeled data, such as past test results indicating pass or fail outcomes. These models can predict how likely a new or modified test is to fail, or how likely a component is to contain defects, and tests can then be prioritized by predicted risk. Common algorithms include decision trees, support vector machines, and neural networks.

Unsupervised Learning

Unsupervised learning helps identify hidden patterns or clusters in test data without predefined labels. Techniques like clustering and anomaly detection can reveal unusual behaviors or group similar test cases, guiding agents to focus on critical areas or reduce redundant tests.
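As a minimal sketch of unsupervised grouping, the code below clusters failure messages by the Jaccard similarity of their word tokens, masking digits so that timings and IDs do not split otherwise identical failures. This greedy threshold scheme is a simple stand-in for algorithms such as k-means or DBSCAN applied to richer features; the 0.5 threshold and the example messages are assumptions.

```python
import re
from typing import List, Set


def tokens(message: str) -> Set[str]:
    """Lower-case word tokens, with digit runs masked as <n> so numbers don't split clusters."""
    masked = re.sub(r"\d+", "<n>", message.lower())
    return set(re.findall(r"[a-z_]+|<n>", masked))


def jaccard(a: Set[str], b: Set[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def cluster_failures(messages: List[str], threshold: float = 0.5) -> List[List[int]]:
    """Assign each message to the first cluster whose representative is similar enough."""
    clusters: List[List[int]] = []
    representatives: List[Set[str]] = []
    for i, msg in enumerate(messages):
        t = tokens(msg)
        for c, rep in enumerate(representatives):
            if jaccard(t, rep) >= threshold:
                clusters[c].append(i)
                break
        else:  # no similar cluster found: start a new one
            clusters.append([i])
            representatives.append(t)
    return clusters
```

Given two timeout messages that differ only in duration, two assertion failures that differ only in status code, and one `KeyError`, the function returns three clusters, so three investigations replace five.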

Reinforcement Learning (RL)

RL enables agents to learn optimal testing strategies through interaction with the environment. By receiving rewards for successful defect detection or coverage improvement, agents iteratively refine their test selection and sequencing policies to maximize testing effectiveness.

Hybrid Approaches

Combining these learning methods can yield more robust adaptive testing systems. For example, supervised models can guide initial test prioritization, while RL fine-tunes strategies based on ongoing feedback.

Example: Simple Supervised Learning for Test Prioritization in Python

Below is a basic example demonstrating how a supervised learning model (using a decision tree classifier) can be used to prioritize test cases based on features such as code complexity and historical failure rates.

```python
from sklearn.tree import DecisionTreeClassifier
from sklearn.model_selection import train_test_split
from sklearn.metrics import accuracy_score

# Sample dataset: features = [code_complexity, past_failure_rate],
# label = test_priority (1 = high, 0 = low)
X = [
    [5, 0.8],
    [3, 0.1],
    [7, 0.9],
    [2, 0.2],
    [6, 0.7],
    [4, 0.3],
    [8, 0.95],
    [1, 0.05],
]
y = [1, 0, 1, 0, 1, 0, 1, 0]

# Split data into training and test sets; stratify=y keeps both classes in each split
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42, stratify=y
)

# Train a decision tree classifier (fixed random_state for reproducible results)
clf = DecisionTreeClassifier(random_state=42)
clf.fit(X_train, y_train)

# Predict priorities on the held-out set and report accuracy
# (with only 2 held-out samples, this number is illustrative, not a real evaluation)
y_pred = clf.predict(X_test)
print("Accuracy:", accuracy_score(y_test, y_pred))

# Predict the priority of a new test case
new_test_case = [[6, 0.6]]
priority = clf.predict(new_test_case)
print("Predicted test priority (1=high, 0=low):", priority[0])
```

This simple model helps an AI agent decide which test cases to prioritize, improving testing efficiency by focusing on high-risk areas. More sophisticated models and features can be incorporated for real-world adaptive testing systems.

Integration of AI Agents with Testing Frameworks

Integrating AI agents into existing automated testing tools and pipelines is essential for leveraging adaptive testing capabilities without disrupting established workflows. This integration enables seamless collaboration between AI-driven intelligence and traditional testing infrastructure, enhancing efficiency and effectiveness.

Key approaches to embedding AI agents within testing frameworks include:

Plugin and Extension Development

Many popular testing frameworks and tools (e.g., JUnit, pytest, Selenium) provide plugin, extension, or event-listener mechanisms. AI agents can be implemented as plugins that monitor test execution, analyze results, and dynamically adjust test suites or parameters during runtime.
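For pytest, one such extension point is the `pytest_collection_modifyitems(session, config, items)` hook, which a `conftest.py` file or plugin can implement to reorder (or deselect) the collected tests in place. The sketch assumes a hypothetical `FAILURE_RATES` dictionary that a real agent would load from its own results store; the ordering policy is an illustration, not built-in pytest behavior.

```python
# conftest.py
# Hypothetical failure-rate data; a real agent would load this from its history store.
FAILURE_RATES = {
    "test_payment": 0.4,
    "test_checkout": 0.3,
    "test_login": 0.1,
}


def pytest_collection_modifyitems(session, config, items):
    """Run historically failure-prone tests first by reordering `items` in place."""
    # Strip any parametrization suffix, e.g. "test_payment[visa]" -> "test_payment"
    items.sort(
        key=lambda item: FAILURE_RATES.get(item.name.split("[")[0], 0.0),
        reverse=True,
    )
```

Python's `list.sort` stays stable even with `reverse=True`, so tests without recorded history keep their original relative order at the end of the run.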

API-Based Integration

AI agents can expose RESTful or gRPC APIs that testing pipelines call to receive recommendations on test prioritization, generation, or adaptation. This decouples the AI logic from the testing framework, allowing flexible deployment and updates.

Middleware Components

AI agents can act as middleware between test management systems and execution engines, intercepting test plans and injecting adaptive logic based on real-time feedback and analytics.

Continuous Integration/Continuous Deployment (CI/CD) Pipeline Embedding

Integrating AI agents into CI/CD pipelines (e.g., Jenkins, GitLab CI) allows automated triggering of adaptive testing processes. Agents can analyze build artifacts, code changes, and historical test data to optimize test runs before deployment.
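A common first step is change-based test selection: map the files changed in a commit to the tests that exercise them. In this sketch `TEST_MAP` is a hypothetical mapping (it could be derived from per-test coverage data), and the list of changed files could be obtained in the pipeline with `git diff --name-only origin/main...HEAD`, where the branch name is an assumption.

```python
from typing import Dict, List, Set

# Hypothetical mapping from source files to the tests that exercise them
TEST_MAP: Dict[str, Set[str]] = {
    "src/payments/gateway.py": {"test_payment", "test_checkout"},
    "src/search/index.py": {"test_search"},
    "src/users/profile.py": {"test_profile_update", "test_login"},
}
ALL_TESTS: Set[str] = set().union(*TEST_MAP.values())


def select_tests(changed_files: List[str]) -> Set[str]:
    """Pick tests mapped to the changed files; run everything if an unmapped source file changed."""
    selected: Set[str] = set()
    for path in changed_files:
        if path in TEST_MAP:
            selected |= TEST_MAP[path]
        elif path.startswith("src/"):
            return set(ALL_TESTS)  # unknown impact: fall back to the full suite
    return selected
```

Falling back to the full suite when an unmapped source file changes trades speed for safety; changes outside `src/` (such as documentation) select no tests in this sketch.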

Data Integration and Feedback Loops

Effective integration requires AI agents to access diverse data sources such as test logs, code repositories, bug trackers, and performance metrics. This data fuels learning and adaptation, while feedback loops ensure continuous improvement.

User Interface and Reporting Tools

Embedding AI agents with dashboards and reporting interfaces helps testers and developers understand adaptive testing decisions, fostering trust and facilitating manual overrides when necessary.

Containerization and Microservices

Deploying AI agents as containerized microservices enables scalable, platform-independent integration with testing environments, supporting distributed and parallel testing scenarios.

Challenges in Adaptive Testing with AI Agents

While adaptive testing powered by AI agents offers many advantages, it also presents several challenges that must be addressed to ensure effectiveness, reliability, and practical adoption:

Ensuring Comprehensive Test Coverage

Adaptive testing focuses on prioritizing and modifying tests dynamically, which may inadvertently lead to gaps in coverage if certain areas are deprioritized or overlooked. Balancing exploration of new or less-tested parts of the system with exploitation of known risky areas is critical.

Reliability and Consistency of AI Decisions

AI agents rely on data and models that may be imperfect or biased. Ensuring that adaptive decisions are reliable, reproducible, and do not introduce instability in the testing process is a significant challenge.

Computational Overhead and Scalability

Running machine learning models, analyzing large volumes of test data, and dynamically adjusting test suites can require substantial computational resources. Scaling adaptive testing to large, complex systems without excessive overhead is non-trivial.

Integration Complexity

Embedding AI agents into existing testing frameworks and CI/CD pipelines can be technically complex, requiring careful design to avoid disrupting established workflows or introducing new points of failure.

Data Quality and Availability

Effective adaptation depends on high-quality, relevant data such as historical test results, code metrics, and runtime logs. Incomplete, noisy, or inconsistent data can degrade AI agent performance.

Explainability and Trust

Developers and testers need to understand and trust the decisions made by AI agents. Lack of transparency in adaptive testing strategies can hinder adoption and make debugging difficult.

Handling Dynamic and Evolving Systems

Software systems frequently change, and AI agents must continuously learn and adapt to new codebases, architectures, and requirements. Maintaining up-to-date models and avoiding concept drift is challenging.

Security and Privacy Concerns

Collecting and processing test data, especially in regulated industries, raises concerns about data privacy and security. Ensuring compliance while enabling adaptive testing is essential.

Managing False Positives and Negatives

AI-driven test prioritization or generation may produce false positives (flagging non-issues) or false negatives (missing defects), impacting developer confidence and testing effectiveness.

Human-AI Collaboration

Defining the right balance between automated adaptation and human oversight is crucial. Over-automation may reduce human insight, while under-automation limits benefits.

Case Studies and Industry Applications

Adaptive testing powered by AI agents is being applied across various industries, with the aim of improving test efficiency, defect detection, and resource utilization. Below are representative application scenarios:

E-commerce Platforms

AI agents dynamically prioritize test cases based on user behavior analytics and recent code changes, reducing test execution time while maintaining high coverage of critical features like payment processing and product search.

Financial Services

Adaptive testing frameworks use reinforcement learning to optimize regression test suites for banking applications, focusing on high-risk transactions and compliance-related functionalities, thereby improving reliability and reducing manual effort.

Telecommunications

AI-driven test agents analyze network performance data and automatically generate test scenarios to validate new protocol implementations, enabling faster rollout of updates with minimized service disruptions.

Healthcare Software

Machine learning models help prioritize tests for electronic health record systems by identifying modules with frequent past failures, ensuring patient data integrity and regulatory compliance.

Automotive Industry

AI agents assist in testing autonomous driving software by simulating diverse driving conditions and adapting test scenarios based on real-time sensor data, enhancing safety and robustness.

Example: Simplified Python Code for Adaptive Test Selection Based on Historical Failure Rates

This example demonstrates how an AI agent might select test cases to run based on their past failure rates, prioritizing tests that are more likely to uncover defects.

```python
# Sample test cases with historical failure rates
test_cases = {
    "test_login": 0.1,
    "test_payment": 0.4,
    "test_search": 0.05,
    "test_checkout": 0.3,
    "test_profile_update": 0.2,
}

# Threshold for selecting high-priority tests
failure_rate_threshold = 0.2

# AI agent selects tests whose failure rate is at or above the threshold,
# ordered from most to least failure-prone
selected_tests = sorted(
    (test for test, rate in test_cases.items() if rate >= failure_rate_threshold),
    key=test_cases.get,
    reverse=True,
)

print("Selected tests for execution based on failure rates:")
for test in selected_tests:
    print(f"- {test}")
```

Tools and Frameworks Supporting AI-Driven Adaptive Testing

The development and deployment of AI agents for adaptive testing are facilitated by a growing ecosystem of tools and frameworks. These platforms provide capabilities ranging from test automation and data analysis to machine learning model integration and continuous testing orchestration. Below are some key tools and frameworks commonly used in AI-driven adaptive testing:

Selenium and Appium

Widely used open-source tools for automated UI testing that can be extended with AI agents to enable adaptive test case selection, dynamic element identification, and self-healing tests.

Test.ai

A commercial platform that leverages AI to automatically generate, execute, and maintain tests for mobile and web applications, reducing manual test creation and improving coverage.

Applitools

Provides AI-powered visual testing and monitoring, enabling adaptive detection of UI anomalies and reducing false positives in visual regression testing.

TensorFlow and PyTorch

Popular machine learning frameworks used to build custom AI models that analyze test data, predict flaky tests, and optimize test suites based on historical trends.

Jenkins and GitLab CI/CD

Continuous integration tools that support integration of AI agents into automated pipelines, enabling adaptive testing workflows triggered by code changes.

Testim.io

An AI-based test automation platform that uses machine learning to create stable and maintainable tests, adapting to UI changes and improving test reliability.

Mabl

Combines AI and automation to provide adaptive testing solutions with features like self-healing tests, anomaly detection, and intelligent test maintenance.

Allure TestOps

A test management tool that integrates with AI analytics to provide insights into test effectiveness, flakiness, and coverage, supporting adaptive test planning.

OpenAI and Hugging Face APIs

These platforms offer pre-trained AI models and APIs that can be integrated into testing frameworks to enhance natural language processing, test generation, and anomaly detection capabilities.

Custom Microservices and Container Orchestration

Many organizations build bespoke AI agents deployed as microservices using Docker and Kubernetes, enabling scalable, distributed adaptive testing architectures.

Future Trends and Research Directions

AI-driven adaptive testing is a rapidly evolving field with numerous promising directions for future research and development. These trends aim to enhance the intelligence, efficiency, and applicability of adaptive testing systems, addressing current limitations and unlocking new capabilities.

Advanced Reinforcement Learning for Test Optimization

Leveraging reinforcement learning to enable AI agents to autonomously explore and exploit testing strategies, dynamically balancing test coverage and resource constraints in complex software environments.

Explainable AI in Testing

Developing methods to make AI-driven test decisions transparent and interpretable, helping testers and developers understand why certain tests are prioritized or generated, thereby increasing trust and adoption.

Integration of Natural Language Processing (NLP)

Using NLP to automatically generate test cases from requirements, user stories, or documentation, enabling adaptive testing agents to better align tests with evolving business needs.

Self-Healing and Self-Adaptive Tests

Creating tests that automatically detect and recover from failures caused by environmental changes, UI updates, or flaky behavior, reducing manual maintenance efforts.

Cross-Platform and Cross-Domain Adaptation

Expanding adaptive testing techniques to support heterogeneous environments, including mobile, web, IoT, and embedded systems, allowing AI agents to generalize learning across domains.

Federated and Privacy-Preserving Testing

Applying federated learning to enable collaborative adaptive testing across organizations without sharing sensitive data, enhancing test models while preserving privacy.

Quantum Computing for Test Optimization

Exploring whether quantum algorithms can solve complex optimization problems in test suite selection and scheduling more efficiently than classical methods.

Ethical and Responsible AI in Testing

Addressing ethical considerations such as bias, fairness, and accountability in AI-driven testing processes to ensure equitable and trustworthy outcomes.

Real-Time Adaptive Testing in Continuous Deployment

Enhancing AI agents to operate in real-time within CI/CD pipelines, adapting tests instantly based on live feedback from production environments and user behavior.

Hybrid Human-AI Collaboration Models

Designing frameworks that combine human expertise with AI adaptability, enabling testers to guide, override, or refine AI-driven decisions for optimal results.
