Introduction

The Rise of Advanced AI Systems

In recent years, artificial intelligence (AI) has evolved from a niche area of computer science into a transformative force across industries. Advanced AI systems, powered by deep learning, natural language processing, and reinforcement learning, are now capable of performing complex tasks such as medical diagnosis, autonomous driving, financial forecasting, and even creative writing. The rapid development and deployment of these technologies have brought about significant benefits, including increased efficiency, improved decision-making, and the automation of repetitive tasks.

However, as AI systems become more sophisticated and integrated into critical aspects of society, concerns about their security and ethical implications have grown. The potential for misuse, unintended consequences, and the amplification of existing biases has made it essential to address these issues proactively. Organizations and governments worldwide are now prioritizing the development of secure and ethical AI frameworks to ensure that these technologies are used responsibly and for the benefit of all.

Importance of Security and Ethics

Security and ethics are foundational pillars in the development and deployment of advanced AI systems. Security ensures that AI models and the data they process are protected from malicious attacks, unauthorized access, and manipulation. Without robust security measures, AI systems can become targets for adversarial attacks, data breaches, and other cyber threats that can compromise their integrity and reliability.

Ethics, on the other hand, focuses on the responsible use of AI. This includes ensuring fairness, transparency, accountability, and respect for human rights. Ethical considerations help prevent the development of AI systems that perpetuate discrimination, invade privacy, or operate without adequate oversight. By embedding ethical principles into AI design and implementation, organizations can build trust with users and stakeholders, mitigate risks, and promote positive societal outcomes.

Understanding Advanced Artificial Intelligence

Definitions and Key Concepts

Artificial intelligence (AI) refers to the development of computer systems capable of performing tasks that typically require human intelligence. These tasks include reasoning, learning, problem-solving, perception, and language understanding. Advanced AI systems go beyond basic automation or rule-based algorithms by leveraging sophisticated techniques such as machine learning, deep learning, and neural networks.

Machine learning is a subset of AI that enables systems to learn from data and improve their performance over time without being explicitly programmed. Deep learning, a further subset of machine learning, uses multi-layered neural networks to analyze complex patterns in large datasets. These technologies allow AI systems to recognize images, understand speech, translate languages, and make predictions with remarkable accuracy.

Key concepts in advanced AI also include natural language processing (NLP), which enables machines to understand and generate human language, and reinforcement learning, where systems learn optimal actions through trial and error. Together, these technologies form the backbone of modern AI applications, powering everything from virtual assistants to autonomous vehicles.

Types of Advanced AI Systems

Advanced AI systems can be categorized based on their capabilities and applications. The most common types include:

Narrow AI (Weak AI): These systems are designed to perform specific tasks, such as facial recognition, language translation, or playing chess. They operate within a limited domain and cannot generalize beyond the tasks they were built or trained for. Most AI applications in use today fall into this category.

General AI (Strong AI): This type of AI would possess the ability to understand, learn, and apply knowledge across a wide range of tasks at a level comparable to human intelligence. While general AI remains a theoretical concept, ongoing research aims to bridge the gap between narrow and general AI.

Superintelligent AI: This hypothetical form of AI would surpass human intelligence in all aspects, including creativity, problem-solving, and social intelligence. While superintelligent AI is still the subject of speculation and debate, its potential impact on society underscores the importance of addressing security and ethical considerations early in AI development.

Security Challenges in AI Systems

Data Privacy and Protection

One of the most significant security challenges in advanced AI systems is ensuring data privacy and protection. AI models often require vast amounts of data to learn and make accurate predictions. This data can include sensitive personal information, such as medical records, financial details, or user behavior patterns. If not properly secured, this information can be exposed to unauthorized parties, leading to privacy breaches and potential misuse.

To address these risks, organizations must implement robust data protection measures. This includes encrypting data both at rest and in transit, anonymizing datasets to remove personally identifiable information, and enforcing strict access controls. Additionally, compliance with data protection regulations such as the General Data Protection Regulation (GDPR) is essential to safeguard user privacy and maintain trust.
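
Pseudonymization is one common way to implement the anonymization step. It replaces direct identifiers with a keyed hash, so records can still be linked without exposing the raw values. The sketch below uses Python's standard `hmac` and `hashlib` modules. The field names, the sample record, and the hard-coded key are illustrative assumptions; a real system would load the key from a secrets manager. Keyed hashing alone is pseudonymization rather than full anonymization, and the GDPR still treats pseudonymized data as personal data. For that reason the sketch also coarsens a quasi-identifier (birth date) that could otherwise help re-identify someone.

```python
import hashlib
import hmac

# Hypothetical key for illustration only; load it from a secrets manager in practice.
PSEUDONYM_KEY = b"replace-with-a-securely-stored-key"

# Hypothetical field names treated as direct identifiers.
DIRECT_IDENTIFIERS = {"name", "email"}


def pseudonymize(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace an identifier with a keyed SHA-256 digest (HMAC)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def deidentify(record: dict) -> dict:
    """Return a copy of the record with direct identifiers pseudonymized
    and the birth date coarsened to the year."""
    cleaned = {}
    for field, value in record.items():
        if field in DIRECT_IDENTIFIERS:
            cleaned[field] = pseudonymize(value)
        elif field == "birth_date":
            # Keep only the year (assumes ISO format YYYY-MM-DD).
            cleaned[field] = value[:4]
        else:
            cleaned[field] = value
    return cleaned


patient = {"name": "Jane Doe", "email": "jane@example.com",
           "birth_date": "1985-06-14", "diagnosis": "J45"}
print(deidentify(patient))
```

The hash is deterministic for a given key, so the same person maps to the same pseudonym across datasets. This is what allows joins, and it is also why the key must be protected.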

Vulnerabilities and Threats

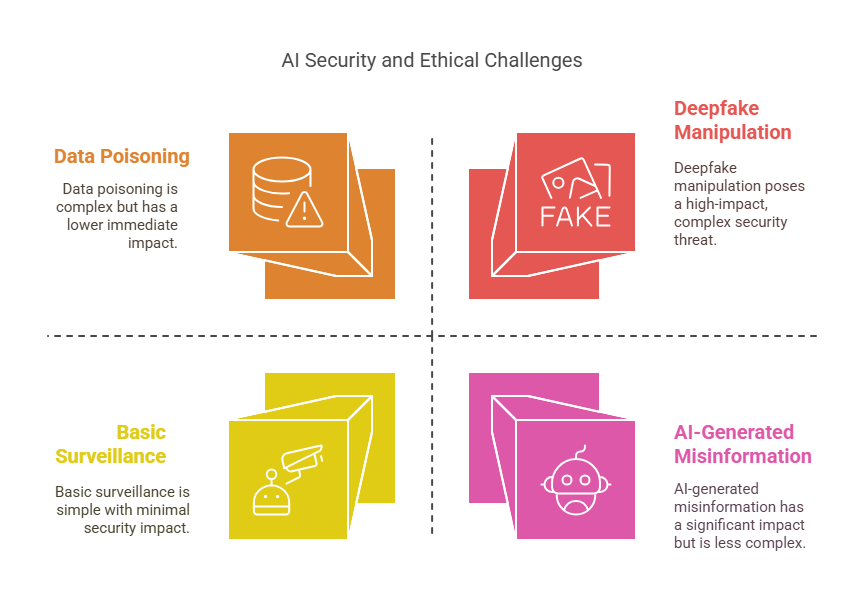

AI systems, like any software, are susceptible to various vulnerabilities and threats. Attackers may exploit weaknesses in the underlying code, data pipelines, or deployment infrastructure. Common threats include data poisoning, where malicious actors manipulate training data to influence model behavior, and model inversion, where attackers attempt to reconstruct sensitive information from the model’s outputs.
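
A deliberately tiny model is enough to show how data poisoning works. In this sketch, a hypothetical nearest-centroid classifier on a single made-up feature is trained twice: once on clean data, and once after an attacker injects mislabelled points. The injected points drag the class-0 centroid toward class 1 and flip the prediction for a borderline input. Real attacks are usually far subtler, but the mechanism is the same: the attacker corrupts what the model learns rather than the model's code.

```python
from statistics import mean


def train_centroids(data):
    """Toy nearest-centroid 'model': the mean feature value per label."""
    labels = {label for _, label in data}
    return {label: mean([x for x, y in data if y == label]) for label in labels}


def predict(centroids, x):
    """Assign x to the label whose centroid is closest."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))


# Made-up training data: (feature value, label).
clean = [(1.0, 0), (2.0, 0), (3.0, 0), (7.0, 1), (8.0, 1), (9.0, 1)]
# The attacker injects high-valued points deliberately labelled as class 0.
poison = [(10.0, 0)] * 6

clean_model = train_centroids(clean)
poisoned_model = train_centroids(clean + poison)
print(predict(clean_model, 6.0))     # 1
print(predict(poisoned_model, 6.0))  # 0
```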

Another concern is the exposure of AI models through APIs or cloud services, which can be targeted by cybercriminals seeking to steal intellectual property or disrupt operations. Regular security assessments, vulnerability scanning, and the use of secure coding practices are critical to minimizing these risks.
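
Per-client rate limiting is one widely used control for exposed model APIs. It slows down bulk querying, such as attempts to replicate a model from its outputs (model extraction), and makes that activity easier to spot. The following is a minimal token-bucket sketch. `ModelGateway`, the capacity, and the refill rate are hypothetical choices, and the injectable clock exists only so the example runs deterministically.

```python
import time


class TokenBucket:
    """Allows bursts of up to `capacity` requests, refilled at `rate` tokens/second."""

    def __init__(self, capacity: float, rate: float, clock=time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self.clock = clock
        self.tokens = capacity
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, capped at capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


class ModelGateway:
    """Hypothetical wrapper that gives each API key its own token bucket."""

    def __init__(self, model, capacity=5, rate=1.0, clock=time.monotonic):
        self.model = model
        self.capacity, self.rate, self.clock = capacity, rate, clock
        self.buckets = {}

    def query(self, api_key: str, x):
        bucket = self.buckets.get(api_key)
        if bucket is None:
            bucket = TokenBucket(self.capacity, self.rate, self.clock)
            self.buckets[api_key] = bucket
        if not bucket.allow():
            raise PermissionError(f"rate limit exceeded for {api_key}")
        return self.model(x)


# Simulated clock so the example is deterministic.
fake_now = [0.0]
gateway = ModelGateway(model=lambda x: x * 2, capacity=3, rate=1.0,
                       clock=lambda: fake_now[0])
results = []
for _ in range(5):
    try:
        results.append(gateway.query("client-a", 1))
    except PermissionError:
        results.append("blocked")
print(results)  # [2, 2, 2, 'blocked', 'blocked']
```

In production, this logic usually lives in an API gateway and is combined with authentication and query logging.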

Adversarial Attacks on AI Models

Adversarial attacks are a unique and growing threat to AI systems. In these attacks, small, carefully crafted changes are made to input data with the goal of deceiving the AI model. For example, an attacker might subtly alter an image so that a facial recognition system misidentifies a person, or modify a stop sign so that an autonomous vehicle fails to recognize it.
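
The Fast Gradient Sign Method (FGSM) is one well-known way to craft such changes. It moves each input feature a small step `epsilon` in the direction that most increases the model's loss. For a logistic-regression model with cross-entropy loss, the gradient with respect to the input has the closed form (p - y) * w, so the attack fits in a few lines. The weights and input below are made up. The same idea applies to deep networks, where the gradient is obtained by automatic differentiation.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x):
    """Probability of class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(g: float) -> int:
    return (g > 0) - (g < 0)


def fgsm(w, b, x, y, epsilon):
    """FGSM for logistic regression with cross-entropy loss.

    The gradient of the loss with respect to the input is (p - y) * w,
    so each feature moves by epsilon in the sign of that gradient.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + epsilon * sign(gi) for xi, gi in zip(x, grad)]


# Made-up model weights and a correctly classified input (true label 1).
w, b = [2.0, -3.0, 1.5], 0.0
x, y = [0.5, 0.1, 0.2], 1

x_adv = fgsm(w, b, x, y, epsilon=0.3)
print(round(predict_proba(w, b, x), 2))      # 0.73 -> class 1
print(round(predict_proba(w, b, x_adv), 2))  # 0.28 -> now class 0
```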

These attacks exploit the way AI models interpret data, often revealing blind spots or weaknesses in their design. Defending against adversarial attacks requires ongoing research and the implementation of techniques such as adversarial training, input validation, and model robustness testing.
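
Two of these defenses can be sketched without any ML library. `validate_input` rejects inputs outside the expected feature ranges. `robustness_rate` estimates how often a prediction survives small random perturbations. Both function names, the toy threshold model, and the ranges are hypothetical. Random noise is only a weak baseline: adversarial perturbations are chosen to be worst-case, so a model that passes this test can still fail against gradient-based attacks. Likewise, range checks cannot catch perturbations that stay within valid bounds.

```python
import random


def validate_input(x, lower, upper):
    """Reject inputs with the wrong shape or values outside expected ranges."""
    if not (len(x) == len(lower) == len(upper)):
        raise ValueError("unexpected number of features")
    for value, lo, hi in zip(x, lower, upper):
        if not lo <= value <= hi:
            raise ValueError(f"feature value {value} outside [{lo}, {hi}]")
    return x


def robustness_rate(model, x, epsilon, trials=200, seed=0):
    """Fraction of random perturbations (up to +/- epsilon per feature)
    that leave the model's prediction unchanged."""
    rng = random.Random(seed)
    baseline = model(x)
    stable = 0
    for _ in range(trials):
        noisy = [xi + rng.uniform(-epsilon, epsilon) for xi in x]
        stable += model(noisy) == baseline
    return stable / trials


def threshold_model(x):
    """Hypothetical classifier: class 1 if the feature sum exceeds 1.0."""
    return int(sum(x) > 1.0)


point = validate_input([0.9, 0.9], lower=[0.0, 0.0], upper=[1.0, 1.0])
print(robustness_rate(threshold_model, point, epsilon=0.1))        # 1.0
print(robustness_rate(threshold_model, [0.5, 0.52], epsilon=0.1))  # below 1.0: fragile
```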

Securing AI Supply Chains

The AI supply chain encompasses all components involved in developing, training, and deploying AI systems, including data sources, software libraries, hardware, and third-party services. Each element of the supply chain can introduce security risks if not properly managed.

For instance, using open-source libraries with known vulnerabilities or relying on untrusted data sources can compromise the integrity of the entire AI system. Organizations should conduct thorough vetting of suppliers, maintain an inventory of all components, and regularly update and patch software to mitigate supply chain risks.
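
A basic supply-chain control is to pin the expected SHA-256 digest of every third-party artifact (model weights, datasets, packages) in a manifest. The system then refuses to load anything that does not match. The sketch below uses only the standard library. The manifest format and file names are assumptions for illustration.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 65536) -> str:
    """Hash a file in chunks so large model weights need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifacts(manifest: dict, root: Path) -> list:
    """Check every file in a pinned manifest (file name -> expected SHA-256).

    Returns the names of artifacts that are missing or whose hash differs.
    """
    problems = []
    for name, expected in manifest.items():
        path = root / name
        if not path.is_file() or sha256_of(path) != expected:
            problems.append(name)
    return problems


# Illustrative run in a temporary directory with made-up file names.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "model.bin").write_bytes(b"trusted weights")
    # Pin the hash of the vetted file (normally recorded once, at approval time).
    manifest = {"model.bin": sha256_of(root / "model.bin"),
                "tokenizer.json": "0" * 64}
    print(verify_artifacts(manifest, root))  # ['tokenizer.json'] (missing)
    (root / "model.bin").write_bytes(b"tampered weights")
    print(verify_artifacts(manifest, root))  # ['model.bin', 'tokenizer.json']
```

Package managers offer similar pinning for dependencies. One example is pip's hash-checking mode (`--require-hashes`).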

Ethical Considerations in AI

Fairness and Bias in AI

One of the most pressing ethical concerns in artificial intelligence is the issue of fairness and bias. AI systems learn from data, and if that data reflects historical biases or societal inequalities, the resulting models can perpetuate or even amplify these problems. For example, an AI used in hiring might favor certain demographics if trained on biased recruitment data, or a facial recognition system might perform poorly on underrepresented groups.

To address these challenges, it is essential to carefully curate training datasets, regularly audit AI models for biased outcomes, and implement fairness-aware algorithms. Transparency in data collection and model design also helps ensure that AI systems treat all individuals and groups equitably.
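
A regular bias audit often starts by comparing outcome rates across groups. The sketch below computes per-group selection rates, the demographic parity difference, and the disparate impact ratio. A ratio below 0.8 is a common warning threshold, derived from the "four-fifths rule" in US employment guidance. The hiring data are made up. These metrics capture only one notion of fairness: others, such as equalized odds, compare error rates instead and can conflict with demographic parity.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs; returns rate per group."""
    totals, selected = defaultdict(int), defaultdict(int)
    for group, chosen in decisions:
        totals[group] += 1
        selected[group] += int(chosen)
    return {group: selected[group] / totals[group] for group in totals}


def fairness_report(decisions, threshold=0.8):
    rates = selection_rates(decisions)
    highest, lowest = max(rates.values()), min(rates.values())
    ratio = lowest / highest if highest else 1.0
    return {
        "rates": rates,
        # Demographic parity difference: gap between the highest and lowest rate.
        "parity_difference": highest - lowest,
        # Disparate impact ratio: lowest rate divided by highest rate.
        "impact_ratio": ratio,
        "flagged": ratio < threshold,
    }


# Made-up hiring outcomes: group A selected 40/100, group B 20/100.
decisions = ([("A", True)] * 40 + [("A", False)] * 60
             + [("B", True)] * 20 + [("B", False)] * 80)
report = fairness_report(decisions)
print(report["rates"])         # {'A': 0.4, 'B': 0.2}
print(report["impact_ratio"])  # 0.5
print(report["flagged"])       # True
```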

Transparency and Explainability

Transparency and explainability are crucial for building trust in AI systems. Many advanced AI models, especially those based on deep learning, are often described as "black boxes" because their decision-making processes are difficult to interpret. This lack of transparency can be problematic, especially in high-stakes applications such as healthcare, finance, or criminal justice, where understanding the rationale behind a decision is essential.

To improve explainability, developers can use techniques such as feature importance analysis, model visualization, and the creation of simpler surrogate models that approximate the behavior of complex systems. Providing clear documentation and user-friendly explanations of how AI systems work also supports transparency and accountability.
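
Permutation importance is one model-agnostic form of feature importance analysis. It shuffles one feature at a time and measures how much the model's accuracy drops. The sketch below treats the model as a black-box callable. The toy model and synthetic data are assumptions. Libraries such as scikit-learn provide a fuller implementation (`sklearn.inspection.permutation_importance`).

```python
import random


def accuracy(model, X, y):
    return sum(model(row) == label for row, label in zip(X, y)) / len(y)


def permutation_importance(model, X, y, n_repeats=10, seed=0):
    """Mean drop in accuracy when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Rebuild rows with feature j replaced by its shuffled values.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(baseline - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


def toy_model(row):
    """Hypothetical model that uses feature 0 and ignores feature 1."""
    return int(row[0] > 50)


data_rng = random.Random(1)
X = [[data_rng.uniform(0, 100), data_rng.uniform(0, 100)] for _ in range(200)]
y = [toy_model(row) for row in X]
print(permutation_importance(toy_model, X, y))  # large for feature 0, 0.0 for feature 1
```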

Accountability and Responsibility

As AI systems become more autonomous, questions arise about who is responsible for their actions and decisions. Accountability in AI involves clearly defining the roles and responsibilities of all stakeholders, including developers, operators, and end-users. It also means establishing mechanisms for redress when AI systems cause harm or make mistakes.

Organizations should implement governance frameworks that outline ethical guidelines, decision-making processes, and escalation procedures for addressing issues. Regular audits, impact assessments, and the involvement of multidisciplinary teams can help ensure that AI systems are developed and deployed responsibly.

Human Rights and AI

AI technologies have the potential to impact fundamental human rights, including privacy, freedom of expression, and non-discrimination. For instance, surveillance systems powered by AI can threaten privacy, while automated decision-making can affect access to services or opportunities.

To safeguard human rights, it is important to design AI systems that respect individual autonomy, obtain meaningful consent, and avoid unjust discrimination. Engaging with diverse stakeholders, including affected communities, ethicists, and legal experts, can help identify and mitigate potential risks to human rights.

Regulatory and Legal Frameworks

International Standards and Guidelines

As artificial intelligence becomes increasingly integrated into global society, the need for international standards and guidelines has grown. Organizations such as the International Organization for Standardization (ISO) and the Institute of Electrical and Electronics Engineers (IEEE) have developed frameworks to guide the ethical and secure development of AI systems. These standards address issues such as data privacy, transparency, accountability, and risk management, providing a common language and set of expectations for AI practitioners worldwide.

International guidelines, such as the OECD Principles on Artificial Intelligence, emphasize values like human-centeredness, fairness, and transparency. These principles encourage countries and organizations to adopt best practices that promote responsible AI innovation while minimizing potential harms. By adhering to international standards, organizations can ensure interoperability, foster trust, and facilitate cross-border collaboration in AI development.

National Regulations and Policies

Many countries are enacting their own regulations and policies to govern the use of AI. The European Union’s Artificial Intelligence Act is a prominent example, introducing a risk-based approach to AI regulation. It categorizes AI systems according to their potential impact on safety and fundamental rights, imposing stricter requirements on high-risk applications such as biometric identification or critical infrastructure management.

Other countries, including the United States, China, and Canada, are also developing national AI strategies and regulatory frameworks. These policies often address issues such as data protection, algorithmic transparency, and the ethical use of AI in sensitive sectors. National regulations play a crucial role in shaping the development and deployment of AI technologies within specific legal and cultural contexts.

Compliance and Enforcement

Ensuring compliance with regulatory and legal frameworks is essential for the responsible use of AI. Organizations must implement processes to monitor and document their AI systems’ development, deployment, and ongoing operation. This includes conducting regular risk assessments, maintaining detailed records of data sources and model decisions, and establishing clear lines of accountability.
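
Auditors find records more convincing when those records are tamper-evident. One lightweight approach is a hash chain: each log entry stores the hash of the previous entry, so editing or deleting an earlier record breaks verification. The `AuditLog` class and the record fields below are hypothetical. A hash chain only proves integrity if the latest hash is also stored somewhere the log's writer cannot alter, because anyone able to rewrite the whole log could recompute every hash.

```python
import hashlib
import json
import time


class AuditLog:
    """Append-only, hash-chained log: each entry commits to the previous one."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(body: dict) -> str:
        payload = json.dumps(body, sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

    def append(self, record: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {"timestamp": time.time(), "record": dict(record), "prev_hash": prev_hash}
        entry = dict(body, hash=self._digest(body))
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; an edited or removed earlier entry breaks the chain."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: entry[k] for k in ("timestamp", "record", "prev_hash")}
            if entry["prev_hash"] != prev_hash or self._digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


# Hypothetical model-decision records.
log = AuditLog()
log.append({"model": "credit-scorer-v3", "input_id": "app-1042", "decision": "declined"})
log.append({"model": "credit-scorer-v3", "input_id": "app-1043", "decision": "approved"})
print(log.verify())  # True
log.entries[0]["record"]["decision"] = "approved"  # simulated tampering
print(log.verify())  # False
```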

Enforcement mechanisms vary by jurisdiction but may include audits, fines, or restrictions on the use of non-compliant AI systems. Regulatory bodies and independent oversight organizations are increasingly involved in monitoring AI applications and investigating potential violations. Proactive compliance not only helps organizations avoid legal penalties but also builds public trust and supports the long-term sustainability of AI initiatives.

Best Practices for Secure and Ethical AI

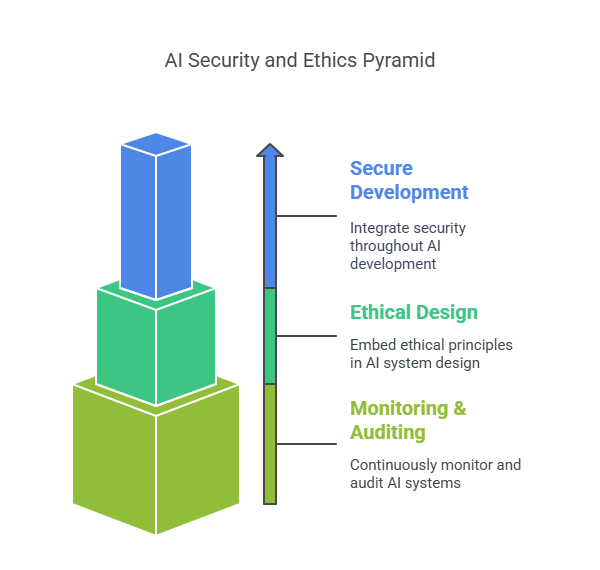

Secure AI Development Lifecycle

Building secure AI systems starts with integrating security at every stage of the development lifecycle. This means considering security from the initial design phase through to deployment and ongoing maintenance. Developers should conduct threat modeling to identify potential vulnerabilities early, use secure coding practices, and regularly update software to address new threats. Data used for training and testing should be protected through encryption and access controls, and all third-party components should be vetted for security risks. Continuous monitoring and incident response plans are also essential to quickly detect and mitigate any security breaches that may occur after deployment.

Ethical AI Design Principles

Ethical considerations must be embedded into the design of AI systems from the outset. This involves defining clear ethical guidelines that align with organizational values and societal expectations. Key principles include fairness, transparency, accountability, and respect for user privacy. Developers should strive to minimize bias in data and algorithms, provide clear explanations for AI decisions, and ensure that users can understand and challenge outcomes when necessary. Engaging diverse teams and stakeholders in the design process helps identify potential ethical risks and ensures that AI systems serve the interests of all users.

Monitoring and Auditing AI Systems

Ongoing monitoring and auditing are critical for maintaining the security and ethical integrity of AI systems. Regular audits help identify and address issues such as model drift, bias, or unexpected behavior that may arise as AI systems interact with real-world data. Automated monitoring tools can track system performance, detect anomalies, and flag potential security incidents in real time. Transparent documentation of model development, data sources, and decision-making processes supports accountability and facilitates external reviews. By establishing clear audit trails and feedback mechanisms, organizations can continuously improve their AI systems and respond effectively to emerging risks.
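
Teams often monitor model drift by comparing the distribution of live inputs or scores with the distribution seen at training time. The Population Stability Index (PSI) is one such measure: PSI = sum over bins of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are the reference and live proportions in bin i. A commonly cited rule of thumb treats values below 0.1 as stable and values above 0.25 as significant drift. These thresholds are conventions rather than standards. The bin count and synthetic data below are assumptions.

```python
import math
import random


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between reference and live samples.

    Equal-width bins span the reference range; live values outside it are
    clamped into the end bins, and eps avoids log(0) for empty bins.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Synthetic model scores: training-time reference vs. two live windows.
rng = random.Random(42)
reference = [rng.gauss(0, 1) for _ in range(5000)]
stable_window = [rng.gauss(0, 1) for _ in range(5000)]
shifted_window = [rng.gauss(1, 1) for _ in range(5000)]
print(round(psi(reference, stable_window), 3))   # well below 0.1
print(round(psi(reference, shifted_window), 3))  # well above 0.25
```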

Case Studies

Security Breaches in AI Applications

Real-world incidents have demonstrated that even the most advanced AI systems are not immune to security breaches. For example, in the financial sector, AI-powered fraud detection systems have been targeted by adversarial attacks, where cybercriminals manipulate transaction data to evade detection. In another case, a healthcare AI system was compromised when attackers gained access to sensitive patient data used for model training, leading to a significant privacy breach and regulatory scrutiny.

These incidents highlight the importance of robust security measures, such as data encryption, access controls, and regular vulnerability assessments. They also underscore the need for organizations to remain vigilant and proactive in identifying and mitigating new threats as AI technologies evolve.

Ethical Dilemmas in Real-World AI Deployments

Ethical dilemmas often arise when AI systems are deployed in complex, real-world environments. One notable example is the use of facial recognition technology by law enforcement agencies. While these systems can enhance public safety, they have also been criticized for potential biases and the risk of wrongful identification, particularly among minority groups. This has led to public debates and, in some regions, temporary bans or strict regulations on the use of such technologies.

Another case involves AI-driven recruitment tools that inadvertently perpetuated gender or racial biases present in historical hiring data. These tools, intended to streamline the hiring process, ended up reinforcing existing inequalities, prompting organizations to reevaluate their data sources and implement fairness checks.

These case studies illustrate the critical need for transparency, accountability, and ongoing ethical oversight in AI deployments. Engaging diverse stakeholders, conducting regular impact assessments, and being willing to pause or modify AI systems in response to ethical concerns are essential steps for responsible AI adoption.

Future Trends and Challenges

Emerging Security Threats

As artificial intelligence continues to advance, new security threats are emerging that require constant vigilance and adaptation. One significant trend is the rise of more sophisticated adversarial attacks, where malicious actors exploit subtle weaknesses in AI models to manipulate outcomes. For example, attackers may use generative AI to create highly realistic deepfakes or to craft adversarial inputs that deceive image recognition systems. Additionally, as AI becomes more integrated into critical infrastructure—such as energy grids, healthcare, and transportation—the potential impact of security breaches grows, making robust protection measures even more essential.

Another emerging threat is the risk of data poisoning, where attackers intentionally introduce misleading or harmful data into training datasets. This can compromise the integrity of AI models and lead to incorrect or dangerous decisions. As AI systems become more autonomous and interconnected, the attack surface expands, requiring organizations to adopt advanced security strategies, such as continuous monitoring, anomaly detection, and rapid incident response.
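
Anomaly detection on training data can catch the crudest poisoning attempts. The sketch below flags samples whose feature value is an outlier within its own label. It uses the modified z-score based on the median absolute deviation (MAD), which a few extreme points cannot easily distort; 3.5 is a commonly used cutoff. The data and threshold are illustrative. The filter only works when poisoned points are statistical outliers and a minority of their class. Carefully crafted poisons that resemble clean data will pass it.

```python
from statistics import median


def robust_z_scores(values):
    """Modified z-scores based on the median absolute deviation (MAD)."""
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        return [0.0] * len(values)
    return [0.6745 * (v - med) / mad for v in values]


def filter_outliers(samples, threshold=3.5):
    """Split (feature, label) samples into kept and flagged-for-review,
    scoring each label's feature values separately."""
    by_label = {}
    for sample in samples:
        by_label.setdefault(sample[1], []).append(sample)
    kept, flagged = [], []
    for group in by_label.values():
        scores = robust_z_scores([x for x, _ in group])
        for sample, z in zip(group, scores):
            if abs(z) > threshold:
                flagged.append(sample)
            else:
                kept.append(sample)
    return kept, flagged


# Made-up training set with one injected point far from the rest of class 0.
training = [(1.0, 0), (1.5, 0), (2.0, 0), (2.5, 0), (3.0, 0), (1.8, 0),
            (2.2, 0), (2.7, 0), (10.0, 0),
            (7.0, 1), (8.0, 1), (9.0, 1), (8.5, 1), (7.5, 1)]
kept, flagged = filter_outliers(training)
print(flagged)  # [(10.0, 0)]
```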

Evolving Ethical Issues

The ethical landscape of AI is also evolving, presenting new challenges for developers, policymakers, and society at large. As AI systems become more capable, questions about autonomy, consent, and the boundaries of machine decision-making become increasingly complex. For instance, the use of AI in surveillance and predictive policing raises concerns about privacy, civil liberties, and potential misuse by authorities.

Bias and fairness remain ongoing challenges, especially as AI is deployed in diverse cultural and social contexts. Ensuring that AI systems respect local values and do not perpetuate discrimination requires continuous evaluation and adaptation. Moreover, the growing use of AI-generated content, such as text, images, and videos, introduces new ethical dilemmas related to misinformation, intellectual property, and the authenticity of digital media.

The Role of AI Governance

Effective governance will play a crucial role in addressing the future challenges of AI security and ethics. AI governance refers to the frameworks, policies, and processes that guide the responsible development and use of artificial intelligence. This includes establishing clear standards for transparency, accountability, and risk management, as well as mechanisms for stakeholder engagement and public oversight.

As AI technologies evolve, governance structures must be flexible and adaptive, capable of responding to new risks and societal expectations. International collaboration will be essential to harmonize standards and address cross-border challenges, such as data sharing and global security threats. Organizations should invest in ongoing education, ethical training, and multidisciplinary teams to ensure that governance keeps pace with technological innovation.

Conclusion

Key Takeaways

The rapid advancement of artificial intelligence brings both remarkable opportunities and significant responsibilities. As AI systems become more integrated into daily life and critical infrastructure, the importance of addressing security and ethical considerations cannot be overstated. Key takeaways from this discussion include the necessity of robust data protection, the need to guard against adversarial and supply chain attacks, and the importance of designing AI systems that are fair, transparent, and accountable.

Organizations must recognize that security and ethics are not optional add-ons but essential components of trustworthy AI. Proactive risk management, regular audits, and adherence to both international standards and local regulations are vital for minimizing harm and building public trust. Furthermore, involving diverse stakeholders and multidisciplinary teams helps ensure that AI systems are developed with a broad perspective, reducing the risk of unintended consequences.

Recommendations for Stakeholders

For organizations and developers, it is crucial to embed security and ethical principles throughout the AI lifecycle—from data collection and model training to deployment and ongoing monitoring. This includes investing in secure infrastructure, conducting regular impact assessments, and fostering a culture of transparency and accountability.

Policymakers and regulators should continue to develop and refine legal frameworks that keep pace with technological innovation, ensuring that AI systems are used responsibly and for the benefit of society. International cooperation and harmonization of standards will be key to addressing cross-border challenges and promoting global best practices.

For end-users and the general public, staying informed about the capabilities and limitations of AI systems is essential. Engaging in public dialogue and advocating for responsible AI use can help shape the future of technology in a way that aligns with societal values and protects fundamental rights.

References

The following sources provide further information, best practices, and in-depth analysis of the topics discussed in this guide.

1. International Organization for Standardization (ISO). ISO/IEC JTC 1/SC 42 Artificial Intelligence. https://www.iso.org/committee/6794475.html

2. Organisation for Economic Co-operation and Development (OECD). OECD Principles on Artificial Intelligence. https://oecd.ai/en/ai-principles

3. European Commission. Proposal for a Regulation Laying Down Harmonised Rules on Artificial Intelligence (Artificial Intelligence Act). https://artificial-intelligence-act.eu/

4. Institute of Electrical and Electronics Engineers (IEEE). Ethically Aligned Design: A Vision for Prioritizing Human Well-being with Autonomous and Intelligent Systems. https://ethicsinaction.ieee.org/

5. General Data Protection Regulation (GDPR). Regulation (EU) 2016/679 of the European Parliament and of the Council. https://gdpr.eu/

6. Future of Life Institute. AI Policy – Principles and Recommendations. https://futureoflife.org/ai-policy/

7. Jobin, A., Ienca, M., & Vayena, E. (2019). The global landscape of AI ethics guidelines. Nature Machine Intelligence, 1(9), 389–399. https://www.nature.com/articles/s42256-019-0088-2

8. Brundage, M., et al. (2018). The Malicious Use of Artificial Intelligence: Forecasting, Prevention, and Mitigation. https://arxiv.org/abs/1802.07228

9. Bostrom, N. (2014). Superintelligence: Paths, Dangers, Strategies. Oxford University Press.

10. Russell, S., & Norvig, P. (2020). Artificial Intelligence: A Modern Approach (4th Edition). Pearson.
