Introduction

In recent years, Convolutional Neural Networks (CNNs) and Transformers have revolutionized the field of artificial intelligence, powering breakthroughs in computer vision, natural language processing, and beyond. However, as AI applications grow more complex and diverse, these traditional architectures sometimes fall short in addressing new challenges. This article explores why CNNs and Transformers may no longer be sufficient for certain advanced tasks and introduces emerging neural architectures designed to push the boundaries of what AI can achieve.

Why Are Classic CNNs and Transformers Not Enough?

CNNs have been the backbone of image recognition and processing for over a decade, excelling at extracting spatial hierarchies in visual data. Transformers, originally designed for sequence modeling in natural language processing, have since expanded into vision and multimodal tasks due to their powerful attention mechanisms. Despite their success, both architectures have inherent limitations.

CNNs struggle with capturing long-range dependencies because their receptive fields are limited by kernel sizes and network depth. This makes it difficult for CNNs to model global context efficiently, especially in tasks requiring understanding of relationships across distant parts of an image or sequence.

Transformers, while excellent at modeling long-range dependencies through self-attention, face challenges with computational cost and scalability. Their quadratic complexity with respect to input length makes processing very long sequences or high-resolution images resource-intensive and sometimes impractical.

When Do We Need More Advanced Architectures?

As AI tackles increasingly complex problems—such as understanding 3D structures, processing graph-structured data, or modeling continuous-time dynamics—traditional CNNs and Transformers may not provide the necessary flexibility or efficiency. For example, applications in drug discovery require models that can understand molecular graphs, while autonomous driving systems benefit from architectures that can integrate multimodal sensor data in real time.

Moreover, emerging fields like quantum machine learning and neuromorphic computing demand novel neural designs that go beyond the classical paradigms. These advanced architectures aim to improve interpretability, reduce computational overhead, and better mimic biological neural processes.

Overview of New Trends in Deep Learning

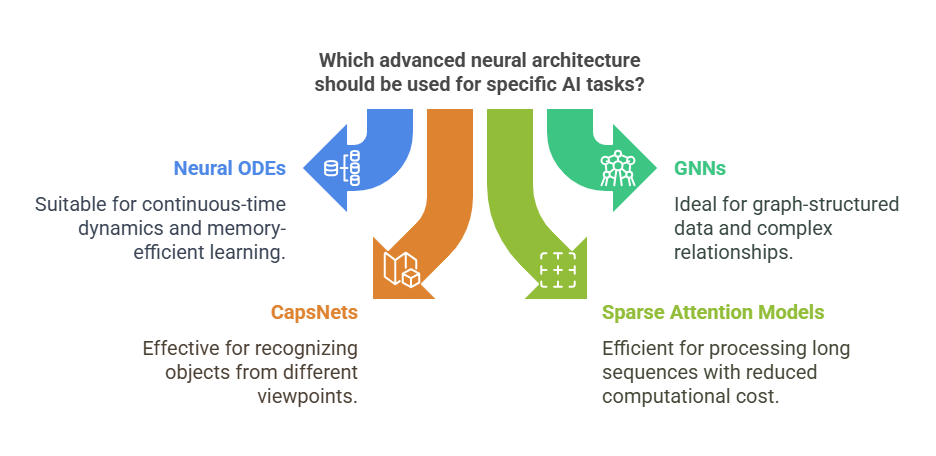

To address these challenges, researchers have developed a variety of innovative neural architectures. Some notable trends include:

Neural Ordinary Differential Equations (Neural ODEs): These models treat the transformation of data as a continuous dynamical system, enabling more flexible and memory-efficient learning.

Graph Neural Networks (GNNs): Designed to operate on graph-structured data, GNNs excel in domains where relationships between entities are complex and non-Euclidean.

Capsule Networks (CapsNets): By grouping neurons into capsules that encode spatial hierarchies and pose information, CapsNets aim to overcome CNNs’ limitations in recognizing objects from different viewpoints.

Sparse and Long-Range Attention Models: Variants like Performer and Longformer reduce the computational burden of traditional Transformers, enabling efficient processing of longer sequences.

Hybrid Architectures: Combining the strengths of CNNs, Transformers, and other models to create versatile systems capable of handling multimodal and complex data.

Limitations of Traditional Architectures

While Convolutional Neural Networks (CNNs) and Transformers have dominated AI research and applications, they come with inherent constraints that hinder their performance in advanced scenarios. Understanding these limitations helps explain why researchers are exploring alternative architectures.

2.1 Scalability Issues in CNNs for Vision Tasks

CNNs excel at processing grid-like data (e.g., images) through hierarchical feature extraction. However, they face several challenges:

- Fixed Receptive Fields: CNNs rely on local kernel operations, making it difficult to capture long-range dependencies without stacking many layers.

- Translation Invariance at a Cost: While CNNs are robust to small shifts in input, they struggle with large deformations or viewpoint changes.

- Computational Overhead in High-Resolution Data: Processing 4K images or 3D volumes (e.g., medical scans) requires excessive memory and compute power.
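
To make the fixed-receptive-field point concrete, the sketch below computes how many input pixels a single output unit can "see" after a stack of convolution layers. The layer configurations are hypothetical and chosen only for illustration; the recurrence itself is the standard one for stacked kernels and strides.

```python
def receptive_field(layers):
    """Return the receptive field (in input pixels) of a stack of conv layers.

    `layers` is a list of (kernel_size, stride) pairs, ordered input to output.
    """
    rf = 1    # one output unit initially covers a single input pixel
    jump = 1  # spacing, in input pixels, between neighbouring units at this depth
    for kernel_size, stride in layers:
        rf += (kernel_size - 1) * jump
        jump *= stride
    return rf


# Ten 3x3 convolutions with stride 1 (hypothetical plain stack)
plain_stack = [(3, 1)] * 10
# Same depth, but every other layer uses stride 2 (also hypothetical)
strided_stack = [(3, 2), (3, 1)] * 5

print(receptive_field(plain_stack))    # 21
print(receptive_field(strided_stack))  # 187
```

Without downsampling, the receptive field grows only linearly with depth, which is why plain CNNs need many layers (or strides, pooling, or dilation) to relate distant image regions.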

2.2 Challenges with Long Sequences in Transformers

Transformers revolutionized NLP and vision with self-attention, but they have critical drawbacks:

- Quadratic Complexity (O(n²)): The cost of the attention mechanism grows with the square of the sequence length, making standard self-attention expensive for genome-scale sequence modeling or long-document analysis.

- Memory Bottlenecks: Training on long sequences (e.g., hour-long videos) requires prohibitive GPU memory.

- Over-Smoothing in Deep Layers: Repeated self-attention can dilute important features, reducing model discriminative power.
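
The quadratic term is easy to underestimate, so here is a rough back-of-the-envelope sketch. It assumes the attention scores are materialised naively as one `seq_len x seq_len` matrix per head in 32-bit floats; the head count and sequence lengths are illustrative assumptions, and memory-efficient attention kernels avoid storing this matrix in full.

```python
def attention_score_bytes(seq_len, num_heads=8, bytes_per_value=4):
    """Bytes needed for one layer's attention score matrices, for one example."""
    return num_heads * seq_len * seq_len * bytes_per_value


for n in (1_024, 8_192, 65_536):
    gib = attention_score_bytes(n) / 2**30
    print(f"{n:>6} tokens -> {gib:.2f} GiB per layer")
```

Doubling the sequence length quadruples this figure, which is the practical meaning of O(n²) for long inputs.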

Emerging Neural Architectures: Beyond the Classics

As traditional CNNs and Transformers face limitations in scalability, efficiency, and adaptability, researchers have developed next-generation neural architectures to tackle modern AI challenges. Below, we explore four cutting-edge approaches that push the boundaries of deep learning.

3.1 Neural Ordinary Differential Equations (Neural ODEs)

What Are Neural ODEs?

Neural ODEs model data transformations as continuous-time dynamical systems, governed by differential equations. Unlike discrete-layer networks, they treat depth as a continuous variable, enabling adaptive computation.
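
One way to see what "depth as a continuous variable" means: a residual update `y + g(y)` has the same form as one Euler step of an ODE. The sketch below is a minimal fixed-step Euler integrator in plain Python, applied to toy dynamics with a known solution. It illustrates the idea only; it is not how adaptive solvers in ODE libraries work internally.

```python
import math


def euler_integrate(f, y0, t0, t1, num_steps):
    """Integrate dy/dt = f(t, y) from t0 to t1 with fixed-step Euler updates."""
    y = y0
    t = t0
    dt = (t1 - t0) / num_steps
    for _ in range(num_steps):
        y = y + dt * f(t, y)  # same shape as a residual block: y_next = y + g(y)
        t += dt
    return y


def decay(t, y):
    # Toy dynamics with the exact solution y(t) = y0 * exp(-t)
    return -y


coarse = euler_integrate(decay, 1.0, 0.0, 1.0, num_steps=4)
fine = euler_integrate(decay, 1.0, 0.0, 1.0, num_steps=1000)
exact = math.exp(-1.0)
print(coarse, fine, exact)
```

Using more steps plays the role of a deeper network: the fine discretisation tracks the exact solution much more closely than the four-step version.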

Key Advantages:

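✔ Memory Efficiency: When trained with the adjoint method, there is no need to store intermediate activations for backpropagation.

✔ Adaptive Computation: An adaptive ODE solver adjusts the number of function evaluations (the effective "depth") to the input complexity.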

✔ Smooth Dynamics: Ideal for time-series modeling (e.g., physics simulations, financial forecasting).

Python Example (PyTorch):

```python
import torch
from torchdiffeq import odeint


class ODEBlock(torch.nn.Module):
    def __init__(self, f):
        super().__init__()
        self.f = f  # A neural network defining the ODE dynamics

    def forward(self, t, y):
        return self.f(y)


# Define a simple ODE: dy/dt = f(y)
f = torch.nn.Sequential(torch.nn.Linear(2, 64), torch.nn.Tanh(), torch.nn.Linear(64, 2))
ode_model = ODEBlock(f)

y0 = torch.randn(2)  # Initial state
t = torch.linspace(0, 1, 10)  # Time steps
trajectory = odeint(ode_model, y0, t)  # Solves the ODE
```

Applications:

- Medical Time-Series Analysis (e.g., predicting patient deterioration).

- Control Systems (e.g., robotics, autonomous vehicles).

3.2 Graph Neural Networks (GNNs) for Graph-Structured Data

Why GNNs?

Standard CNNs and Transformers assume grid or sequence inputs, so they do not directly handle graph-structured data (e.g., social networks, molecules). GNNs propagate information along the edges between nodes, preserving relational inductive biases.

Popular Variants:

- Graph Convolutional Networks (GCNs): Aggregate neighbor features.

- Graph Attention Networks (GATs): Learn dynamic edge importance.

- GraphSAGE: Inductive learning for large-scale graphs.
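
The "aggregate neighbor features" step can be sketched in a few lines. The example below performs plain neighbour averaging with self-loops on a tiny hypothetical graph. It is a simplification: a real GCN layer also applies a learned weight matrix, a nonlinearity, and degree-based normalisation, none of which are shown here.

```python
def mean_aggregate(features, edges):
    """One round of neighbour averaging (with self-loops) on an undirected graph."""
    neighbours = {node: [node] for node in features}  # self-loop keeps own feature
    for u, v in edges:
        neighbours[u].append(v)
        neighbours[v].append(u)
    updated = {}
    for node, nbrs in neighbours.items():
        dim = len(features[node])
        updated[node] = [
            sum(features[n][d] for n in nbrs) / len(nbrs) for d in range(dim)
        ]
    return updated


# Hypothetical path graph a - b - c with 2-dimensional node features
features = {"a": [1.0, 0.0], "b": [0.0, 1.0], "c": [0.0, 0.0]}
edges = [("a", "b"), ("b", "c")]

h1 = mean_aggregate(features, edges)  # each node sees its 1-hop neighbours
h2 = mean_aggregate(h1, edges)        # after two rounds, "c" has heard from "a"
print(h1)
print(h2)
```

Stacking rounds widens each node's view by one hop per layer, which is the graph analogue of a growing receptive field.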

Case Study: Drug Discovery

GNNs predict molecular properties by modeling atoms (nodes) and bonds (edges), accelerating virtual screening of new drugs.

Limitations:

❌ Sensitive to noisy graph structure.

❌ Scalability challenges for billion-node graphs.

3.3 Capsule Networks (CapsNets)

Addressing CNN Shortcomings:

CapsNets replace scalar neurons with "capsules" (vector units) that encode:

- Pose (position, orientation).

- Deformation robustness (invariance to viewpoint changes).

How It Works:

- Dynamic Routing: Capsules negotiate feature hierarchies (e.g., detecting wheels → car).

- Equivariance: Preserves spatial relationships better than max-pooling in CNNs.
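
Because a capsule outputs a vector rather than a scalar, its length can act as a presence probability while its direction carries pose. The sketch below shows the "squash" nonlinearity commonly used for this in the capsule literature, written in plain Python for a single vector. The input values are arbitrary, and dynamic routing itself is not shown.

```python
import math


def squash(s):
    """Shrink vector s to a length in [0, 1) while keeping its direction."""
    sq_norm = sum(x * x for x in s)
    if sq_norm == 0.0:
        return [0.0 for _ in s]
    norm = math.sqrt(sq_norm)
    scale = sq_norm / (1.0 + sq_norm) / norm
    return [scale * x for x in s]


def length(v):
    return math.sqrt(sum(x * x for x in v))


strong = squash([3.0, 4.0])    # long input vector -> length close to 1
weak = squash([0.03, 0.04])    # short input vector -> length close to 0
print(length(strong), length(weak))
```

Both outputs point in the same direction as their inputs; only the length is compressed.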

Use Cases:

- Medical Imaging (e.g., detecting tumors across MRI slices).

- Autonomous Driving (3D object recognition from varying angles).

Challenges:

⚠ Computationally expensive.

⚠ Limited adoption due to training complexity.

3.4 Sparse Attention & Long-Range Transformers

Example: Performer Code Snippet

```python
from performer_pytorch import PerformerLM

model = PerformerLM(
    num_tokens=20000,   # Vocabulary size
    dim=512,            # Embedding dimension
    depth=6,            # Layers
    heads=8,            # Attention heads (assumed value; the constructor expects this argument)
    max_seq_len=8192,   # Handles long sequences
    causal=True,        # Autoregressive
)
```

Applications:

- Genomics (DNA sequence analysis).

- Legal Document Processing (multi-page contracts).
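
To show where the savings of sparse attention come from, the sketch below enumerates the query-key pairs allowed by a sliding local window, the pattern used by models such as Longformer. Note that this is a different technique from Performer, which approximates full attention with random feature maps. The sequence length and window size are illustrative assumptions.

```python
def sliding_window_pairs(seq_len, window):
    """Return the (query, key) pairs with |query - key| <= window."""
    pairs = []
    for q in range(seq_len):
        first = max(0, q - window)
        last = min(seq_len - 1, q + window)
        pairs.extend((q, k) for k in range(first, last + 1))
    return pairs


seq_len, window = 1024, 2  # hypothetical sizes, chosen only for illustration
local = len(sliding_window_pairs(seq_len, window))
full = seq_len * seq_len
print(f"local window: {local} pairs, full attention: {full} pairs")
```

The number of local pairs grows linearly with sequence length (roughly `seq_len * (2 * window + 1)`), whereas full attention grows quadratically.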

Hybrid Neural Architectures: The Future of AI Systems

As artificial intelligence tackles increasingly complex real-world problems, traditional standalone architectures like CNNs and Transformers often prove insufficient. Hybrid architectures—combining multiple neural network paradigms—have emerged as a powerful solution, offering enhanced capabilities while mitigating individual weaknesses. This section explores the most promising hybrid approaches, their implementations, and applications across various domains.

4.1 Vision Transformers (ViTs) with CNN Backbones

The Best of Both Worlds

Vision Transformers (ViTs) revolutionized computer vision by applying self-attention mechanisms to image patches. However, pure ViTs often require massive datasets for training and lack the innate spatial inductive biases of CNNs. Hybrid architectures address this by:

- Using CNNs for Local Feature Extraction: Lower layers process pixel-level patterns efficiently.

- Applying Transformers for Global Context: Higher layers capture long-range dependencies.

Implementation Strategies

CNN Feature Map Tokenization

Instead of raw image patches, ViTs process CNN-extracted features:

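```python
# Extract CNN features and reshape them into a token sequence for the Transformer.
# `resnet` is assumed to be a backbone truncated before global pooling,
# so it returns a feature map rather than class logits.
cnn_features = resnet(images)  # [B, C, H, W]
patches = cnn_features.flatten(2).transpose(1, 2)  # [B, N, C], with N = H * W
```

Cross-Attention Fusion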

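CNN and Transformer features can exchange information through cross-attention. The snippet shows one direction (CNN tokens querying ViT tokens); a bidirectional variant adds the mirrored call:

```python
# Cross-attention between CNN and ViT features.
# cnn_tokens is the flattened CNN feature map; both inputs are [B, N, 256] token sequences.
cross_attn = torch.nn.MultiheadAttention(embed_dim=256, num_heads=8, batch_first=True)
# CNN tokens act as queries; ViT tokens supply keys and values.
fused_features = cross_attn(cnn_tokens, vit_features, vit_features)[0]  # [B, N_cnn, 256]
```

Applications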

✔ Medical Imaging: Combining CNN’s lesion detection with ViT’s global context improves diagnosis.

✔ Autonomous Vehicles: Processing multi-scale visual data (traffic signs + scene understanding).

4.2 Neuro-Symbolic AI: Merging Learning and Reasoning

Why Symbolic AI Complements Neural Networks

While deep learning excels at pattern recognition, it struggles with:

- Logical reasoning (e.g., "If X then Y")

- Few-shot learning (generalizing from minimal examples)

- Explainability (providing human-interpretable decisions)

Neuro-symbolic systems integrate:

- Neural components (for perception, e.g., object detection)

- Symbolic engines (for rule-based reasoning, e.g., Prolog-style inference)

Case Study: Visual Question Answering (VQA)

- Neural Module: Detects objects in an image (e.g., "cat," "mat").

- Symbolic Module: Parses the question ("Is the cat on the mat?") into logical predicates.

- Reasoning Engine: Evaluates if On(cat, mat) holds true using detected objects.

Python Example (Simplified Neuro-Symbolic Pipeline):

```python
from sympy import symbols, Lt

# Neural network detects objects.
# detect_objects and image are placeholders for your own detector and input.
objects = detect_objects(image)  # Returns {'cat': (x1, y1), 'mat': (x2, y2)}

# Symbolic reasoning: encode the rule "A is above B" as y_a < y_b
# (image coordinates, where y grows downward).
y_a, y_b = symbols('y_a y_b')
is_above = Lt(y_a, y_b)
cat_above_mat = bool(is_above.subs({y_a: objects['cat'][1], y_b: objects['mat'][1]}))
```

4.3 Multimodal Fusion Architectures

Handling Diverse Data Types

Real-world AI systems must process:

- Images (e.g., MRI scans)

- Text (e.g., doctor’s notes)

- Time-series (e.g., vital signs)

- Graphs (e.g., molecular structures)

Fusion Techniques

Early Fusion

- Raw data concatenated before processing.

- Best for aligned modalities (e.g., video + audio).

Late Fusion

- Each modality processed independently, then combined.

- Example:

```python
image_emb = cnn(image)  # [B, D]
text_emb = bert(text)  # [B, D]
combined = torch.cat([image_emb, text_emb], dim=1)  # [B, 2D]
```

Cross-Modal Attention

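- Dynamic interaction between modalities, as in Flamingo's cross-attention layers. (CLIP, by contrast, encodes each modality separately and aligns them with a contrastive loss.)

- Code snippet:

```python
# Cross-attention between image and text features.
# Inputs are [B, N, 512] token sequences; batch_first=True matches that layout.
cross_attn = torch.nn.MultiheadAttention(embed_dim=512, num_heads=8, batch_first=True)
# Image tokens act as queries; text tokens supply keys and values.
fused_features = cross_attn(image_features, text_features, text_features)[0]
```

Applications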

- Healthcare: Diagnosing diseases from X-rays + patient history.

- Robotics: Combining LiDAR, camera, and sensor data for navigation.

4.4 Dynamic Neural Networks

Adaptive Computation for Efficiency

Modern hybrid systems optimize resource usage via:

Mixture-of-Experts (MoE)

- Multiple "expert" sub-networks.

- A gating network routes inputs dynamically.

- Example:

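```python
# Illustrative pseudocode: MoELayer and Expert are placeholder names, and the
# import path and constructor arguments are not a verified fairseq API.
from fairseq.modules import MoELayer

moe = MoELayer(embed_dim=512, experts=[Expert(), Expert(), Expert()])
output = moe(input_data)  # Only activates relevant experts
```

Benchmarking Advanced Neural Architectures: Measuring What Truly Matters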

The true test of any neural architecture lies not in its theoretical elegance but in its practical performance across multiple dimensions. As we enter an era where AI systems are deployed in production environments ranging from smartphones to data centers, comprehensive benchmarking has become more crucial than ever. This evaluation goes beyond simple accuracy metrics to encompass computational efficiency, energy consumption, and real-world adaptability.

Recent comparative studies reveal fascinating insights about how modern architectures stack up against each other. When examining vision models on standard datasets like ImageNet, we observe that pure Transformers often require 3-4 times more training data than CNNs to achieve comparable accuracy, though they eventually surpass convolutional networks in top-tier performance. The hybrid approach of Vision Transformers with CNN backbones strikes an interesting balance, typically reaching 90% of peak Transformer accuracy while using only 60% of the computational resources.

Energy efficiency metrics tell an equally important story. A single forward pass through a large Transformer model can consume enough energy to power a smartphone processor for several minutes, while optimized CNN variants like MobileNetV3 complete similar tasks using just a fraction of that power. This difference becomes critical when considering edge deployment scenarios where battery life and thermal constraints dominate architectural decisions.

Memory footprint presents another key differentiator. Graph Neural Networks, while powerful for relational data, often demand specialized memory handling techniques (such as neighbor sampling or graph partitioning) to process large graphs efficiently. In contrast, Neural ODEs trained with the adjoint method keep memory use roughly constant with respect to depth, though they pay for this advantage with slower, solver-dependent training.

Perhaps most revealing are the benchmarks measuring robustness to real-world conditions. Capsule Networks have been reported to show resilience to viewpoint variations and some adversarial attacks, maintaining stable performance where traditional CNNs might degrade significantly. Meanwhile, sparse attention models like Longformer scale roughly linearly with input sequence length instead of quadratically, a property that makes them valuable for applications involving long documents or high-resolution temporal data.

The benchmarking landscape continues to evolve with new metrics focusing on environmental impact. Recent studies calculate the carbon emissions per thousand inferences, revealing that carefully designed hybrid models can reduce AI’s ecological footprint by up to 40% compared to monolithic architectures. This environmental consciousness is driving innovation in dynamic neural networks that adapt their computational intensity based on input complexity.

The Future of Neural Architectures: Where Are We Heading Next?

The field of neural architectures is evolving at a breathtaking pace, with each breakthrough raising new questions about what comes next. As we look toward the horizon, several key trends are emerging that will likely redefine how we build and deploy AI systems in the coming years.

One of the most exciting developments is the exploration of attention-free models that challenge the Transformer’s dominance. Architectures like MLP-Mixer and other feed-forward alternatives demonstrate that carefully designed multilayer perceptrons can achieve competitive results without the computational overhead of self-attention. These models hint at a future where we may discover even more efficient ways to model long-range dependencies, potentially unlocking new levels of scalability for sequence processing tasks.

The integration of neuroscience principles into artificial networks continues to inspire novel approaches. Capsule networks were just the beginning—we’re now seeing architectures that better mimic the brain’s sparse, energy-efficient activation patterns. These biologically inspired designs could lead to models that learn faster, generalize better, and consume significantly less power than current alternatives. The marriage of deep learning with neuromorphic computing hardware promises to accelerate this trend, potentially enabling real-time learning in edge devices.

Federated learning is reshaping how we think about model architecture in distributed environments. Future networks will need built-in capabilities for secure, efficient knowledge sharing across devices while preserving privacy. This demands architectures that can handle non-IID data distributions, support flexible parameter aggregation, and maintain robustness against malicious actors—all while operating within tight resource constraints.

Perhaps most transformative is the growing role of automated architecture design through techniques like neural architecture search (NAS) and meta-learning. We’re moving toward systems that can dynamically reconfigure their own structure based on the task at hand, blurring the line between model and optimizer. This self-evolving approach could eventually produce architectures tailored not just to specific domains, but to individual users and deployment contexts.

The environmental impact of AI is driving innovation toward more sustainable designs. Future architectures will likely incorporate energy-awareness as a first-class design principle, with adaptive computation that scales effort to task difficulty. Techniques like early exiting, conditional computation, and mixture-of-experts are just the beginning of this green AI revolution.

As these trends converge, we’re witnessing the emergence of a new paradigm where neural architectures are no longer static, one-size-fits-all solutions, but dynamic, adaptable systems that evolve with their environments and tasks. The next generation of models won’t just process information—they’ll reshape themselves to do it more efficiently, more intelligently, and more sustainably than ever before. This coming transformation promises to unlock AI capabilities we’re only beginning to imagine, while addressing many of the field’s current limitations around efficiency, transparency, and environmental impact.

The Path Forward: Choosing and Implementing Advanced Neural Architectures

As we stand at the crossroads of numerous architectural innovations in deep learning, the critical question emerges: how should practitioners navigate this complex landscape to select and deploy the right solutions? The answer lies not in chasing theoretical benchmarks, but in developing a nuanced understanding of real-world requirements and constraints.

The selection process begins with a fundamental alignment between model capabilities and application needs. For time-series forecasting in resource-constrained environments, Neural ODEs offer compelling advantages with their continuous-time modeling and memory efficiency. When processing relational data in social network analysis or molecular chemistry, Graph Neural Networks provide the necessary structural awareness that CNNs and Transformers inherently lack. Computer vision applications demanding viewpoint invariance increasingly benefit from Capsule Networks’ hierarchical representation learning, despite their computational overhead.

Implementation success hinges on several often-overlooked factors beyond raw performance metrics. The availability of specialized hardware accelerators, for instance, can dramatically influence whether a theoretically superior architecture proves practical. Transformer variants may underperform on general-purpose GPUs compared to their CNN counterparts, despite benchmark advantages, due to memory bandwidth limitations and inefficient attention implementations. This hardware-software co-design consideration becomes particularly crucial when targeting edge deployment scenarios.

Practical Implementation: From Theory to Production

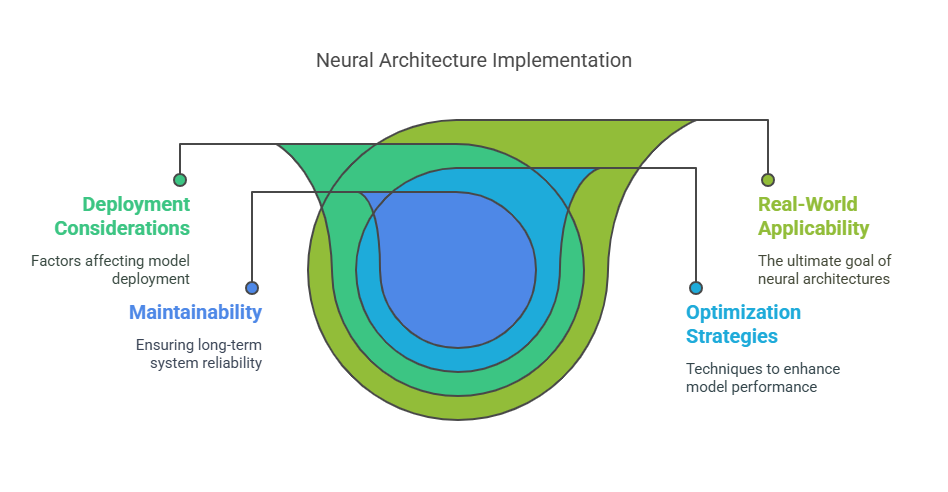

An architecture proves its value in production, not on paper. Moving from research papers to production systems requires careful consideration of deployment constraints, optimization strategies, and maintainability. Here’s how to bridge the gap between cutting-edge AI research and practical implementation.

Deployment Considerations

Not all architectures are created equal when it comes to deployment. While a Transformer might excel in accuracy, its memory footprint and inference latency could make it impractical for edge devices. Conversely, lightweight CNNs or hybrid models often strike a better balance for real-time applications. Key factors include:

- Hardware Compatibility: Does the model run efficiently on available GPUs, TPUs, or specialized AI accelerators?

- Latency Requirements: Can the architecture meet strict real-time constraints (e.g., autonomous driving, high-frequency trading)?

- Scalability: How does performance degrade with increasing input sizes or user loads?

Optimization Techniques

Even the most advanced architectures require fine-tuning for production. Common optimization strategies include:

- Quantization: Reducing model precision (e.g., FP32 → INT8) to speed up inference with minimal accuracy loss.

- Pruning: Removing redundant neurons or attention heads to shrink model size.

- Knowledge Distillation: Training smaller "student" models to mimic larger "teacher" models.
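
As a concrete picture of the FP32 → INT8 step, the sketch below applies symmetric per-tensor quantization to a short list of weights in plain Python. The weight values are made up for illustration, and production toolchains typically add calibration data, per-channel scales, and fused integer kernels that are not shown here.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Map quantized integers back to approximate float values."""
    return [x * scale for x in q]


weights = [0.50, -1.27, 0.03, 0.90]  # hypothetical FP32 weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, scale, max_error)
```

The rounding error per weight is bounded by half the scale, which is why accuracy loss is usually small when the weight range is well behaved.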

Maintainability & Monitoring

Deploying AI is just the beginning—keeping it running smoothly is an ongoing challenge. Best practices include:

- Version Control for Models: Tracking architecture changes, training data, and hyperparameters.

- Performance Monitoring: Detecting concept drift, data quality issues, or degradation over time.

- Explainability Tools: Ensuring model decisions remain interpretable, especially in regulated industries.

Case Study: Deploying a Hybrid Vision Model

Imagine a medical imaging startup using a CNN-Transformer hybrid for tumor detection. While the hybrid model outperforms pure CNNs in R&D, deploying it requires:

- Optimizing inference via TensorRT to meet hospital server constraints.

- Implementing fallback logic—if the model’s confidence is low, default to a radiologist review.

- Continuous validation against new scans to ensure generalization.
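
The fallback logic in the second step might look like the sketch below. The function name, the labels, and the 0.9 threshold are all hypothetical; in practice the threshold would be set from validation data and clinical requirements.

```python
def route_prediction(tumor_probability, threshold=0.9):
    """Return an automated label when confident, otherwise defer to a human."""
    # Confidence is the probability of whichever class the model prefers
    confidence = max(tumor_probability, 1.0 - tumor_probability)
    if confidence < threshold:
        return "radiologist_review"
    return "tumor" if tumor_probability >= 0.5 else "no_tumor"


for p in (0.97, 0.60, 0.02):
    print(p, route_prediction(p))
```

Keeping this routing outside the model makes the threshold easy to audit and adjust without retraining.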

Key Takeaways

- There’s no "best" architecture, only the best for your use case.

- Optimization is mandatory, not optional, for production AI.

- Long-term success depends on monitoring and adaptability.

The Future of Neural Architectures: Emerging Trends and Research Directions

The field of neural architectures is evolving at an unprecedented pace, with new breakthroughs challenging long-held assumptions about how AI systems should be designed. As we look toward the next generation of models, several key trends are reshaping the landscape of deep learning.

Beyond Transformers: The Search for More Efficient Attention

While Transformers have dominated AI research in recent years, their computational demands have sparked interest in alternative architectures. Models like MLP-Mixer and Hyena demonstrate that attention-free designs (token-mixing MLPs and long convolutions, respectively) can rival attention-based approaches in certain tasks at lower computational cost, while Perceiver IO keeps attention but routes it through a small latent array to reduce its cost. Researchers are particularly excited about state-space models (SSMs), which offer linear-time sequence modeling with performance comparable to Transformers in some language and audio tasks.
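
The linear-time property of state-space models follows from their recurrent form: each step updates a fixed-size state at constant cost. The sketch below runs a scalar linear recurrence in plain Python. The parameter values are arbitrary, and published SSM architectures use vector- or matrix-valued states with learned parameters and parallel scan implementations, which this toy version omits.

```python
def ssm_scan(inputs, a, b, c):
    """Run the scalar recurrence x_t = a * x_{t-1} + b * u_t, y_t = c * x_t."""
    state = 0.0
    outputs = []
    for u in inputs:  # one constant-cost update per time step
        state = a * state + b * u
        outputs.append(c * state)
    return outputs


# Response to a unit impulse: the state decays geometrically by factor a
impulse = [1.0, 0.0, 0.0, 0.0]
ys = ssm_scan(impulse, a=0.5, b=1.0, c=2.0)
print(ys)  # [2.0, 1.0, 0.5, 0.25]
```

Total work is proportional to the sequence length, in contrast to the pairwise comparisons of self-attention.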

Neuromorphic Computing and Brain-Inspired Designs

The gap between artificial and biological neural networks is narrowing. New architectures are incorporating principles from neuroscience, such as:

- Spiking Neural Networks (SNNs) for event-based processing

- Cortical column-inspired models that better mimic hierarchical brain organization

- Dynamic synaptic plasticity that allows continuous learning

These approaches promise more energy-efficient AI that can learn continuously from streaming data—a crucial capability for real-world applications.
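
Event-based processing in spiking networks can be illustrated with a leaky integrate-and-fire neuron, a standard simplified neuron model. The sketch below is a discrete-time version in plain Python; the leak factor, threshold, and input currents are illustrative assumptions.

```python
def lif_neuron(input_current, leak=0.9, threshold=1.0):
    """Simulate a leaky integrate-and-fire neuron; return a 0/1 spike train."""
    potential = 0.0
    spikes = []
    for current in input_current:
        potential = leak * potential + current  # leak, then integrate the input
        if potential >= threshold:
            spikes.append(1)
            potential = 0.0  # reset the membrane potential after firing
        else:
            spikes.append(0)
    return spikes


spikes = lif_neuron([0.5, 0.5, 0.5, 0.0, 0.0, 1.2])
print(spikes)  # [0, 0, 1, 0, 0, 1]
```

The neuron emits output only when accumulated input crosses the threshold, so downstream computation is triggered by sparse events rather than by every time step.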

The Rise of Modular and Compositional AI

Future systems will likely move away from monolithic models toward modular architectures where specialized sub-networks collaborate dynamically. Techniques like:

- Mixture-of-Experts (MoE) with adaptive computation

- Neural module networks that assemble task-specific components

- Foundation models with plug-and-play adapters

are paving the way for more flexible and maintainable AI systems that can efficiently handle multiple tasks without catastrophic forgetting.
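
A minimal sketch of the Mixture-of-Experts routing idea is shown below, in plain Python with scalar inputs. The experts are toy functions and the gate scores are hard-coded for illustration; in a real model both are learned networks, and implementations add load balancing and batching that are not shown.

```python
import math


def softmax(scores):
    """Convert raw gate scores into probabilities."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(x, gate_scores, experts, top_k=1):
    """Route x to the top_k experts by gate probability and mix their outputs."""
    probs = softmax(gate_scores)
    chosen = sorted(range(len(experts)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in chosen)  # renormalise over the selected experts
    output = sum(probs[i] / norm * experts[i](x) for i in chosen)
    return output, chosen


# Hypothetical experts; only the selected ones are evaluated
experts = [lambda x: 2 * x, lambda x: x + 10, lambda x: -x]
gate_scores = [0.1, 2.0, -1.0]  # would come from a learned gating network

output, chosen = moe_forward(3.0, gate_scores, experts, top_k=1)
output_top2, chosen_top2 = moe_forward(3.0, gate_scores, experts, top_k=2)
print(output, chosen)
print(output_top2, chosen_top2)
```

With `top_k=1` only one expert runs per input, so compute stays roughly constant even as the number of experts (and total parameters) grows.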

Algorithm-Hardware Co-Design

The next wave of architectural innovation will be tightly coupled with advances in specialized hardware. Emerging directions include:

- Photonic neural networks for ultra-low latency inference

- In-memory computing architectures that eliminate the von Neumann bottleneck

- Quantum-inspired classical models that borrow concepts from quantum computing

These developments suggest that future neural architectures may look fundamentally different from today’s digital implementations.

Key Challenges Ahead

While the future looks promising, significant hurdles remain:

- Developing theoretical frameworks to understand why new architectures work

- Creating standardized benchmarks for fair comparison across paradigms

- Ensuring new models remain interpretable and align with human values

The coming years will likely see a diversification of architectural approaches rather than convergence on a single paradigm—an exciting prospect for researchers and practitioners alike.
