Introduction: The Rise of Large-Scale AI Deployment

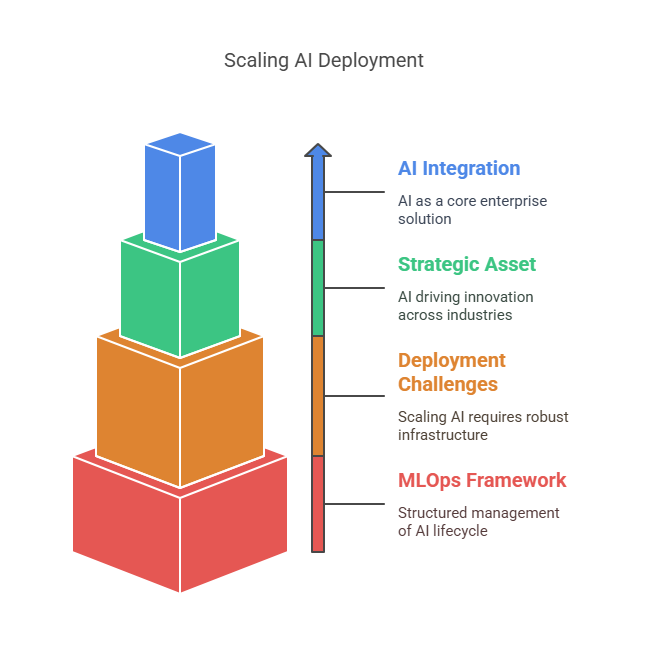

Artificial intelligence has rapidly evolved from a research-driven field to a core component of modern enterprise solutions. As organizations increasingly recognize the value of AI, the focus has shifted from isolated experiments to deploying AI models at scale in production environments. This transition brings both significant opportunities and unique challenges, making it essential to understand the landscape of large-scale AI deployment.

The Increasing Importance of AI in Enterprise Environments

AI is now a strategic asset for businesses across industries, driving innovation in areas such as customer service, supply chain optimization, fraud detection, and personalized marketing. Enterprises are leveraging AI to automate complex processes, extract insights from vast datasets, and deliver smarter products and services. As a result, the ability to deploy and manage AI models at scale has become a key differentiator for organizations seeking to maintain a competitive edge.

However, scaling AI from proof-of-concept to production is not a trivial task. It requires robust infrastructure, cross-functional collaboration, and a clear understanding of both technical and business objectives. The complexity increases as the number of models, data sources, and stakeholders grows, making it crucial to adopt best practices and frameworks that support sustainable AI operations.

Why MLOps Is Essential for Managing AI at Scale

MLOps (Machine Learning Operations) has emerged as a discipline that addresses the operational challenges of deploying and maintaining AI models in production. Unlike traditional software development, where code is the primary artifact, machine learning introduces additional variables such as data, model parameters, and training environments. This complexity demands new approaches to version control, testing, monitoring, and governance.

MLOps provides a structured framework for managing the entire machine learning lifecycle, from data collection and model development to deployment, monitoring, and retraining. By automating key processes and fostering collaboration between data scientists, engineers, and business stakeholders, MLOps helps organizations reduce time to market, minimize risk, and ensure the reliability and scalability of AI solutions.

Key Challenges in Production AI Deployment

Deploying AI at scale in production environments is a complex process that goes far beyond building accurate models in a lab setting. Organizations face a range of technical, organizational, and regulatory challenges that must be addressed to ensure reliable, scalable, and valuable AI systems.

Data-Related Challenges

One of the most significant challenges is managing data in a dynamic, real-world environment. Unlike traditional software, where code is the main variable, machine learning systems depend on both code and data. Data can change over time, leading to issues such as data drift, where the input data in production no longer matches the data the model was trained on. This can cause a rapid decline in model performance and requires constant monitoring, updating, and retraining of models.

Maintaining data quality and consistency is also a major concern. As datasets evolve, it becomes essential to track changes, manage data versioning, and ensure that all stakeholders are working with the correct and most up-to-date data. Without robust data management practices, it is difficult to reproduce results, compare experiments, or roll back to previous versions if issues arise.

Model-Related Challenges

Machine learning models are not static artifacts. They are the result of a combination of code, data, parameters, and the training environment. This complexity makes version control and reproducibility much more challenging than in traditional software development. To reproduce a model, you need to know exactly which data, code, and parameters were used, as well as the environment in which the model was trained.

Another challenge is managing dependencies and compatibility between development and production environments. Models often rely on specific versions of libraries and frameworks, and mismatches can lead to failures or unexpected behavior in production.

Model performance can also degrade over time due to changes in data or business requirements. Continuous monitoring and retraining are necessary to maintain high performance and relevance.

Team and Process-Related Challenges

AI projects require close collaboration between data scientists, engineers, IT, and business stakeholders. However, handovers between model development and operations are often messy and manual, leading to delays and misunderstandings. The „bus factor”—the risk that key knowledge is lost if a team member leaves—is especially high in machine learning, where undocumented data processing steps or model training scripts can make it difficult for others to pick up where someone else left off.

Bridging the gap between development and production requires clear roles, responsibilities, and communication channels. Without these, projects can stall or fail to deliver value.

Infrastructure and Resource Management

Scaling AI in production demands significant computational resources, especially for training and retraining large models. Efficient resource allocation, job scheduling, and dynamic management of compute and memory are essential to avoid bottlenecks and ensure smooth operation. As the number of models and experiments grows, so does the complexity of managing infrastructure.

Regulatory and Ethical Considerations

AI models are subject to increasing regulatory and ethical scrutiny. Ensuring compliance with data privacy laws, industry regulations, and internal governance standards is critical. This includes maintaining detailed records of how models were trained, what data was used, and how decisions are made. Ethical concerns, such as bias and fairness, must also be addressed, as models that perform well technically may still fail from a regulatory or reputational perspective.

Solutions and Best Practices for Large-Scale AI Deployment

Successfully deploying AI at scale requires more than just building accurate models—it demands a robust set of solutions and best practices that address the unique challenges of production environments. Drawing on insights from practical-mlops-ebook.pdf and other leading resources, this section explores proven strategies for managing data, models, teams, and infrastructure in enterprise AI projects.

MLOps Best Practices

MLOps (Machine Learning Operations) is the foundation for reliable, scalable AI deployment. The core principles include version control for all artifacts (models, code, data, parameters, and environments), componentizing the model creation process into reusable pipelines, codifying tests and checkpoints, and automating repetitive tasks. By enforcing these practices, organizations can ensure reproducibility, traceability, and faster time to market.

Automated CI/CD pipelines are essential for streamlining the transition from development to production. These pipelines automate data collection, model training, evaluation, and deployment, reducing manual errors and freeing up data scientists to focus on innovation. Containerization technologies like Docker and Kubernetes further enhance reproducibility and scalability by encapsulating models and their dependencies, making it easier to deploy across different environments.

Feature stores are another key element, providing a centralized repository for managing, sharing, and reusing features across projects. This not only accelerates development but also ensures consistency and governance in data usage.

Data Management Strategies

Data is the lifeblood of machine learning, and its dynamic nature in production environments requires advanced management strategies. Data versioning and change tracking are critical for maintaining a clear history of dataset evolution, enabling teams to revert to previous versions, conduct A/B tests, and ensure reproducibility. Modern data platforms offer tools for tracking raw data additions, annotation updates, and other changes, making collaboration transparent and efficient.

Automating data labeling is vital for scaling AI initiatives. While manual labeling is time-consuming and costly, AI-powered auto-labeling—using pre-trained models or interactive tools—can accelerate the process. However, organizations must balance automation with quality, as generic APIs may not always meet specific needs. Advanced techniques like few-shot, semi-supervised, and weakly supervised learning help build models with limited labeled data, while transfer learning leverages knowledge from related tasks. Data augmentation and synthetic data generation further expand dataset diversity without additional manual effort.

Model Monitoring and Retraining

Once deployed, models are exposed to data drift and changing business requirements, which can degrade performance over time. Automated monitoring pipelines track key metrics such as accuracy, precision, and recall, and can trigger alerts or retraining when thresholds are breached. Techniques like active learning prioritize the most informative data for annotation and retraining, ensuring models remain robust and relevant.

Retraining pipelines should be integrated with data versioning systems to guarantee that new models are built on the correct data and configurations. This approach supports continuous improvement and minimizes the risk of model staleness.

Collaboration and Communication

Effective collaboration between data scientists, engineers, business stakeholders, and external partners is essential for successful AI deployment. Clear roles and responsibilities, transparent communication channels, and shared platforms for data and model management foster alignment and reduce the risk of knowledge loss (the „bus factor”). Data platforms that support permission management and task allocation help coordinate efforts across teams and organizations.

Open-source collaboration is also gaining traction, enabling broader contributions to datasets and models. By adopting practices from software development—such as branching, merging, and conflict resolution—AI teams can work more efficiently and share knowledge more widely.

Infrastructure and Resource Optimization

Scaling AI workloads requires efficient management of computational resources. Dynamic resource allocation, virtualization, and orchestration tools like Kubernetes enable organizations to optimize compute and memory usage, avoid bottlenecks, and support distributed training across multiple GPU nodes. Automated job scheduling and monitoring provide visibility into resource utilization, helping teams balance workloads and control costs.

Governance and Compliance

As AI systems become more integral to business operations, governance and compliance take on greater importance. Strong governance frameworks ensure that all aspects of the machine learning lifecycle—data collection, model training, deployment, and monitoring—are transparent, auditable, and aligned with regulatory requirements. Version control for all artifacts, detailed documentation, and automated audit trails are essential for passing governance audits and maintaining trust.

Responsible AI principles, including fairness, transparency, and bias mitigation, should be embedded into the MLOps workflow. This involves codifying tests for ethical concerns, monitoring for unintended biases, and ensuring that models are explainable and accountable.

The MLOps Toolchain

A robust MLOps toolchain is the backbone of any large-scale AI deployment. It integrates the essential components and workflows needed to manage data, models, infrastructure, and collaboration across teams. By leveraging the right tools and platforms, organizations can streamline the machine learning lifecycle, ensure reproducibility, and accelerate the path from experimentation to production.

Data Platforms for Dynamic Data Management

Modern machine learning projects rarely work with static datasets. Data is constantly evolving, requiring platforms that support dynamic data management. These platforms enable teams to track changes in datasets, manage versioning, and maintain a clear history of updates—whether it’s new raw data, annotation changes, or corrections. This transparency is crucial for collaboration, as it allows in-house teams, third-party vendors, and project managers to stay aligned and allocate tasks efficiently.

A good data platform should offer features like change tracking, branching, merging, and conflict resolution, similar to how code is managed in Git. This approach guarantees reproducibility and allows teams to conduct A/B tests, roll back to previous versions, and experiment with different data configurations without losing track of progress.

Tools for Data Labeling and Annotation

Data labeling is often one of the most resource-intensive steps in building machine learning models. To scale effectively, organizations must automate as much of this process as possible. There are several approaches:

AI API Services: These are readily available and easy to integrate but are often too general and not tailored to specific datasets.

Interactive AI Tools: These tools assist human labelers by making annotation more efficient, but their effectiveness can vary depending on the use case.

Custom AI Models: Training your own models for auto-labeling can be highly effective for specialized datasets, though it requires a critical mass of labeled data to avoid the “cold start” problem.

Advanced techniques such as few-shot, semi-supervised, and weakly supervised learning, as well as data augmentation and synthetic data generation, can further reduce the need for manual labeling and expand the diversity of training data.

Machine Learning Frameworks and Model Training Environments

The choice of machine learning frameworks (such as TensorFlow, PyTorch, or scikit-learn) and training environments is central to the MLOps toolchain. These tools must support experiment tracking, version control, and integration with data platforms. Experiment tracking tools help data scientists record configurations, parameters, and results, making it easier to compare models and ensure reproducibility.

Containerization technologies like Docker and orchestration tools like Kubernetes are increasingly used to standardize environments, manage dependencies, and enable scalable, distributed training across multiple nodes or GPUs.

Monitoring and Visualization Tools

Once models are deployed, continuous monitoring is essential to detect data drift, performance degradation, and other issues. Monitoring platforms track key metrics such as accuracy, precision, recall, and latency, and can trigger alerts or automated retraining when thresholds are breached. Visualization dashboards provide stakeholders with real-time insights into model health, data quality, and resource utilization.

Explainability tools are also becoming standard, offering techniques like partial dependence plots, subpopulation analyses, and Shapley values to help teams understand and communicate how models make decisions—an important aspect for responsible AI and regulatory compliance.

CI/CD and Automation Tools

Continuous integration and continuous deployment (CI/CD) pipelines are the backbone of modern MLOps. These pipelines automate the steps from data ingestion and preprocessing to model training, evaluation, and deployment. Automation reduces manual errors, accelerates iteration, and ensures that models can be reliably and repeatedly pushed to production.

CI/CD tools should integrate with version control systems, data platforms, and monitoring solutions to provide a seamless workflow. In regulated industries, automated pipelines also support auditability and compliance by maintaining detailed logs and documentation of every step in the process.

Monitoring, Maintenance, and Governance in Production AI

As organizations scale their AI initiatives, the focus shifts from just deploying models to ensuring their ongoing reliability, compliance, and business value. This is where monitoring, maintenance, and governance become essential pillars of a mature MLOps strategy. Let’s explore each of these areas and the best practices that support them, drawing on insights from leading MLOps resources such as practical-mlops-ebook.pdf, Complete-Guide-to-MLOps.pdf, and Comment-mettre-à-l’échelle-le-Machine-Learning-en-entreprise.pdf.

Model Monitoring: Ensuring Performance and Detecting Drift

Once a model is in production, its environment is dynamic—data distributions can shift, user behavior may change, and external factors can impact predictions. Continuous monitoring is therefore critical to detect issues such as data drift (when input data changes over time), model drift (when model performance degrades), and infrastructure failures.

Effective monitoring involves tracking key metrics like accuracy, precision, recall, latency, and throughput. Modern MLOps platforms provide dashboards and automated alerts to notify teams when metrics fall outside acceptable thresholds. For example, input drift detection compares recent production data with the data used for model evaluation, while output monitoring ensures predictions remain within expected ranges.

Automated feedback loops are also important. By integrating validation feedback into the pipeline, organizations can automatically retrain and redeploy models when performance drops, minimizing downtime and maintaining business value.

Maintenance: Retraining, Updating, and Scaling Models

AI models are not static assets—they require regular maintenance to stay relevant and effective. This includes retraining models with new data, updating them to reflect changing business requirements, and scaling them to handle increased demand or new use cases.

Retraining strategies can be scheduled (e.g., nightly or weekly) or triggered by monitoring alerts. The retraining process should be automated as much as possible, leveraging versioned datasets and reproducible pipelines to ensure consistency. In some cases, organizations may need to manage multiple versions of a model simultaneously, such as retraining per customer or region, which adds complexity but also enables more personalized and accurate predictions.

Scaling models also involves optimizing infrastructure, such as using distributed training on multiple GPUs or leveraging cloud resources for elasticity. Automated resource management and orchestration tools help ensure that models can scale efficiently without hitting compute or memory bottlenecks.

Governance: Compliance, Auditability, and Responsible AI

As AI becomes more deeply integrated into business processes, governance and compliance are increasingly important. Regulatory requirements—such as GDPR, the EU AI Act, or industry-specific standards—demand that organizations can explain, audit, and control their AI systems.

Good governance starts with robust version control for all model artifacts, including code, data, parameters, and training environments. This ensures that every prediction can be traced back to the exact model and data used, supporting auditability and reproducibility. Automated audit trails and detailed documentation are essential for passing governance audits and maintaining trust with stakeholders.

Responsible AI goes beyond technical compliance to address ethical concerns such as bias, fairness, and transparency. MLOps pipelines should include tests and checkpoints for ethical risks, such as monitoring for unintended biases or ensuring that models are explainable. Explainability tools—like feature importance plots or Shapley values—help teams and regulators understand how models make decisions.

Best Practices and Tools

To support monitoring, maintenance, and governance, organizations should adopt the following best practices:

Implement automated monitoring pipelines with real-time dashboards and alerting for key metrics.

Use versioned data and model pipelines to ensure reproducibility and easy rollback in case of issues.

Automate retraining and deployment workflows to minimize manual intervention and reduce risk.

Maintain detailed documentation and audit trails for all model artifacts and decisions.

Integrate explainability and fairness checks into the MLOps workflow to support responsible AI.

Foster collaboration between data scientists, engineers, compliance officers, and business stakeholders to ensure alignment and shared understanding.

Popular tools and platforms in this space include Dataiku, IBM OpenScale, and open-source solutions like MLflow and Kubeflow, which provide end-to-end support for monitoring, retraining, and governance.

Example: Automated Model Monitoring in Python

Here’s a simple Python example using scikit-learn and pandas to monitor model accuracy and trigger retraining if performance drops below a threshold:

python

import pandas as pd

from sklearn.metrics import accuracy_score

from sklearn.ensemble import RandomForestClassifier

import joblib

# Load production data and model

X_new = pd.read_csv('production_data.csv')

y_true = pd.read_csv('production_labels.csv')

model = joblib.load('model_v1.pkl')

# Make predictions and calculate accuracy

y_pred = model.predict(X_new)

accuracy = accuracy_score(y_true, y_pred)

# Define threshold and retrain if needed

THRESHOLD = 0.85

if accuracy < THRESHOLD:

print("Accuracy dropped below threshold. Retraining model...")

# Load new training data

X_train = pd.read_csv('new_training_data.csv')

y_train = pd.read_csv('new_training_labels.csv')

# Retrain model

model.fit(X_train, y_train)

joblib.dump(model, 'model_v2.pkl')

print("Model retrained and saved as model_v2.pkl")

else:

print(f"Model accuracy is {accuracy:.2f}, no retraining needed.")

# Created/Modified files during execution:

print(file_name) for file_name in ["model_v2.pkl"]This script demonstrates a basic monitoring and retraining loop. In production, this logic would be part of a larger automated pipeline, integrated with dashboards, alerting, and governance tools.

Practical Implementation of MLOps: From Experiment to Production

Moving from machine learning experiments to stable production systems is a journey that requires careful planning, robust processes, and the right tools. Practical MLOps bridges the gap between data science prototypes and reliable, scalable AI services. Below, we explore the key stages and best practices for implementing MLOps in real-world projects, drawing on insights from industry guides and practical-mlops-ebook.pdf.

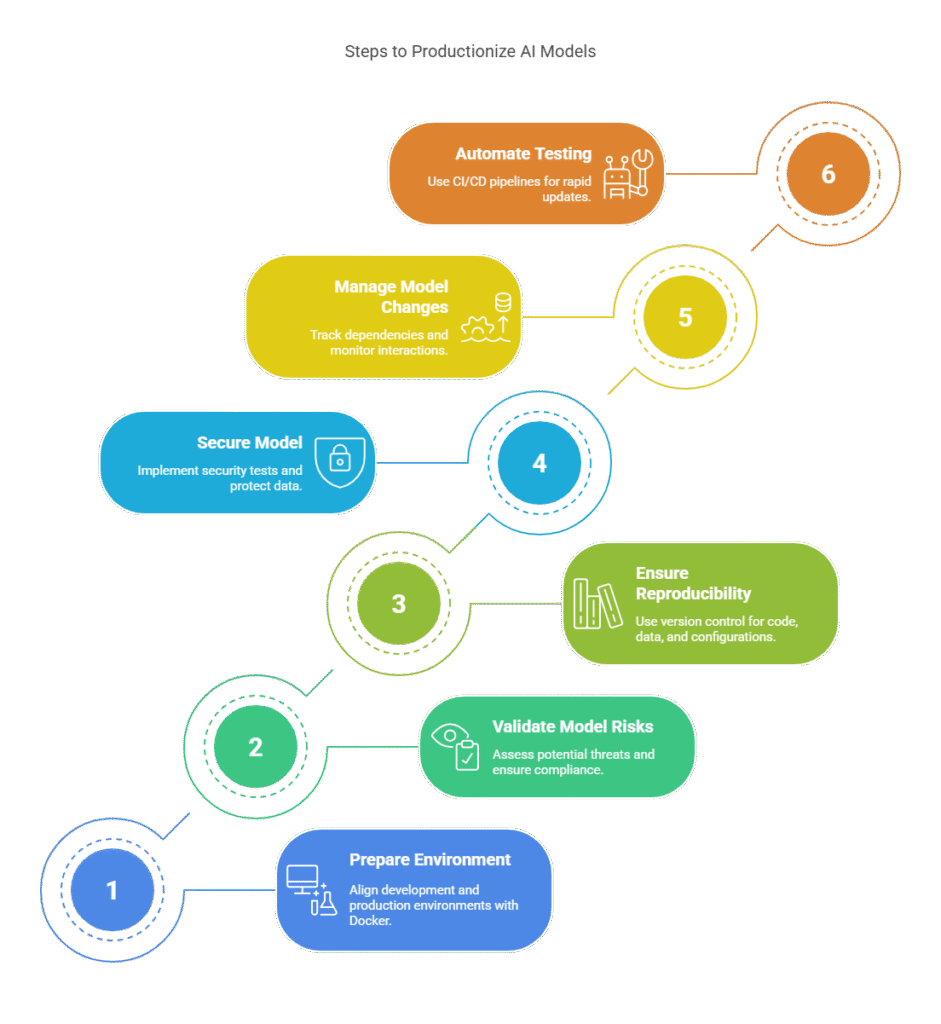

6.1 Preparing the Runtime Environment

The first step in productionizing machine learning is to ensure that the runtime environment is consistent and reproducible. This means aligning the development environment with production, managing dependencies, and configuring hardware resources. Tools like Docker are widely used to containerize models, making it easy to move them between environments and ensuring that they run the same way everywhere.

It is also crucial to provide the model with access to the same data sources it will use in production before validation and deployment. This helps avoid surprises related to data structure or quality differences between development and production.

6.2 Model Risk Validation

Before deploying a model, it is essential to validate its risks. This goes beyond checking prediction quality—it includes assessing potential threats such as overfitting, vulnerability to adversarial attacks, and compliance with industry regulations. Practical validation involves running tests on the latest production data, performing statistical checks, and analyzing the model’s robustness to input changes.

Validation should be well-documented and repeatable, making it easier to audit and roll back models if issues are detected.

6.3 Ensuring Reproducibility and Auditability

Reproducibility and auditability are foundational for production AI. Every model should be reproducible in terms of code, data, and training parameters. This is achieved by using version control not only for code but also for data and environment configurations.

Automating the build and deployment process with CI/CD pipelines enables rapid, safe updates and easy rollbacks. Detailed logs and documentation are essential for tracking the full history of a model, from initial experiments to production deployment.

6.4 Machine Learning Model Security

Security is a critical aspect of deploying AI models. Models can be vulnerable to various attacks, such as adversarial inputs or data manipulation. In practice, this means implementing security tests, monitoring for anomalies, and protecting models and data from unauthorized access.

Regularly updating the environment and following cybersecurity best practices—such as data encryption and access control—are also important for maintaining a secure production system.

6.5 Managing Model Changes and Interactions

Production environments often involve multiple models that may interact with each other. Managing changes—both in code and data—requires well-defined processes and tools for tracking dependencies between models. Orchestration tools help automate the sequence of testing, validation, and deployment steps.

It is also important to monitor interactions between models, especially when one model’s output serves as another’s input. Errors in one model can propagate through the system, so rapid detection and response to anomalies are crucial.

6.6 Example: Automating Testing and Deployment in Python

Below is a Python example that demonstrates how to automate model testing and deployment using CI/CD principles and version control:

python

import joblib

import pandas as pd

from sklearn.metrics import accuracy_score

from sklearn.ensemble import RandomForestClassifier

# Load the latest test data and model

X_test = pd.read_csv('latest_test_data.csv')

y_test = pd.read_csv('latest_test_labels.csv')

model = joblib.load('model_production.pkl')

# Perform quality tests

y_pred = model.predict(X_test)

accuracy = accuracy_score(y_test, y_pred)

# Define acceptance threshold

THRESHOLD = 0.90

if accuracy >= THRESHOLD:

print(f"Model passed validation with accuracy: {accuracy:.2f}. Deploying to production.")

# Here you could add code to automatically deploy the model

else:

print(f"Model failed validation with accuracy: {accuracy:.2f}. Rolling back to previous version.")

# Created/Modified files during execution:

print(file_name) for file_name in ["model_production.pkl"]

This simple pipeline can be integrated with CI/CD tools like Jenkins, GitLab CI, or GitHub Actions to automatically test and deploy models after each repository update.

The Human Factor in MLOps: Roles and Collaboration

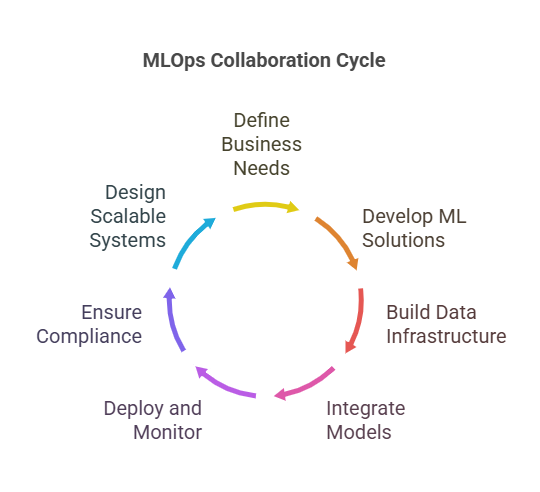

While MLOps is often associated with tools, automation, and technical workflows, its true success in the enterprise depends on people. Effective collaboration between diverse roles is essential for building, deploying, and maintaining machine learning models at scale. This article explores the key human roles in MLOps, their responsibilities, and how they work together to ensure robust, scalable, and responsible AI systems, drawing on insights from Comment-mettre-à-l’échelle-le-Machine-Learning-en-entreprise.pdf and practical-mlops-ebook.pdf.

Subject Matter Experts (SMEs)

Subject matter experts are the bridge between business needs and technical solutions. They define the goals, business questions, and key performance indicators (KPIs) that machine learning models should address. SMEs are involved at the very start of the ML lifecycle, helping to frame the problem and ensure that the models being built are aligned with real business objectives. Their involvement doesn’t end at deployment; they also help interpret model results in business terms and provide feedback to data teams when outcomes don’t match expectations. This feedback loop is crucial for continuous improvement and for ensuring that AI delivers tangible business value.

Data Scientists

Data scientists are responsible for translating business problems into machine learning solutions. Their work spans data exploration, feature engineering, model selection, training, and evaluation. In a mature MLOps environment, data scientists collaborate closely with SMEs to understand the business context and with engineers to ensure that models are production-ready. They benefit from MLOps by gaining access to standardized tools, reproducible workflows, and automated pipelines, which free them from repetitive tasks and allow them to focus on innovation and experimentation.

Data Engineers

Data engineers build and maintain the data infrastructure that powers machine learning. They ensure that data is accessible, reliable, and of high quality, and they design pipelines for data ingestion, transformation, and storage. In the MLOps context, data engineers work with data scientists to provide the datasets needed for model development and with DevOps teams to ensure that data flows smoothly into production systems. Their expertise is critical for managing dynamic, evolving datasets and for implementing data versioning and lineage tracking.

Software Engineers

Software engineers bring best practices from traditional software development into the MLOps ecosystem. They focus on code quality, testing, and maintainability, and they help integrate machine learning models into larger applications and services. Their skills are essential for building robust APIs, user interfaces, and backend systems that interact with deployed models. Software engineers also play a key role in automating tests and ensuring that ML code adheres to enterprise standards.

DevOps Engineers

DevOps engineers are responsible for the operational aspects of machine learning systems. They manage CI/CD pipelines, automate deployment processes, and ensure the security, performance, and availability of ML models in production. DevOps teams work closely with data scientists and engineers to bridge the gap between development and operations, applying proven DevOps principles—such as automation, monitoring, and continuous delivery—to the unique challenges of ML.

Model Risk Managers and Auditors

In regulated industries, model risk managers and auditors play a crucial role in ensuring compliance and minimizing risk. They analyze not only model outcomes but also the business objectives and regulatory requirements that models must meet. Their responsibilities include reviewing model documentation, validating compliance before deployment, and monitoring models in production for ongoing risk. MLOps platforms support these roles by providing robust reporting, data lineage tracking, and automated audit trails, making the audit process more efficient and transparent.

Machine Learning Architects

Machine learning architects oversee the design and scalability of the entire ML infrastructure. They ensure that the environment supports flexible, scalable model pipelines and that resources are allocated efficiently. ML architects collaborate across teams to identify bottlenecks, introduce new technologies, and drive long-term improvements in the MLOps strategy. Their strategic perspective is essential for building systems that can grow with the organization’s needs.

Collaboration and Communication

The complexity of enterprise AI means that no single role can succeed in isolation. Effective MLOps requires clear communication, shared platforms, and well-defined processes that enable collaboration across all these roles. This includes transparent documentation, permission management, and feedback mechanisms that allow business and technical teams to stay aligned. Open-source and inter-company collaborations are also becoming more common, enabling organizations to leverage external expertise and contribute to shared datasets and models.

How AI agents can help you write better code

AI Agents: Potential in Projects

AI Agents in Practice: How to Automate a Programmer’s Daily Work