Introduction: The Importance of Hyperparameter Optimization

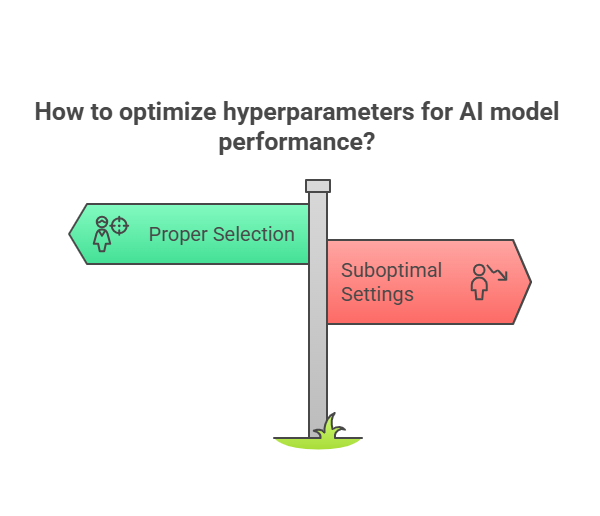

Hyperparameter optimization is one of the key stages in building effective artificial intelligence models. Proper selection of these parameters can significantly improve model performance, while suboptimal settings often lead to poor results, overfitting, or underfitting.

What are Hyperparameters and Why Are They Important?

Hyperparameters are external settings of a model that are not directly learned during the training process but must be specified before training begins. Examples of hyperparameters include:

the number of trees in a random forest,

the depth of a decision tree,

the learning rate in neural networks,

the number of training epochs,

batch size.

Unlike model parameters (e.g., weights in a neural network), hyperparameters are set by the user and have a huge impact on the learning process and the final quality of the model.
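The distinction can be made concrete with a minimal sketch in plain Python. The function `fit_slope` and the toy data are illustrative assumptions, not part of any library: the slope `w` is a model parameter learned from the data, while `learning_rate` and `epochs` are hyperparameters fixed before training starts.

```python
def fit_slope(xs, ys, learning_rate, epochs):
    """Fit y = w * x by gradient descent on the mean squared error."""
    w = 0.0  # model parameter: learned from the data
    n = len(xs)
    for _ in range(epochs):
        # Gradient of the mean squared error with respect to w
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / n
        w -= learning_rate * grad
    return w


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x for x in xs]  # the true slope is 2

# Same data and algorithm, different hyperparameters
w_good = fit_slope(xs, ys, learning_rate=0.01, epochs=200)
w_bad = fit_slope(xs, ys, learning_rate=0.2, epochs=20)
print("learning_rate=0.01:", w_good)
print("learning_rate=0.2: ", w_bad)
```

With the small learning rate the learned slope settles at the true value, while the large learning rate makes every update overshoot and the slope diverges, even though the data and the algorithm are identical.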

The Impact of Hyperparameters on Model Performance

Proper selection of hyperparameters can:

increase prediction accuracy,

shorten training time,

improve the model’s ability to generalize to new data,

minimize the risk of overfitting or underfitting.

On the other hand, incorrect hyperparameter settings can cause even the best algorithm to fail to achieve satisfactory results. That’s why hyperparameter optimization is essential in every AI project, regardless of the chosen technology or model type.

Basic Techniques for Hyperparameter Optimization

Hyperparameter optimization is the process of searching for the best values of a model’s external parameters to maximize its performance. There are several basic techniques that are widely used in both scientific and commercial projects.

Manual Tuning

The simplest, but often time-consuming, method. It involves manually experimenting with different hyperparameter values and observing how they affect the model’s results. Manual tuning is useful at the initial stage of working with a new model or when the number of hyperparameters is small.

Advantages:

Intuitive and gives full control over the process.

Allows for quick elimination of obviously poor settings.

Disadvantages:

Very time-consuming with a larger number of hyperparameters.

No guarantee of finding optimal values.

Grid Search

Grid Search involves defining ranges of values for selected hyperparameters and testing all possible combinations. For each combination, the model is trained and evaluated, and the best set of hyperparameters is chosen based on a selected metric (e.g., accuracy).

Example: Grid Search in scikit-learn (Python)

python

from sklearn.model_selection import GridSearchCV

from sklearn.ensemble import RandomForestClassifier

param_grid = {

    'n_estimators': [50, 100, 200],

    'max_depth': [None, 5, 10]

}

model = RandomForestClassifier()

grid_search = GridSearchCV(model, param_grid, cv=3)

grid_search.fit(X_train, y_train)

print("Best hyperparameters:", grid_search.best_params_)

Advantages:

Systematic search of the hyperparameter space.

Easy to automate.

Disadvantages:

Very computationally expensive with a large number of parameters and wide ranges.

May test many suboptimal combinations.
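To see how quickly the cost grows, the number of model fits can be counted before running anything. The sketch below uses only the standard library and the grid from the example above; the extra `min_samples_leaf` entry is an illustrative assumption added to show the effect of one more hyperparameter.

```python
from itertools import product

param_grid = {
    "n_estimators": [50, 100, 200],
    "max_depth": [None, 5, 10],
}
cv_folds = 3

# Every combination is trained once per cross-validation fold
combinations = list(product(*param_grid.values()))
total_fits = len(combinations) * cv_folds
print("combinations:", len(combinations), "fits:", total_fits)

# One more hyperparameter with 4 candidate values multiplies the cost by 4
param_grid["min_samples_leaf"] = [1, 2, 4, 8]
larger_fits = len(list(product(*param_grid.values()))) * cv_folds
print("fits with a third hyperparameter:", larger_fits)
```

By default `GridSearchCV` also refits the best configuration once on the full training data, so the real count is one fit higher than this estimate.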

Random Search

Random Search involves randomly selecting combinations of hyperparameters from specified ranges and testing their performance. In practice, it often finds good settings faster than Grid Search, especially when not all hyperparameters have an equally large impact on the result.

Example: Random Search in scikit-learn (Python)

python

from sklearn.model_selection import RandomizedSearchCV

from sklearn.ensemble import RandomForestClassifier

import numpy as np

param_dist = {

    'n_estimators': np.arange(50, 201, 10),

    'max_depth': [None, 5, 10, 15]

}

model = RandomForestClassifier()

random_search = RandomizedSearchCV(model, param_distributions=param_dist, n_iter=10, cv=3, random_state=42)

random_search.fit(X_train, y_train)

print("Best hyperparameters:", random_search.best_params_)

Advantages:

Faster than Grid Search with a large number of hyperparameters.

Often finds good solutions with fewer trials.

Disadvantages:

Results may be unrepresentative if the number of trials is too small.

No guarantee of finding the absolute best set.
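One way to see why Random Search copes better when only some hyperparameters matter is to count how many distinct values of the important one each method tries under the same budget. The sketch below is a toy illustration in plain Python; the two settings and their ranges are assumptions chosen for the example.

```python
import random

rng = random.Random(0)
budget = 9  # total number of trials allowed

# Grid: a 3 x 3 layout over an important setting and a less important one
grid_important = [0.001, 0.01, 0.1]
grid_minor = [1, 2, 3]
grid_trials = [(a, b) for a in grid_important for b in grid_minor]

# Random: same budget, each trial draws both values independently
random_trials = [
    (rng.uniform(0.001, 0.1), rng.randint(1, 3)) for _ in range(budget)
]

distinct_grid = len({a for a, _ in grid_trials})
distinct_random = len({a for a, _ in random_trials})
print("distinct important values, grid:  ", distinct_grid)
print("distinct important values, random:", distinct_random)
```

The grid spends its nine trials on only three values of the important setting, while the random draws probe nine different ones.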

Advanced Techniques for Hyperparameter Optimization

As the complexity of AI models and the number of hyperparameters increase, basic optimization methods become insufficient or too computationally expensive. That’s why more advanced techniques are increasingly used, allowing for faster and more effective discovery of optimal settings.

Bayesian Optimization

Bayesian optimization is an intelligent method for searching the hyperparameter space, using probabilistic models (e.g., Gaussian processes) to predict which combinations of hyperparameters may yield the best results. Instead of testing all possibilities, the algorithm learns from previous trials and selects the next combinations in a way that maximizes the chance of improvement.

Advantages:

Efficient search even in large and complex hyperparameter spaces.

Fewer trials needed to find a good solution compared to Grid Search or Random Search.

Disadvantages:

Greater implementation complexity.

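Trials are chosen largely sequentially, so the search is harder to parallelize than Grid Search or Random Search, and wall-clock time can be long when each training run is slow.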

Example: Bayesian optimization using the Hyperopt library (Python)

python

from hyperopt import fmin, tpe, hp, Trials, space_eval

from sklearn.ensemble import RandomForestClassifier

from sklearn.model_selection import cross_val_score

def objective(params):

    # Mean cross-validated accuracy for one sampled configuration

    model = RandomForestClassifier(**params)

    score = cross_val_score(model, X_train, y_train, cv=3).mean()

    return -score  # fmin minimizes, so negate the score

space = {

    'n_estimators': hp.choice('n_estimators', [50, 100, 200]),

    'max_depth': hp.choice('max_depth', [None, 5, 10, 15])

}

trials = Trials()

best = fmin(fn=objective, space=space, algo=tpe.suggest, max_evals=20, trials=trials)

# fmin returns list indices for hp.choice entries; space_eval maps them back to values

print("Best hyperparameters:", space_eval(space, best))

Evolutionary Algorithms

Evolutionary algorithms are inspired by biological evolution processes. They create a population of different sets of hyperparameters, which are “crossed” and “mutated,” and then the best combinations are selected for the next generations. This makes it possible to explore very large and complex hyperparameter spaces.

Advantages:

Good for optimizing problems with many local minima.

Ability to test many solutions in parallel.

Disadvantages:

High computational costs.

Difficulty in selecting algorithm parameters (e.g., population size, mutation probability).
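The selection, crossover, and mutation loop described above can be sketched in plain Python. Everything here is an illustrative assumption: the `fitness` function is a toy stand-in for a validation score, and the hyperparameter names `lr` and `depth` and their ranges are invented for the example.

```python
import random


def fitness(config):
    """Toy stand-in for a validation score; best at lr=0.1, depth=6."""
    return -100 * (config["lr"] - 0.1) ** 2 - 0.01 * (config["depth"] - 6) ** 2


def random_config(rng):
    return {"lr": rng.uniform(0.0, 1.0), "depth": rng.randint(1, 12)}


def crossover(a, b, rng):
    # Each hyperparameter is inherited from one of the two parents
    return {key: rng.choice([a[key], b[key]]) for key in a}


def mutate(config, rng, rate=0.3):
    child = dict(config)
    if rng.random() < rate:
        child["lr"] = min(1.0, max(0.0, child["lr"] + rng.gauss(0, 0.05)))
    if rng.random() < rate:
        child["depth"] = min(12, max(1, child["depth"] + rng.choice([-1, 1])))
    return child


def evolve(generations=20, population_size=12, elite=4, seed=0):
    rng = random.Random(seed)
    population = [random_config(rng) for _ in range(population_size)]
    history = []
    for _ in range(generations):
        population.sort(key=fitness, reverse=True)
        history.append(fitness(population[0]))
        parents = population[:elite]  # selection: the best survive unchanged
        children = [
            mutate(crossover(rng.choice(parents), rng.choice(parents), rng), rng)
            for _ in range(population_size - elite)
        ]
        population = parents + children
    return max(population, key=fitness), history


best_config, best_per_generation = evolve()
print("best configuration:", best_config)
```

Because the best configurations are carried over unchanged, the best score never gets worse from one generation to the next. In a real project each `fitness` call would be a full training run, which is where the high computational cost comes from; the children within a generation are independent and could be evaluated in parallel.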

Gradient-Based Methods

In some models, especially deep neural networks, it is possible to use gradients to optimize certain hyperparameters, such as the learning rate. These methods automatically adjust hyperparameter values during training, which can speed up convergence and improve results.

Advantages:

Automatic adjustment of hyperparameters during training.

Faster convergence in some cases.

Disadvantages:

Limited application—not all hyperparameters can be optimized with gradients.

Requires support in the model architecture and framework.
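As a rough illustration of the idea, the sketch below adapts the learning rate with a gradient step of its own, an approach often called hypergradient descent. It minimizes the toy function f(theta) = theta² in plain Python; the function, the starting values, and the names `alpha` and `beta` are assumptions made for the example, not a framework API.

```python
def hypergradient_descent(theta=5.0, alpha=0.01, beta=0.001, steps=50):
    """Minimize f(theta) = theta**2 while adapting the learning rate alpha."""
    prev_grad = 0.0
    for _ in range(steps):
        grad = 2.0 * theta  # derivative of theta**2
        # Raise alpha while successive gradients point the same way,
        # lower it when they disagree (a sign of overshooting).
        alpha += beta * grad * prev_grad
        theta -= alpha * grad
        prev_grad = grad
    return theta, alpha


final_theta, final_alpha = hypergradient_descent()
print("theta:", final_theta, "adapted learning rate:", final_alpha)
```

The learning rate starts at a deliberately small 0.01 and grows during training, so the run converges much faster than it would with the initial value held fixed. The step size `beta` is itself a setting that has to be chosen, which reflects the limitation noted above.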

Tools for Hyperparameter Optimization

With the growing popularity of artificial intelligence, many tools have been developed to automate and streamline the hyperparameter optimization process. These tools make it easy to test different techniques, monitor results, and integrate optimization with popular ML and DL frameworks.

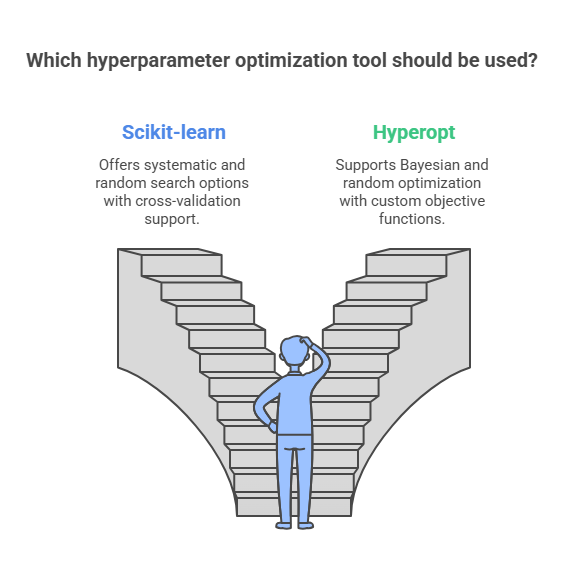

Scikit-learn (GridSearchCV, RandomizedSearchCV)

Scikit-learn is one of the most popular ML libraries in Python. It offers built-in tools for hyperparameter optimization:

GridSearchCV – enables systematic searching of the hyperparameter space based on a grid of defined values.

RandomizedSearchCV – allows random sampling of hyperparameter combinations from specified ranges.

Both tools integrate with scikit-learn pipelines and support cross-validation.

Hyperopt

Hyperopt is a library for Bayesian and random optimization that supports both simple and complex hyperparameter spaces. It allows you to define your own objective functions and easily integrates with popular ML frameworks.

Example usage of Hyperopt

python

from hyperopt import fmin, tpe, hp, Trials

# ... (example as in the Bayesian Optimization section above)

Optuna

Optuna is a modern tool for automatic hyperparameter optimization that supports both Bayesian optimization and other strategies. It offers advanced monitoring, visualization, and automatic stopping of inefficient trials.

Advantages:

High performance and flexibility.

Integration with many frameworks (scikit-learn, PyTorch, TensorFlow).
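The idea behind automatic stopping of inefficient trials can be sketched with a simple median rule: a running trial is stopped when its intermediate score falls below the median of earlier trials at the same step. The code below is plain Python written for illustration, not Optuna's API; the function `should_stop` and the learning curves are hypothetical.

```python
from statistics import median


def should_stop(trial_curve, completed_curves, step, warmup_steps=2):
    """Median rule: stop if the trial is below the median of its peers at this step."""
    if step < warmup_steps:
        return False  # give every trial a few steps before judging it
    peers = [curve[step] for curve in completed_curves if len(curve) > step]
    if not peers:
        return False
    return trial_curve[step] < median(peers)


# Validation accuracy after each epoch for three finished trials
completed = [
    [0.60, 0.70, 0.78, 0.82],
    [0.55, 0.68, 0.75, 0.80],
    [0.50, 0.60, 0.66, 0.70],
]
weak_trial = [0.52, 0.58, 0.61]
strong_trial = [0.58, 0.71, 0.79]

stop_weak = should_stop(weak_trial, completed, step=2)
stop_strong = should_stop(strong_trial, completed, step=2)
print("stop weak trial:", stop_weak, "| stop strong trial:", stop_strong)
```

The compute saved on stopped trials can then be spent on more promising configurations.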

Keras Tuner

Keras Tuner is a tool created specifically for hyperparameter optimization in models built with Keras and TensorFlow. It allows easy definition of hyperparameter ranges and automatic testing of different neural network architectures.

Ray Tune

Ray Tune is a scalable tool for hyperparameter optimization that enables parallel execution of many experiments on clusters or in the cloud. It supports various optimization strategies, including Bayesian, evolutionary, and random search.

Practical Tips for Hyperparameter Optimization

Hyperparameter optimization is not just about choosing the right technique or tool, but also about skillfully planning and monitoring the entire process. Here are the most important practical tips to help you effectively carry out optimization in AI projects.

Defining Hyperparameter Ranges

Before starting optimization, it’s worth considering which hyperparameters have the greatest impact on model performance and setting reasonable value ranges for them. Ranges that are too wide can prolong the search time, while ranges that are too narrow may prevent you from finding optimal settings. It’s helpful to use model documentation and experience from previous experiments.
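For hyperparameters whose plausible values span several orders of magnitude, such as a learning rate, a common choice is to sample on a logarithmic scale so that each order of magnitude is explored equally. The sketch below uses only the standard library; the helper `sample_log_uniform` and the range 1e-5 to 1e-1 are assumptions for illustration.

```python
import math
import random


def sample_log_uniform(rng, low, high):
    """Draw a value whose logarithm is uniform between log(low) and log(high)."""
    return math.exp(rng.uniform(math.log(low), math.log(high)))


rng = random.Random(0)
learning_rates = [sample_log_uniform(rng, 1e-5, 1e-1) for _ in range(1000)]

# Share of samples below 1e-3, the midpoint of the range on a log scale
share_below_midpoint = sum(lr < 1e-3 for lr in learning_rates) / len(learning_rates)
print("share of samples below 1e-3:", share_below_midpoint)
```

About half of the samples fall below 1e-3. With plain uniform sampling over the same range, roughly 99% of the draws would land above 1e-3 and the small values would hardly be tested.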

Choosing the Right Evaluation Metric

It’s crucial to define the appropriate metric by which you will assess the effectiveness of individual hyperparameter combinations. In classification, this could be accuracy, precision, recall, or F1-score; in regression—MSE, MAE, or R². The choice of metric should align with the business goal of the project.
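The choice matters most on imbalanced data. The sketch below computes the metrics by hand in plain Python for a hypothetical dataset with 5 positive and 95 negative examples and a model that always predicts the negative class; the helper `classification_metrics` is written for this example only.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 for a binary problem."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    accuracy = sum(t == p for t, p in pairs) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


y_true = [1] * 5 + [0] * 95   # 5% positive class
always_negative = [0] * 100   # a model that never predicts the positive class

metrics = classification_metrics(y_true, always_negative)
print(metrics)
```

Accuracy is 0.95 even though the model never finds a single positive example, while recall and F1 are 0. A search that optimizes accuracy on such data could happily select this useless model.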

Using Cross-Validation

To obtain a reliable assessment of model performance for different hyperparameter settings, it’s worth using cross-validation. Averaging the score over several folds makes the comparison less dependent on one lucky or unlucky split and provides a better estimate of how the model will perform on new data.

Example: Optimization with cross-validation in scikit-learn (Python)

python

from sklearn.model_selection import GridSearchCV

from sklearn.ensemble import RandomForestClassifier

param_grid = {

    'n_estimators': [50, 100, 200],

    'max_depth': [None, 5, 10]

}

model = RandomForestClassifier()

grid_search = GridSearchCV(model, param_grid, cv=5, scoring='accuracy')

grid_search.fit(X_train, y_train)

print("Best hyperparameters:", grid_search.best_params_)

print("Best validation score:", grid_search.best_score_)

Monitoring the Optimization Process

It’s important to continuously monitor the optimization process—both in terms of results and computation time. Good tools (e.g., Optuna, Ray Tune) offer visualizations and reports that help track progress and quickly identify inefficient trials. Regularly saving results helps avoid losing valuable information if the experiment is interrupted.
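Saving each result as soon as it is available can be as simple as appending one JSON line per trial and skipping already-logged configurations on restart. The sketch below uses only the standard library; the file name `trials.jsonl`, the helper functions, and the toy scoring function are assumptions for illustration.

```python
import json
import os
import tempfile


def load_finished(path):
    """Return previously logged trials, keyed by their parameters as sorted JSON."""
    finished = {}
    if os.path.exists(path):
        with open(path) as handle:
            for line in handle:
                record = json.loads(line)
                key = json.dumps(record["params"], sort_keys=True)
                finished[key] = record["score"]
    return finished


def run_search(configs, evaluate, path):
    finished = load_finished(path)
    evaluated_now = 0
    with open(path, "a") as handle:
        for params in configs:
            key = json.dumps(params, sort_keys=True)
            if key in finished:
                continue  # already evaluated in an earlier run
            score = evaluate(params)
            handle.write(json.dumps({"params": params, "score": score}) + "\n")
            handle.flush()  # make sure the result is written before the next trial
            finished[key] = score
            evaluated_now += 1
    return finished, evaluated_now


def toy_score(params):
    # Stand-in for training and validating a model
    return 1.0 - abs(params["max_depth"] - 5) / 10


configs = [{"max_depth": depth} for depth in (3, 5, 10)]
log_path = os.path.join(tempfile.mkdtemp(), "trials.jsonl")

_, first_run = run_search(configs[:2], toy_score, log_path)     # interrupted after 2 trials
results, second_run = run_search(configs, toy_score, log_path)  # resumed run
print("evaluated in first run:", first_run, "| in resumed run:", second_run)
```

The resumed run evaluates only the one configuration that was missing from the log.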

Case Study: Hyperparameter Optimization for a Specific AI Model

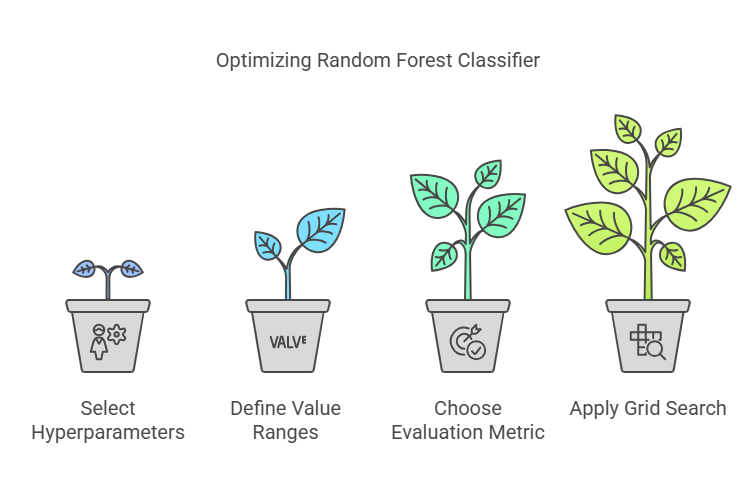

To illustrate the practical process of hyperparameter optimization, let’s walk through a step-by-step example of applying this technique to a Random Forest classifier on the Iris dataset.

Problem and Model Description

The goal is to build a model that classifies iris species as accurately as possible based on features such as petal and sepal length and width. We will use a Random Forest classifier, which has several key hyperparameters affecting its performance, including the number of trees (n_estimators) and the maximum tree depth (max_depth).

Step-by-Step Optimization Process

Selecting Hyperparameters to Optimize:

We will focus on n_estimators (number of trees) and max_depth (maximum tree depth).

Defining Value Ranges:

n_estimators: [50, 100, 200]

max_depth: [None, 5, 10]

Choosing the Evaluation Metric:

We will use accuracy as the main metric.

Applying Grid Search with Cross-Validation:

We will test all combinations of hyperparameters using 5-fold cross-validation.

Sample code (Python, scikit-learn):

python

from sklearn.datasets import load_iris

from sklearn.model_selection import GridSearchCV, train_test_split

from sklearn.ensemble import RandomForestClassifier

from sklearn.metrics import accuracy_score

# Load data

iris = load_iris()

X_train, X_test, y_train, y_test = train_test_split(

    iris.data, iris.target, test_size=0.2, random_state=42

)

# Define hyperparameter grid

param_grid = {

    'n_estimators': [50, 100, 200],

    'max_depth': [None, 5, 10]

}

# Grid Search with cross-validation

model = RandomForestClassifier(random_state=42)  # fixed seed so the search is reproducible

grid_search = GridSearchCV(model, param_grid, cv=5, scoring='accuracy')

grid_search.fit(X_train, y_train)

print("Best hyperparameters:", grid_search.best_params_)

# Evaluate on the test set

best_model = grid_search.best_estimator_

y_pred = best_model.predict(X_test)

print("Test set accuracy:", accuracy_score(y_test, y_pred))

Results and Conclusions

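After optimization, we obtain the hyperparameter combination with the highest mean cross-validated accuracy on the training data. The model with these settings is then evaluated once on the held-out test set to check its ability to generalize. Because the test set played no part in the search, this score is a more trustworthy estimate of real-world performance than the cross-validation score alone. On a dataset as small as Iris, differences between configurations can be within noise, so the result should be read as an illustration of the workflow rather than as proof that one setting is best.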

Challenges and Limitations of Hyperparameter Optimization

Hyperparameter optimization, although crucial for achieving high performance in AI models, comes with a number of challenges and limitations that should be considered when planning and executing projects.

Computational Costs

All of these techniques, from Grid Search and Random Search to Bayesian optimization, require repeatedly training the model for different combinations of hyperparameters. For large datasets or complex models (e.g., deep neural networks), this process can be very time-consuming and resource-intensive. In practice, it is often necessary to use computing clusters or the cloud, which generates additional costs.

Overfitting During Optimization

During intensive hyperparameter optimization, there is a risk that the model will become too closely fitted to the validation data used in the optimization process. This can lead to reduced effectiveness on new, unseen data. To prevent this, it is worth using additional techniques such as nested cross-validation or reserving a separate test set for the final model evaluation.
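The effect can be reproduced with a small simulation in plain Python. In this toy setup, which is an assumption made for illustration, all 50 configurations have exactly the same true accuracy of 0.80, so any difference in their validation scores is pure noise.

```python
import random

rng = random.Random(0)
TRUE_ACCURACY = 0.80  # every configuration is equally good in this toy setup
N_CONFIGS = 50
N_VALIDATION = 100
N_TEST = 10_000


def observed_accuracy(n_examples):
    """Accuracy measured on n_examples when the true accuracy is TRUE_ACCURACY."""
    correct = sum(rng.random() < TRUE_ACCURACY for _ in range(n_examples))
    return correct / n_examples


# Pick the configuration with the best score on a small validation set
validation_scores = [observed_accuracy(N_VALIDATION) for _ in range(N_CONFIGS)]
best_validation = max(validation_scores)

# Evaluate the "winner" on a large, untouched test set
test_score = observed_accuracy(N_TEST)
print("best validation score:", best_validation)
print("score on separate test set:", test_score)
```

The winning validation score lands clearly above 0.80 only because it is the maximum of many noisy measurements, while the separate test set gives a value close to the true accuracy. This is why the score used for selecting hyperparameters should not be reported as the final performance.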

Choosing the Right Technique

Not every optimization technique will work for every project. Grid Search is effective with a small number of hyperparameters but becomes impractical with larger spaces. Random Search is more flexible but may miss the best combinations. Advanced methods such as Bayesian optimization or evolutionary algorithms require additional knowledge and configuration.

The Future of Hyperparameter Optimization

Hyperparameter optimization is a rapidly evolving field, with increasingly advanced techniques and tools emerging. Automation of this process and the use of new algorithms allow for even more effective and faster discovery of optimal settings for AI models.

Automation and Transfer Learning

AutoML (Automated Machine Learning) tools are gaining popularity, automating not only hyperparameter selection but also feature selection, algorithm choice, and data preparation. Thanks to this, even people without advanced technical knowledge can build effective AI models.

Transfer learning is another trend: knowledge gained during hyperparameter optimization in one project, such as which value ranges worked well, can be reused to warm-start the search in another, similar task. This can shorten the search time and reduce computational costs.

New Algorithms and Tools

Increasingly advanced optimization approaches are emerging, such as meta-learning (learning how to learn), population-based optimization, and hybrid approaches combining different techniques. Modern tools like Optuna, Ray Tune, and Google Vizier offer support for automatic stopping of inefficient trials, distributed processing, and advanced result visualization.

Summary: Key Takeaways and Best Practices

Hyperparameter optimization is an essential part of building effective AI models, and it can significantly impact their performance, generalization ability, and practical usefulness. The right approach to this process not only leads to better results but also helps optimize the time and cost of working on a project.

Key takeaways and best practices:

Conscious selection of hyperparameters: Focus on the parameters that have the greatest impact on the model. It’s not worth optimizing everything at once—start with the most important ones.

Reasonable range setting: Set value ranges based on documentation, previous experiments, and domain knowledge. Ranges that are too wide prolong the search time, while those that are too narrow may prevent you from finding optimal settings.

Choosing the right technique: Match the optimization method to the number of hyperparameters, available resources, and the specifics of the problem. Grid Search works well with a small number of parameters, while Random Search and Bayesian methods are better for larger spaces.

Cross-validation: Use cross-validation to obtain a reliable assessment of model effectiveness and to avoid selecting settings that only suit one particular validation split.

Monitoring and documentation: Regularly monitor the optimization process, record results, and analyze which settings yield the best outcomes.

Caution against overfitting: Be careful not to overfit the model to the validation data. It’s a good idea to reserve a separate test set for the final evaluation.

Use of modern tools: Take advantage of libraries such as scikit-learn, Optuna, Ray Tune, or Keras Tuner, which automate and speed up the optimization process.

Additional Resources

Links to Articles, Courses, and Libraries

To deepen your knowledge of hyperparameter optimization and the practical use of this technique in AI projects, it’s worth reaching for proven educational sources, tools, and expert materials. Here are some selected, recommended resources:

Articles and Guides:

A Comprehensive Introduction to Different Types of Hyperparameter Optimization Techniques – an overview of the most important methods and their applications.

Hyperparameter tuning the random forest in scikit-learn – a practical step-by-step guide.

Optuna Documentation – official documentation for one of the most popular optimization tools.

Online Courses:

Coursera: Hyperparameter Tuning in Machine Learning – a course dedicated to practical hyperparameter optimization.

DeepLearning.AI: Machine Learning Engineering for Production (MLOps) – a course on deploying and optimizing AI models in production environments.

Tools and Libraries:

scikit-learn – documentation for GridSearchCV and RandomizedSearchCV.

Optuna – a modern library for automatic hyperparameter optimization.

Ray Tune – a tool for scalable optimization on clusters and in the cloud.

Keras Tuner – a tool for hyperparameter optimization in Keras/TensorFlow models.
