Introduction: The Role of AI in Modern Projects

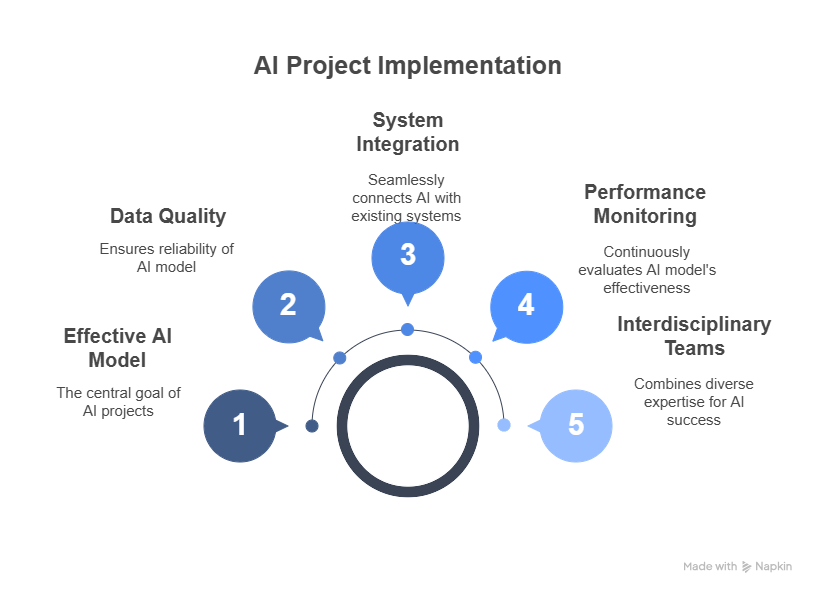

Artificial intelligence (AI) has become one of the key elements of digital transformation across many industries. Companies are increasingly using AI to automate processes, analyze large datasets, personalize services, and optimize costs. In practice, implementing AI is not just about building a model, but also about integrating it with existing systems, ensuring data quality, and monitoring its performance in a production environment.

Modern AI projects require the collaboration of interdisciplinary teams that combine expertise in programming, data analysis, project management, and industry-specific knowledge. This approach enables not only the creation of effective models but also their efficient deployment and maintenance.

Choosing the Right AI Model for the Task

A crucial stage of any AI project is selecting the model that best addresses the business needs and the characteristics of the data. This choice depends on several factors:

Type of problem: Is the task about classification, regression, image analysis, natural language processing, or anomaly detection?

Availability and quality of data: Deep learning models typically require large, well-labeled datasets, while simpler algorithms can work effectively with smaller datasets.

Interpretability requirements: In some industries (e.g., healthcare, finance), it is essential for the model to be understandable and explainable.

Computational resources: Deep learning models are usually more resource-intensive, in both training and inference, than traditional machine learning algorithms.

For example, text classification can be performed using simple models like Naive Bayes or advanced neural networks such as BERT. For image analysis, convolutional neural networks (CNNs) are popular, while time series forecasting often uses models like LSTM or Prophet.
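To make the simple end of that spectrum concrete, here is a minimal sketch of a Naive Bayes text classifier built with scikit-learn. The tiny corpus and the `spam`/`ham` labels are made up for illustration; a real project would need far more data.

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import make_pipeline

# Tiny made-up corpus, for illustration only
texts = [
    "win a free prize now",
    "free money claim now",
    "cheap prize win money",
    "meeting at noon tomorrow",
    "project report due tomorrow",
    "lunch meeting with the team",
]
labels = ["spam", "spam", "spam", "ham", "ham", "ham"]

# Bag-of-words counts followed by a multinomial Naive Bayes classifier
text_clf = make_pipeline(CountVectorizer(), MultinomialNB())
text_clf.fit(texts, labels)

predictions = text_clf.predict(["free prize money", "team meeting tomorrow"])
print(list(predictions))
```

A model like this trains in milliseconds and is easy to inspect, which makes it a useful baseline before investing in a larger neural network.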

Below is sample Python code that selects and trains a simple classification model using scikit-learn:

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score

# Load sample dataset
data = load_iris()
X = data.data
y = data.target

# Split into training and test sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Select and train the model
model = RandomForestClassifier(n_estimators=100, random_state=42)
model.fit(X_train, y_train)

# Evaluate the model
y_pred = model.predict(X_test)
accuracy = accuracy_score(y_test, y_pred)
print(f"Model accuracy: {accuracy:.2f}")
```

Data Preparation – The Key to Success

Data preparation is one of the most critical stages in any AI project. The quality, consistency, and relevance of the data directly impact the performance of the AI model. Even the most advanced algorithms will not deliver good results if the input data is incomplete, noisy, or poorly structured.

The data preparation process typically involves several steps. First, data collection is performed from various sources such as databases, APIs, or files. Next, data cleaning is necessary to remove duplicates, handle missing values, and correct errors. Feature engineering follows, where new variables are created or existing ones are transformed to better represent the underlying patterns in the data. Data normalization or standardization is often required, especially for algorithms sensitive to the scale of input features.

Another important aspect is splitting the dataset into training, validation, and test sets. This allows for objective evaluation of the model’s performance and helps prevent overfitting.

Here is sample Python code demonstrating basic data preparation steps using pandas and scikit-learn:

```python
import pandas as pd
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler

# Load data from CSV file (placeholder file name)
df = pd.read_csv('data.csv')

# Remove duplicates
df = df.drop_duplicates()

# Fill missing values in numeric columns with the column mean
# (numeric_only=True avoids an error when the frame also has text columns)
df = df.fillna(df.mean(numeric_only=True))

# Feature selection (example: select specific columns)
features = ['feature1', 'feature2', 'feature3']
X = df[features]
y = df['target']

# Split data into training and test sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Standardize features: fit the scaler on the training set only,
# then apply the same transformation to the test set
scaler = StandardScaler()
X_train = scaler.fit_transform(X_train)
X_test = scaler.transform(X_test)
```

Properly prepared data is the foundation for building robust and reliable AI models. Investing time in this stage significantly increases the chances of project success.
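The three-way split into training, validation, and test sets mentioned earlier can be sketched with two calls to `train_test_split`. The 60/20/20 proportions and the use of the Iris dataset are assumptions made for illustration, not a recommendation for every project.

```python
from sklearn.datasets import load_iris
from sklearn.model_selection import train_test_split

X, y = load_iris(return_X_y=True)

# First hold out 20% of the data as the final test set
X_temp, X_test, y_temp, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42, stratify=y
)

# Then split the remaining 80% into training (60% of the total)
# and validation (20% of the total): 0.25 * 0.8 = 0.2
X_train, X_val, y_train, y_val = train_test_split(
    X_temp, y_temp, test_size=0.25, random_state=42, stratify=y_temp
)

print(len(X_train), len(X_val), len(X_test))  # 90 30 30
```

The validation set is used for model selection and tuning, while the test set is touched only once, for the final performance estimate.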

Training and Validation of the Model

Once the data is ready, the next step is training the AI model. Model training involves feeding the algorithm with training data so it can learn the relationships between input features and the target variable. The choice of algorithm and its parameters depends on the problem type and data characteristics.

During training, it is essential to monitor the model’s performance not only on the training set but also on a separate validation set. This helps detect overfitting, where the model performs well on training data but poorly on new, unseen data. Common evaluation metrics include accuracy, precision, recall, and F1-score for classification tasks, and mean squared error (MSE) for regression.
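As a rough illustration of how these metrics are defined, the following sketch computes them by hand for a binary classification task and a small regression example. The function names and the sample values are hypothetical; in practice, `sklearn.metrics` provides tested implementations.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Compute accuracy, precision, recall and F1 for a binary task."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)

    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return {
        "accuracy": correct / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
    }


def mean_squared_error(y_true, y_pred):
    """Average of squared differences between targets and predictions."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


# Made-up labels: 3 true positives, 1 false positive, 1 false negative, 3 true negatives
metrics = classification_metrics([1, 0, 1, 1, 0, 1, 0, 0], [1, 0, 0, 1, 1, 1, 0, 0])
mse = mean_squared_error([3.0, 5.0, 2.0], [2.5, 5.0, 3.0])
print(metrics)
print(f"MSE: {mse:.4f}")
```

Accuracy alone can be misleading on imbalanced data, which is why precision and recall are usually reported alongside it.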

Cross-validation is a popular technique for robust model evaluation. It involves splitting the data into several folds and training the model multiple times, each time using a different fold as the validation set.

Below is an example of model training and validation using scikit-learn:

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import accuracy_score
from sklearn.model_selection import cross_val_score

# Assume X_train, X_test, y_train, y_test are already prepared

# Initialize the model
model = RandomForestClassifier(n_estimators=100, random_state=42)

# Cross-validation on the training data
# (cross_val_score fits a fresh clone of the model on each fold)
cv_scores = cross_val_score(model, X_train, y_train, cv=5)
print(f"Cross-validation accuracy: {cv_scores.mean():.2f}")

# Train the final model on the full training set
model.fit(X_train, y_train)

# Evaluate on the test set
y_pred = model.predict(X_test)
test_accuracy = accuracy_score(y_test, y_pred)
print(f"Test set accuracy: {test_accuracy:.2f}")
```
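To show what cross-validation does under the hood, the sketch below writes the fold loop out explicitly with `StratifiedKFold`. The dataset and the model settings are assumptions chosen to keep the example small.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import StratifiedKFold

X, y = load_iris(return_X_y=True)

# Five folds, each preserving the class proportions of the full dataset
skf = StratifiedKFold(n_splits=5, shuffle=True, random_state=42)

fold_scores = []
for fold, (train_idx, val_idx) in enumerate(skf.split(X, y), start=1):
    # A fresh model is trained for every fold
    model = RandomForestClassifier(n_estimators=50, random_state=42)
    model.fit(X[train_idx], y[train_idx])
    score = model.score(X[val_idx], y[val_idx])
    fold_scores.append(score)
    print(f"Fold {fold}: accuracy = {score:.2f}")

print(f"Mean accuracy: {np.mean(fold_scores):.2f} (std {np.std(fold_scores):.2f})")
```

Reporting the spread across folds, not just the mean, gives a sense of how sensitive the model is to the particular split.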

Model Optimization and Hyperparameter Tuning

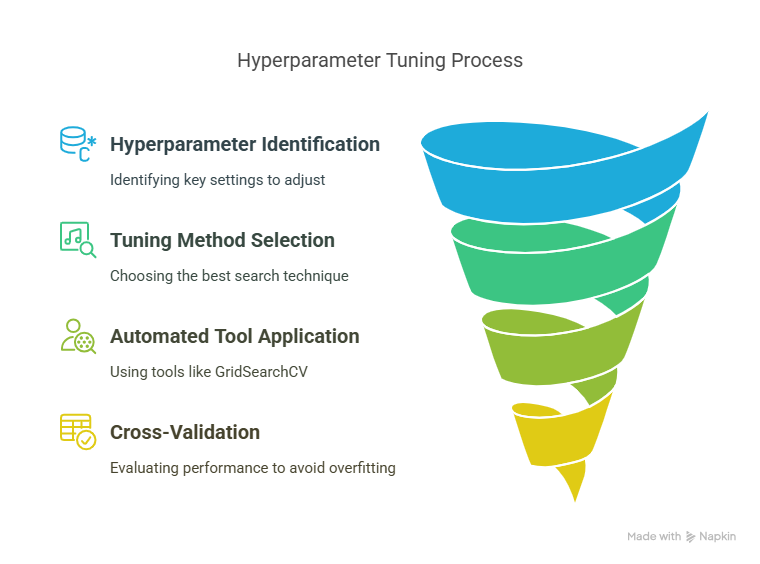

After initial training, most AI models can be significantly improved through optimization and hyperparameter tuning. Hyperparameters are settings that control the learning process and the structure of the model, such as the number of trees in a random forest, the learning rate in gradient boosting, or the number of layers in a neural network. Unlike model parameters, which are learned during training, hyperparameters must be set before the training process begins.
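The distinction between hyperparameters and learned parameters can be seen directly in scikit-learn. In this minimal sketch, `C` and `max_iter` are hyperparameters passed to the constructor, while `coef_` only exists after `fit()` has run; the chosen values are arbitrary.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression

X, y = load_iris(return_X_y=True)

# Hyperparameters: chosen by the practitioner before training
clf = LogisticRegression(C=0.5, max_iter=500)
hyperparams = clf.get_params()
print("C:", hyperparams["C"], "max_iter:", hyperparams["max_iter"])

# Learned parameters do not exist until the model has been fitted
fitted_before = hasattr(clf, "coef_")
clf.fit(X, y)
print("Had coefficients before fit:", fitted_before)
print("Coefficient matrix shape after fit:", clf.coef_.shape)
```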

Hyperparameter tuning involves systematically searching for the combination of settings that maximizes the model’s performance. The most common methods include grid search, random search, and more advanced techniques like Bayesian optimization. Grid search tests all possible combinations from a predefined set of values, while random search samples a fixed number of random combinations, often finding good results faster when the search space is large.

Automated tools such as scikit-learn’s GridSearchCV or RandomizedSearchCV make this process easier. These tools evaluate model performance for each hyperparameter combination using cross-validation, helping to avoid overfitting and select the most robust configuration.

Here is sample Python code demonstrating hyperparameter tuning with grid search:

```python
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

# Define the parameter grid
param_grid = {
    'n_estimators': [50, 100, 200],
    'max_depth': [None, 10, 20, 30],
    'min_samples_split': [2, 5, 10]
}

# Initialize the model
rf = RandomForestClassifier(random_state=42)

# Set up GridSearchCV
grid_search = GridSearchCV(estimator=rf, param_grid=param_grid, cv=5, scoring='accuracy')

# Fit to training data
grid_search.fit(X_train, y_train)

# Best parameters and score
print("Best parameters:", grid_search.best_params_)
print("Best cross-validation accuracy:", grid_search.best_score_)
```

Optimizing hyperparameters can lead to significant improvements in model accuracy, robustness, and generalization. It is a crucial step before moving to production deployment.
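For comparison with grid search, here is a minimal sketch of random search using `RandomizedSearchCV`. The parameter ranges, the number of sampled combinations (`n_iter=8`), and the Iris dataset are assumptions picked to keep the run short.

```python
from scipy.stats import randint
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import RandomizedSearchCV

X, y = load_iris(return_X_y=True)

# Distributions (or lists) to sample hyperparameter values from
param_distributions = {
    "n_estimators": randint(20, 100),     # integers from 20 to 99
    "max_depth": [None, 5, 10],
    "min_samples_split": randint(2, 11),  # integers from 2 to 10
}

random_search = RandomizedSearchCV(
    estimator=RandomForestClassifier(random_state=42),
    param_distributions=param_distributions,
    n_iter=8,          # number of random combinations to try
    cv=3,
    scoring="accuracy",
    random_state=42,
)
random_search.fit(X, y)

print("Best parameters:", random_search.best_params_)
print(f"Best cross-validation accuracy: {random_search.best_score_:.2f}")
```

Unlike grid search, the cost here is fixed by `n_iter` rather than by the size of the search space, which makes the budget easier to control.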

Testing the Model in a Production Environment

Before deploying an AI model to production, it is essential to thoroughly test it in an environment that closely resembles the real-world system. This stage ensures that the model not only performs well on historical data but also handles new, unseen data and integrates smoothly with existing workflows.

Pre-production testing involves several aspects. First, the model should be evaluated on a hold-out test set that was not used during training or validation. This provides an unbiased estimate of real-world performance. Next, it is important to monitor the model’s predictions for consistency, latency, and resource usage. In some cases, A/B testing or shadow deployment can be used, where the new model runs alongside the existing system to compare results without affecting end users.
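The idea behind shadow deployment can be sketched in a few lines: the existing model keeps serving users, while the candidate model's output is only logged for later comparison. The function names and the two threshold-based stand-in models below are hypothetical.

```python
def shadow_predict(primary_model, candidate_model, features, shadow_log):
    """Serve the primary model's prediction and record the candidate's."""
    served = primary_model(features)
    try:
        shadow = candidate_model(features)
    except Exception as exc:
        # A failing candidate must never affect the response sent to the user
        shadow = f"error: {exc}"
    shadow_log.append({"features": features, "served": served, "shadow": shadow})
    return served


def agreement_rate(shadow_log):
    """Fraction of requests where both models gave the same answer."""
    if not shadow_log:
        return 0.0
    matches = sum(1 for entry in shadow_log if entry["served"] == entry["shadow"])
    return matches / len(shadow_log)


# Hypothetical stand-in models: flag a transaction above a fixed amount
def current_model(x):
    return int(x["amount"] > 100)


def new_model(x):
    return int(x["amount"] > 80)


log = []
served = [
    shadow_predict(current_model, new_model, {"amount": amount}, log)
    for amount in (50, 90, 120, 200)
]
print("Served predictions:", served)
print("Agreement rate:", agreement_rate(log))
```

Requests where the two models disagree are usually the most informative ones to review before promoting the candidate.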

Another key aspect is monitoring for data drift and concept drift. Data drift occurs when the statistical properties of input data change over time, while concept drift refers to changes in the relationship between input and output variables. Both can degrade model performance and require regular retraining or updating of the model.
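One simple way to check a single numeric feature for data drift is a two-sample Kolmogorov-Smirnov test, available as `scipy.stats.ks_2samp`. This is a sketch under assumptions: the `detect_drift` helper, the 0.05 significance level, and the simulated data are illustrative, and the test says nothing about concept drift, which requires ground-truth labels.

```python
import numpy as np
from scipy.stats import ks_2samp


def detect_drift(reference, current, alpha=0.05):
    """Return True if the two samples likely come from different distributions."""
    result = ks_2samp(reference, current)
    return bool(result.pvalue < alpha)


rng = np.random.default_rng(42)
# Feature values observed at training time
reference = rng.normal(loc=0.0, scale=1.0, size=1000)
# Simulated production values whose mean has shifted by one standard deviation
shifted = rng.normal(loc=1.0, scale=1.0, size=1000)

print("Drift detected on shifted data:", detect_drift(reference, shifted))
```

In a real system a check like this would run per feature on a schedule, with the results feeding the alerting described later in this article.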

Here is a simple example of evaluating a model on a test set and monitoring prediction latency:

```python
import time

from sklearn.metrics import accuracy_score

# Assumes grid_search (fitted above), X_test and y_test are available

# Predict and measure latency
# (perf_counter is a monotonic clock intended for timing short intervals)
start_time = time.perf_counter()
y_pred = grid_search.predict(X_test)
latency = time.perf_counter() - start_time

# Evaluate accuracy
test_accuracy = accuracy_score(y_test, y_pred)
print(f"Test set accuracy: {test_accuracy:.2f}")
print(f"Prediction latency: {latency:.4f} seconds")
```

Deploying the Model: Tools and Platforms

Once the AI model has been trained, validated, and optimized, the next step is deployment—making the model available for use in real-world applications. Model deployment bridges the gap between data science and business value, allowing organizations to integrate AI into their products, services, or internal processes.

There are several approaches and tools for deploying AI models, depending on the use case and infrastructure. The most common deployment methods include:

REST API Services: Wrapping the model in a web service (using frameworks like Flask, FastAPI, or Django) allows other applications to send data and receive predictions via HTTP requests. This is a flexible and widely used approach.

Cloud Platforms: Major cloud providers such as AWS (SageMaker), Google Cloud (Vertex AI, the successor to AI Platform), and Microsoft Azure (Azure Machine Learning) offer managed services for deploying, scaling, and monitoring AI models. These platforms simplify deployment, provide scalability, and take over much of the security and maintenance work.

On-Premises Deployment: For organizations with strict data privacy or regulatory requirements, deploying models on local servers or edge devices may be necessary.

Containerization: Using Docker containers to package the model and its dependencies ensures consistency across different environments and simplifies scaling and orchestration (e.g., with Kubernetes).

Here is a simple example of deploying a trained model as a REST API using Flask in Python:

```python
import pickle

from flask import Flask, request, jsonify

# Load the trained model (only unpickle files from a trusted source)
with open('model.pkl', 'rb') as f:
    model = pickle.load(f)

app = Flask(__name__)


@app.route('/predict', methods=['POST'])
def predict():
    # Parse the request body as JSON regardless of the Content-Type header
    data = request.get_json(force=True)
    features = [data['feature1'], data['feature2'], data['feature3']]
    prediction = model.predict([features])
    return jsonify({'prediction': int(prediction[0])})


if __name__ == '__main__':
    # Development server only; never enable debug mode in production
    app.run(debug=True)
```

This example demonstrates how to expose a model as an API endpoint, making it accessible to other systems or applications.
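The Flask example loads a file named `model.pkl`. A minimal sketch of how such a file might be produced is shown below; the three-feature model trained on part of the Iris dataset is a stand-in, and the temporary directory is used only so that the example does not overwrite anything.

```python
import os
import pickle
import tempfile

from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier

X, y = load_iris(return_X_y=True)
X = X[:, :3]  # keep three features, standing in for feature1..feature3

model = RandomForestClassifier(n_estimators=50, random_state=42).fit(X, y)

# Serialize the trained model to disk
model_path = os.path.join(tempfile.mkdtemp(), "model.pkl")
with open(model_path, "wb") as f:
    pickle.dump(model, f)

# Load it back, as the API process would do at startup
with open(model_path, "rb") as f:
    restored = pickle.load(f)

same_predictions = bool((restored.predict(X) == model.predict(X)).all())
print("Restored model reproduces predictions:", same_predictions)
```

Pickle files are tied to the library versions that created them, so the serving environment should pin the same scikit-learn version used for training.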

Monitoring and Maintaining the Deployed Model

Deployment is not the end of the AI model’s lifecycle. Continuous monitoring and maintenance are essential to ensure the model remains accurate, reliable, and aligned with business objectives over time.

Key aspects of model monitoring include:

Performance Tracking: Regularly measure key metrics (such as accuracy, precision, recall, or latency) on new data to detect performance degradation.

Data and Concept Drift Detection: Monitor for changes in input data distribution (data drift) or the relationship between inputs and outputs (concept drift). These changes can negatively impact model predictions and require retraining or updating the model.

Logging and Alerting: Implement logging of predictions, errors, and system metrics. Set up alerts to notify the team of anomalies or failures.

Automated Retraining Pipelines: Use MLOps tools and practices to automate the retraining and redeployment of models when performance drops below a certain threshold.

Cloud platforms and MLOps frameworks (such as MLflow, Kubeflow, or TensorFlow Extended) provide built-in tools for monitoring, versioning, and managing the lifecycle of AI models.
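Performance tracking and alerting can be combined in a small rolling-window monitor. The sketch below is illustrative only: the `RollingAccuracyMonitor` class, the window size, and the 0.8 threshold are assumptions, and a production setup would typically rely on a dedicated monitoring stack.

```python
import logging
from collections import deque

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("model_monitor")


class RollingAccuracyMonitor:
    """Track accuracy over the most recent labeled predictions."""

    def __init__(self, window_size=100, threshold=0.8):
        self.outcomes = deque(maxlen=window_size)  # True where prediction was correct
        self.threshold = threshold

    def record(self, predicted, actual):
        self.outcomes.append(predicted == actual)

    def accuracy(self):
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self):
        # Only raise an alert once the window is full, to avoid noisy early alarms
        window_full = len(self.outcomes) == self.outcomes.maxlen
        acc = self.accuracy()
        if window_full and acc < self.threshold:
            logger.warning("Rolling accuracy %.2f is below threshold %.2f", acc, self.threshold)
            return True
        return False


monitor = RollingAccuracyMonitor(window_size=5, threshold=0.8)
# Made-up (predicted, actual) pairs: three correct, two wrong
for predicted, actual in [(1, 1), (0, 0), (1, 0), (1, 1), (0, 1)]:
    monitor.record(predicted, actual)

print("Rolling accuracy:", monitor.accuracy())
print("Retraining suggested:", monitor.needs_retraining())
```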

Here is a simple example of logging predictions and monitoring accuracy over time:

```python
import pandas as pd
from sklearn.metrics import accuracy_score

# Assumes `model` is a trained estimator, and X_new, y_new hold newly
# collected features together with their ground-truth labels

# Simulate logging predictions
predictions = model.predict(X_new)
results = pd.DataFrame({'actual': y_new, 'predicted': predictions})
results.to_csv('predictions_log.csv', index=False)

# Monitor accuracy
accuracy = accuracy_score(y_new, predictions)
print(f"Current accuracy: {accuracy:.2f}")

# If accuracy drops below threshold, trigger retraining
if accuracy < 0.8:
    print("Warning: Model accuracy below threshold. Consider retraining.")
```

Common Challenges and How to Overcome Them

Implementing AI models in real-world projects is a complex process that often comes with a variety of challenges. Understanding these obstacles and knowing how to address them is crucial for successful AI adoption.

One of the most common challenges is data quality. Incomplete, inconsistent, or biased data can lead to poor model performance and unreliable predictions. To mitigate this, organizations should invest in robust data collection, cleaning, and validation processes. Regular audits and the use of data versioning tools can help maintain high data standards.
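A lightweight data quality audit can be automated with pandas. The following sketch, with a hypothetical `data_quality_report` helper and made-up sample data, counts duplicate rows, missing values per column, and columns that carry no information because they hold a single value.

```python
import pandas as pd


def data_quality_report(df):
    """Summarize basic data quality indicators for a DataFrame."""
    return {
        "rows": len(df),
        "duplicate_rows": int(df.duplicated().sum()),
        "missing_per_column": {col: int(n) for col, n in df.isna().sum().items()},
        "constant_columns": [
            col for col in df.columns if df[col].nunique(dropna=True) <= 1
        ],
    }


# Made-up sample: one duplicated row, two missing values, one constant column
df = pd.DataFrame({
    "age": [34, 51, None, 34, 29],
    "income": [42000, 58000, 61000, 42000, None],
    "country": ["PL", "PL", "PL", "PL", "PL"],
})

report = data_quality_report(df)
print(report)
```

Running a report like this on every new data batch and comparing it with earlier batches is one inexpensive way to catch quality regressions early.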

Another significant challenge is model interpretability. In industries such as healthcare or finance, it is essential to understand and explain how a model arrives at its decisions. Using interpretable models, applying explainability techniques (like SHAP or LIME), and providing clear documentation can help build trust among stakeholders and meet regulatory requirements.
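SHAP and LIME are separate libraries; as a simpler, model-agnostic alternative that ships with scikit-learn, the sketch below uses permutation importance instead. It measures how much the test score drops when one feature's values are shuffled. The dataset and model settings are assumptions for illustration.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.inspection import permutation_importance
from sklearn.model_selection import train_test_split

data = load_iris()
X_train, X_test, y_train, y_test = train_test_split(
    data.data, data.target, test_size=0.3, random_state=42, stratify=data.target
)

model = RandomForestClassifier(n_estimators=100, random_state=42)
model.fit(X_train, y_train)

# Shuffle each feature 10 times and record the average drop in accuracy
result = permutation_importance(model, X_test, y_test, n_repeats=10, random_state=42)

ranking = sorted(
    zip(data.feature_names, result.importances_mean),
    key=lambda item: item[1],
    reverse=True,
)
for name, importance in ranking:
    print(f"{name}: {importance:.3f}")
```

Permutation importance gives a global view of which features matter; SHAP and LIME go further by explaining individual predictions.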

Scalability is also a frequent concern. As the volume of data and the number of users grow, models must be able to handle increased demand without sacrificing performance. Leveraging cloud infrastructure, containerization, and orchestration tools like Kubernetes can help scale AI solutions efficiently.

Integration with existing systems can be technically challenging, especially in organizations with legacy infrastructure. Adopting standard APIs, microservices architecture, and thorough testing can ease the integration process.

Finally, monitoring and maintenance are often underestimated. Models can degrade over time due to data drift or changing business requirements. Setting up automated monitoring, alerting, and retraining pipelines ensures that models remain accurate and relevant.

By proactively addressing these challenges, organizations can maximize the value of their AI initiatives and reduce the risk of project failure.

Examples of AI Deployments Across Industries

AI is transforming a wide range of industries, delivering tangible benefits and driving innovation. Here are a few real-world examples of successful AI deployments:

In healthcare, AI models are used for early disease detection, medical image analysis, and personalized treatment recommendations. For instance, deep learning algorithms can analyze X-rays or MRI scans to identify anomalies with high accuracy, supporting doctors in making faster and more accurate diagnoses.

In the financial sector, AI powers fraud detection systems, credit scoring, and algorithmic trading. Machine learning models can analyze transaction patterns in real time to flag suspicious activities, reducing financial losses and improving security.

Retail and e-commerce companies use AI for demand forecasting, personalized product recommendations, and inventory optimization. Recommendation engines analyze customer behavior and preferences to suggest relevant products, increasing sales and customer satisfaction.

In manufacturing, AI-driven predictive maintenance systems monitor equipment health and predict failures before they occur, minimizing downtime and reducing maintenance costs.

Transportation and logistics benefit from AI through route optimization, autonomous vehicles, and supply chain management. AI algorithms can optimize delivery routes based on real-time traffic data, improving efficiency and reducing fuel consumption.

These examples illustrate the versatility and impact of AI across different sectors. By learning from successful deployments, organizations can identify best practices and tailor AI solutions to their unique needs.

Summary and Best Practices

Successfully deploying artificial intelligence in real-world projects requires more than just technical expertise. It is a multidisciplinary effort that combines data science, software engineering, business understanding, and ongoing maintenance. To maximize the value of AI initiatives and ensure long-term success, it is essential to follow proven best practices at every stage of the project.

First and foremost, always start with a clear definition of the business problem and measurable objectives. Understanding the specific goals and constraints of the project helps guide model selection, data preparation, and evaluation metrics. Close collaboration between data scientists, domain experts, and business stakeholders ensures that the AI solution addresses real needs and delivers tangible value.

High-quality data is the foundation of any successful AI project. Invest time in thorough data collection, cleaning, and feature engineering. Regularly monitor data quality and be prepared to update datasets as business conditions change. Use data versioning tools to track changes and maintain reproducibility.

When building and training models, prioritize interpretability and transparency, especially in regulated industries. Use explainable AI techniques to make model decisions understandable to end users and stakeholders. Document all steps of the modeling process, including data sources, feature selection, and hyperparameter choices.

Testing and validation are critical before moving to production. Use cross-validation, hold-out test sets, and, if possible, real-world pilot deployments to ensure the model generalizes well to new data. Monitor for overfitting and adjust model complexity as needed.

For deployment, choose tools and platforms that fit your organization’s infrastructure and scalability needs. Containerization and cloud services can simplify deployment and scaling. Expose models via APIs to enable easy integration with other systems.

Ongoing monitoring and maintenance are essential for long-term success. Set up automated pipelines for performance tracking, drift detection, and retraining. Establish clear processes for logging, alerting, and incident response. Regularly review model performance and update models as data or business requirements evolve.

Finally, foster a culture of continuous learning and improvement. Encourage teams to stay up to date with the latest AI research, tools, and best practices. Share knowledge across the organization and celebrate successful AI deployments.

By following these best practices, organizations can build robust, reliable, and valuable AI solutions that drive innovation and support business growth.
