Introduction to Transfer Learning

What is Transfer Learning and Why is it Important?

Transfer learning is an artificial intelligence technique that involves leveraging knowledge gained by a model while solving one task to accelerate and improve learning in another, often related, task. Instead of training a model from scratch, we use pre-trained models that have been trained on large, general datasets such as ImageNet for images or Wikipedia for text.

The importance of transfer learning is growing alongside the development of AI, as it enables high performance even when we have a limited amount of our own data. Thanks to this, companies and research teams can implement advanced AI solutions more quickly, saving both time and computational resources.

Traditional Machine Learning vs. Transfer Learning

In traditional machine learning, each model is trained from the ground up on a specific dataset. This means that to achieve good results, we need a large amount of data and significant computational power. This process can be time-consuming and costly, especially for complex tasks such as image recognition or natural language processing.

Transfer learning changes this approach. Instead of starting from scratch, we use a model that has already "learned" to recognize general patterns and adapt it to our specific task. For example, a model trained to recognize thousands of image categories can be adapted to classify medical images, even if we only have a few hundred examples.

Benefits of Using Transfer Learning

Transfer learning offers a range of benefits that make it one of the most important techniques in modern artificial intelligence:

Time and resource savings – It shortens model training time and reduces the need for computational power.

Better results with small datasets – It enables high accuracy even when your own dataset is small.

Faster deployment – It allows for rapid prototyping and testing of new AI solutions.

Versatility – Pre-trained models can be used across various fields, from image analysis and natural language processing to audio recognition.

Reduced risk of overfitting – By leveraging knowledge from large datasets, models are less prone to overfitting on small, specific datasets.

Key Concepts of Transfer Learning

Domain and Task

In transfer learning, two fundamental concepts are domain and task.

A domain refers to a specific area of knowledge, characterized by a feature space and a marginal probability distribution. For example, the domain could be medical images, product reviews, or financial time series.

A task is the specific problem to be solved within a domain, such as classifying images, predicting sentiment, or forecasting stock prices.

Transfer learning typically involves a source domain and task (where the model was originally trained) and a target domain and task (where we want to apply the model). The goal is to transfer knowledge from the source to the target, even if the domains or tasks are not identical.
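These definitions have a compact formal version. The block below follows the notation of the survey by Pan and Yang ("A Survey on Transfer Learning", listed under Research Papers): 𝒳 is the feature space, P(X) the marginal distribution, 𝒴 the label space, and f the predictive function learned from training pairs.

```latex
\mathcal{D} = \{\mathcal{X},\; P(X)\}, \qquad X = \{x_1, \dots, x_n\},\; x_i \in \mathcal{X} \\
\mathcal{T} = \{\mathcal{Y},\; f(\cdot)\}, \qquad f(x) \approx P(y \mid x) \\
\text{Given } (\mathcal{D}_S, \mathcal{T}_S) \text{ and } (\mathcal{D}_T, \mathcal{T}_T)
\text{ with } \mathcal{D}_S \neq \mathcal{D}_T \text{ or } \mathcal{T}_S \neq \mathcal{T}_T, \\
\text{transfer learning aims to improve the target function } f_T
\text{ using knowledge from } \mathcal{D}_S \text{ and } \mathcal{T}_S.
```

In this notation, the inductive setting described below corresponds to T_S ≠ T_T, and the transductive setting to T_S = T_T with D_S ≠ D_T.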

Features and Models

Features are the measurable properties or characteristics used as input for machine learning models. In transfer learning, the features learned by a model on a large, general dataset (such as edges and shapes in images, or word embeddings in text) can be reused for a new, related task. This is possible because many low-level features are universal and useful across different problems.

Models in transfer learning are typically deep neural networks that have been pre-trained on massive datasets. These models have already learned to extract meaningful features from data, which can be adapted to new tasks with minimal additional training. Examples include convolutional neural networks (CNNs) for images and transformer-based models (like BERT or GPT) for text.

Types of Transfer Learning: Inductive, Transductive, Unsupervised

There are several types of transfer learning, depending on the relationship between the source and target domains and tasks:

Inductive Transfer Learning: The source and target tasks are different, but the domains may be the same or different. The main goal is to improve performance on the target task using knowledge from the source task. Fine-tuning a pre-trained image classifier for a specific medical diagnosis is an example of inductive transfer learning.

Transductive Transfer Learning: The source and target tasks are the same, but the domains are different, and labeled data is typically available only in the source domain. For instance, a sentiment analysis model trained on English reviews (source domain) is adapted to work on French reviews (target domain) without changing the task.

Unsupervised Transfer Learning: Both the source and target tasks are unsupervised (e.g., clustering or dimensionality reduction), and knowledge is transferred to improve performance on the target task.

Transfer Learning Techniques

Fine-tuning

Fine-tuning is one of the most popular and effective transfer learning techniques. It involves taking a pre-trained model and continuing its training on a new, typically smaller dataset that is specific to your task. During fine-tuning, you usually start by freezing the early layers of the model (which have learned general features) and only train the later layers (which learn more task-specific features). In some cases, you may gradually unfreeze more layers and retrain the entire model with a lower learning rate.

Fine-tuning is widely used in computer vision and natural language processing. For example, you can take a convolutional neural network (CNN) pre-trained on ImageNet and fine-tune it to classify medical images, or use a language model like BERT and fine-tune it for sentiment analysis.
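The freeze-then-unfreeze schedule described above can be sketched without any deep-learning framework. This is a toy illustration under stated assumptions: a one-hidden-layer network whose first layer (`W1`, `b1`) stands in for a pre-trained backbone (its weights here are random, not actually pre-trained), a new head (`W2`, `b2`), and a synthetic three-class target dataset. All names are hypothetical. A parameter is "frozen" simply by never updating it.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for a pre-trained backbone (W1, b1). In a real project these
# weights would come from training on a large source dataset; here they are random.
params = {
    "W1": rng.normal(scale=0.5, size=(8, 16)),  # backbone: 8 inputs -> 16 features
    "b1": np.zeros(16),
    "W2": rng.normal(scale=0.1, size=(16, 3)),  # new head: 16 features -> 3 classes
    "b2": np.zeros(3),
}

# Small synthetic target dataset (placeholder for your own labeled data).
X = rng.normal(size=(60, 8))
Y = np.eye(3)[rng.integers(0, 3, size=60)]  # one-hot labels


def loss_and_grads(params, X, Y):
    """Softmax cross-entropy loss and its gradients for a 1-hidden-layer ReLU net."""
    h = np.maximum(0.0, X @ params["W1"] + params["b1"])
    logits = h @ params["W2"] + params["b2"]
    logits -= logits.max(axis=1, keepdims=True)  # numerical stability
    p = np.exp(logits)
    p /= p.sum(axis=1, keepdims=True)
    n = X.shape[0]
    loss = -np.sum(Y * np.log(p + 1e-12)) / n
    d_logits = (p - Y) / n
    d_h = (d_logits @ params["W2"].T) * (h > 0)  # backpropagate through the ReLU
    grads = {
        "W2": h.T @ d_logits, "b2": d_logits.sum(axis=0),
        "W1": X.T @ d_h, "b1": d_h.sum(axis=0),
    }
    return loss, grads


def train(params, X, Y, lrs, steps):
    """Gradient descent; any parameter not listed in `lrs` is frozen (never updated)."""
    for _ in range(steps):
        _, grads = loss_and_grads(params, X, Y)
        for name, lr in lrs.items():
            params[name] -= lr * grads[name]
    return loss_and_grads(params, X, Y)[0]


initial_loss = loss_and_grads(params, X, Y)[0]
backbone_before = params["W1"].copy()

# Stage 1: backbone frozen, only the new head is trained.
stage1_loss = train(params, X, Y, {"W2": 0.05, "b2": 0.05}, steps=200)
backbone_after_stage1 = params["W1"].copy()

# Stage 2: unfreeze everything and continue with a 10x lower learning rate.
stage2_loss = train(params, X, Y, {k: 0.005 for k in params}, steps=200)

print(f"initial {initial_loss:.3f} -> head only {stage1_loss:.3f} -> full {stage2_loss:.3f}")
```

In Keras, the same effect comes from setting `trainable = False` on the frozen layers and recompiling the model after any change; in PyTorch, from setting `requires_grad = False` on the backbone's parameters or leaving them out of the optimizer.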

Example in Python (using Keras for image classification):

```python
from tensorflow.keras.applications import VGG16
from tensorflow.keras.models import Model
from tensorflow.keras.layers import Dense, Flatten
from tensorflow.keras.optimizers import Adam

# Load the pre-trained model without its top (classification) layers
base_model = VGG16(weights='imagenet', include_top=False, input_shape=(224, 224, 3))

# Freeze the base model layers so that only the new head is trained at first
for layer in base_model.layers:
    layer.trainable = False

# Add a custom classification head (2 classes)
x = Flatten()(base_model.output)
x = Dense(128, activation='relu')(x)
output = Dense(2, activation='softmax')(x)

model = Model(inputs=base_model.input, outputs=output)

# Compile the model; categorical_crossentropy expects one-hot encoded labels
model.compile(optimizer=Adam(learning_rate=0.0001), loss='categorical_crossentropy', metrics=['accuracy'])

# Inputs should be preprocessed with tensorflow.keras.applications.vgg16.preprocess_input
# model.fit(X_train, y_train, epochs=5, validation_data=(X_val, y_val))
```

Feature Extraction

Feature extraction is another common transfer learning technique. Instead of retraining the entire model, you use the pre-trained model as a fixed feature extractor. You pass your data through the model and use the output from one of the intermediate layers as input features for a new, simpler model (such as a logistic regression or a small neural network).

This approach is especially useful when you have very little data for your specific task, as it avoids overfitting and leverages the rich representations learned by the pre-trained model.
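As a framework-free illustration of the idea, the sketch below uses scikit-learn's `PCA` as a stand-in for a pre-trained feature extractor. It is fitted once on a larger, hypothetical "source" dataset and afterwards only applied (never re-fitted) to a small labeled "target" dataset, on which a logistic regression is trained. The synthetic data and the choice of PCA are assumptions made for illustration; with a real CNN, the extractor would be the network with its classification layer removed.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.linear_model import LogisticRegression

rng = np.random.default_rng(42)
mixing = rng.normal(size=(5, 50))  # synthetic structure shared by source and target data

# Hypothetical large "source" dataset: 50-dimensional data driven by 5 latent factors.
source_X = rng.normal(size=(2000, 5)) @ mixing + 0.1 * rng.normal(size=(2000, 50))

# "Pre-train" the feature extractor once on the source data, then treat it as frozen.
extractor = PCA(n_components=5, random_state=0).fit(source_X)
frozen_components = extractor.components_.copy()

# Small labeled "target" dataset whose label depends on the first latent factor.
target_latent = rng.normal(size=(80, 5))
target_X = target_latent @ mixing + 0.1 * rng.normal(size=(80, 50))
target_y = (target_latent[:, 0] > 0).astype(int)

# Feature extraction: only transform() is called on the target data, never fit().
target_features = extractor.transform(target_X)

# Train a simple classifier on top of the extracted features.
clf = LogisticRegression(max_iter=1000).fit(target_features, target_y)
print("training accuracy:", clf.score(target_features, target_y))
```

Only the small classifier is trained on the target data, which is why this approach is less prone to overfitting than updating the whole model.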

Example in Python (using PyTorch for image feature extraction):

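```python
import torch
import torchvision.models as models
import torchvision.transforms as transforms
from PIL import Image

# Load a ResNet50 pre-trained on ImageNet (weights API of torchvision >= 0.13)
resnet = models.resnet50(weights=models.ResNet50_Weights.IMAGENET1K_V1)
resnet.eval()

# Remove the final classification (fully connected) layer
feature_extractor = torch.nn.Sequential(*list(resnet.children())[:-1])

# Prepare the image: resize, crop, convert to a tensor, normalize with ImageNet statistics
img = Image.open('example.jpg').convert('RGB')
preprocess = transforms.Compose([
    transforms.Resize(256),
    transforms.CenterCrop(224),
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
])
img_tensor = preprocess(img).unsqueeze(0)  # add a batch dimension

# Extract features without tracking gradients
with torch.no_grad():
    features = feature_extractor(img_tensor)

print(features.shape)  # torch.Size([1, 2048, 1, 1])
```

Model Repurposing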

Model repurposing refers to using a pre-trained model for a task that is different from its original purpose, sometimes with minimal or no retraining. This is possible when the source and target tasks share similar data structures or underlying patterns. For example, a model trained for object detection in street scenes might be repurposed for detecting anomalies in industrial images.

Repurposing can also involve combining parts of different pre-trained models or using them as building blocks in larger AI systems. This approach accelerates development and allows for creative solutions to new problems.

Choosing the Right Pre-trained Model

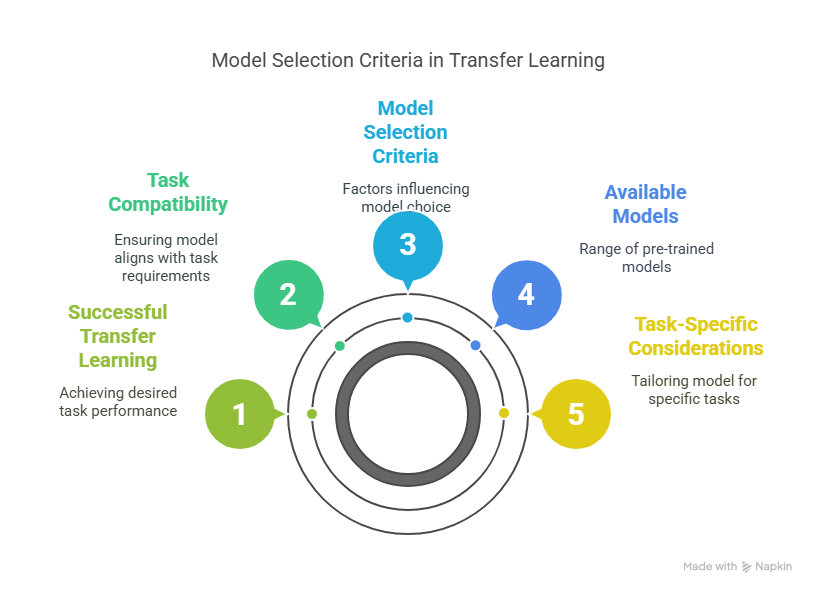

Criteria for Model Selection

Selecting the right pre-trained model is a crucial step in transfer learning. The choice depends on several factors, including the similarity between your target task and the original task the model was trained on, the size and quality of your dataset, computational resources, and the required level of interpretability.

Key criteria to consider include:

Domain Similarity: The closer your data is to the data used for pre-training, the more likely the pre-trained features are to help after transfer. For example, if you are working with natural images, models pre-trained on ImageNet are a good choice.

Model Architecture: Some architectures are better suited for certain tasks. For instance, convolutional neural networks (CNNs) excel in image processing, while transformer-based models are state-of-the-art for natural language processing.

Size and Complexity: Larger models often achieve higher accuracy but require more computational power and memory. Choose a model that fits your hardware and latency requirements.

Community Support and Documentation: Well-documented models with active community support are easier to implement and troubleshoot.

Licensing and Usage Restrictions: Ensure the model’s license allows for your intended use, especially in commercial projects.
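A quick back-of-the-envelope calculation helps with the size and complexity criterion. The sketch below assumes 32-bit floats (4 bytes per parameter) and the common rule of thumb that full fine-tuning with the Adam optimizer keeps roughly four copies of the parameters in memory (weights, gradients, and two moment estimates). Activation memory, which grows with batch size and input resolution, comes on top and is not modeled. The function names are hypothetical, and the parameter counts are approximate published figures.

```python
def weight_memory_mb(num_params, bytes_per_param=4):
    """Memory for the weights alone, in megabytes (float32 = 4 bytes per parameter)."""
    return num_params * bytes_per_param / 1e6


def adam_finetune_memory_mb(num_params, bytes_per_param=4):
    """Weights + gradients + Adam's two moment buffers; activations are NOT included."""
    return 4 * weight_memory_mb(num_params, bytes_per_param)


# Approximate published parameter counts.
for name, n_params in [("ResNet-50", 25.6e6), ("BERT-base", 110e6)]:
    print(f"{name}: ~{weight_memory_mb(n_params):.0f} MB of weights, "
          f"~{adam_finetune_memory_mb(n_params):.0f} MB to fine-tune with Adam (plus activations)")
```

Passing `bytes_per_param=2` gives a rough figure for 16-bit weights.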

Available Pre-trained Models (ImageNet, BERT, GPT)

There is a wide range of pre-trained models available for different domains and tasks. Some of the most popular include:

ImageNet Models: Models like VGG, ResNet, Inception, and EfficientNet are pre-trained on ImageNet, most commonly on its ILSVRC subset of about 1.2 million labeled images in 1,000 categories. These models are widely used for image classification and feature extraction, and as backbones for object detection.

BERT (Bidirectional Encoder Representations from Transformers): BERT is a transformer-based model pre-trained on large text corpora (English Wikipedia and BooksCorpus). When it was released in 2018, it set new state-of-the-art results on natural language understanding tasks such as question answering, sentiment analysis, and text classification.

GPT (Generative Pre-trained Transformer): GPT models are designed for natural language generation and understanding. They are pre-trained on massive text datasets and can be fine-tuned for tasks like text summarization, translation, and chatbot development.

Other Models: There are also pre-trained models for audio processing (e.g., Wav2Vec), time series analysis, and more specialized domains.

Most of these models are available through popular machine learning libraries such as TensorFlow, PyTorch, and Hugging Face Transformers, making them easy to download and integrate.

Model Compatibility with the Task

Ensuring compatibility between the pre-trained model and your specific task is essential for successful transfer learning. Consider the following:

Input Data Format: The model’s expected input (e.g., image size, text tokenization) should match your data. You may need to preprocess your data accordingly.

Output Layer: You might need to modify or replace the output layer to match the number of classes or the type of prediction required for your task.

Fine-tuning vs. Feature Extraction: Decide whether you will fine-tune the entire model or use it as a fixed feature extractor, based on the amount of available data and the complexity of your task.

Evaluation Metrics: Use appropriate metrics to assess the model’s performance after transfer, ensuring it meets your project’s goals.
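To make the input-format point concrete, here is a minimal NumPy sketch of the preprocessing that torchvision's ImageNet-pretrained models document: scale pixels to [0, 1], standardize each RGB channel with the ImageNet mean and standard deviation, and reorder to channels-first. It assumes an already decoded and resized H x W x 3 `uint8` RGB image; the helper names are hypothetical. Other frameworks use other conventions (for example, Keras's `mobilenet_v2.preprocess_input` scales pixels to [-1, 1]), so always check the convention of the specific model you use.

```python
import numpy as np

# Per-channel mean/std documented for torchvision's ImageNet-pretrained models (RGB order).
IMAGENET_MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
IMAGENET_STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)


def center_crop(image, size=224):
    """Cut a size x size square from the middle of an H x W x C image."""
    h, w = image.shape[:2]
    if h < size or w < size:
        raise ValueError(f"image {h}x{w} is smaller than the {size}x{size} crop")
    top, left = (h - size) // 2, (w - size) // 2
    return image[top:top + size, left:left + size]


def to_model_input(image_uint8):
    """Turn an H x W x 3 uint8 RGB image into a 1 x 3 x H x W float32 batch."""
    if image_uint8.ndim != 3 or image_uint8.shape[2] != 3:
        raise ValueError("expected an H x W x 3 RGB image")
    x = image_uint8.astype(np.float32) / 255.0   # scale pixels to [0, 1]
    x = (x - IMAGENET_MEAN) / IMAGENET_STD       # standardize each channel
    x = np.transpose(x, (2, 0, 1))               # HWC -> CHW, the layout PyTorch expects
    return x[np.newaxis]                         # add a batch dimension


image = np.full((256, 320, 3), 128, dtype=np.uint8)  # placeholder for a real decoded image
batch = to_model_input(center_crop(image))
print(batch.shape)  # (1, 3, 224, 224)
```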

Example: Adapting a Pre-trained ImageNet Model for a Custom Task in Keras

```python
from tensorflow.keras.applications import ResNet50
from tensorflow.keras.models import Model
from tensorflow.keras.layers import Dense, GlobalAveragePooling2D

# Load pre-trained ResNet50 without the top layer
base_model = ResNet50(weights='imagenet', include_top=False, input_shape=(224, 224, 3))

# Add a custom output layer for a new classification task (e.g., 3 classes)
x = GlobalAveragePooling2D()(base_model.output)
output = Dense(3, activation='softmax')(x)

model = Model(inputs=base_model.input, outputs=output)

# Now you can fine-tune the whole model, or set base_model.trainable = False
# (before compiling) to use the base as a fixed feature extractor.
# Inputs should be preprocessed with tensorflow.keras.applications.resnet50.preprocess_input
```

By carefully selecting and adapting a pre-trained model, you can accelerate development, improve performance, and make the most of transfer learning for your specific application.

Practical Examples of Transfer Learning Applications

Image Processing (Image Classification, Object Detection)

Transfer learning has revolutionized image processing tasks, making it possible to achieve high accuracy even with limited data. In image classification, pre-trained convolutional neural networks (CNNs) such as ResNet, VGG, or EfficientNet, trained on large datasets like ImageNet, can be fine-tuned for specific tasks—such as classifying medical images, identifying plant species, or detecting product defects.

For object detection, models like YOLO (You Only Look Once) or Faster R-CNN, pre-trained on general datasets, can be adapted to detect custom objects in specialized environments, such as industrial quality control or wildlife monitoring.

Example: Fine-tuning a pre-trained CNN for image classification in Keras

```python
from tensorflow.keras.applications import EfficientNetB0
from tensorflow.keras.models import Model
from tensorflow.keras.layers import Dense, GlobalAveragePooling2D

# Load pre-trained EfficientNetB0 without the top layer.
# Keras EfficientNet models include their own input rescaling and expect pixel values in [0, 255].
base_model = EfficientNetB0(weights='imagenet', include_top=False, input_shape=(224, 224, 3))

# Add a custom classification head
x = GlobalAveragePooling2D()(base_model.output)
output = Dense(5, activation='softmax')(x)  # for 5 classes

model = Model(inputs=base_model.input, outputs=output)

# Compile and train the model as usual
```

Natural Language Processing (Text Classification, Sentiment Analysis)

In natural language processing (NLP), transfer learning has become the standard approach for tasks such as text classification, sentiment analysis, and question answering. Pre-trained transformer models like BERT, RoBERTa, or GPT can be fine-tuned on domain-specific datasets, enabling high performance even with relatively small amounts of labeled data.

For example, a BERT model pre-trained on general English text can be fine-tuned to classify customer reviews as positive or negative, or to detect spam in emails.

Example: Fine-tuning BERT for sentiment analysis using Hugging Face Transformers

```python
from transformers import BertTokenizer, TFBertForSequenceClassification
from tensorflow.keras.optimizers import Adam
from tensorflow.keras.losses import SparseCategoricalCrossentropy

tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')
model = TFBertForSequenceClassification.from_pretrained('bert-base-uncased', num_labels=2)

# Tokenize your text data and prepare input tensors
# inputs = tokenizer(texts, padding=True, truncation=True, return_tensors="tf")

# The model outputs raw logits (one per label), so use a from_logits loss
# with integer labels (0 = negative, 1 = positive)
model.compile(
    optimizer=Adam(learning_rate=2e-5),
    loss=SparseCategoricalCrossentropy(from_logits=True),
    metrics=['accuracy'],
)

# model.fit(dict(inputs), labels, epochs=3, batch_size=16)
```

Other Domains (Healthcare, Finance)

Transfer learning is also making a significant impact in specialized domains such as healthcare and finance. In healthcare, pre-trained models are used for tasks like disease diagnosis from medical images, patient risk prediction, and automated analysis of electronic health records. For example, a CNN pre-trained on general images can be fine-tuned to detect tumors in X-ray or MRI scans, even with a limited number of labeled medical images.

In finance, transfer learning is applied to tasks such as fraud detection, credit scoring, and algorithmic trading. Pre-trained models can be adapted to recognize patterns in transaction data or to forecast market movements, in some cases outperforming traditional statistical methods.

Example: Using a pre-trained model for anomaly detection in financial transactions

```python
import numpy as np
from sklearn.ensemble import IsolationForest

# Assume features have been extracted using a pre-trained model
# features = ...
# Placeholder so the snippet runs: random values stand in for the real extracted features
features = np.random.default_rng(0).normal(size=(1000, 16))

# Train an Isolation Forest for anomaly detection (expecting about 1% anomalies)
model = IsolationForest(contamination=0.01, random_state=0)
model.fit(features)

# Predict anomalies: -1 marks an anomaly, 1 marks a normal transaction
anomalies = model.predict(features)
```

Transfer learning enables rapid development and high performance across a wide range of applications, from image and text analysis to specialized fields like healthcare and finance. By leveraging pre-trained models and adapting them to your specific needs, you can often achieve strong results with less data, time, and computational resources.

Implementing Transfer Learning in Python

Using TensorFlow, Keras, and PyTorch

Python is the most popular language for machine learning and deep learning, offering powerful libraries that make transfer learning accessible and efficient. The three most widely used frameworks—TensorFlow, Keras, and PyTorch—provide built-in support for loading pre-trained models, modifying architectures, and fine-tuning on custom datasets.

TensorFlow/Keras: Keras, TensorFlow's high-level API (available as tf.keras), offers a simple interface for building and training neural networks; since Keras 3 it can also run on JAX and PyTorch backends. It includes a variety of pre-trained models in keras.applications (e.g., VGG, ResNet, EfficientNet) that can be loaded and customized for transfer learning tasks.

PyTorch: PyTorch is a flexible and widely adopted deep learning framework, especially popular in research. Pre-trained models are available through libraries such as torchvision and Hugging Face Transformers, making it straightforward to implement both feature extraction and fine-tuning.

Code Examples for Fine-tuning and Feature Extraction

Below are practical code examples demonstrating how to implement transfer learning using both Keras (TensorFlow) and PyTorch.

Fine-tuning a Pre-trained Model in Keras (Image Classification):

```python
from tensorflow.keras.applications import MobileNetV2
from tensorflow.keras.models import Model
from tensorflow.keras.layers import Dense, GlobalAveragePooling2D
from tensorflow.keras.optimizers import Adam

# Load pre-trained MobileNetV2 without the top layer
base_model = MobileNetV2(weights='imagenet', include_top=False, input_shape=(224, 224, 3))

# Freeze base model layers
for layer in base_model.layers:
    layer.trainable = False

# Add custom classification head
x = GlobalAveragePooling2D()(base_model.output)
output = Dense(3, activation='softmax')(x)  # for 3 classes

model = Model(inputs=base_model.input, outputs=output)

# Compile and train the model (categorical_crossentropy expects one-hot labels;
# preprocess inputs with tensorflow.keras.applications.mobilenet_v2.preprocess_input)
model.compile(optimizer=Adam(learning_rate=0.0001), loss='categorical_crossentropy', metrics=['accuracy'])

# model.fit(X_train, y_train, epochs=5, validation_data=(X_val, y_val))
```

Feature Extraction with PyTorch (Image Features):

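```python
import torch
import torchvision.models as models
import torchvision.transforms as transforms
from PIL import Image

# Load a ResNet50 pre-trained on ImageNet (weights API of torchvision >= 0.13)
resnet = models.resnet50(weights=models.ResNet50_Weights.IMAGENET1K_V1)
resnet.eval()

# Remove the final classification (fully connected) layer
feature_extractor = torch.nn.Sequential(*list(resnet.children())[:-1])

# Prepare the image: resize, crop, convert to a tensor, normalize with ImageNet statistics
img = Image.open('example.jpg').convert('RGB')
preprocess = transforms.Compose([
    transforms.Resize(256),
    transforms.CenterCrop(224),
    transforms.ToTensor(),
    transforms.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
])
img_tensor = preprocess(img).unsqueeze(0)  # add a batch dimension

# Extract features without tracking gradients
with torch.no_grad():
    features = feature_extractor(img_tensor)

print(features.shape)  # torch.Size([1, 2048, 1, 1])
```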

Fine-tuning BERT for Text Classification with Hugging Face Transformers:

```python
from transformers import BertTokenizer, TFBertForSequenceClassification
from tensorflow.keras.optimizers import Adam
from tensorflow.keras.losses import SparseCategoricalCrossentropy

tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')
model = TFBertForSequenceClassification.from_pretrained('bert-base-uncased', num_labels=2)

# Tokenize your text data and prepare input tensors
# inputs = tokenizer(texts, padding=True, truncation=True, return_tensors="tf")

# The model outputs raw logits (one per label), so use a from_logits loss
# with integer labels (0 and 1)
model.compile(
    optimizer=Adam(learning_rate=2e-5),
    loss=SparseCategoricalCrossentropy(from_logits=True),
    metrics=['accuracy'],
)

# model.fit(dict(inputs), labels, epochs=3, batch_size=16)
```

Tips for Effective Implementation

Start with a suitable pre-trained model: Choose a model that matches your data type and task (e.g., CNNs for images, transformers for text).

Freeze and unfreeze layers strategically: Begin by freezing most layers and only training the final layers. Gradually unfreeze more layers if you have enough data.

Use appropriate data preprocessing: Ensure your input data matches the format expected by the pre-trained model (e.g., image size, normalization, tokenization).

Monitor for overfitting: Especially with small datasets, use regularization techniques and monitor validation performance.

Leverage available libraries: Use TensorFlow Hub, PyTorch Hub, or Hugging Face Transformers to access a wide range of pre-trained models and utilities.
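A validation-based stopping rule is one simple way to act on the overfitting advice above. Below is a minimal, framework-agnostic sketch; the class name and the `patience`/`min_delta` parameters are our own choices for illustration, and the validation losses are made-up numbers.

```python
class EarlyStopping:
    """Signal a stop when the validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=3, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.best_epoch = None
        self.bad_epochs = 0

    def step(self, epoch, val_loss):
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss
            self.best_epoch = epoch
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


stopper = EarlyStopping(patience=3)
val_losses = [0.90, 0.70, 0.62, 0.63, 0.64, 0.65, 0.50]  # made-up numbers
for epoch, loss in enumerate(val_losses):
    if stopper.step(epoch, loss):
        break
print(epoch, stopper.best_epoch)  # 5 2
```

Training stops at epoch 5 after three epochs without improvement, and the weights from epoch 2 are the ones worth keeping. Keras ships an equivalent callback, `tf.keras.callbacks.EarlyStopping(monitor="val_loss", patience=3, restore_best_weights=True)`.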

Challenges and Limitations of Transfer Learning

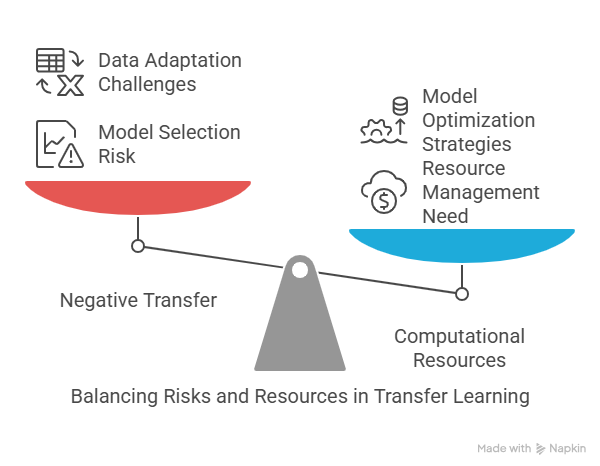

Negative Transfer

One of the main risks in transfer learning is negative transfer. This occurs when the knowledge transferred from the source model harms performance on the target task, so that the transferred model does worse than one trained from scratch. Negative transfer is most likely when the source and target domains or tasks differ substantially. For example, features learned from natural photographs do not always carry over well to medical X-rays, even though ImageNet models are often a useful starting point in medical imaging; whether they help has to be checked on validation data. When the pre-trained features are not relevant to the target task, results can be poor.

To minimize the risk of negative transfer, it’s important to:

Carefully select a pre-trained model that is as close as possible to your target domain and task.

Experiment with different models and monitor performance on validation data.

Consider using only the early layers of a pre-trained model (which learn more general features) and retrain the later layers from scratch.
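One practical way to follow this advice is to always score the transferred model against a simple baseline that uses no pre-trained representation, on the same validation folds. The helper below is a minimal sketch of that comparison, assuming both setups can be evaluated with scikit-learn's `cross_val_score` and a logistic regression; `transfer_gain` is a hypothetical name, and the synthetic "mismatched" features simulate a representation that misses the signal the target task needs.

```python
import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score


def transfer_gain(transfer_features, baseline_features, y, cv=5):
    """Mean CV accuracy with transferred features minus that of the baseline.

    A clearly negative value is a warning sign of negative transfer on this dataset.
    """
    model = LogisticRegression(max_iter=1000)
    transfer = cross_val_score(model, transfer_features, y, cv=cv).mean()
    baseline = cross_val_score(model, baseline_features, y, cv=cv).mean()
    return transfer - baseline


# Synthetic illustration: the label depends on raw feature 0, but the hypothetical
# "transferred" representation only keeps features 5-9, so it misses the signal.
rng = np.random.default_rng(0)
raw = rng.normal(size=(200, 10))
y = (raw[:, 0] > 0).astype(int)
mismatched = raw[:, 5:]

print(f"gain: {transfer_gain(mismatched, raw, y):+.2f}")
```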

Adapting the Model to Specific Data

Even when the source and target domains are similar, adapting a pre-trained model to your specific data can be challenging. Issues may include:

Data format mismatches: The input size, data type, or preprocessing steps required by the pre-trained model may differ from your dataset.

Label differences: The number and type of output classes may not match, requiring modification of the model’s output layer.

Domain-specific features: Your data may contain unique patterns or features not present in the original training data, necessitating additional feature engineering or fine-tuning.

Best practices for adaptation include:

Preprocess your data to match the input requirements of the pre-trained model (e.g., resizing images, normalizing pixel values, tokenizing text).

Replace or modify the output layer to fit your classification or regression task.

Use domain-specific data for fine-tuning, even if the dataset is small.
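When the target labels differ from the source task, the size of the new output layer follows from the number of target classes. A minimal sketch with hypothetical class names: integer ids pair with Keras's `sparse_categorical_crossentropy` loss, one-hot vectors with `categorical_crossentropy`, and `len(classes)` gives the number of units for a new `Dense(..., activation='softmax')` head.

```python
import numpy as np


def encode_labels(labels):
    """Map arbitrary class names to sorted integer ids and one-hot vectors."""
    classes = sorted(set(labels))
    index = {c: i for i, c in enumerate(classes)}
    ids = np.array([index[c] for c in labels])
    one_hot = np.eye(len(classes), dtype=np.float32)[ids]
    return classes, ids, one_hot


classes, ids, one_hot = encode_labels(["benign", "malignant", "benign", "normal"])
print(classes)        # ['benign', 'malignant', 'normal']
print(ids.tolist())   # [0, 1, 0, 2]
print(one_hot.shape)  # (4, 3)
```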

Managing Computational Resources

Transfer learning can significantly reduce the amount of data and training time required, but working with large pre-trained models still demands substantial computational resources. Challenges include:

Memory usage: Large models like BERT or ResNet can consume significant amounts of RAM and GPU memory.

Training time: Fine-tuning deep models, especially on large datasets, can still take hours or days.

Deployment constraints: Running large models in production may require specialized hardware or cloud infrastructure.

To address these challenges:

Use model distillation or pruning techniques to reduce model size and inference time.

Opt for lighter architectures (e.g., MobileNet, DistilBERT) when deploying on edge devices or with limited resources.

Take advantage of cloud-based solutions and GPU acceleration for training and inference.
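Distillation trains a small "student" model to match the softened output distribution of a large "teacher". Below is a minimal NumPy sketch of the widely used loss from Hinton et al. (2015): a KL-divergence term between temperature-softened distributions, scaled by T², mixed with ordinary cross-entropy on the true labels. The temperature `T=4.0` and weight `alpha=0.5` are illustrative defaults rather than recommendations, and the logits are made-up numbers.

```python
import numpy as np


def softmax(logits, T=1.0):
    """Row-wise softmax of logits divided by the temperature T."""
    z = np.asarray(logits, dtype=float) / T
    z = z - z.max(axis=-1, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def distillation_loss(student_logits, teacher_logits, labels, T=4.0, alpha=0.5):
    """alpha * CE(labels, student) + (1 - alpha) * T^2 * KL(teacher_T || student_T)."""
    eps = 1e-12
    p_teacher = softmax(teacher_logits, T)
    p_student_T = softmax(student_logits, T)
    kl = np.sum(p_teacher * (np.log(p_teacher + eps) - np.log(p_student_T + eps)), axis=-1)
    p_student = softmax(student_logits)  # temperature 1 for the hard-label term
    ce = -np.log(p_student[np.arange(len(labels)), labels] + eps)
    return float(np.mean(alpha * ce + (1 - alpha) * T**2 * kl))


teacher = np.array([[4.0, 1.0, -2.0], [0.5, 0.2, 3.0]])  # made-up teacher logits
student = np.array([[2.0, 1.5, -1.0], [0.0, 0.0, 1.0]])  # made-up student logits
labels = np.array([0, 2])
print(distillation_loss(student, teacher, labels))
```

The T² factor keeps the gradients of the soft term on a similar scale as the temperature changes, so `alpha` can be tuned more or less independently of `T`.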

The Future of Transfer Learning

New Techniques and Trends

Transfer learning continues to evolve rapidly, driven by advances in deep learning research and the growing availability of large-scale datasets and pre-trained models. One of the most significant trends is the development of foundation models—very large, general-purpose models trained on massive and diverse datasets. Examples include GPT-4, PaLM, and CLIP. These models can be adapted to a wide range of downstream tasks with minimal additional training, making transfer learning even more powerful and accessible.

Another emerging technique is multi-task learning, where a single model is trained on multiple related tasks simultaneously. This approach enables the model to learn more general representations, which can be transferred more effectively to new tasks. Similarly, meta-learning (or "learning to learn") focuses on training models that can quickly adapt to new tasks with very little data, further enhancing the flexibility of transfer learning.

Self-supervised learning is also gaining traction. In this paradigm, models learn useful representations from unlabeled data by solving pretext tasks (such as predicting missing parts of an image or the next word in a sentence). These representations can then be transferred to supervised tasks, reducing the need for large labeled datasets.
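To make the idea of a pretext task concrete, here is a minimal sketch of how training pairs can be generated for masked-token prediction, the pretext task used by BERT-style models. It is simplified: BERT additionally replaces some of the selected tokens with random or unchanged tokens instead of always using the mask token. `MASK_ID = 0` is a hypothetical token id, and the `-100` label follows the default `ignore_index` of PyTorch's `CrossEntropyLoss`, so unselected positions contribute nothing to the loss.

```python
import numpy as np

MASK_ID = 0     # hypothetical id of the [MASK] token in some vocabulary
IGNORE = -100   # label for positions that should not contribute to the loss


def mask_tokens(token_ids, mask_prob=0.15, rng=None):
    """Build (inputs, labels) for a simplified masked-token prediction pretext task.

    Each position is selected with probability `mask_prob`; selected positions are
    replaced by MASK_ID in the inputs and keep their original id as the label.
    All other positions get the IGNORE label.
    """
    rng = np.random.default_rng() if rng is None else rng
    token_ids = np.asarray(token_ids)
    selected = rng.random(token_ids.shape) < mask_prob
    inputs = np.where(selected, MASK_ID, token_ids)
    labels = np.where(selected, token_ids, IGNORE)
    return inputs, labels


tokens = np.arange(1, 21)  # placeholder token ids for a 20-token "sentence"
inputs, labels = mask_tokens(tokens, rng=np.random.default_rng(0))
print(inputs)
print(labels)
```

No human labels are needed: the targets come from the unlabeled text itself, which is what lets such models learn from very large corpora before being transferred.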

Automated Transfer Learning (AutoML)

Automated machine learning (AutoML) is transforming the way transfer learning is applied. AutoML platforms can automatically select, fine-tune, and deploy pre-trained models for a given task, in some cases matching or outperforming manually designed solutions. This democratizes access to advanced AI, enabling non-experts to leverage transfer learning for their own applications.

AutoML frameworks, such as Google AutoML, H2O.ai, and AutoKeras, streamline the process of model selection, hyperparameter tuning, and pipeline optimization. They can also automate the process of adapting pre-trained models to new domains, making transfer learning more efficient and scalable.

Impact on AI Development

The ongoing evolution of transfer learning is having a profound impact on the field of artificial intelligence. By enabling rapid adaptation to new tasks and domains, transfer learning accelerates innovation and reduces the barriers to entry for organizations of all sizes. It allows for the creation of high-performing AI systems with less data, lower costs, and shorter development cycles.

Transfer learning is also fostering greater collaboration and knowledge sharing within the AI community. Open-source repositories of pre-trained models, such as Hugging Face Model Hub and TensorFlow Hub, make it easy for researchers and practitioners to build on each other’s work, driving progress across the entire field.

Looking ahead, transfer learning is expected to play a central role in the development of more general, adaptable, and human-like AI systems. As new techniques and tools emerge, the potential applications of transfer learning will continue to expand, shaping the future of artificial intelligence.

Summary: Key Takeaways and Best Practices

Key Takeaways

Transfer learning has become a cornerstone of modern artificial intelligence, enabling rapid development and high performance even with limited data. By leveraging knowledge from large, pre-trained models, you can adapt powerful AI solutions to your own tasks with less time, data, and computational resources. The main points to remember include:

Transfer learning reuses knowledge from one domain or task to improve performance on another, related task.

Pre-trained models (such as those for images or text) can be fine-tuned or used as feature extractors for new applications.

Careful model selection is crucial—choose a pre-trained model that closely matches your data and task.

Fine-tuning and feature extraction are the two main strategies for adapting pre-trained models.

Transfer learning is widely applicable across domains, including image processing, NLP, healthcare, and finance.

Challenges exist, such as negative transfer, data adaptation, and resource management, but can be mitigated with best practices.

Best Practices

To maximize the benefits of transfer learning and avoid common pitfalls, consider the following best practices:

Start with a clear understanding of your task and data. Analyze how similar your problem is to the original task the model was trained on.

Preprocess your data to match the input requirements of the pre-trained model (e.g., image size, normalization, text tokenization).

Freeze and unfreeze layers strategically. Begin by training only the final layers, then gradually unfreeze more layers if you have enough data.

Monitor for overfitting. Use validation data, regularization techniques, and early stopping to ensure your model generalizes well.

Evaluate performance with appropriate metrics. Choose metrics that reflect your business or research goals.

Leverage open-source resources. Use model hubs and libraries (like Hugging Face, TensorFlow Hub, or PyTorch Hub) to access a wide range of pre-trained models and tools.

Document your process. Keep track of model versions, data preprocessing steps, and hyperparameters for reproducibility.

Plan for ongoing monitoring and maintenance. Set up pipelines to track model performance and retrain as needed when data or requirements change.

Additional Resources

Links to Articles, Courses, and Libraries

To deepen your understanding of transfer learning and stay up to date with the latest developments, it’s helpful to explore high-quality resources. Here are some recommended links:

Articles and Tutorials:

A Comprehensive Introduction to Transfer Learning (Towards Data Science)

Transfer Learning Guide (Machine Learning Mastery)

Fine-tuning Pre-trained Models in Keras

Transfer Learning with PyTorch

Hugging Face Transformers Documentation

Online Courses:

DeepLearning.AI: Transfer Learning for NLP with TensorFlow

Coursera: Convolutional Neural Networks (part of Deep Learning Specialization)

Udemy: Transfer Learning for Computer Vision

Libraries and Model Hubs:

TensorFlow Hub

PyTorch Hub

Hugging Face Model Hub

Keras Applications

Knowledge Sources

Books:

"Deep Learning" by Ian Goodfellow, Yoshua Bengio, and Aaron Courville (Section 15.2, Transfer Learning and Domain Adaptation)

"Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow" by Aurélien Géron

Research Papers:

"A Survey on Transfer Learning" by Sinno Jialin Pan and Qiang Yang (2010)

"How transferable are features in deep neural networks?" by Jason Yosinski et al. (2014)

Communities and Forums:

Stack Overflow

Reddit r/MachineLearning

Kaggle Discussions

Where to Go Next

If you’re ready to apply transfer learning in your own projects, start by exploring the model hubs and official documentation for your chosen framework. Experiment with fine-tuning and feature extraction on sample datasets, and join online communities to ask questions and share your progress.

Remember, the field of transfer learning is evolving rapidly. Staying engaged with the latest research, tools, and best practices will help you make the most of this powerful technique and keep your skills up to date.
