Introduction to AI Model Optimization

Why is AI Model Optimization Important?

AI model optimization is a crucial step in the machine learning lifecycle, directly impacting the efficiency, accuracy, and scalability of AI solutions. As organizations increasingly rely on AI to automate processes, make predictions, and drive business value, the need to optimize models becomes more pressing. Optimization ensures that models not only perform well on training data but also generalize effectively to new, unseen data. This process helps reduce computational costs, shortens inference times, and enables deployment on a wider range of devices, from cloud servers to edge devices.

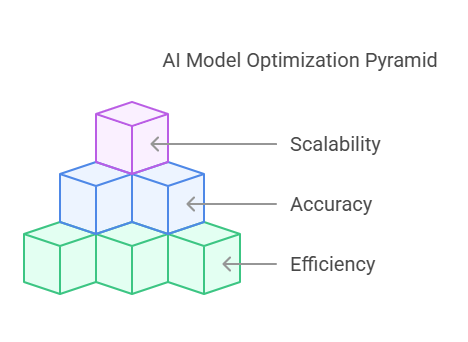

The Goal: Efficiency, Accuracy, and Scalability

The primary goal of AI model optimization is to strike a balance between efficiency, accuracy, and scalability. Efficiency refers to the model’s ability to make predictions quickly and with minimal resource consumption. Accuracy is about how well the model predicts outcomes or classifies data, while scalability ensures that the model can handle increasing amounts of data or be deployed across multiple environments.

For example, a highly accurate model that is too slow or resource-intensive may not be practical for real-time applications. Conversely, a very fast model that sacrifices too much accuracy may not deliver the desired business value. Therefore, optimization involves a series of trade-offs and decisions tailored to the specific use case and deployment scenario.

Practical Example: The Impact of Optimization

Consider a scenario where a company wants to deploy an image recognition model on mobile devices. The original model, trained on a large dataset, achieves high accuracy but is too large and slow for mobile hardware. Through optimization techniques such as model pruning, quantization, and knowledge distillation, the model’s size and computational requirements can be significantly reduced, enabling real-time inference on smartphones without a substantial loss in accuracy.

Python Example: Measuring Model Efficiency

Below is a simple Python example using TensorFlow to compare the inference time of two models—a standard model and an optimized (quantized) version:

python

import tensorflow as tf

import numpy as np

import time

# Load a pre-trained model (for example purposes, MobileNetV2)

model = tf.keras.applications.MobileNetV2(weights='imagenet')

# Convert to a quantized TFLite model for optimization

converter = tf.lite.TFLiteConverter.from_keras_model(model)

converter.optimizations = [tf.lite.Optimize.DEFAULT]

tflite_model = converter.convert()

# Prepare a random input

input_data = np.random.rand(1, 224, 224, 3).astype(np.float32)

# Measure inference time for the original model

start = time.time()

_ = model.predict(input_data)

end = time.time()

print(f"Original model inference time: {end - start:.4f} seconds")

# Measure inference time for the TFLite model

interpreter = tf.lite.Interpreter(model_content=tflite_model)

interpreter.allocate_tensors()

input_index = interpreter.get_input_details()[0]["index"]

output_index = interpreter.get_output_details()[0]["index"]

start = time.time()

interpreter.set_tensor(input_index, input_data)

interpreter.invoke()

_ = interpreter.get_tensor(output_index)

end = time.time()

print(f"Optimized (TFLite) model inference time: {end - start:.4f} seconds")

# Created/Modified files during execution:

print("No files created; models are kept in memory for this example.")This code demonstrates how optimization can lead to faster inference, which is essential for deploying AI models in production environments with limited resources.

Understanding the AI/ML Workflow

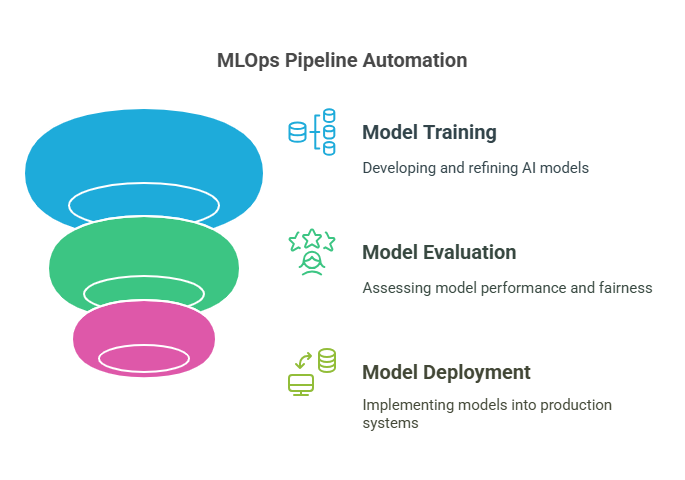

A well-structured AI and machine learning (ML) workflow is the foundation for building, optimizing, and deploying effective models. Each stage in this workflow plays a critical role in ensuring that AI solutions are robust, scalable, and aligned with business objectives. Below, we break down the key phases of the AI/ML workflow, highlighting their importance and best practices.

Data Collection and Pre-processing

The journey of any AI project begins with data. High-quality, relevant data is essential for training models that generalize well to new situations. Data collection involves gathering information from various sources, such as databases, APIs, sensors, or user interactions. Once collected, data often requires significant pre-processing to ensure it is clean, consistent, and suitable for modeling.

Pre-processing steps typically include handling missing values, removing duplicates, normalizing or standardizing features, encoding categorical variables, and detecting outliers. Tools like IBM DataStage and Data Refinery, as described in the MLOps guides, help automate and streamline these tasks, enabling data engineers to design complex ETL (Extract, Transform, Load) pipelines and transform raw data into structured, analysis-ready datasets.

Model Training and Selection

With a prepared dataset, the next step is to train machine learning models. This phase involves selecting appropriate algorithms, splitting data into training and validation sets, and fitting models to the data. Model selection is a critical process, as different algorithms may perform better depending on the problem and data characteristics.

Experimentation is key—data scientists often try multiple models and tune their hyperparameters to find the best performer. Automated tools like AutoML platforms (e.g., IBM AutoAI) can accelerate this process by automatically testing various algorithms and configurations, ranking candidate models, and suggesting the most promising ones for deployment.

Evaluation and Validation

Before deploying a model, it must be rigorously evaluated to ensure it meets performance requirements and generalizes well to unseen data. Evaluation involves using metrics appropriate to the task, such as accuracy, precision, recall, F1-score for classification, or mean squared error for regression.

Validation techniques like cross-validation help assess model stability and prevent overfitting. In production environments, additional validation steps may include A/B testing, where the new model is compared against existing solutions to confirm its added value. Tools like Watson OpenScale provide monitoring and evaluation capabilities, ensuring models perform as expected and remain fair, robust, and compliant with regulations.

Deployment and Monitoring

Once validated, the model is ready for deployment. This step involves integrating the model into production systems, making it accessible via APIs or embedding it within applications. Deployment must be carefully managed to ensure reliability, scalability, and security.

After deployment, continuous monitoring is essential. Models can degrade over time due to changes in data distributions (data drift) or evolving business requirements. Monitoring tools track model performance, detect anomalies, and trigger retraining or updates as needed. MLOps platforms like Watson Machine Learning and Run:ai offer features for automated deployment, resource management, and real-time monitoring, supporting the full lifecycle of AI models.

Example: End-to-End Workflow in Python

Below is a simplified Python example that demonstrates a basic AI/ML workflow using scikit-learn. This script covers data loading, pre-processing, model training, evaluation, and deployment as a REST API using Flask.

python

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestClassifier

from sklearn.metrics import accuracy_score

from sklearn.preprocessing import StandardScaler

from flask import Flask, request, jsonify

import joblib

# Step 1: Data Collection and Pre-processing

data = pd.read_csv('data.csv')

X = data.drop('target', axis=1)

y = data['target']

scaler = StandardScaler()

X_scaled = scaler.fit_transform(X)

# Step 2: Model Training and Selection

X_train, X_val, y_train, y_val = train_test_split(X_scaled, y, test_size=0.2, random_state=42)

model = RandomForestClassifier(n_estimators=100, random_state=42)

model.fit(X_train, y_train)

# Step 3: Evaluation and Validation

y_pred = model.predict(X_val)

print(f"Validation Accuracy: {accuracy_score(y_val, y_pred):.2f}")

# Save the model and scaler for deployment

joblib.dump(model, 'rf_model.pkl')

joblib.dump(scaler, 'scaler.pkl')

# Step 4: Deployment (Simple REST API)

app = Flask(__name__)

@app.route('/predict', methods=['POST'])

def predict():

data = request.get_json(force=True)

features = pd.DataFrame([data])

scaler = joblib.load('scaler.pkl')

model = joblib.load('rf_model.pkl')

features_scaled = scaler.transform(features)

prediction = model.predict(features_scaled)

return jsonify({'prediction': int(prediction[0])})

if __name__ == '__main__':

app.run(debug=True)

# Created/Modified files during execution:

print("rf_model.pkl")

print("scaler.pkl")This example illustrates the core workflow: data is pre-processed, a model is trained and evaluated, and the trained model is deployed as a web service for real-time predictions.

Key Optimization Strategies for AI Models

Optimizing AI models is a multifaceted process that directly impacts their performance, efficiency, and scalability. In this section, we explore the most effective strategies for AI model optimization, drawing on best practices and tools highlighted in leading MLOps resources. These strategies include hyperparameter optimization, feature selection, model selection, data preprocessing, transfer learning, and neural architecture search.

Hyperparameter Optimization

Hyperparameters are the settings that govern the training process of machine learning models, such as learning rate, batch size, number of layers, and activation functions. Choosing the right hyperparameters can significantly improve model accuracy and efficiency. Manual tuning is time-consuming and often suboptimal, so automated methods are widely used.

Popular approaches include grid search, which systematically tests combinations of parameters; random search, which samples parameter combinations randomly; and Bayesian optimization, which uses probabilistic models to find the best parameters more efficiently. Tools like SigOpt, Katib, TensorFlow Vizier, and Spearmint automate these processes, enabling data scientists to quickly identify optimal configurations.

Here’s a simple Python example using scikit-learn’s GridSearchCV for hyperparameter optimization:

python

from sklearn.ensemble import RandomForestClassifier

from sklearn.model_selection import GridSearchCV

param_grid = {

'n_estimators': [50, 100, 200],

'max_depth': [None, 10, 20],

'min_samples_split': [2, 5, 10]

}

clf = RandomForestClassifier(random_state=42)

grid_search = GridSearchCV(clf, param_grid, cv=3, scoring='accuracy')

grid_search.fit(X_train, y_train)

print("Best parameters:", grid_search.best_params_)

print("Best cross-validation score:", grid_search.best_score_)This approach helps ensure that the model is not only accurate but also efficient and robust.

Feature Selection

Feature selection involves identifying the most relevant variables (features) for your model, which can reduce overfitting, improve interpretability, and speed up training. There are three main types of feature selection methods: wrapper methods (which use predictive models to score feature subsets), filter methods (which use statistical tests), and embedded methods (which perform feature selection during model training).

Automated feature selection tools can quickly evaluate large numbers of features, selecting those that contribute most to model performance. This process is essential for high-dimensional datasets, where irrelevant or redundant features can degrade model quality.

Model Selection

Model selection is the process of choosing the best algorithm for your specific problem and dataset. This involves comparing different models based on performance metrics such as accuracy, precision, recall, or F1-score. Automated model selection tools, including AutoML platforms, can test multiple algorithms and configurations, ranking them according to their results.

Advanced criteria like Akaike Information Criterion (AIC) and Bayesian Information Criterion (BIC) can also be used to balance model complexity and performance, helping to avoid overfitting.

Data Preprocessing Techniques

Data preprocessing is a foundational step in any machine learning workflow. It includes cleaning data (handling missing values, removing duplicates), encoding categorical variables, normalizing or standardizing features, and compressing data when necessary. Automated tools like IBM DataStage and Data Refinery streamline these tasks, ensuring that data is consistent and ready for modeling.

Effective preprocessing not only improves model accuracy but also reduces the risk of errors and biases in downstream tasks.

Transfer Learning and Pre-trained Models

Transfer learning leverages models that have already been trained on similar tasks, allowing you to adapt them to your specific problem with less data and computational effort. This approach is especially valuable in domains where labeled data is scarce or expensive to obtain.

By fine-tuning pre-trained models, organizations can achieve high performance with fewer resources, accelerating the development and deployment of AI solutions.

Network Architecture Search (NAS)

Neural Architecture Search (NAS) is an advanced optimization technique that automates the design of neural network architectures. Using methods like reinforcement learning, evolutionary algorithms, or gradient-based search, NAS can discover novel architectures that outperform manually designed models.

Open-source tools like AutoKeras make NAS accessible, enabling data scientists to experiment with different architectures and find the best fit for their data and objectives.

Tools for AI Model Optimization

Optimizing AI models is not just about choosing the right strategies—it’s also about leveraging the best tools available to streamline, automate, and enhance every stage of the machine learning lifecycle. In this section, we’ll explore the most important categories of tools for AI model optimization, including AutoML platforms, hyperparameter optimization frameworks, data preprocessing solutions, and model monitoring systems. These tools, many of which are highlighted in leading MLOps guides and industry best practices, help teams accelerate development, improve model quality, and ensure robust deployment.

AutoML Platforms and Services

AutoML (Automated Machine Learning) platforms are designed to automate many of the repetitive and complex tasks involved in building machine learning models. These platforms can handle data preprocessing, feature engineering, model selection, and hyperparameter tuning with minimal human intervention. For example, IBM’s AutoAI, available in Watson Machine Learning, automatically analyzes structured data, selects the best estimators, and generates candidate model pipelines for review and deployment. Other notable AutoML solutions include Google AutoML, H2O.ai, and DataRobot. By reducing the need for manual experimentation, AutoML platforms enable data scientists and even non-experts to rapidly prototype and deploy high-quality models.

Hyperparameter Optimization Tools

Hyperparameter optimization is a critical step in maximizing model performance. Instead of manually tuning parameters like learning rate, batch size, or the number of layers, specialized tools automate this process using advanced search algorithms. Popular frameworks include SigOpt, Katib (for Kubernetes environments), Eclipse Arbiter, TensorFlow Vizier, and Spearmint. These tools support various optimization strategies, such as grid search, random search, and Bayesian optimization, allowing teams to efficiently explore large parameter spaces and identify the best configurations for their models.

Here’s a Python example using Optuna, a modern hyperparameter optimization library:

python

import optuna

from sklearn.ensemble import RandomForestClassifier

from sklearn.model_selection import cross_val_score

def objective(trial):

n_estimators = trial.suggest_int('n_estimators', 50, 200)

max_depth = trial.suggest_int('max_depth', 5, 30)

clf = RandomForestClassifier(n_estimators=n_estimators, max_depth=max_depth)

return cross_val_score(clf, X_train, y_train, cv=3).mean()

study = optuna.create_study(direction='maximize')

study.optimize(objective, n_trials=20)

print("Best hyperparameters:", study.best_params)This code demonstrates how hyperparameter optimization can be automated, saving time and improving results.

Data Preprocessing Tools

Data preprocessing is foundational for any successful AI project. Tools like IBM DataStage and Data Refinery help data engineers design and run complex ETL (Extract, Transform, Load) pipelines, connecting to various data sources and transforming raw data into clean, structured datasets. Data Refinery, for instance, supports data cleansing, integration, enrichment, and validation, ensuring high data quality before model training. These tools often provide visual interfaces and automation features, making it easier to handle large-scale data preparation tasks.

Model Monitoring Tools

Once a model is deployed, continuous monitoring is essential to ensure it remains accurate, fair, and reliable. IBM Watson OpenScale is a leading solution for model monitoring, offering dashboards to track model performance, fairness, drift, and explainability. OpenScale can automatically alert teams when a model’s predictions deviate from expected thresholds, helping organizations maintain compliance and quickly address issues like bias or data drift. Other monitoring tools include Evidently AI, Fiddler, and Arize AI, each providing unique features for tracking and explaining model behavior in production.

Integration and Automation Platforms

Modern MLOps platforms, such as Watson Machine Learning and Run:ai, provide end-to-end solutions for managing the entire AI lifecycle. These platforms support automated resource allocation, distributed training, experiment tracking, and seamless integration with other tools. For example, Run:ai enables advanced queueing, fair scheduling, and fractional GPU usage, allowing teams to maximize hardware utilization and accelerate model development. Watson Pipelines, part of IBM’s Cloud Pak for Data, allows users to design, automate, and monitor complex machine learning workflows, from data collection to deployment.

Best Practices for AI Model Optimization

Optimizing AI models is not just about algorithms and tools—it’s about following a set of best practices that ensure your models are effective, reliable, and scalable in real-world environments. Drawing from leading MLOps guides and practical industry experience, here are the most important best practices for AI model optimization, along with actionable advice for each.

Define Clear Project Goals

Before starting any AI project, it’s essential to clearly define what you want to achieve. This means understanding the current process you aim to improve, identifying the specific outcomes you want to predict, and setting measurable success criteria. For example, if you’re building a model to automate customer support, define what “success” looks like—such as reducing response time by 30% or increasing customer satisfaction scores. Clear goals help guide data collection, model selection, and evaluation, ensuring your efforts are aligned with business value.

Automate Repetitive Tasks

Automation is a cornerstone of efficient AI workflows. By automating repetitive tasks—such as data preprocessing, feature selection, hyperparameter tuning, and model deployment—you free up valuable time for experimentation and innovation. Tools like AutoML platforms, Watson Pipelines, and Run:ai can automate many steps in the machine learning lifecycle, from data cleaning to model training and deployment. Automation not only speeds up development but also reduces the risk of human error and increases reproducibility.

Monitor Model Performance

Once a model is deployed, continuous monitoring is critical. Models can degrade over time due to data drift, changing user behavior, or evolving business requirements. Use monitoring tools like IBM Watson OpenScale to track key metrics such as accuracy, fairness, and drift. Set up alerts for when performance drops below acceptable thresholds, and schedule regular evaluations to ensure your model remains reliable. Monitoring also supports compliance with regulatory requirements and helps build trust in AI systems.

Ensure Reproducibility

Reproducibility is vital for both collaboration and compliance. This means being able to recreate your results at any time, even months after the original model was built. Use version control systems not only for code but also for data, model parameters, and environment configurations. Tools like DVC (Data Version Control) and MLflow can help track experiments, datasets, and model versions. Document your workflows thoroughly, including data sources, preprocessing steps, and model configurations, so others can understand and reproduce your work.

Implement Version Control

Effective version control goes beyond tracking code changes. In machine learning, you need to version datasets, model artifacts, and even the environment in which models are trained and deployed. This ensures that you can roll back to previous versions if issues arise and that you can trace the lineage of any model in production. Integrate tools like Git for code, DVC for data, and MLflow or similar platforms for model artifacts.

Address Data Drift

Data drift occurs when the statistical properties of your input data change over time, potentially degrading model performance. Regularly monitor for drift using tools like Watson OpenScale or custom scripts. When drift is detected, retrain your models with updated data or adjust preprocessing steps as needed. Proactive drift management helps maintain model accuracy and reliability in dynamic environments.

Foster Collaboration

AI projects are inherently multidisciplinary, involving data scientists, engineers, domain experts, and business stakeholders. Foster collaboration by using shared platforms, clear documentation, and transparent workflows. Tools like Watson Studio, Dataiku, and collaborative notebooks make it easier for teams to work together, share insights, and iterate on models. Regular communication and feedback loops ensure that models are aligned with business needs and user expectations.

Consider Ethical Implications

Responsible AI is about more than just technical performance. Consider the ethical implications of your models, including fairness, transparency, and accountability. Use tools like Watson OpenScale to monitor for bias and ensure your models are fair across different groups. Document decision-making processes and provide explanations for model predictions where possible. Adhering to ethical standards not only reduces regulatory risk but also builds trust with users and stakeholders.

Example: Automating Model Monitoring with Python

Here’s a simple Python example that demonstrates how to set up automated model monitoring using a custom script. This script checks model accuracy on new data and sends an alert if performance drops below a threshold.

python

import joblib

import pandas as pd

from sklearn.metrics import accuracy_score

import smtplib

# Load the deployed model and new data

model = joblib.load('rf_model.pkl')

new_data = pd.read_csv('new_data.csv')

X_new = new_data.drop('target', axis=1)

y_new = new_data['target']

# Predict and evaluate

y_pred = model.predict(X_new)

accuracy = accuracy_score(y_new, y_pred)

print(f"Current model accuracy: {accuracy:.2f}")

# Alert if accuracy drops below threshold

threshold = 0.85

if accuracy < threshold:

with smtplib.SMTP('smtp.example.com') as server:

server.login('user@example.com', 'password')

message = f"Subject: Model Alert\n\nModel accuracy dropped to {accuracy:.2f}."

server.sendmail('user@example.com', 'admin@example.com', message)

# Created/Modified files during execution:

print("No files created; monitoring is performed in-memory.")This approach can be extended with more advanced monitoring tools and integrated into your MLOps pipeline for continuous oversight.

Case Studies and Examples of AI Model Optimization

Real-world case studies provide invaluable insights into how AI model optimization strategies are applied across different domains. By examining practical examples, we can better understand the challenges, solutions, and measurable benefits of optimization in action. Below, we explore three key areas: image recognition, natural language processing, and predictive analytics, highlighting the optimization techniques and tools that drive success.

Optimizing Image Recognition Models

Image recognition is a classic application of deep learning, but deploying high-performing models in production—especially on resource-constrained devices—requires careful optimization. For example, a retail company might use a convolutional neural network (CNN) to automate product identification on store shelves. The initial model, trained for maximum accuracy, may be too large and slow for real-time use on mobile devices.

To address this, the team applies model compression techniques such as pruning (removing unnecessary weights), quantization (reducing the precision of weights), and knowledge distillation (training a smaller model to mimic a larger one). These methods significantly reduce model size and inference time, enabling deployment on smartphones without a major loss in accuracy. Tools like TensorFlow Lite and ONNX Runtime are commonly used for this purpose.

Here’s a Python example of model quantization using TensorFlow Lite:

python

import tensorflow as tf

# Load a pre-trained Keras model

model = tf.keras.applications.MobileNetV2(weights='imagenet')

# Convert to a quantized TFLite model

converter = tf.lite.TFLiteConverter.from_keras_model(model)

converter.optimizations = [tf.lite.Optimize.DEFAULT]

tflite_quant_model = converter.convert()

# Save the quantized model

with open('mobilenetv2_quant.tflite', 'wb') as f:

f.write(tflite_quant_model)

# Created/Modified files during execution:

print("mobilenetv2_quant.tflite")This approach enables real-time image recognition in mobile apps, improving user experience and operational efficiency.

Improving Natural Language Processing Models

Natural language processing (NLP) models, such as those used for sentiment analysis or chatbots, often require optimization to handle large-scale, real-time interactions. For instance, a customer service chatbot built on a transformer-based model like BERT may deliver excellent accuracy but struggle with latency and high memory usage in production.

To optimize, the team might use transfer learning to fine-tune a smaller, pre-trained model on their specific dataset, or apply model distillation to create a lightweight version. Additionally, batching requests and leveraging efficient serving frameworks like ONNX Runtime or TensorFlow Serving can further reduce response times.

A practical example is using Hugging Face’s transformers library to distill a BERT model:

python

from transformers import DistilBertForSequenceClassification, DistilBertTokenizer

# Load a distilled BERT model and tokenizer

model = DistilBertForSequenceClassification.from_pretrained('distilbert-base-uncased')

tokenizer = DistilBertTokenizer.from_pretrained('distilbert-base-uncased')

# Example inference

inputs = tokenizer("Optimize NLP models for production.", return_tensors="pt")

outputs = model(**inputs)

print(outputs.logits)By deploying a distilled model, organizations achieve faster inference and lower resource consumption, making NLP solutions more scalable.

Enhancing Predictive Analytics Models

Predictive analytics is widely used in industries like finance, healthcare, and logistics to forecast outcomes and inform decision-making. For example, a bank may use a machine learning model to predict credit risk. Initially, the model might be highly complex, using hundreds of features and advanced algorithms to maximize predictive power.

However, complexity can hinder interpretability and slow down deployment. The optimization process may involve feature selection to retain only the most relevant variables, hyperparameter tuning to balance accuracy and efficiency, and regularization techniques to prevent overfitting. Automated tools like AutoML platforms (e.g., IBM AutoAI, Google AutoML) can streamline these steps, allowing teams to quickly iterate and deploy robust models.

A typical workflow might look like this in Python using scikit-learn:

python

from sklearn.ensemble import RandomForestClassifier

from sklearn.feature_selection import SelectFromModel

from sklearn.model_selection import train_test_split

from sklearn.metrics import roc_auc_score

# Assume X, y are your features and labels

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Feature selection with Random Forest

selector = RandomForestClassifier(n_estimators=100, random_state=42)

selector.fit(X_train, y_train)

sfm = SelectFromModel(selector, prefit=True)

X_train_selected = sfm.transform(X_train)

X_test_selected = sfm.transform(X_test)

# Train optimized model

model = RandomForestClassifier(n_estimators=100, random_state=42)

model.fit(X_train_selected, y_train)

y_pred = model.predict_proba(X_test_selected)[:, 1]

print("AUC:", roc_auc_score(y_test, y_pred))This process results in a more efficient, interpretable, and production-ready predictive model.

The Future of AI Model Optimization

The field of AI model optimization is evolving rapidly, driven by advances in algorithms, hardware, and the growing need for responsible, scalable, and efficient AI systems. In this section, we explore the most important trends shaping the future of AI model optimization, including emerging techniques, the expanding role of AutoML, and the increasing importance of responsible AI.

Emerging Techniques

AI model optimization is benefiting from a wave of innovative techniques that are making models faster, more accurate, and more adaptable. Among the most promising are neural architecture search (NAS), which automates the design of deep learning architectures, and advanced transfer learning methods that allow models to leverage knowledge from related tasks with minimal data. Techniques like few-shot and zero-shot learning are enabling models to generalize from very limited examples, which is especially valuable in domains where labeled data is scarce.

Another key trend is the use of synthetic data and data augmentation. By generating realistic data samples, organizations can train more robust models without the need for massive, manually labeled datasets. Generative models, such as GANs (Generative Adversarial Networks), are increasingly used to create synthetic images, text, and even tabular data, supporting both model training and testing.

On the hardware side, optimization is being accelerated by specialized AI chips and distributed computing frameworks. Technologies like fractional GPU allocation, as offered by platforms like Run:ai, allow for more efficient use of computational resources, enabling faster experimentation and larger-scale model training.

The Role of AutoML

AutoML (Automated Machine Learning) is set to play an even greater role in the future of AI model optimization. As highlighted in the latest MLOps guides, AutoML platforms are evolving to handle not just model selection and hyperparameter tuning, but also feature engineering, data preprocessing, and even aspects of model governance. This democratizes AI development, making it accessible to a broader range of users, including those without deep expertise in machine learning.

AutoML is also being integrated into end-to-end MLOps pipelines, enabling seamless automation from data ingestion to model deployment and monitoring. With the rise of no-code and low-code solutions, organizations can accelerate time-to-market and focus on solving business problems rather than managing technical complexity.

The Importance of Responsible AI

As AI systems become more pervasive, the need for responsible AI practices is paramount. Future optimization strategies will increasingly incorporate fairness, transparency, and explainability as core objectives, not just afterthoughts. Tools like IBM Watson OpenScale and AI Factsheets are already enabling organizations to monitor models for bias, drift, and compliance, and these capabilities will only become more sophisticated.

Regulatory requirements, such as the EU AI Act, are pushing organizations to adopt robust governance frameworks that ensure AI models are ethical, auditable, and aligned with societal values. This means that optimization will not only focus on technical performance but also on building trust and accountability into AI systems.

Practical Example: Integrating Responsible AI in Model Optimization

Here’s a Python example that demonstrates how to use the fairlearn library to evaluate and mitigate bias in a classification model:

python

from fairlearn.metrics import MetricFrame, selection_rate, accuracy_score

from sklearn.metrics import confusion_matrix

import pandas as pd

# Example predictions and sensitive feature

y_true = [0, 1, 0, 1, 0, 1, 1, 0]

y_pred = [0, 1, 0, 0, 1, 1, 1, 0]

sensitive_feature = ['A', 'A', 'B', 'B', 'A', 'B', 'A', 'B']

# Create a MetricFrame to evaluate fairness

metrics = {

'accuracy': accuracy_score,

'selection_rate': selection_rate

}

frame = MetricFrame(metrics=metrics, y_true=y_true, y_pred=y_pred, sensitive_features=sensitive_feature)

print("Overall accuracy:", frame.overall['accuracy'])

print("Accuracy by group:", frame.by_group['accuracy'])

print("Selection rate by group:", frame.by_group['selection_rate'])This approach helps teams identify and address disparities in model performance across different groups, supporting the development of fair and responsible AI solutions.

MLOps Examples and Templates: Automating and Standardizing Processes

Implementing MLOps in an organization is not just about theory, but above all about practice based on proven templates and examples that allow you to quickly build repeatable, automated processes for the entire AI model lifecycle. In this section, we present how to use ready-made MLOps templates, end-to-end examples, and automation tools to accelerate deployments and increase the efficiency of data science teams.

MLOps Templates in Practice

Leading platforms such as IBM Cloud Pak for Data offer ready-made templates that allow you to build a complete MLOps pipeline—from data preparation, through model training, to deployment and monitoring. An example is the CP4D MLOps Accelerator, which guides you step by step through environment configuration, tool integration, and automation of key stages of the model lifecycle. This way, you can quickly launch a repeatable workflow that can be easily adapted to your needs, for example by integrating your own PyTorch or TensorFlow models.

Templates typically include:

documentation of the environment and configuration,

sample notebooks for data validation, model training, and deployment,

a pipeline that connects individual steps into one automated process.

Example: End-to-End Pipeline in Python

Below is a simplified example of an MLOps pipeline in Python that automates data validation, model training, and deployment. In practice, such a pipeline can be extended with additional steps, such as automatic monitoring or retraining.

python

import pandas as pd

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestClassifier

import joblib

# 1. Data validation and preparation

data = pd.read_csv('data.csv')

X = data.drop('target', axis=1)

y = data['target']

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# 2. Model training

model = RandomForestClassifier(n_estimators=100, random_state=42)

model.fit(X_train, y_train)

# 3. Evaluation and saving the model

accuracy = model.score(X_test, y_test)

print(f"Test accuracy: {accuracy:.2f}")

joblib.dump(model, 'rf_model.pkl')

# 4. Deployment (for example: saving the model to a file, integration with an API)

# In practice, deployment may include registering the model in an MLOps system, e.g., Watson Machine Learning

# Created/Modified files during execution:

print("rf_model.pkl")Automation and Pipeline Scheduling

With tools like Watson Pipelines, you can build a graphical or code-based pipeline that automates the execution of individual steps (e.g., validation, training, deployment, monitoring) in a specified order and with selected parameters. Pipelines can be run manually, on a schedule (e.g., nightly), or triggered by specific events (e.g., arrival of new data).

Sample Watson Pipelines features:

visual editor for building and configuring pipelines,

ability to run custom functions and scripts,

scheduling and conditional execution of tasks,

monitoring and notifications about pipeline status.

End-to-End Examples: From Data to Production

In IBM documentation and other platforms, you can find ready-made end-to-end examples, such as a workflow for flood forecasting with automatic data and model versioning and the ability to quickly roll back. Such examples show how to combine data validation, training, deployment, monitoring, and automatic retraining into one coherent process.

Quantum Programming with AI Agents: New Horizons in Computing