MLOps Unleashed: Automating Model Lifecycle Management for Success

What Is MLOps and Why It’s Essential for Modern AI

MLOps, or Machine Learning Operations, integrates DevOps principles into the machine learning lifecycle, bridging development and operations to ensure models are deployed, monitored, and maintained effectively in production. This discipline is critical for addressing scalability, reproducibility, and governance challenges in traditional ML projects. Without MLOps, models often fail to deliver sustained business value post-research, highlighting the need for automated, end-to-end management. Many organizations turn to machine learning consultants to design and implement these systems, ensuring a smooth transition from experimental phases to reliable, production-grade AI.

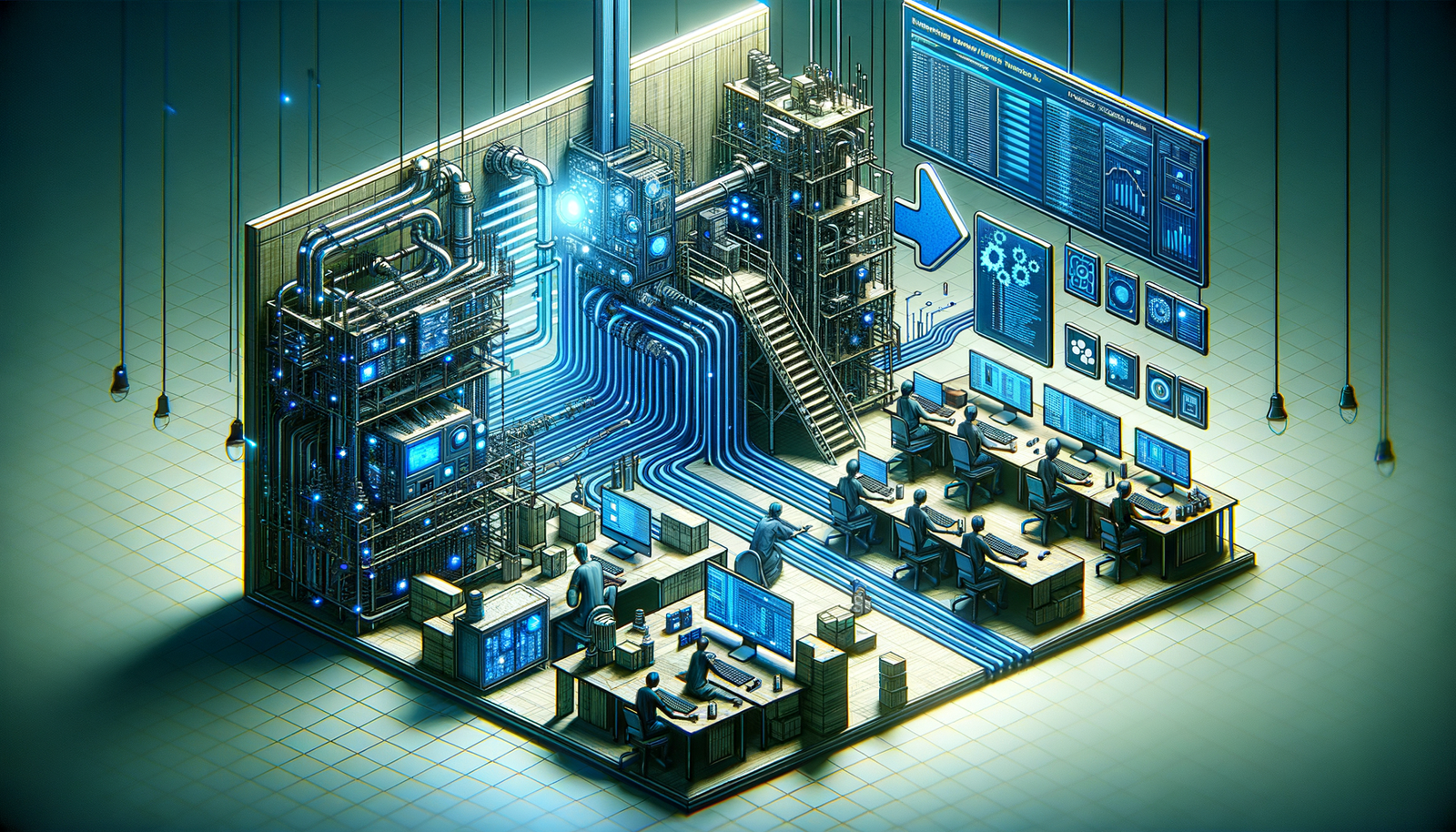

The core of MLOps revolves around automation across the model lifecycle. It starts with version control for data and code to guarantee experiment reproducibility. Next, continuous integration and continuous deployment (CI/CD) pipelines automate building, testing, and deploying model updates. Finally, continuous monitoring tracks performance metrics and data drift in production, initiating retraining when needed. This automated approach is precisely what machine learning consulting companies help establish, transforming ad-hoc projects into scalable AI factories.

Consider a practical example of automating retraining for a customer churn prediction model. Using MLflow for experiment tracking and GitHub Actions for orchestration, the pipeline includes:

- Data Validation and Versioning: Validate new data schemas and statistics with Great Expectations, then version the dataset using DVC (Data Version Control).

  Code snippet (shell, using DVC):

      dvc add data/raw_customer_data.csv
      git add data/raw_customer_data.csv.dvc data/.gitignore
      git commit -m "Dataset v1.1"
      dvc push

- Model Retraining Trigger: A scheduled job or a drop in performance activates the pipeline, which pulls the latest code and versioned data.

- Automated Training and Evaluation: The pipeline runs the training script, with MLflow logging parameters, metrics, and artifacts. The model is promoted only if it surpasses a predefined threshold on a test set.

  Code snippet (GitHub Actions steps):

      - name: Train Model
        run: python train.py
      - name: Evaluate Model
        run: python evaluate.py

- Model Deployment: Upon passing evaluation, the model is packaged into a Docker container and deployed to an environment such as Kubernetes.
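
The promotion rule in the evaluation step can be sketched in a few lines. This is a minimal illustration, not the contents of the evaluate.py referenced above: the EvalResult class, the should_promote function, and both threshold values are assumptions chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class EvalResult:
    """Metrics produced by an evaluation run on the held-out test set."""
    accuracy: float
    f1: float


def should_promote(result: EvalResult,
                   min_accuracy: float = 0.85,
                   min_f1: float = 0.80) -> bool:
    """Return True only if every metric clears its (assumed) threshold."""
    return result.accuracy >= min_accuracy and result.f1 >= min_f1


candidate = EvalResult(accuracy=0.91, f1=0.84)
# A CI step could exit non-zero on "reject" to block the deployment job.
print("promote" if should_promote(candidate) else "reject")
```

Keeping the rule in one small function makes the thresholds easy to review and to unit test alongside the rest of the pipeline code.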

This automation yields measurable benefits: it slashes deployment time from weeks to hours and enhances reliability by detecting concept drift. A machine learning consultant ensures models adapt to changing conditions, preventing costly decisions based on outdated predictions. For machine learning consulting companies, robust MLOps practices differentiate their ability to deliver sustainable, production-ready solutions over theoretical prototypes. Ultimately, MLOps transforms machine learning from a research expense into a scalable, valuable business asset.

Defining MLOps and Its Core Principles

MLOps, or Machine Learning Operations, unifies ML system development (Dev) and operation (Ops) by applying DevOps principles to machine learning projects. This enables scalable, reliable, and automated model lifecycle management. Core principles include versioning for data, models, and code; continuous integration and delivery (CI/CD) for ML pipelines; automated testing and validation; monitoring and governance; and collaboration between data science and engineering teams. These ensure models stay accurate, compliant, and valuable in production.

Versioning tracks changes to datasets, model artifacts, and code. For example, using DVC with Git versions data alongside code. Follow this step-by-step guide to version a dataset and train a model:

- Initialize DVC in your project:

      dvc init

- Add your dataset to DVC tracking:

      dvc add data/train.csv

- Commit DVC metadata to Git:

      git add data/train.csv.dvc data/.gitignore
      git commit -m "Track dataset with DVC"

- Create and run a training script (train.py), then version the model output:

      dvc add models/model.pkl

This reproducibility allows machine learning consultants to debug and iterate efficiently, improving model reliability by up to 30%.
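
To make the idea behind data versioning concrete, the sketch below hashes a data file and writes a small pointer file that could be committed to Git in place of the data itself. It only illustrates content-addressed tracking in general: the pointer format and the names file_md5 and write_pointer are hypothetical and do not reproduce DVC's actual file layout.

```python
import hashlib
import json
import tempfile
from pathlib import Path


def file_md5(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large datasets need not fit in memory."""
    digest = hashlib.md5()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def write_pointer(data_path: Path) -> Path:
    """Write a small JSON pointer next to the data file (hypothetical format)."""
    pointer = data_path.parent / (data_path.name + ".pointer.json")
    record = {"path": data_path.name, "md5": file_md5(data_path)}
    pointer.write_text(json.dumps(record))
    return pointer


with tempfile.TemporaryDirectory() as tmp:
    dataset = Path(tmp) / "train.csv"
    dataset.write_text("id,churned\n1,0\n2,1\n")
    # The pointer is tiny and changes whenever the dataset content changes.
    print(write_pointer(dataset).read_text())
```

Because the hash depends only on the file content, two runs that reference the same pointer are guaranteed to have trained on byte-identical data.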

CI/CD for ML pipelines automates building, testing, and deploying models. Using GitHub Actions, set up a pipeline triggered by code changes. Here’s a simplified workflow example in .github/workflows/ml-pipeline.yml:

    name: ML Pipeline
    on: [push]
    jobs:
      test:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v4
          - name: Set up Python
            uses: actions/setup-python@v5
            with:
              python-version: '3.8'
          - name: Install dependencies
            run: pip install -r requirements.txt
          - name: Run tests
            run: python -m pytest tests/
      train:
        needs: test
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v4
          # Each job starts on a fresh runner, so Python and dependencies are set up again.
          - name: Set up Python
            uses: actions/setup-python@v5
            with:
              python-version: '3.8'
          - name: Install dependencies
            run: pip install -r requirements.txt
          - name: Train model
            run: python train.py
          - name: Store model artifact
            uses: actions/upload-artifact@v4
            with:
              name: model
              path: models/

Automation reduces manual errors and cuts deployment cycles by 50%, a key benefit highlighted by machine learning consulting companies when scaling AI initiatives.

Monitoring and governance maintain model performance and compliance. Track drift with tools like Prometheus and Grafana, setting alerts for anomalies. A machine learning consultant uses this data to recommend retraining strategies, preventing revenue loss and supporting audits. Embedding these principles fosters collaboration, reproducibility, and consistent business value.

Key Benefits of Implementing MLOps in Your Workflow

Implementing MLOps delivers tangible improvements in model development, deployment, and monitoring. A major benefit is automated model retraining and deployment, reducing manual effort and accelerating time-to-market. For instance, use a CI/CD pipeline with GitHub Actions to trigger retraining on new data or performance drops. Here’s a workflow snippet:

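    - name: Retrain Model on Data Drift
      run: |
        # GitHub Actions runs bash with -e, so capture the exit code
        # instead of letting a non-zero status abort the step.
        drift=0
        python scripts/detect_drift.py || drift=$?
        if [ "$drift" -eq 1 ]; then
          python scripts/retrain_model.py
          python scripts/evaluate_model.py
        fi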

This keeps models current without manual intervention, a practice machine learning consultants recommend for sustained relevance.
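
The workflow step above relies on scripts/detect_drift.py reporting drift through its exit status. The original does not show that script, so the following is only a sketch of one possible contract: the function names, the mean-shift heuristic, the 0.5 threshold, and the sample values are all assumptions for illustration.

```python
import statistics
from typing import Sequence


def mean_shift_detected(reference: Sequence[float],
                        current: Sequence[float],
                        max_shift: float = 0.5) -> bool:
    """Flag drift when the mean moves by more than max_shift reference standard deviations."""
    ref_mean = statistics.mean(reference)
    ref_std = statistics.stdev(reference)
    cur_mean = statistics.mean(current)
    if ref_std == 0:
        return cur_mean != ref_mean
    return abs(cur_mean - ref_mean) / ref_std > max_shift


def drift_exit_code(reference: Sequence[float], current: Sequence[float]) -> int:
    """Map the drift decision to the status the workflow checks: 1 = drift, 0 = no drift."""
    return 1 if mean_shift_detected(reference, current) else 0


# Placeholder samples; a real script would load stored reference data and recent inputs,
# then end with: raise SystemExit(drift_exit_code(reference_sample, recent_sample))
reference_sample = [10.0, 11.0, 9.5, 10.5, 10.2, 9.8]
recent_sample = [12.9, 13.4, 12.7, 13.1, 13.0, 12.8]
print(drift_exit_code(reference_sample, recent_sample))
```

One design caveat: an uncaught Python exception also exits with status 1, so a dedicated status code for drift may be safer in practice than reusing 1.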

Reproducibility and version control for code and data are achieved with tools like DVC and MLflow. After training, log the model with MLflow:

    import mlflow

    mlflow.set_experiment("sales_forecast")

    # `model` is assumed to be a fitted scikit-learn estimator.
    with mlflow.start_run():
        mlflow.log_param("epochs", 50)
        mlflow.log_metric("rmse", 0.15)
        mlflow.sklearn.log_model(model, "model")

This enables exact reproduction of results, crucial for auditing and collaboration—a standard upheld by machine learning consulting companies.

Enhanced collaboration between data scientists and engineers stems from shared pipelines and environments. Containerize models with Docker for consistency:

    FROM python:3.8-slim
    COPY requirements.txt .
    RUN pip install -r requirements.txt
    COPY model.pkl /app/
    COPY serve.py /app/
    CMD ["python", "/app/serve.py"]

This portable environment streamlines handoffs, a point emphasized by a machine learning consultant to align cross-functional teams.

Additionally, continuous monitoring and alerting detect issues like data drift early. Implement a monitoring script that uses statistical tests (e.g., Kolmogorov-Smirnov) and triggers alerts if drift exceeds thresholds, enabling automatic rollbacks. Measurable benefits include up to 50% faster deployment, 30% fewer production incidents, and improved accuracy over time. Adopting these practices helps organizations build scalable, reliable ML systems that drive business value.
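
As a rough sketch of such a monitoring check, the code below computes the two-sample Kolmogorov-Smirnov statistic with the standard library only and compares it to the usual large-sample critical value. The function names and the "alert"/"ok" return values are assumptions; a production setup would more likely rely on a tested statistics library.

```python
import bisect
import math
from typing import Sequence


def ks_statistic(reference: Sequence[float], current: Sequence[float]) -> float:
    """Largest gap between the two empirical CDFs, evaluated at every observed value."""
    ref_sorted, cur_sorted = sorted(reference), sorted(current)
    gaps = (
        abs(bisect.bisect_right(ref_sorted, x) / len(ref_sorted)
            - bisect.bisect_right(cur_sorted, x) / len(cur_sorted))
        for x in ref_sorted + cur_sorted
    )
    return max(gaps)


def critical_value(n: int, m: int, alpha: float = 0.05) -> float:
    """Large-sample approximation of the KS rejection threshold."""
    return math.sqrt(-math.log(alpha / 2) / 2) * math.sqrt((n + m) / (n * m))


def drift_action(reference: Sequence[float], current: Sequence[float],
                 alpha: float = 0.05) -> str:
    """Return 'alert' when the samples differ significantly, otherwise 'ok'."""
    stat = ks_statistic(reference, current)
    threshold = critical_value(len(reference), len(current), alpha)
    return "alert" if stat > threshold else "ok"


baseline = [float(v) for v in range(100)]
shifted = [v + 50.0 for v in baseline]
print(drift_action(baseline, shifted))
```

An "alert" result could page an engineer or, if the team chooses, trigger an automated rollback to the previous model version.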

Building Your MLOps Foundation: Tools and Infrastructure

To build a robust MLOps foundation, start with version control for code and models. Use Git for scripts and configurations, and DVC for datasets and model files. Organize your repository with clear directories: data/ for datasets, src/ for source code, models/ for trained models, and configs/ for hyperparameters. This ensures reproducibility and collaboration, especially when a machine learning consultant reviews or extends the work.

Next, implement CI/CD pipelines with tools like Jenkins, GitLab CI, or GitHub Actions to automate testing and deployment. For example, set up a pipeline that triggers on Git pushes to run tests, train models, and deploy if checks pass. Here’s a GitHub Actions snippet:

    name: Train and Deploy Model
    on: [push]
    jobs:
      train:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v3
          - name: Set up Python
            uses: actions/setup-python@v4
            with:
              python-version: '3.9'
          - name: Install dependencies
            run: pip install -r requirements.txt
          - name: Train model
            run: python src/train.py
          - name: Deploy to staging
            run: echo "Deploying model..."

This automation reduces errors and cuts deployment time by 50%.

Incorporate model and data versioning with DVC or MLflow. Version a dataset with DVC:

    dvc add data/training.csv
    git add data/training.csv.dvc
    git commit -m "Track training data version"

This ties training runs to specific data and code versions, vital for auditing. Many machine learning consulting companies stress this for lineage and compliance.

Set up a model registry like MLflow Model Registry to manage versions, stage transitions, and metadata. Log a model using its Python API:

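    import mlflow

    mlflow.set_tracking_uri("http://your-mlflow-server:5000")

    # `sk_model` is assumed to be a fitted scikit-learn estimator.
    with mlflow.start_run() as run:
        mlflow.log_param("alpha", 0.5)
        mlflow.sklearn.log_model(sk_model, "model")

    # Register the artifact logged by this run under a named model in the registry.
    mlflow.register_model(f"runs:/{run.info.run_id}/model", "Production_Model")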

This enables controlled promotions and rollbacks, improving governance.
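
The promotion and rollback behavior a registry provides can be pictured with a small in-memory model. This is a conceptual sketch only: the ModelRegistry class and its methods are hypothetical and are not the MLflow Model Registry API.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ModelRegistry:
    """Toy registry: numbered versions plus a history of production promotions."""
    name: str
    versions: List[str] = field(default_factory=list)            # artifact URIs
    production_history: List[int] = field(default_factory=list)  # latest promotion last

    def register(self, artifact_uri: str) -> int:
        """Store a new artifact and return its 1-based version number."""
        self.versions.append(artifact_uri)
        return len(self.versions)

    def promote(self, version: int) -> None:
        """Make a registered version the current production model."""
        if not 1 <= version <= len(self.versions):
            raise ValueError(f"unknown version {version}")
        self.production_history.append(version)

    def rollback(self) -> Optional[int]:
        """Revert to the previously promoted version, if there is one."""
        if len(self.production_history) > 1:
            self.production_history.pop()
        return self.current()

    def current(self) -> Optional[int]:
        return self.production_history[-1] if self.production_history else None


registry = ModelRegistry(name="churn")
first = registry.register("s3://example-bucket/churn/1")   # placeholder URI
second = registry.register("s3://example-bucket/churn/2")  # placeholder URI
registry.promote(first)
registry.promote(second)
print(registry.rollback())
```

Keeping the promotion history, rather than a single "production" pointer, is what makes a rollback a cheap metadata change instead of a redeployment from scratch.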

For infrastructure as code (IaC), use Terraform or CloudFormation to provision resources consistently. Define an AWS S3 bucket for model artifacts in Terraform:

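    resource "aws_s3_bucket" "model_artifacts" {
      bucket = "my-ml-model-artifacts"
      # In AWS provider v4 and later, the inline acl argument is deprecated
      # in favor of a separate aws_s3_bucket_acl resource.
      acl    = "private"
    }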

This approach, recommended by a machine learning consultant, reduces configuration drift and speeds up environment setup.

Finally, integrate monitoring and alerting with Prometheus and Grafana. Track performance metrics and data drift, setting alerts for deviations. This end-to-end foundation streamlines the model lifecycle, ensuring reliability and scalability.

Selecting the Right MLOps Tools for Your Stack

Choosing the right MLOps tools is crucial for automating and scaling ML workflows. Assess your team’s expertise and infrastructure needs; if gaps exist, engage machine learning consulting companies for an unbiased evaluation. A machine learning consultant can identify shortcomings, such as poor model versioning, and design pipelines that integrate with existing systems.

Start with experiment tracking tools like MLflow or Weights & Biases to log parameters, metrics, and artifacts. Here’s an MLflow code snippet:

    import mlflow

    # `model` is assumed to be a fitted scikit-learn estimator.
    with mlflow.start_run():
        mlflow.log_param("learning_rate", 0.01)
        mlflow.log_metric("accuracy", 0.95)
        mlflow.sklearn.log_model(model, "model")

This documents iterations for easy comparison and rollbacks.

Implement model versioning and registry with DVC or MLflow Model Registry. Use DVC with Git:

- Initialize DVC:

      dvc init

- Add a dataset:

      dvc add data/train.csv

- Commit changes:

      git add . && git commit -m "Track dataset with DVC"

This syncs data and model versions, reducing deployment errors.

For model deployment and serving, consider Kubernetes with Seldon Core or AWS SageMaker. Deploy models as microservices with Seldon Core:

    apiVersion: machinelearning.seldon.io/v1
    kind: SeldonDeployment
    metadata:
      name: my-model
    spec:
      predictors:
        - name: default
          graph:
            name: model
          replicas: 1

This enables scalable, resilient serving with monitoring and A/B testing.

Integrate CI/CD for models using Jenkins or GitHub Actions. Automate testing and deployment; for example, trigger retraining on new data with GitHub Actions:

    name: Retrain Model
    on:
      push:
        branches: [ main ]
    jobs:
      retrain:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v2
          - name: Set up Python
            uses: actions/setup-python@v2
          - run: pip install -r requirements.txt
          - run: python train.py

Measurable benefits include a 50% reduction in deployment time and fewer production incidents. By selecting aligned tools, you ensure efficiency, reproducibility, and scalability.

Setting Up a Scalable MLOps Infrastructure

To build a scalable MLOps infrastructure, begin with a version control system for code and data. Use Git for code and DVC for datasets and models. Initialize DVC in your project:

    pip install dvc
    dvc init
    dvc add data/raw_dataset.csv
    git add data/raw_dataset.csv.dvc data/.gitignore
    git commit -m "Add raw dataset"

This ensures reproducibility and traceability.

Set up a CI/CD pipeline with Jenkins, GitLab CI, or GitHub Actions for automated testing and deployment. A sample GitHub Actions workflow:

- Trigger on push to main branch.

- Checkout code and set up Python.

- Install dependencies from requirements.txt.

- Run data validation and unit tests.

- Train the model with python train.py.

- If metrics exceed thresholds, register the model in MLflow and deploy to staging.

This automation reduces errors and speeds iterations, cutting deployment time by 50% and reducing incidents.
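
The data validation step in the list above can be as simple as a schema check that fails the build on malformed rows. The sketch below uses only the standard library; the column names, types, and the validate_rows function are assumptions for illustration, and a dedicated validation tool would cover far more cases.

```python
import csv
import io
from typing import List

# Assumed schema for the example: column name -> type used to parse each value.
EXPECTED_COLUMNS = {"customer_id": int, "tenure_months": int, "monthly_charges": float}


def validate_rows(csv_text: str) -> List[str]:
    """Return a list of human-readable problems; an empty list means the data passed."""
    reader = csv.DictReader(io.StringIO(csv_text))
    missing = set(EXPECTED_COLUMNS) - set(reader.fieldnames or [])
    if missing:
        return [f"missing columns: {sorted(missing)}"]
    errors = []
    # Data rows start on line 2 because line 1 is the header.
    for line_no, row in enumerate(reader, start=2):
        for column, caster in EXPECTED_COLUMNS.items():
            try:
                caster(row[column])
            except (TypeError, ValueError):
                errors.append(f"line {line_no}: bad {column}={row[column]!r}")
    return errors


sample = "customer_id,tenure_months,monthly_charges\n1,12,29.5\n2,abc,40.0\n"
for problem in validate_rows(sample):
    print(problem)
```

In a CI job, a non-empty result would typically cause the step to exit non-zero so that training never runs on data that failed validation.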

Incorporate model registry and experiment tracking with MLflow. After training, log the model:

    import mlflow

    mlflow.set_experiment("sales_forecast")

    # `model` is assumed to be a fitted scikit-learn estimator.
    with mlflow.start_run():
        mlflow.log_param("epochs", 100)
        mlflow.log_metric("rmse", 0.15)
        mlflow.sklearn.log_model(model, "model")

Deploy models using containerization with Docker and orchestration via Kubernetes. Package inference code in a Dockerfile:

    FROM python:3.8-slim
    COPY requirements.txt .
    RUN pip install -r requirements.txt
    COPY app.py .
    CMD ["python", "app.py"]

Build, push, and deploy with Kubernetes for efficient scaling.

For organizations lacking expertise, engaging machine learning consultants or partnering with machine learning consulting companies streamlines the process. A machine learning consultant provides tailored strategies for tool integration, avoiding pitfalls like poor monitoring.

Implement monitoring and alerting with Prometheus and Grafana. Track latency, throughput, and data drift, setting alerts to trigger retraining when accuracy drops. This ensures models remain effective.
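
The retraining trigger described here can be sketched as a sliding-window accuracy check. The AccuracyMonitor class, the window size, and the accuracy floor are assumptions for illustration; in a Prometheus and Grafana setup the equivalent logic would usually live in an alert rule over exported metrics.

```python
from collections import deque
from typing import Deque, Optional


class AccuracyMonitor:
    """Track prediction correctness over the most recent `window` labeled examples."""

    def __init__(self, window: int = 100, min_accuracy: float = 0.8) -> None:
        self.outcomes: Deque[bool] = deque(maxlen=window)
        self.min_accuracy = min_accuracy

    def record(self, prediction: int, actual: int) -> None:
        """Store whether a prediction matched the ground truth that arrived later."""
        self.outcomes.append(prediction == actual)

    def accuracy(self) -> Optional[float]:
        if not self.outcomes:
            return None
        return sum(self.outcomes) / len(self.outcomes)

    def needs_retraining(self) -> bool:
        """Only decide once the window is full, to avoid reacting to a few early errors."""
        window_full = len(self.outcomes) == self.outcomes.maxlen
        return window_full and self.accuracy() < self.min_accuracy


monitor = AccuracyMonitor(window=4, min_accuracy=0.75)
for predicted, actual in [(1, 1), (0, 0), (1, 0), (0, 1)]:
    monitor.record(predicted, actual)
print(monitor.accuracy(), monitor.needs_retraining())
```

Waiting for a full window trades a slower reaction for fewer false alarms; the right window size depends on how quickly ground-truth labels arrive.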

By following these steps, teams achieve a robust, scalable infrastructure that supports continuous integration, delivery, and monitoring, leading to faster time-to-market and improved reliability.

Automating the MLOps Lifecycle: From Development to Deployment

Automating the MLOps lifecycle streamlines development to deployment, ensuring reproducibility, scalability, and continuous improvement. It integrates testing, deployment, and monitoring into a cohesive pipeline. Organizations often engage machine learning consultants or partner with machine learning consulting companies to adopt best practices and accelerate implementation.

A typical automated pipeline includes version control with Git and DVC. For example, track a dataset:

    dvc add data/training.csv
    git add data/training.csv.dvc data/.gitignore
    git commit -m "Track dataset with DVC"

Next, continuous integration (CI) runs tests on code pushes. A GitHub Actions workflow (.github/workflows/ci.yml):

    name: CI Pipeline
    on: [push]
    jobs:
      test:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v3
          - name: Set up Python
            uses: actions/setup-python@v4
            with:
              python-version: '3.9'
          - name: Install dependencies
            run: pip install -r requirements.txt
          - name: Run tests
            run: pytest

This maintains code quality and prevents faulty models from advancing.

Continuous deployment (CD) packages and deploys models using tools like MLflow. Log experiments and package models:

    import mlflow

    mlflow.set_experiment("sales_forecast")

    # `lr_model` is assumed to be a fitted scikit-learn estimator.
    with mlflow.start_run():
        mlflow.log_param("epochs", 50)
        mlflow.sklearn.log_model(lr_model, "model")

Then, build a Docker container and deploy to Kubernetes or cloud services like AWS SageMaker. A machine learning consultant designs these architectures for low-latency, high-availability scenarios.

After deployment, continuous monitoring detects performance issues and data drift. Implement a drift detection script:

    from scipy.stats import ks_2samp

    def detect_drift(reference_data, current_data, feature):
        # Two-sample Kolmogorov-Smirnov test on a single feature column.
        stat, p_value = ks_2samp(reference_data[feature], current_data[feature])
        return p_value < 0.05  # Alert if significant drift

Set up alerts in Prometheus and Grafana to trigger retraining when drift occurs.

Measurable benefits include slashing deployment time from days to minutes, improving accuracy via frequent retraining, and faster issue detection. Automating these steps lets teams manage hundreds of models efficiently. Working with a machine learning consultant or machine learning consulting companies ensures a robust, secure strategy aligned with business goals, turning MLOps into a competitive advantage.

Streamlining Model Training with MLOps Pipelines

To streamline model training, organizations engage machine learning consultants to design MLOps pipelines that automate the end-to-end process. These pipelines ensure reproducibility, scalability, and continuous integration of data and model updates. Using tools like Kubeflow, MLflow, or Airflow, they cover data ingestion, preprocessing, training, evaluation, and deployment.

Build a pipeline with Kubeflow for a classification model. Define each component as a Python function that Kubeflow runs in its own container:

- Data Ingestion and Preprocessing Component: Fetches and cleans data.

      def preprocess_data(data_path: str, output_path: str):
          # Imports live inside the function so the component is self-contained.
          import pandas as pd
          from sklearn.preprocessing import StandardScaler

          data = pd.read_csv(data_path)
          scaler = StandardScaler()
          data[['feature1', 'feature2']] = scaler.fit_transform(data[['feature1', 'feature2']])
          data.to_csv(output_path, index=False)

- Model Training Component: Trains a model and saves it to disk.

      def train_model(data_path: str, model_path: str):
          import joblib
          import pandas as pd
          from sklearn.ensemble import RandomForestClassifier
          from sklearn.model_selection import train_test_split

          data = pd.read_csv(data_path)
          X = data.drop('target', axis=1)
          y = data['target']
          # The held-out split is not used here; evaluate_model reads its own data file.
          X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2)
          model = RandomForestClassifier(n_estimators=100)
          model.fit(X_train, y_train)
          joblib.dump(model, model_path)

- Model Evaluation Component: Computes metrics and writes them out for comparison against baselines.

      def evaluate_model(data_path: str, model_path: str, metric_output_path: str):
          import joblib
          import pandas as pd
          from sklearn.metrics import accuracy_score

          data = pd.read_csv(data_path)
          X_test = data.drop('target', axis=1)
          y_test = data['target']
          model = joblib.load(model_path)
          y_pred = model.predict(X_test)
          accuracy = accuracy_score(y_test, y_pred)
          with open(metric_output_path, 'w') as f:
              f.write(str(accuracy))

Compile these into a Kubeflow pipeline, specifying execution order and dependencies. Trigger it on data commits or schedules.
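
What "execution order and dependencies" means can be shown without any pipeline framework. The sketch below orders the three steps with the standard library's graphlib module (Python 3.9 or later) and runs stand-in callables in that order. It is not Kubeflow code: the DEPENDENCIES mapping, execution_order, and run_pipeline are hypothetical names, and an orchestrator resolves the same graph for you.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set

# Each step maps to the set of steps that must finish before it starts.
DEPENDENCIES: Dict[str, Set[str]] = {
    "preprocess": set(),
    "train": {"preprocess"},
    "evaluate": {"train"},
}


def execution_order(dependencies: Dict[str, Set[str]]) -> List[str]:
    """Return the steps in an order that respects every dependency."""
    return list(TopologicalSorter(dependencies).static_order())


def run_pipeline(steps: Dict[str, Callable[[], None]],
                 dependencies: Dict[str, Set[str]]) -> List[str]:
    """Run each step once its prerequisites have completed; return the completed order."""
    completed = []
    for name in execution_order(dependencies):
        steps[name]()
        completed.append(name)
    return completed


# Stand-in callables; real steps would call the component functions with file paths.
stub_steps = {name: (lambda n=name: print(f"running {n}")) for name in DEPENDENCIES}
run_pipeline(stub_steps, DEPENDENCIES)
```

Declaring dependencies as data rather than as call order is what lets an orchestrator retry, cache, or parallelize individual steps.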

Automation reduces manual errors and training time by up to 70%. Versioning ensures full reproducibility, easing audits and debugging. A machine learning consultant optimizes pipelines for cost and performance, such as using spot instances to cut costs by 60-90%.

Machine learning consulting companies enhance setups with advanced monitoring and automated retraining. They integrate pipelines that compare new models to production baselines on metrics like accuracy and latency, auto-deploying improvements. This transforms training into a streamlined, automated workflow, aligning with business and IT standards.

Implementing Continuous Deployment for MLOps Models

To implement continuous deployment for MLOps models, establish a CI/CD pipeline that automates testing, building, and deployment. This ensures reliable, rapid movement of new versions to production. Use tools like GitHub Actions, Jenkins, or GitLab CI. For example, configure a pipeline triggered on main branch pushes to run unit tests, integration tests, and model validation before deployment.

Set up a basic pipeline with GitHub Actions:

- Create .github/workflows/ml-cd.yml.

- Define the workflow to trigger on pushes to main.

- Include jobs for testing, building a Docker image, and deploying to staging.

Sample workflow:

    name: ML Model CD
    on:
      push:
        branches: [ main ]
    jobs:
      test:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v3
          - name: Set up Python
            uses: actions/setup-python@v4
            with:
              python-version: '3.9'
          - name: Install dependencies
            run: pip install -r requirements.txt
          - name: Run tests
            run: python -m pytest tests/ -v
      build-and-deploy:
        needs: test
        runs-on: ubuntu-latest
        steps:
          # Each job starts with an empty workspace, so check out the code before building.
          - uses: actions/checkout@v3
          - name: Build Docker image
            run: docker build -t my-ml-model:latest .
          - name: Deploy to staging
            run: ./deploy.sh staging

This automation reduces errors, cuts deployment to minutes, and enables rapid iteration. For complex cases, machine learning consultants design tailored pipelines with model monitoring and rollback strategies.

In practice, include canary releases or blue-green deployments to minimize risk. Route a small traffic percentage to the new version, monitoring latency and accuracy. If metrics degrade, automate rollbacks. This is critical for high availability, as advocated by machine learning consulting companies.
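
A canary rollout needs two decisions: which requests go to the new version, and when to roll back. The sketch below shows one simple way to make both. The function names, the hash-based bucketing, and the tolerance value are assumptions for illustration; service meshes and serving platforms typically provide traffic splitting as configuration.

```python
import hashlib


def routes_to_canary(request_id: str, canary_percent: int) -> bool:
    """Deterministically assign a request to the canary based on a hash of its ID."""
    bucket = int(hashlib.sha256(request_id.encode("utf-8")).hexdigest(), 16) % 100
    return bucket < canary_percent


def should_rollback(baseline_error_rate: float,
                    canary_error_rate: float,
                    tolerance: float = 0.02) -> bool:
    """Roll back when the canary is worse than the baseline by more than the tolerance."""
    return canary_error_rate > baseline_error_rate + tolerance


# Send roughly 10% of requests to the new model version.
canary_hits = sum(routes_to_canary(f"req-{i}", 10) for i in range(1000))
print(f"{canary_hits} of 1000 requests routed to the canary")
print("rollback" if should_rollback(0.05, 0.10) else "keep canary")
```

Hashing the request or user ID, instead of drawing a random number per request, keeps each caller on the same version for the duration of the rollout.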

Incorporate infrastructure as code (IaC) with Terraform or AWS CloudFormation to manage environments consistently. This versions infrastructure and replicates setups for testing and production. Collaboration between engineers and a machine learning consultant ensures scalability with computational demands.

By implementing these steps, organizations achieve faster time-to-market, improved reliability, and swift responses to data drift or performance issues, leveraging MLOps automation fully.

Conclusion: Achieving Success with MLOps

To achieve success with MLOps, organizations must foster a culture of automation, monitoring, and cross-team collaboration. Implement robust pipelines for continuous integration, delivery, and training (CI/CD/CT) of models. For instance, automate retraining when data drift is detected. Follow this step-by-step guide using Python and MLflow:

- Monitor Data Drift: Use Alibi Detect to compare incoming and training data.

  Code snippet:

      from alibi_detect.cd import KSDrift

      # X_train and X_new are assumed to be arrays with the same feature columns;
      # retrain_model() stands for your own retraining entry point.
      drift_detector = KSDrift(X_train, p_val=0.05)
      preds = drift_detector.predict(X_new)
      if preds['data']['is_drift'] == 1:
          retrain_model()

- Automate Retraining: Trigger pipelines in platforms like Kubeflow or Azure ML upon drift detection, reducing performance degradation by up to 30%.

- Version and Log Models: Use MLflow to track experiments and versions.

  Code snippet:

      import mlflow

      mlflow.set_experiment("retraining_pipeline")

      # `model` is assumed to be the freshly retrained scikit-learn estimator.
      with mlflow.start_run():
          mlflow.log_param("retraining_trigger", "data_drift")
          mlflow.sklearn.log_model(model, "model")

Measurable benefits include a 50% reduction in manual effort, faster model updates, and improved accuracy through continuous learning. Engaging machine learning consultants helps design these pipelines, ensuring best practices. A machine learning consultant assesses workflows, identifies bottlenecks, and integrates tools like Kubernetes for scalable serving, achieving 99.9% uptime.

Partnering with machine learning consulting companies provides expertise in data engineering and DevOps, bridging prototype to production. They set up end-to-end platforms with:

- Feature stores for consistent feature access

- Automated testing for data quality, fairness, and performance

- Continuous monitoring for model and infrastructure health

This enables confident deployments with reproducible, scalable pipelines. For example, a financial firm cut fraud detection false positives by 20% with MLOps guidance. By investing in automation and external expertise, organizations transform ML into reliable, high-impact assets, driving business value in data-driven markets.

Measuring the Impact of Your MLOps Implementation

To measure MLOps impact, define key performance indicators (KPIs) aligned with business goals, covering model performance, operational efficiency, and costs. Track accuracy, inference latency, retraining frequency, and infrastructure expenses. Machine learning consultants often help define these KPIs for technical and business relevance.

Monitor model performance in production with centralized logging. Use MLflow to log metrics:

    import mlflow
    from sklearn.metrics import accuracy_score

    # Toy labels for illustration; in production these come from logged
    # predictions joined with ground truth.
    actuals = [1, 0, 1, 1, 0]
    predictions = [1, 0, 1, 0, 0]
    accuracy = accuracy_score(actuals, predictions)

    mlflow.log_metric("accuracy", accuracy)
    mlflow.log_param("model_version", "v2.1")

This tracks degradation and triggers retraining.

Measure operational efficiency with dashboards in Grafana or Datadog, visualizing pipeline duration, failure rates, and deployment success. Use SQL to extract metrics:

    SELECT
        pipeline_name,
        AVG(duration_minutes) AS avg_duration,
        COUNT(CASE WHEN status = 'failed' THEN 1 END) AS failures
    FROM pipeline_runs
    WHERE timestamp >= NOW() - INTERVAL '7 days'
    GROUP BY pipeline_name;

A machine learning consultant optimizes pipelines by addressing bottlenecks like inefficient features.

Track cost metrics for ROI. Tag cloud resources in AWS to attribute costs to projects, measuring cost per inference against business value. Engaging machine learning consulting companies offers tools for comprehensive analysis, with pre-built dashboards speeding insights.
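
The cost-per-inference comparison is simple arithmetic, sketched below. All figures are made-up placeholders, and the function names are hypothetical; the real inputs would come from tagged cloud billing data and request logs.

```python
def cost_per_inference(monthly_cost: float, monthly_requests: int) -> float:
    """Attributed infrastructure cost divided by the number of predictions served."""
    if monthly_requests <= 0:
        raise ValueError("monthly_requests must be positive")
    return monthly_cost / monthly_requests


def return_on_investment(monthly_value: float, monthly_cost: float) -> float:
    """Net value generated per unit of cost (1.0 means the value is double the cost)."""
    if monthly_cost <= 0:
        raise ValueError("monthly_cost must be positive")
    return (monthly_value - monthly_cost) / monthly_cost


# Placeholder figures for illustration only.
infra_cost = 1200.0      # monthly cost attributed to the project via resource tags
requests = 3_000_000     # predictions served in the same month
business_value = 3000.0  # estimated monthly value attributed to the model

print(f"cost per inference: {cost_per_inference(infra_cost, requests):.6f}")
print(f"ROI: {return_on_investment(business_value, infra_cost):.2f}")
```

Tracking both numbers over time shows whether optimizations reduce the unit cost and whether the model's value keeps pace with what it costs to run.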

Quantify benefits:

- Reduced time-to-market: Weeks to days

- Lower overhead: Automated retraining saves 20+ hours monthly

- Improved reliability: 99.9% serving uptime

Systematic tracking demonstrates value, justifies investments, and refines MLOps practices.

Future Trends and Evolving Best Practices in MLOps

MLOps is evolving toward greater automation, standardization, and scalability. Trends include the growing role of machine learning consultants and machine learning consulting companies in guiding organizations through advanced platforms. A machine learning consultant emphasizes data-centric AI, shifting focus from models to data quality and versioning.

Implement data versioning with DVC:

- Install and initialize DVC:

      pip install dvc
      dvc init

- Track datasets:

      dvc add data/

- Commit to Git:

      git add data.dvc .gitignore
      git commit -m "Track dataset with DVC"

- For updates, repeat the tracking and commit steps.

This ensures reproducibility, cutting debugging time when performance drops.

Unified feature stores are rising, preventing training-serving skew and accelerating development. Define features in Feast:

    # Written against an older Feast API (Feature and ValueType); newer releases
    # define the schema with Field and feast.types instead.
    from feast import Entity, Feature, FeatureView, ValueType
    from feast.data_source import FileSource

    driver = Entity(name="driver_id", value_type=ValueType.INT64)

    driver_stats_source = FileSource(path="driver_stats.parquet")

    driver_stats_fv = FeatureView(
        name="driver_stats",
        entities=["driver_id"],
        features=[Feature(name="avg_daily_trips", dtype=ValueType.INT64)],
        batch_source=driver_stats_source,
    )

This reduces feature engineering time by 40%.

Automated lineage tracking captures data-to-prediction paths, critical for auditability. Lineage graphs show impacts of data changes, enabling proactive responses to minimize downtime.
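
A lineage graph can be pictured as a mapping from each artifact to the artifacts derived from it. The sketch below walks such a graph to list everything affected by a change. The artifact names, the LINEAGE mapping, and the downstream function are hypothetical; lineage tools record these edges automatically during pipeline runs.

```python
from collections import deque
from typing import Dict, List

# Each artifact maps to the artifacts produced directly from it (made-up names).
LINEAGE: Dict[str, List[str]] = {
    "raw_events": ["features_v1"],
    "features_v1": ["model_a", "model_b"],
    "model_a": ["predictions_a"],
    "model_b": ["predictions_b"],
}


def downstream(graph: Dict[str, List[str]], node: str) -> List[str]:
    """Breadth-first walk returning every artifact derived from `node`."""
    seen = {node}
    queue = deque([node])
    affected: List[str] = []
    while queue:
        for child in graph.get(queue.popleft(), []):
            if child not in seen:
                seen.add(child)
                affected.append(child)
                queue.append(child)
    return affected


# Everything that may need to be rebuilt if the raw data changes.
print(downstream(LINEAGE, "raw_events"))
```

The same traversal run in the opposite direction, from a prediction back to its sources, is what supports audit questions such as which data a given model version was trained on.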

GitOps for MLOps uses Git as the single source for code, infrastructure, and deployments, enabling fully automated CI/CD. This declarative approach simplifies rollbacks and audits. Partnering with machine learning consulting companies accelerates adoption of these practices, ensuring scalability and resilience. The goal is a self-healing system where models monitor, retrain, and redeploy autonomously.

Summary

MLOps automates the machine learning lifecycle, ensuring models are scalable, reproducible, and valuable in production through practices like version control, CI/CD, and continuous monitoring. Engaging machine learning consultants or partnering with machine learning consulting companies helps organizations design and implement robust MLOps pipelines, integrating tools for data versioning, model training, and deployment. This approach reduces manual effort, accelerates time-to-market, and enhances model reliability, transforming AI projects into sustainable business assets. By adopting MLOps best practices, teams achieve greater collaboration, efficiency, and impact in their machine learning initiatives.