Introduction: The Evolution of Debugging

Debugging has long been one of the most challenging and time-consuming aspects of software development. Traditionally, programmers have relied on manual code reviews, print statements, and step-by-step execution to identify and fix errors. While these methods have proven effective over decades, they often require significant effort and deep expertise, and they can slow down the development process.

As software systems grow increasingly complex, with millions of lines of code and intricate dependencies, traditional debugging techniques struggle to keep pace. Bugs can hide in unexpected places, manifest intermittently, or arise from subtle interactions between components. This complexity has driven the search for smarter, more efficient ways to detect and resolve issues.

Enter AI-powered debugging — a revolutionary approach that leverages machine learning, natural language processing, and pattern recognition to assist developers in finding and fixing bugs faster and more accurately. By analyzing vast amounts of code and historical bug data, AI tools can identify anomalies, suggest potential fixes, and even predict where errors are likely to occur.

This evolution transforms debugging from a purely manual, reactive task into a proactive, collaborative process between human developers and intelligent agents. AI acts as a personal code detective, tirelessly scanning codebases, learning from past mistakes, and providing insights that help programmers focus on higher-level problem-solving.

In this article series, we will explore how AI is reshaping debugging, the tools available today, best practices for working with AI agents, and what the future holds for this exciting intersection of software engineering and artificial intelligence.

Understanding AI-Powered Debugging Tools

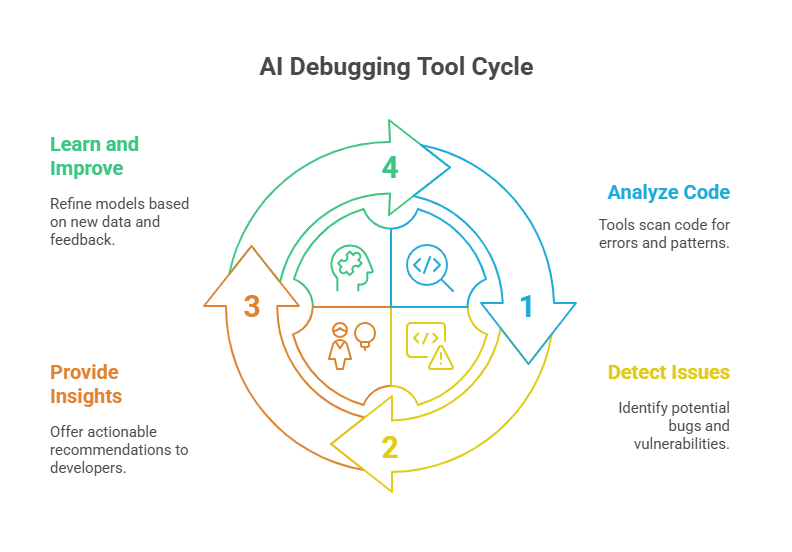

AI-powered debugging tools represent a new generation of software development assistants designed to help programmers identify, diagnose, and fix bugs more efficiently. Unlike traditional debugging methods that rely heavily on manual inspection and trial-and-error, these tools use advanced algorithms to analyze code, detect patterns, and provide actionable insights.

At their core, AI debugging tools leverage machine learning models trained on vast datasets of code and known bugs. This training enables them to recognize common coding errors, security vulnerabilities, and performance issues across different programming languages and frameworks. Some tools also incorporate natural language processing (NLP) to understand developer queries or comments, making the interaction more intuitive.

There are several types of AI-powered debugging tools available today. Static analysis tools scan source code without executing it, flagging potential problems such as syntax errors, type mismatches, or unsafe operations. Dynamic analysis tools monitor running applications to detect runtime errors, memory leaks, or unexpected behaviors. More advanced systems combine both approaches and can even suggest code fixes or generate test cases automatically.

One key feature of AI debugging tools is their ability to learn and improve over time. By continuously analyzing new codebases and developer feedback, these tools refine their models to provide more accurate and context-aware recommendations. This adaptability makes them invaluable in fast-paced development environments where code changes frequently.

Setting Up Your AI Debugging Environment

Integrating AI-powered debugging tools into your development workflow is a crucial step toward harnessing their full potential. Setting up an effective AI debugging environment involves selecting the right tools, configuring them properly, and ensuring smooth collaboration between the AI system and your existing processes.

The first step is to choose an AI debugging tool that fits your project’s technology stack and requirements. Many tools support popular programming languages like Python, JavaScript, Java, and C++, and offer features ranging from static code analysis to runtime monitoring. Consider factors such as ease of integration, customization options, and the level of AI assistance provided.

Once you have selected a tool, installation and configuration come next. Most AI debugging tools can be integrated directly into your code editor or integrated development environment (IDE), such as Visual Studio Code, IntelliJ IDEA, or Eclipse. This integration allows real-time feedback as you write code, making it easier to catch issues early. Additionally, some tools offer cloud-based platforms that analyze your code remotely and provide detailed reports.

Configuring the tool to match your project’s coding standards and workflows is essential. This may involve setting rules for code style, defining which types of bugs to prioritize, or customizing alerts to reduce noise. Many AI tools also allow you to connect them with version control systems like Git, enabling them to analyze code changes and provide context-aware suggestions.

To maximize the benefits, encourage your development team to familiarize themselves with the AI tool’s features and best practices. Training sessions or documentation can help developers understand how to interpret AI-generated insights and when to trust or question its recommendations.

Finally, establish a feedback loop where developers can report false positives or suggest improvements. This interaction helps the AI system learn and adapt to your specific codebase and team preferences, improving accuracy over time.
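As a rough illustration of such a feedback loop, the sketch below records findings that developers dismissed and hides matching ones in later runs. The `Finding` and `FeedbackLog` names, the rule identifiers, and the file paths are all hypothetical; real tools typically feed this kind of signal back into their models rather than keeping a simple suppression list.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Finding:
    rule: str   # hypothetical rule identifier, e.g. "unused-variable"
    path: str   # file the finding was reported in
    line: int


@dataclass
class FeedbackLog:
    """Remembers which findings developers marked as false positives."""
    dismissed: set[tuple[str, str]] = field(default_factory=set)

    def mark_false_positive(self, finding: Finding) -> None:
        # Key on rule and file so the dismissal survives line-number shifts.
        self.dismissed.add((finding.rule, finding.path))

    def keep_relevant(self, findings: list[Finding]) -> list[Finding]:
        """Drop findings that match an earlier dismissal."""
        return [f for f in findings if (f.rule, f.path) not in self.dismissed]


log = FeedbackLog()
noisy = Finding("unused-variable", "app/models.py", 10)
real = Finding("sql-injection", "app/views.py", 42)

log.mark_false_positive(noisy)
for finding in log.keep_relevant([noisy, real]):
    print(f"{finding.path}:{finding.line} {finding.rule}")
```

Keying the dismissal on rule and file is only one possible design choice; it trades precision for stability when code moves around.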

How AI Detects Bugs: Behind the Scenes

AI-powered debugging tools detect bugs by combining advanced techniques from machine learning, pattern recognition, and program analysis. Unlike traditional methods that rely on fixed rules or manual inspection, AI systems learn from vast amounts of code and historical bug data to identify anomalies and potential errors more intelligently.

One common approach is static code analysis enhanced with AI. The tool scans the source code without running it, looking for patterns that often indicate bugs, such as incorrect variable usage, unreachable code, or violations of coding standards. Machine learning models trained on large datasets help the AI recognize subtle issues that rule-based systems might miss, such as complex logic errors or security vulnerabilities.
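To make the idea of scanning code without running it concrete, here is a minimal sketch of one rule-based check, built on Python's standard `ast` module. It flags a statement that directly follows a `return`, `raise`, `break`, or `continue` in the same block. This is a plain rule, not a learned model; the `find_unreachable` helper and the sample source are illustrative assumptions.

```python
import ast

# Statements after which nothing else in the same block can run.
TERMINATORS = (ast.Return, ast.Raise, ast.Break, ast.Continue)


def find_unreachable(source: str) -> list[int]:
    """Return line numbers of statements that directly follow a terminator."""
    unreachable = []
    for node in ast.walk(ast.parse(source)):
        # Check every statement list a node can own (body, else, finally).
        for field_name in ("body", "orelse", "finalbody"):
            block = getattr(node, field_name, None)
            if not isinstance(block, list):
                continue
            for prev, stmt in zip(block, block[1:]):
                if isinstance(prev, TERMINATORS):
                    unreachable.append(stmt.lineno)
    return sorted(unreachable)


sample = """
def greet(name):
    return "Hello, " + name
    print("never runs")
"""

print(find_unreachable(sample))  # prints [4]
```

The source is parsed but never executed, which is what distinguishes static analysis from the dynamic techniques described next.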

Dynamic analysis is another technique where AI monitors the program during execution. By observing runtime behavior, the AI can detect memory leaks, race conditions, or unexpected exceptions. It can also analyze logs and performance metrics to pinpoint the root cause of failures. Some AI tools use anomaly detection algorithms to flag behaviors that deviate from normal patterns, which often signal bugs.
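The anomaly detection idea can be sketched with a simple statistical rule: flag any measurement that lies more than a chosen number of standard deviations from the mean. The response times below are made-up data, and the threshold of 2.0 is an arbitrary assumption; production tools generally use more robust models.

```python
from statistics import mean, stdev


def find_anomalies(samples: list[float], threshold: float = 2.0) -> list[int]:
    """Return indexes of samples more than `threshold` standard deviations from the mean."""
    if len(samples) < 2:
        return []
    mu = mean(samples)
    sigma = stdev(samples)
    if sigma == 0:
        return []  # all samples identical, nothing stands out
    return [i for i, value in enumerate(samples) if abs(value - mu) / sigma > threshold]


# Hypothetical response times in milliseconds collected from a running service.
response_times_ms = [102, 98, 101, 99, 100, 103, 97, 100, 450, 101]

print(find_anomalies(response_times_ms))  # prints [8]
```

Because a single outlier inflates the standard deviation it is measured against, small samples limit how extreme a score can get, which is one reason the threshold here is kept low.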

Natural language processing (NLP) plays a role when AI tools interpret developer comments, bug reports, or error messages. This helps the system understand the context and intent behind the code, improving its ability to suggest relevant fixes or tests.

Moreover, AI debugging tools often employ probabilistic models to assess the likelihood that a piece of code contains a bug. By combining multiple signals—such as code complexity, change history, and test coverage—the AI prioritizes areas that need attention, helping developers focus their efforts efficiently.
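One way to picture this prioritization is a logistic score that folds the three signals into a single value between 0 and 1. The sketch below is only an illustration: the weights, the bias, the `FileSignals` structure, and the file paths are invented, whereas a real tool would learn its parameters from historical bug data.

```python
import math
from dataclasses import dataclass


@dataclass
class FileSignals:
    path: str
    cyclomatic_complexity: int  # higher means more branching
    recent_commits: int         # changes within a recent window
    test_coverage: float        # fraction of lines covered, 0.0 to 1.0


# Hypothetical weights chosen for illustration only.
WEIGHTS = {"complexity": 0.08, "churn": 0.15, "coverage": -2.0}
BIAS = -2.0


def bug_risk(signals: FileSignals) -> float:
    """Combine the signals into a 0..1 score with a logistic function."""
    z = (
        BIAS
        + WEIGHTS["complexity"] * signals.cyclomatic_complexity
        + WEIGHTS["churn"] * signals.recent_commits
        + WEIGHTS["coverage"] * signals.test_coverage
    )
    return 1.0 / (1.0 + math.exp(-z))


files = [
    FileSignals("utils/strings.py", 4, 1, 0.95),
    FileSignals("billing/invoice.py", 35, 12, 0.40),
]

# Review the riskiest files first.
for item in sorted(files, key=bug_risk, reverse=True):
    print(f"{item.path}: {bug_risk(item):.2f}")
```

The negative coverage weight encodes the assumption that well-tested code is less likely to hide a bug, while complexity and frequent change push the score up.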

Benefits of Using AI in Debugging

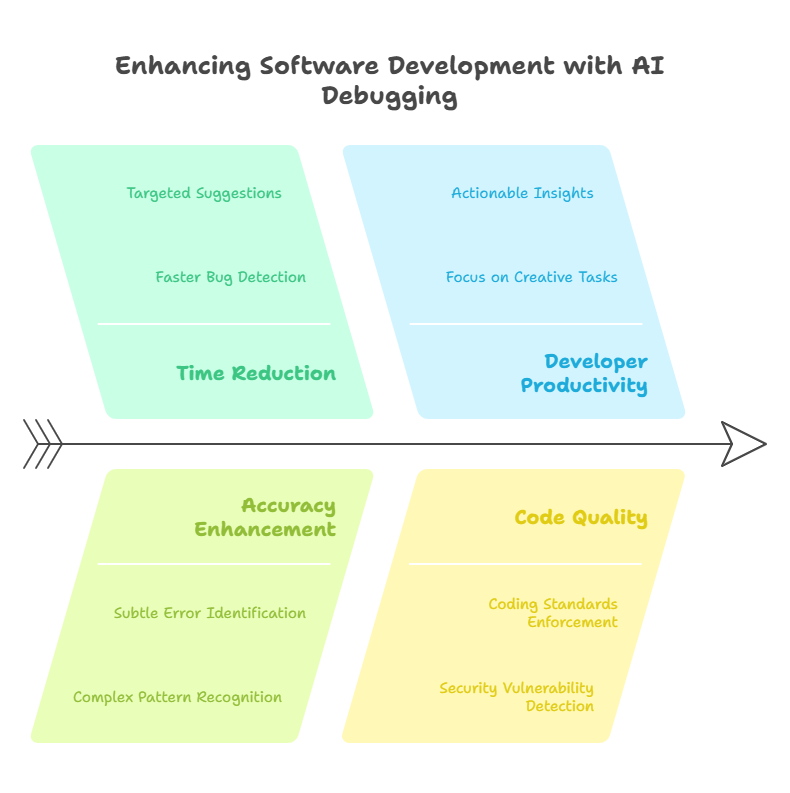

Incorporating AI into the debugging process offers numerous advantages that can significantly improve software development efficiency and quality. One of the most notable benefits is the reduction in time spent identifying and fixing bugs. AI tools can quickly analyze large codebases, detect issues that might take humans hours or days to find, and provide targeted suggestions, accelerating the debugging cycle.

AI-powered debugging can also improve accuracy. By learning from vast datasets of code and known bugs, AI systems can identify subtle errors and complex patterns that traditional methods might overlook. This can mean fewer missed bugs and a lower risk of introducing new errors during fixes, provided the suggestions are reviewed and tested.

Another key advantage is improved developer productivity. With AI handling routine error detection and offering actionable insights, developers can focus more on creative problem-solving and feature development. This shift not only boosts morale but also helps teams deliver higher-quality software faster.

AI debugging tools also promote better code quality and maintainability. They often enforce coding standards, detect security vulnerabilities early, and suggest best practices, leading to cleaner, safer, and more robust codebases.

Furthermore, AI systems continuously learn and adapt to your specific project and coding style, becoming more effective over time. This adaptability ensures that the debugging assistance remains relevant and valuable as the code evolves.

Finally, AI-powered debugging fosters collaboration by providing clear explanations and visualizations of issues, making it easier for teams to understand problems and agree on solutions.

Interpreting AI Debugging Suggestions

When using AI-powered debugging tools, it’s essential to critically evaluate and interpret the suggestions they provide. While AI can significantly speed up bug detection and offer potential fixes, blindly trusting every recommendation can lead to new issues or overlooked problems. Here’s a step-by-step guide on how to effectively evaluate and trust AI-generated fixes and recommendations.

Step 1: Understand the Suggestion Context

AI tools often provide explanations or highlight the part of the code where the issue is detected. Start by carefully reading the suggestion and understanding the context. Is the AI flagging a syntax error, a logical flaw, or a potential security vulnerability? Knowing the type of issue helps you assess its relevance.

Step 2: Review the Code Change

Before applying any AI-generated fix, review the proposed code change thoroughly. Check if the fix aligns with your project’s coding standards and intended functionality. Sometimes, AI might suggest a generic fix that doesn’t fit the specific logic of your application.

Step 3: Test the Fix

Always test the AI-suggested fix in a controlled environment. Run unit tests, integration tests, or manual tests to verify that the fix resolves the issue without introducing new bugs. Automated testing frameworks can help streamline this process.

Step 4: Consider Alternative Solutions

AI suggestions are based on patterns learned from data but may not always be the best or only solution. Consider alternative approaches, especially for complex bugs. Use the AI recommendation as a starting point rather than a final answer.

Step 5: Provide Feedback to the AI Tool

Many AI debugging tools improve over time by learning from user feedback. If a suggestion is incorrect or suboptimal, mark it as such if the tool allows. This feedback loop helps the AI refine its future recommendations.

Example: Interpreting AI Suggestions in Python

Suppose an AI tool reviews the following function, which calculates the factorial of a number but mishandles negative input:

```python
def factorial(n):
    if n == 0:
        return 1
    else:
        return n * factorial(n - 1)
```

The AI suggests adding input validation to handle negative numbers:

```python
def factorial(n):
    if n < 0:
        raise ValueError("Input must be a non-negative integer")
    if n == 0:
        return 1
    else:
        return n * factorial(n - 1)
```

How to interpret this suggestion:

Context: The AI identified that the original function does not handle negative inputs. A negative value never reaches the base case, so the recursion continues until Python raises a RecursionError.

Review: The fix adds a check for negative inputs and raises an appropriate exception, which aligns with good practice.

Test: You can test the function with various inputs:

```python
print(factorial(5))   # Expected output: 120
print(factorial(0))   # Expected output: 1
print(factorial(-1))  # Expected to raise ValueError
```

Alternative: You might consider returning None or 0 for negative inputs, but raising an exception is clearer and safer.

Feedback: If the AI tool allows, mark this suggestion as helpful.
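The manual checks from this example can also be captured as automated tests, so the fix is re-verified on every change. The sketch below uses Python's standard `unittest` module and repeats the validated `factorial` function so that it runs on its own; the test class and method names are illustrative.

```python
import unittest


def factorial(n):
    if n < 0:
        raise ValueError("Input must be a non-negative integer")
    if n == 0:
        return 1
    return n * factorial(n - 1)


class FactorialTests(unittest.TestCase):
    def test_positive_input(self):
        self.assertEqual(factorial(5), 120)

    def test_zero(self):
        self.assertEqual(factorial(0), 1)

    def test_negative_input_is_rejected(self):
        # The AI-suggested validation should turn bad input into a clear error.
        with self.assertRaises(ValueError):
            factorial(-1)


# Load and run the tests explicitly so the outcome is available as a value.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(FactorialTests)
result = unittest.TextTestRunner().run(suite)
print("All tests passed:", result.wasSuccessful())
```

Unlike a series of print statements, each test here runs independently, so the negative-input case does not stop the others from executing.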

Collaborating with AI: Best Practices

Working effectively with AI debugging tools requires a mindset shift from seeing AI as a replacement to viewing it as a collaborative partner. To maximize the benefits of AI-assisted debugging, developers should adopt best practices that foster smooth teamwork between human expertise and AI capabilities.

First, treat AI suggestions as valuable input rather than absolute answers. Always apply your domain knowledge and critical thinking to evaluate the recommendations. This approach helps prevent over-reliance on AI and encourages developers to stay engaged in the debugging process.

Second, integrate AI tools seamlessly into your existing workflow. Use AI-powered debugging features within your preferred IDE or version control system to get real-time feedback without disrupting your coding rhythm. Consistent use helps build trust and familiarity with the AI’s strengths and limitations.

Third, maintain clear communication within your development team about how AI tools are used. Share insights on which AI suggestions worked well and which didn’t, creating a collective knowledge base. This collaboration helps the team calibrate expectations and improve overall debugging efficiency.

Fourth, provide feedback to the AI system whenever possible. Many AI debugging tools learn from user interactions, so marking false positives or confirming useful suggestions helps the AI improve over time and tailor its assistance to your codebase.

Fifth, balance automation with human oversight. Use AI to handle repetitive or straightforward bug detection tasks, freeing developers to focus on complex problems that require creativity and intuition. This balance ensures that AI augments rather than replaces human judgment.

Finally, stay updated on AI debugging advancements and continuously refine your collaboration strategies. As AI tools evolve, new features and capabilities will emerge, offering fresh opportunities to enhance your debugging process.

Limitations and Risks of AI Debugging

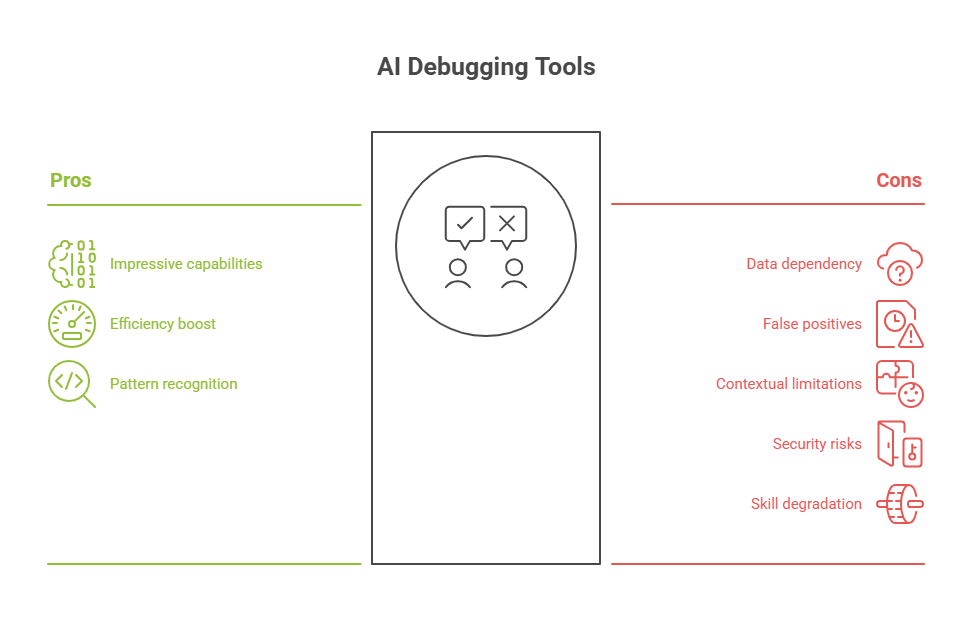

While AI-powered debugging tools offer impressive capabilities, it’s important to understand their limitations and potential risks to use them effectively and safely.

One key limitation is that AI models rely heavily on the data they were trained on. If the training data lacks examples of certain bug types or coding styles, the AI may fail to detect those issues or generate inappropriate suggestions. This can lead to missed bugs or incorrect fixes.

AI debugging tools may also produce false positives—flagging code as problematic when it is actually correct. Excessive false alarms can frustrate developers and reduce trust in the tool, causing them to ignore valuable warnings.

Another challenge is the lack of deep contextual understanding. AI can analyze code patterns and syntax but may struggle with complex business logic or domain-specific requirements. This means AI might suggest fixes that break intended functionality or overlook subtle bugs tied to application context.

Security and privacy risks also exist. AI tools that analyze proprietary or sensitive codebases must ensure data confidentiality. Using cloud-based AI services may expose code to external servers, raising concerns about intellectual property protection.

Over-reliance on AI debugging can lead to skill degradation among developers. If developers depend too much on AI suggestions without critical evaluation, their problem-solving and debugging skills may weaken over time.

To mitigate these risks, it’s crucial to combine AI debugging with human expertise. Always review AI suggestions carefully, validate fixes through testing, and maintain a strong understanding of your codebase. Additionally, choose AI tools that prioritize data security and allow customization to fit your project’s needs.

Case Studies: Real-World AI Debugging Successes

AI-assisted debugging has already demonstrated significant impact in real-world software development, improving code quality, reducing bug resolution time, and enhancing developer productivity. Let’s explore a few illustrative case studies and include a Python example to show how AI can help identify and fix bugs.

Case Study 1: Reducing Bug Resolution Time in a Web Application

A development team working on a complex web application integrated an AI debugging tool into their workflow. The AI quickly identified common issues such as null pointer exceptions and improper input validation that were causing frequent crashes. By automatically suggesting fixes, the team reduced average bug resolution time by 40%, allowing faster feature delivery and improved user experience.

Case Study 2: Improving Code Quality in Open Source Projects

An open source project used AI-powered static analysis to scan pull requests for potential bugs and security vulnerabilities. The AI flagged subtle issues like race conditions and unsafe data handling that human reviewers missed. This proactive detection helped maintain high code quality and prevented critical bugs from reaching production.

Case Study 3: Debugging Recursive Function Errors with AI

Consider a Python function intended to compute the nth Fibonacci number recursively. The original code returns correct results for non-negative integers but never checks its input, so negative or non-integer values never reach a base case:

```python
def fibonacci(n):
    if n == 0:
        return 0
    elif n == 1:
        return 1
    else:
        return fibonacci(n - 1) + fibonacci(n - 2)
```

An AI debugging tool flagged that the function lacks input validation and suggested adding checks to handle negative inputs, which skip both base cases and keep recursing until Python raises a RecursionError.

Here’s the improved version incorporating the AI suggestion:

```python
def fibonacci(n):
    if not isinstance(n, int):
        raise TypeError("Input must be an integer")
    if n < 0:
        raise ValueError("Input must be a non-negative integer")
    if n == 0:
        return 0
    elif n == 1:
        return 1
    else:
        return fibonacci(n - 1) + fibonacci(n - 2)
```

Testing the function:

```python
print(fibonacci(7))    # Expected output: 13
print(fibonacci(-1))   # Raises ValueError
print(fibonacci(3.5))  # Raises TypeError (run separately, since the line above stops the script)
```

This example shows how AI can help catch edge cases and improve function robustness by suggesting input validation and error handling.

Future Trends in AI Debugging

The field of AI-assisted debugging is rapidly evolving, with several emerging technologies and trends poised to transform how developers identify and fix software issues in the near future.

One major trend is the integration of large language models (LLMs) with code understanding capabilities. These models will become more context-aware, enabling them to comprehend entire codebases, project documentation, and even user requirements to provide more accurate and relevant debugging suggestions.

Another advancement is the rise of explainable AI (XAI) in debugging tools. Future AI systems will not only suggest fixes but also clearly explain the reasoning behind their recommendations, helping developers trust and learn from AI insights rather than treating them as black boxes.

Real-time collaborative debugging powered by AI will become more common. Multiple developers and AI agents will work together simultaneously, sharing insights and automatically synchronizing fixes across distributed teams, improving productivity and reducing miscommunication.

The use of automated program repair (APR) techniques will grow, where AI not only identifies bugs but generates and tests multiple candidate patches autonomously, selecting the best fix based on test results and code quality metrics.
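The generate-and-validate loop behind automated program repair can be sketched in a few lines: run each candidate patch against the test suite and keep the one that passes the most tests. Everything here is a toy assumption, including the hand-written candidates for an absolute-value function; a real system would generate its patches and use a much richer selection criterion.

```python
from typing import Callable

# Each test is an (input, expected output) pair for an absolute-value function.
TESTS = [(3, 3), (-3, 3), (0, 0)]

# Hypothetical candidate patches; a real system would generate these.
CANDIDATES: dict[str, Callable[[int], int]] = {
    "original": lambda x: x,
    "negate_always": lambda x: -x,
    "negate_if_negative": lambda x: -x if x < 0 else x,
}


def passed_tests(func: Callable[[int], int]) -> int:
    """Count how many tests the candidate passes; an exception counts as a failure."""
    passed = 0
    for arg, expected in TESTS:
        try:
            if func(arg) == expected:
                passed += 1
        except Exception:
            pass
    return passed


def select_patch(candidates: dict[str, Callable[[int], int]]) -> str:
    """Return the name of the candidate that passes the most tests."""
    return max(candidates, key=lambda name: passed_tests(candidates[name]))


print(select_patch(CANDIDATES))  # prints negate_if_negative
```

Selection is only as good as the tests: a patch that passes a weak suite can still be wrong, which is why the human review described earlier remains part of the loop.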

Integration with DevOps pipelines will deepen, allowing AI debugging tools to continuously monitor code changes, run tests, and detect regressions early in the development lifecycle, enabling faster feedback loops and more reliable releases.

Finally, advances in multimodal AI will allow debugging tools to analyze not just code but related artifacts such as logs, error messages, screenshots, and even video recordings of software behavior, providing richer context for diagnosing complex issues.

Together, these trends point toward a future where AI debugging tools become indispensable partners—intelligent, transparent, and deeply integrated—helping developers write better code faster while reducing the cognitive load of troubleshooting.

Getting Started with AI Debugging Tools

If you’re interested in leveraging AI to improve your debugging process, here’s a practical guide to get started with AI debugging tools effectively.

First, choose the right AI debugging tool that fits your programming language, development environment, and project needs. Many popular IDEs now offer AI-powered extensions or plugins that integrate seamlessly, such as AI code assistants or static analyzers.

Next, install and configure the tool according to your workflow. This may involve setting up API keys, adjusting analysis depth, or customizing rules to reduce noise from false positives. Spend some time exploring the tool’s features and documentation to understand its capabilities.

Start by running the AI tool on a small codebase or a specific module to see how it identifies issues and suggests fixes. Review the AI’s recommendations carefully, applying your judgment to accept or modify the suggestions.

Incorporate the AI tool into your regular development cycle—for example, by running it before commits or as part of your continuous integration pipeline. This helps catch bugs early and maintain code quality consistently.

Encourage your team to share feedback and experiences with the AI tool. Collaborative learning helps everyone understand its strengths and limitations, improving overall effectiveness.

Finally, keep learning and experimenting. AI debugging tools are evolving rapidly, so staying updated on new features and best practices will help you get the most value.

Here’s a simple Python example demonstrating how you might run a static analysis tool programmatically to check code quality. It uses flake8, a conventional rule-based linter, as a stand-in for an AI-powered analyzer:

```python
import subprocess


def run_linter(file_path):
    # Example using flake8 as a linter
    result = subprocess.run(['flake8', file_path], capture_output=True, text=True)
    if result.returncode == 0:
        print("No issues found.")
    else:
        print("Issues detected:")
        print(result.stdout)


# Run linter on a sample Python file
run_linter('example.py')
```

This script runs a linter on a Python file and prints any detected issues. Many AI debugging tools offer similar command-line or API interfaces you can integrate into your workflow.

Best Practices for Using AI Debugging Tools

To maximize the benefits of AI debugging tools while minimizing potential pitfalls, it’s important to follow some best practices when integrating them into your development workflow.

First, always treat AI suggestions as guidance, not absolute truth. Carefully review and understand the recommendations before applying them to avoid introducing new bugs or breaking existing functionality.

Maintain a strong foundation in manual debugging and code review skills. AI tools are assistants, not replacements, so your expertise is essential for interpreting AI outputs and making informed decisions.

Customize the AI tool’s settings to fit your project’s coding standards and requirements. This helps reduce false positives and ensures the tool’s feedback is relevant and actionable.

Incorporate AI debugging into your continuous integration (CI) pipeline to catch issues early and consistently. Automated checks can prevent bugs from reaching production and improve overall code quality.

Encourage collaboration and knowledge sharing within your team about AI tool usage. Sharing experiences and tips helps everyone leverage the tool more effectively and fosters a culture of quality.

Regularly update and maintain your AI tools to benefit from the latest improvements, bug fixes, and new features. Staying current ensures you get the best performance and accuracy.

Finally, be mindful of security and privacy concerns. Avoid exposing sensitive code or data to external AI services unless you have appropriate safeguards and agreements in place.

By following these best practices, you can harness AI debugging tools as powerful allies in your software development process, improving efficiency and code reliability without compromising control or quality.
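The continuous integration practice above can be sketched as a small gate script: run each check as a subprocess and turn the results into a single exit code. The `run_check` and `gate` helpers are hypothetical names, and the two commands are harmless stand-ins so the sketch runs anywhere; in a real pipeline you would list your own checks, for example `[sys.executable, "-m", "flake8", "src"]`, where `src` is an assumed source directory.

```python
import subprocess
import sys


def run_check(command: list[str]) -> bool:
    """Run one check and report whether it exited with status 0."""
    result = subprocess.run(command, capture_output=True, text=True)
    if result.returncode != 0:
        print(f"FAILED: {' '.join(command)}")
        print(result.stdout or result.stderr)
    return result.returncode == 0


def gate(checks: list[list[str]]) -> int:
    """Return 0 if every check passes, 1 otherwise, for use as an exit code."""
    # Run all checks before deciding, so every failure is reported.
    results = [run_check(command) for command in checks]
    return 0 if all(results) else 1


# Stand-in commands: the first succeeds, the second simulates a failing check.
checks = [
    [sys.executable, "-c", "print('lint ok')"],
    [sys.executable, "-c", "import sys; sys.exit(1)"],
]

exit_code = gate(checks)
print("exit code:", exit_code)
```

A CI job or pre-commit hook would pass the returned value to `sys.exit`, so that a failing check blocks the merge or commit.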
