Introduction: The Role of AI Agents in Code Quality Assurance

In modern software development, maintaining high code quality is essential for building reliable, maintainable, and secure applications. Traditionally, code quality assurance has relied on manual code reviews, static analysis tools, and adherence to coding standards. However, as projects grow in complexity and teams become more distributed, these traditional methods can become time-consuming and prone to human error.

AI agents are transforming the landscape of code quality assurance by acting as intelligent guardians of codebases. These agents leverage machine learning, natural language processing, and advanced pattern recognition to automatically review code, detect issues, and provide actionable recommendations. Unlike static tools that rely solely on predefined rules, AI agents can learn from historical data, adapt to evolving coding practices, and identify subtle patterns that might escape human reviewers.

The integration of AI agents into the development process brings several key benefits. They can perform continuous, real-time code analysis, ensuring that quality checks are not limited to specific review stages. AI agents also help standardize code reviews across teams, reducing subjectivity and ensuring consistent application of best practices. By automating routine checks, they free up developers to focus on more complex and creative aspects of software engineering.

Moreover, AI agents can provide personalized feedback, tailored to the coding style and experience level of individual developers. This not only accelerates the onboarding of new team members but also fosters a culture of continuous learning and improvement.

In summary, AI agents are redefining code quality assurance by combining automation, intelligence, and adaptability. As these technologies continue to evolve, they promise to make software development faster, safer, and more efficient than ever before.

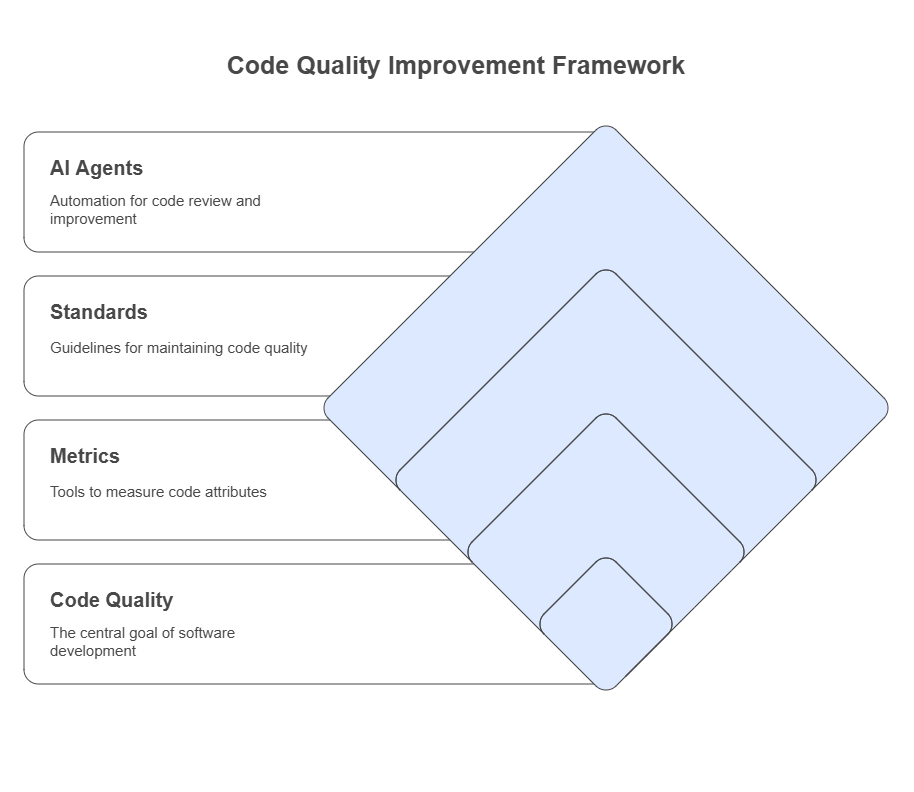

Understanding Code Quality: Metrics and Standards

Code quality is a multifaceted concept that encompasses various attributes of software code, such as readability, maintainability, performance, security, and correctness. To effectively assess and improve code quality, developers and organizations rely on a set of well-defined metrics and standards.

Key Code Quality Metrics

Some of the most commonly used metrics include:

Code Complexity: Measures like Cyclomatic Complexity quantify the number of independent paths through the code, helping identify overly complex functions that are hard to understand and maintain.

Code Coverage: Indicates the percentage of code executed by automated tests, which helps reveal critical parts of the codebase that remain untested.

Duplication: Detects repeated code blocks that can lead to maintenance challenges and bugs.

Code Style Compliance: Ensures adherence to coding conventions and formatting rules, which improves readability and team consistency.

Bug Density: Tracks the number of defects relative to code size, commonly expressed per thousand lines of code (KLOC), highlighting problematic areas.

Security Vulnerabilities: Identifies potential security risks such as injection flaws, insecure data handling, or improper authentication.
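
To make the complexity metric above more concrete, here is a minimal sketch that approximates Cyclomatic Complexity with Python's standard `ast` module. It counts decision points and adds one, which is a common simplification rather than a full implementation of the metric. The helper names (`cyclomatic_complexity`, `complexity_report`) and the sample function are hypothetical.

```python
import ast

# Node types treated as decision points (an approximation of the metric).
BRANCH_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler,
                ast.IfExp, ast.Assert, ast.comprehension)


def cyclomatic_complexity(func_node):
    """Approximate complexity as 1 + the number of decision points."""
    complexity = 1
    for node in ast.walk(func_node):
        if isinstance(node, BRANCH_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):
            # 'a and b and c' has three operands and adds two extra paths.
            complexity += len(node.values) - 1
    return complexity


def complexity_report(source):
    """Map each function name in the source to its approximate complexity."""
    tree = ast.parse(source)
    return {
        node.name: cyclomatic_complexity(node)
        for node in ast.walk(tree)
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef))
    }


sample = '''
def classify(n):
    if n < 0:
        return "negative"
    for d in range(2, n):
        if n % d == 0 and n > 2:
            return "composite"
    return "other"
'''

print(complexity_report(sample))  # {'classify': 5}
```

The sample scores 5: one for the function itself, two for the `if` statements, one for the `for` loop, and one for the `and` operator. A team could compare such scores against a threshold of its own choosing to flag functions for review.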

Standards and Best Practices

To maintain high code quality, many teams adopt industry standards and best practices, such as:

SOLID Principles: Guidelines for designing maintainable and scalable object-oriented software.

Clean Code Practices: Emphasizing clarity, simplicity, and meaningful naming conventions.

Design Patterns: Reusable solutions to common software design problems.

Secure Coding Standards: Guidelines such as OWASP's secure coding practices, which help teams prevent common vulnerabilities.

Role of AI Agents in Applying Metrics and Standards

AI agents can automatically measure these metrics and check compliance with standards during code reviews. By analyzing large codebases and historical data, they can prioritize issues based on their impact on overall quality and suggest improvements aligned with best practices.

How AI Agents Perform Automated Code Reviews

Automated code reviews powered by AI agents represent a significant advancement over traditional static analysis tools. These agents combine artificial intelligence techniques with software engineering knowledge to analyze code more intelligently, efficiently, and contextually.

Core Processes in AI-Driven Code Reviews

Code Parsing and Analysis

AI agents begin by parsing the source code to understand its structure, syntax, and semantics. This involves building abstract syntax trees (ASTs) or intermediate representations that allow the agent to analyze code beyond simple text patterns.
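
As a rough illustration of this parsing step, the sketch below uses Python's standard `ast` module to turn source text into a syntax tree and extract a few structural facts from it. The sample source and the `summarize` helper are hypothetical. A real agent would likely build richer representations, but the principle of working on structure rather than raw text is the same.

```python
import ast

source = '''
import os

def load(path, encoding="utf-8"):
    with open(path, encoding=encoding) as handle:
        return handle.read()

class Cache:
    def get(self, key):
        return None
'''


def summarize(tree):
    """Collect imports, function names with argument counts, and class names."""
    summary = {"imports": [], "functions": {}, "classes": []}
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            summary["imports"].extend(alias.name for alias in node.names)
        elif isinstance(node, ast.FunctionDef):
            summary["functions"][node.name] = len(node.args.args)
        elif isinstance(node, ast.ClassDef):
            summary["classes"].append(node.name)
    return summary


tree = ast.parse(source)  # parsing only; the sample code is never executed
summary = summarize(tree)
print(summary)
```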

Pattern Recognition and Anomaly Detection

Using machine learning models trained on large datasets of code, AI agents identify common code smells, anti-patterns, and potential bugs. They can detect subtle issues such as inconsistent naming, improper resource management, or inefficient algorithms that traditional tools might miss.

Contextual Understanding

Unlike rule-based tools, AI agents consider the broader context of the code, including project-specific conventions, historical changes, and developer behavior. This enables more accurate identification of problematic code and reduces false positives.

Natural Language Processing (NLP) for Comments and Documentation

AI agents can analyze code comments and documentation to ensure they are clear, relevant, and up to date. They may also generate suggestions to improve documentation quality, enhancing overall code maintainability.

Generating Recommendations

Based on detected issues, AI agents provide actionable recommendations. These can range from simple fixes like renaming variables to more complex refactoring suggestions or security improvements.

Continuous Learning and Adaptation

AI agents improve over time by learning from developer feedback and new code patterns. This adaptive capability ensures that the automated reviews stay relevant as coding standards and project requirements evolve.

Benefits of AI-Powered Automated Reviews

Speed and Scalability: AI agents can analyze large codebases quickly and continuously, enabling real-time feedback.

Consistency: They apply standards uniformly across teams and projects, reducing subjective biases.

Depth of Analysis: AI agents uncover complex issues that may be overlooked in manual reviews.

Developer Support: By providing clear explanations and suggestions, they assist developers in writing better code.

Techniques for Detecting Code Smells and Anti-Patterns

Code smells and anti-patterns are indicators of poor design or implementation choices that can lead to maintainability issues, bugs, and technical debt. Detecting these early is crucial for sustaining code quality, and AI agents employ a variety of techniques to identify them effectively.

Common Code Smells and Anti-Patterns

Some typical examples include:

Long Methods: Functions that are excessively long and complex.

Duplicated Code: Repeated code blocks that increase maintenance effort.

God Objects: Classes that handle too many responsibilities.

Feature Envy: Methods that overly depend on data from other classes.

Shotgun Surgery: Changes that require modifications in many places.

Dead Code: Unused or unreachable code segments.
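
One of the smells listed above, duplicated code, can be sketched with a simple structural fingerprint. The example below assumes that two functions count as duplicates when their bodies produce identical AST dumps, so it ignores formatting and comments but does not catch copies with renamed variables. The function name `find_duplicate_functions` and the sample are hypothetical.

```python
import ast
from collections import defaultdict


def find_duplicate_functions(source):
    """Group functions whose bodies have an identical AST structure."""
    groups = defaultdict(list)
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.FunctionDef):
            # ast.dump omits line numbers by default, so only structure counts.
            fingerprint = "".join(ast.dump(stmt) for stmt in node.body)
            groups[fingerprint].append(node.name)
    return [sorted(names) for names in groups.values() if len(names) > 1]


sample = '''
def total_price(items):
    result = 0
    for item in items:
        result += item
    return result

def sum_scores(items):
    result = 0
    for item in items:   # same logic under a different name
        result += item
    return result

def first(items):
    return items[0]
'''

print(find_duplicate_functions(sample))  # [['sum_scores', 'total_price']]
```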

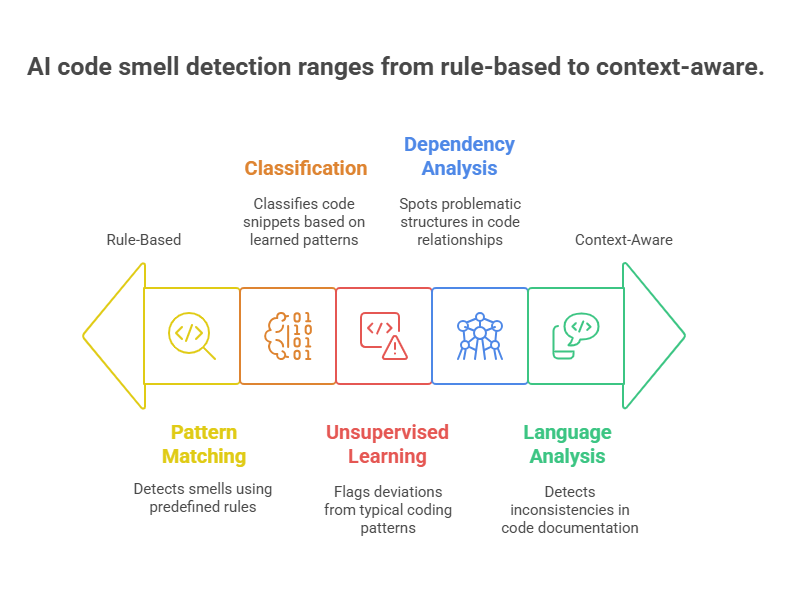

AI Techniques for Detection

Static Code Analysis with Pattern Matching

AI agents use static analysis to scan code for known patterns associated with smells and anti-patterns. This involves rule-based detection enhanced by machine learning models that can generalize beyond explicit rules.

Machine Learning Classification

Supervised learning models are trained on labeled datasets containing examples of clean code and code with smells. These models then classify new code snippets and flag potential issues, with accuracy that depends on how well the training data represents the target codebase.

Anomaly Detection

Unsupervised learning techniques detect deviations from typical coding patterns, flagging unusual structures or behaviors that may indicate anti-patterns.
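
A very small stand-in for this idea is a statistical outlier check: no labels are needed, and anything far from the typical value is flagged. The sketch below applies a z-score test to function lengths. The threshold of 2.0, the function name `flag_outliers`, and the sample data are assumptions for illustration, and a production agent would likely use richer features and learned models.

```python
from statistics import mean, pstdev


def flag_outliers(metric_by_name, z_threshold=2.0):
    """Return names whose metric lies more than z_threshold std devs from the mean."""
    values = list(metric_by_name.values())
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        return []  # all values identical, nothing stands out
    return sorted(
        name for name, value in metric_by_name.items()
        if abs(value - mu) / sigma > z_threshold
    )


# Hypothetical function lengths (in lines) collected from a codebase.
function_lengths = {
    "parse": 12, "render": 15, "validate": 10, "save": 14,
    "load": 11, "export": 13, "sync": 12, "process_everything": 140,
}

print(flag_outliers(function_lengths))  # ['process_everything']
```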

Graph-Based Analysis

By representing code as graphs (e.g., call graphs, dependency graphs), AI agents analyze relationships and dependencies to spot problematic structures like tightly coupled modules or cyclic dependencies.
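
Cyclic dependencies in particular lend themselves to a short example. The sketch below runs a depth-first search over a dependency graph stored as a plain dictionary and returns the first cycle it finds. The module names and the `find_cycle` helper are hypothetical.

```python
def find_cycle(graph):
    """Return one dependency cycle as a list of nodes, or None if acyclic."""
    WHITE, GRAY, BLACK = 0, 1, 2  # unvisited, on the current path, finished
    color = {node: WHITE for node in graph}
    path = []

    def visit(node):
        color[node] = GRAY
        path.append(node)
        for dep in graph.get(node, ()):
            state = color.get(dep, WHITE)
            if state == GRAY:
                # dep is already on the current path, so we closed a loop.
                return path[path.index(dep):] + [dep]
            if state == WHITE:
                cycle = visit(dep)
                if cycle:
                    return cycle
        path.pop()
        color[node] = BLACK
        return None

    for node in graph:
        if color[node] == WHITE:
            cycle = visit(node)
            if cycle:
                return cycle
    return None


# Hypothetical module dependency graph: module -> modules it imports.
dependencies = {
    "orders": ["billing"],
    "billing": ["customers"],
    "customers": ["orders"],
    "reports": ["orders"],
}

print(find_cycle(dependencies))  # ['orders', 'billing', 'customers', 'orders']
```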

Natural Language Processing (NLP)

Analyzing variable names, comments, and documentation helps detect inconsistencies or misleading identifiers that contribute to code smells.

Benefits of AI-Driven Detection

Early Identification: AI agents can catch smells during development, preventing accumulation of technical debt.

Context Awareness: They consider project-specific coding styles and historical data to reduce false alarms.

Actionable Insights: Beyond detection, AI agents often suggest refactoring strategies to resolve identified issues.

Integrating AI Agents into the Development Workflow

To maximize their impact, AI agents must be seamlessly integrated into existing software development workflows. Proper integration ensures that AI-driven code quality checks complement developer activities without causing disruptions or delays.

Key Integration Points

Version Control Systems (VCS)

AI agents can be connected to platforms like GitHub, GitLab, or Bitbucket to analyze code changes in pull requests or commits. This enables automated review comments and quality checks as part of the code review process.

Continuous Integration/Continuous Deployment (CI/CD) Pipelines

Embedding AI agents into CI/CD pipelines allows for automated code analysis during build and test stages. This ensures that only code meeting quality standards progresses through the deployment process.

Integrated Development Environments (IDEs)

AI agents integrated into IDEs provide real-time feedback as developers write code. This immediate assistance helps catch issues early and improves developer productivity.

Collaboration and Communication Tools

Notifications and reports generated by AI agents can be shared via team communication platforms like Slack or Microsoft Teams, keeping all stakeholders informed about code quality status.

Best Practices for Integration

Non-Intrusive Feedback: AI agents should provide suggestions and warnings without overwhelming developers, allowing them to prioritize issues effectively.

Customization and Configurability: Teams should be able to tailor AI agent settings to align with project-specific standards and preferences.

Incremental Adoption: Gradually introducing AI agents helps teams adapt and build trust in automated reviews.

Clear Reporting: AI agents must generate understandable and actionable reports to facilitate quick resolution of issues.

Benefits of Workflow Integration

Improved Code Quality: Continuous and automated checks reduce defects and technical debt.

Faster Development Cycles: Early detection of issues minimizes costly rework.

Enhanced Collaboration: Shared insights foster better communication between developers, reviewers, and managers.

Generating Actionable Recommendations for Developers

AI agents not only detect issues in code but also provide developers with actionable recommendations to improve code quality. These recommendations help bridge the gap between identifying problems and implementing effective solutions, making the review process more productive and developer-friendly.

How AI Agents Generate Recommendations

Issue Classification

After detecting a code issue, the AI agent classifies it by type (e.g., security flaw, performance bottleneck, style violation) to tailor the recommendation accordingly.

Contextual Analysis

The agent analyzes the surrounding code context, project conventions, and historical fixes to suggest the most relevant and practical solutions.

Refactoring Suggestions

For structural problems like code smells or anti-patterns, AI agents recommend specific refactoring techniques such as extracting methods, renaming variables, or simplifying logic.

Code Snippets and Examples

To assist developers, AI agents often provide example code snippets demonstrating the recommended changes, making it easier to understand and apply fixes.

Prioritization and Severity

Recommendations are prioritized based on the severity and potential impact of the issue, helping developers focus on critical improvements first.
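
A minimal sketch of classification and prioritization might look like the following. The `Finding` structure, the severity ranking, and the rule names are all hypothetical. The point is only that findings carry a category and a severity, and that the most severe ones are presented first.

```python
from dataclasses import dataclass

# Lower rank means higher priority (an assumed ordering).
SEVERITY_RANK = {"critical": 0, "high": 1, "medium": 2, "low": 3}


@dataclass
class Finding:
    rule: str
    category: str   # e.g. "security", "performance", "style"
    severity: str   # one of the SEVERITY_RANK keys
    file: str
    line: int


def prioritize(findings):
    """Sort findings by severity first, then by file and line for stable output."""
    return sorted(findings, key=lambda f: (SEVERITY_RANK[f.severity], f.file, f.line))


findings = [
    Finding("unused-import", "style", "low", "app.py", 3),
    Finding("sql-injection", "security", "critical", "db.py", 42),
    Finding("nested-loop", "performance", "medium", "report.py", 17),
    Finding("hardcoded-secret", "security", "critical", "config.py", 8),
]

for finding in prioritize(findings):
    print(f"[{finding.severity}] {finding.category}: {finding.rule} "
          f"({finding.file}:{finding.line})")
```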

Learning from Feedback

AI agents adapt their recommendations over time by learning from developer actions and feedback, improving relevance and reducing unnecessary suggestions.

Benefits of Actionable Recommendations

Accelerated Development: Developers spend less time diagnosing issues and more time fixing them.

Improved Code Quality: Clear guidance leads to better adherence to best practices.

Enhanced Developer Experience: Supportive feedback fosters learning and confidence.

Example: Python Code for Generating a Simple Recommendation

Below is a simplified Python example illustrating how an AI agent might generate a recommendation for a detected long function by suggesting method extraction.

```python
def analyze_function_length(function_code, max_lines=20):
    """Return True if the function spans more than max_lines lines."""
    lines = function_code.strip().split('\n')
    return len(lines) > max_lines


def generate_recommendation(function_name, function_code):
    """Build a human-readable recommendation based on function length."""
    if analyze_function_length(function_code):
        # Count lines the same way the length check does.
        line_count = len(function_code.strip().split('\n'))
        return (f"The function '{function_name}' is quite long ({line_count} lines). "
                "Consider refactoring by extracting smaller helper functions "
                "to improve readability and maintainability.")
    return f"The function '{function_name}' looks good in terms of length."


# Example usage
sample_function = """
def process_data(data):
    # Imagine this function has more than 20 lines of code
    pass
"""

recommendation = generate_recommendation("process_data", sample_function)
print(recommendation)
```

As written, the sample function is only three lines long, so the script prints the "looks good" message. Passing a function longer than 20 lines triggers the refactoring suggestion instead.

This simple logic can be extended with more sophisticated analysis and integrated into AI agents to provide developers with meaningful, actionable advice during code reviews.

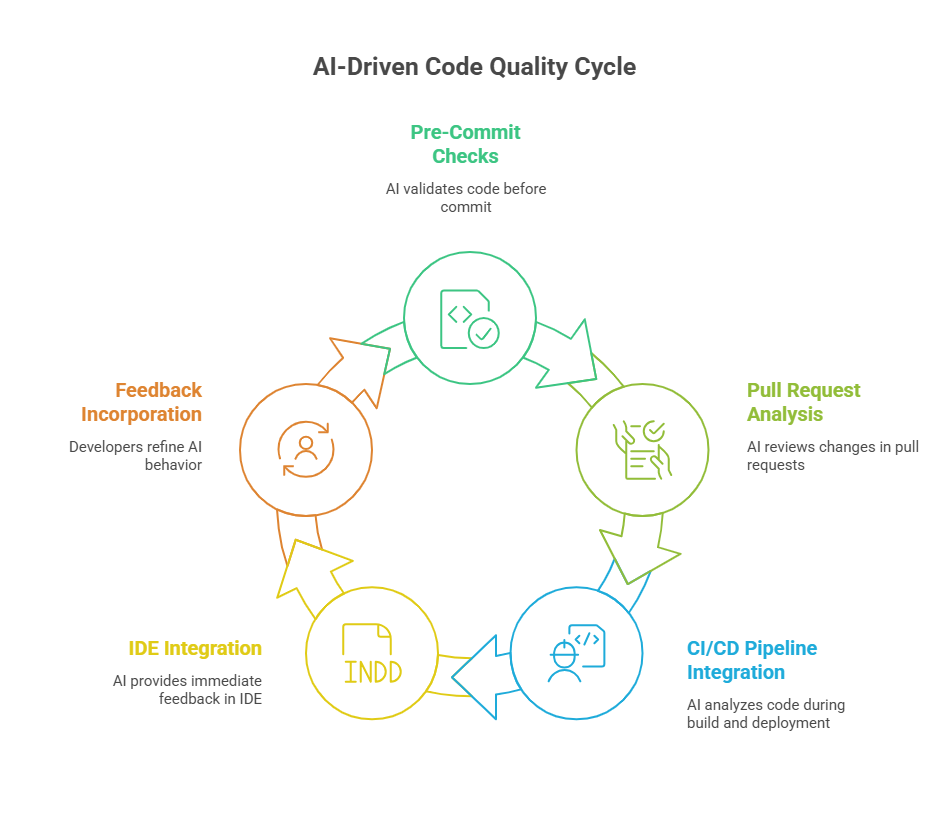

Integrating AI Agents into Development Workflows and CI/CD Pipelines

Integrating AI agents into development workflows and Continuous Integration/Continuous Deployment (CI/CD) pipelines is essential for automating code quality checks and ensuring consistent standards throughout the software lifecycle. This integration enables real-time feedback, faster issue detection, and smoother collaboration among development teams.

Key Integration Points

Pre-Commit Hooks

AI agents can be configured to run checks before code is committed to the repository. This early validation helps catch issues before they enter the main codebase, reducing the cost of fixing bugs later.

Pull Request Analysis

When developers submit pull requests, AI agents automatically review the changes, providing comments and suggestions directly within the version control platform. This streamlines the review process and supports maintainers in making informed decisions.

CI/CD Pipeline Stages

Embedding AI agents in CI/CD pipelines allows automated code analysis during build, test, and deployment phases. For example, agents can block deployments if critical issues are detected, ensuring only high-quality code reaches production.
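
The blocking behavior described here can be sketched as a small gate script. The example assumes the agent writes its findings as a JSON list with `severity`, `rule`, `file`, and `line` fields. That report format, the set of blocking severities, and the function name `gate_exit_code` are assumptions, not the interface of any particular tool.

```python
import json

# Severities that should stop the pipeline (an assumed policy).
BLOCKING_SEVERITIES = {"critical", "high"}


def gate_exit_code(report_json, blocking=BLOCKING_SEVERITIES):
    """Return 1 if the report contains blocking findings, otherwise 0."""
    findings = json.loads(report_json)
    blockers = [f for f in findings if f["severity"] in blocking]
    for f in blockers:
        print(f"BLOCKING {f['severity']}: {f['rule']} at {f['file']}:{f['line']}")
    return 1 if blockers else 0


# Hypothetical report produced by an earlier analysis step.
sample_report = json.dumps([
    {"severity": "critical", "rule": "sql-injection", "file": "db.py", "line": 42},
    {"severity": "low", "rule": "unused-import", "file": "app.py", "line": 3},
])

exit_code = gate_exit_code(sample_report)
print("exit code:", exit_code)
# In a real pipeline step, the script would end with sys.exit(exit_code),
# since most CI systems treat a non-zero exit status as a failed stage.
```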

IDE Integration

Integrating AI agents with Integrated Development Environments (IDEs) offers developers immediate feedback as they write code, promoting best practices and reducing errors early in the development cycle.

Best Practices for Integration

Seamless User Experience: AI feedback should be clear, actionable, and non-intrusive to avoid disrupting developer productivity.

Configurable Rules and Thresholds: Teams should customize AI agent settings to align with project-specific standards and tolerance for warnings.

Scalability and Performance: AI agents must operate efficiently to avoid slowing down build and deployment processes.

Continuous Improvement: Incorporate developer feedback to refine AI agent behavior and reduce false positives.

Benefits of Integration

Faster Feedback Loops: Developers receive timely insights, enabling quicker fixes.

Consistent Code Quality: Automated checks enforce coding standards uniformly across teams.

Reduced Manual Effort: AI agents handle routine reviews, freeing human reviewers to focus on complex issues.

Improved Collaboration: Shared AI-generated reports foster transparency and communication.

Handling False Positives and Improving Review Accuracy

One of the key challenges in using AI agents for automated code reviews is managing false positives—instances where the AI flags code as problematic even though it is acceptable or intentional. Excessive false positives can frustrate developers, reduce trust in the AI system, and slow down the development process. Therefore, improving review accuracy and minimizing false alarms is critical for effective AI-assisted code quality management.

Causes of False Positives

Overly Strict Rules: Rigid or generic rules that do not consider project-specific context.

Limited Context Understanding: AI agents may lack full understanding of business logic or domain-specific conventions.

Incomplete Training Data: Machine learning models trained on insufficient or biased datasets may misclassify code.

Dynamic and Evolving Codebases: Frequent changes and diverse coding styles can confuse static analysis tools.

Strategies to Reduce False Positives

Context-Aware Analysis

Incorporate project-specific configurations, coding standards, and historical data to tailor AI agent behavior to the unique environment.

Feedback Loops and Human-in-the-Loop

Allow developers to provide feedback on AI suggestions, enabling the system to learn from corrections and improve over time.
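
One simple way to picture such a feedback loop is a tracker that mutes rules developers almost always dismiss. The sketch below is a deliberately basic heuristic, not a learning algorithm. The class name `FeedbackTracker`, the minimum sample size, and the 80% dismissal cutoff are assumptions.

```python
from collections import Counter


class FeedbackTracker:
    """Track how often developers dismiss each rule's findings."""

    def __init__(self, min_samples=5, max_dismiss_rate=0.8):
        self.shown = Counter()
        self.dismissed = Counter()
        self.min_samples = min_samples
        self.max_dismiss_rate = max_dismiss_rate

    def record(self, rule, accepted):
        """Record one developer response to a finding from the given rule."""
        self.shown[rule] += 1
        if not accepted:
            self.dismissed[rule] += 1

    def is_muted(self, rule):
        """Mute a rule once enough feedback shows it is mostly dismissed."""
        shown = self.shown[rule]
        if shown < self.min_samples:
            return False  # not enough evidence yet
        return self.dismissed[rule] / shown > self.max_dismiss_rate


tracker = FeedbackTracker()
for _ in range(5):
    tracker.record("line-too-long", accepted=False)
for accepted in (True, True, False, True, True):
    tracker.record("sql-injection", accepted=accepted)

print(tracker.is_muted("line-too-long"))  # True
print(tracker.is_muted("sql-injection"))  # False
```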

Threshold Tuning

Adjust sensitivity levels for warnings and errors to balance between catching real issues and avoiding noise.
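
The trade-off behind threshold tuning can be shown with a few lines of arithmetic. The sketch below assumes each past finding has a confidence score and a label recording whether developers confirmed it as a real issue. The data is invented for illustration. Raising the threshold improves precision (less noise) at the cost of recall (more missed issues).

```python
def precision_recall(scored_findings, threshold):
    """Compute precision and recall when only findings >= threshold are reported."""
    reported = [is_real for score, is_real in scored_findings if score >= threshold]
    true_positives = sum(reported)
    total_real = sum(is_real for _, is_real in scored_findings)
    precision = true_positives / len(reported) if reported else 1.0
    recall = true_positives / total_real if total_real else 1.0
    return precision, recall


# Hypothetical review history: (confidence score, confirmed as a real issue?)
history = [
    (0.95, True), (0.90, True), (0.80, False), (0.75, True),
    (0.60, False), (0.55, False), (0.40, True), (0.30, False),
]

for threshold in (0.5, 0.7, 0.85):
    precision, recall = precision_recall(history, threshold)
    print(f"threshold={threshold}: precision={precision:.2f}, recall={recall:.2f}")
```

With this sample data, a threshold of 0.5 gives precision 0.50 and recall 0.75, 0.7 gives 0.75 and 0.75, and 0.85 gives 1.00 and 0.50.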

Hybrid Approaches

Combine rule-based detection with machine learning models to leverage the strengths of both methods.

Incremental Deployment

Gradually introduce AI agents and monitor their performance, refining rules and models based on real-world usage.

Explainability and Transparency

Provide clear explanations for flagged issues so developers can understand the rationale and decide whether to act.

Benefits of Improving Accuracy

Increased Developer Trust: Reliable AI feedback encourages adoption and reliance on automated reviews.

Higher Productivity: Developers spend less time dismissing false alarms and more time addressing genuine issues.

Better Code Quality: Focused attention on real problems leads to cleaner, more maintainable code.

Security Considerations in Automated Code Reviews

Automated code reviews powered by AI agents play a crucial role in identifying security vulnerabilities early in the development process. However, integrating AI into code review workflows also introduces specific security considerations that must be addressed to protect both the codebase and the development environment.

Key Security Considerations

Data Privacy and Confidentiality

AI agents often analyze sensitive source code, which may contain proprietary algorithms or confidential information. Ensuring that code data is securely handled, stored, and transmitted is essential to prevent leaks.

Access Control and Authentication

Only authorized AI agents and users should have access to code repositories and review results. Strong authentication and role-based access control help safeguard against unauthorized access.

Integrity of AI Models

AI models used for code analysis must be protected from tampering or poisoning attacks that could degrade their effectiveness or cause them to miss vulnerabilities.

Secure Integration Points

When AI agents integrate with CI/CD pipelines, version control systems, or communication tools, secure APIs and encrypted channels should be used to prevent interception or manipulation.

Handling Malicious Code

AI agents should be designed to safely analyze potentially malicious or obfuscated code without executing it, avoiding risks of infection or compromise.

Auditability and Logging

Maintaining detailed logs of AI agent activities and review outcomes supports traceability and forensic analysis in case of security incidents.
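
As one possible shape for such a log entry, the sketch below builds a JSON audit record that identifies the reviewed code by its SHA-256 hash rather than storing the code itself, which also supports the confidentiality point above. The field names, the `audit_record` helper, and the agent identifier are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(agent_id, file_path, code, findings):
    """Build an audit entry that references the code by hash, not by content."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "agent_id": agent_id,
        "file": file_path,
        "code_sha256": hashlib.sha256(code.encode("utf-8")).hexdigest(),
        "finding_rules": sorted(f["rule"] for f in findings),
    }


reviewed_code = "def run(user_input):\n    eval(user_input)\n"
record = audit_record(
    agent_id="review-agent-1",  # hypothetical identifier
    file_path="handlers.py",
    code=reviewed_code,
    findings=[{"rule": "insecure-eval", "severity": "critical"}],
)

# One JSON object per line is easy to ship to a log store and search later.
print(json.dumps(record))
```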

Example: Python Code for Simple Security Check in Code Review

Below is a Python example demonstrating a basic AI agent function that scans code for the use of insecure functions (e.g., eval) and generates a security warning.

```python
import re


def check_insecure_functions(code_snippet):
    """Return a warning for each risky call found in the given source text."""
    insecure_functions = ['eval', 'exec', 'pickle.loads']
    warnings = []
    for func in insecure_functions:
        # Match the name as a whole word followed by '(' so that identifiers
        # such as 'evaluate' or 'executor' are not flagged by mistake.
        pattern = r'\b' + re.escape(func) + r'\s*\('
        if re.search(pattern, code_snippet):
            warnings.append(
                f"Security Warning: Use of insecure function '{func}' detected. "
                "Consider safer alternatives to avoid code injection risks."
            )
    return warnings


# Example usage
sample_code = """
def run_user_code(user_input):
    eval(user_input)
"""

security_warnings = check_insecure_functions(sample_code)
for warning in security_warnings:
    print(warning)
```

Execution Result:

Security Warning: Use of insecure function 'eval' detected. Consider safer alternatives to avoid code injection risks.

This simple check can be expanded with more comprehensive security rules and integrated into AI agents to enhance automated detection of vulnerabilities during code reviews.

Case Studies: Successful Implementations of AI Code Guardians

AI-powered code guardians—automated agents that assist in code review and quality assurance—have been successfully implemented across various organizations and projects. These case studies highlight how AI agents improve code quality, accelerate development, and enhance security.

Case Study 1: Large E-commerce Platform

A major e-commerce company integrated AI agents into their CI/CD pipeline to automatically review pull requests. The AI agents detected common bugs, security vulnerabilities, and style violations before code was merged. This led to a 30% reduction in post-release defects and faster code review cycles, enabling developers to focus on complex features.

Case Study 2: Open Source Project

An open source project adopted AI agents to assist volunteer maintainers with reviewing numerous pull requests. The agents provided actionable recommendations and flagged potential issues, improving review consistency and reducing the backlog. Contributors received immediate feedback, increasing code quality and community engagement.

Case Study 3: Financial Services Firm

A financial institution deployed AI agents specialized in security analysis to scan code for compliance with regulatory standards. The agents identified risky patterns such as improper data handling and insecure function usage. This proactive approach helped the firm avoid costly security breaches and ensured audit readiness.

Case Study 4: Startup Developing AI Applications

A startup building AI-driven applications used AI agents integrated with their IDEs to provide real-time suggestions and detect errors during development. This integration improved developer productivity and reduced debugging time, accelerating product delivery.

Key Success Factors

Customization: Tailoring AI agents to project-specific coding standards and security policies.

Developer Involvement: Encouraging feedback to refine AI recommendations and reduce false positives.

Seamless Integration: Embedding AI agents into existing workflows and tools to minimize disruption.

Continuous Monitoring: Tracking AI agent performance and impact on code quality over time.

Future Directions: Enhancing AI Agents for Smarter Code Quality Management

The future of AI agents in code quality management is poised for significant advancements, driven by ongoing research, technological innovations, and evolving software development practices. These enhancements aim to make AI agents smarter, more adaptive, and better integrated into development workflows, ultimately improving software reliability and developer productivity.

One promising direction is the development of context-aware AI agents that deeply understand the specific codebase, project architecture, and team coding standards. By leveraging repository-wide context, these agents can provide more accurate and relevant recommendations, reducing false positives and increasing developer trust.

Explainable AI (XAI) is another critical area of focus. Future AI agents will offer transparent reasoning behind their suggestions, enabling developers to understand why certain code changes are flagged or recommended. This transparency fosters better collaboration between humans and AI, facilitating informed decision-making.

Advancements in multi-agent systems will enable multiple AI agents to collaborate, each specializing in different aspects of code quality such as security, performance, and maintainability. This distributed intelligence can provide comprehensive code analysis and holistic quality assurance.

Integration with continuous integration/continuous deployment (CI/CD) pipelines will become more seamless, allowing AI agents to operate in real-time, automatically adapting to code changes and deployment environments. This will support proactive quality management and faster release cycles.

The incorporation of federated learning techniques will allow AI agents to learn from diverse codebases across organizations without compromising data privacy, enhancing their generalization capabilities.

Finally, the rise of AI-driven developer experience platforms will combine code quality management with personalized learning, mentoring, and productivity tools, creating an ecosystem where AI agents support developers throughout their careers.
