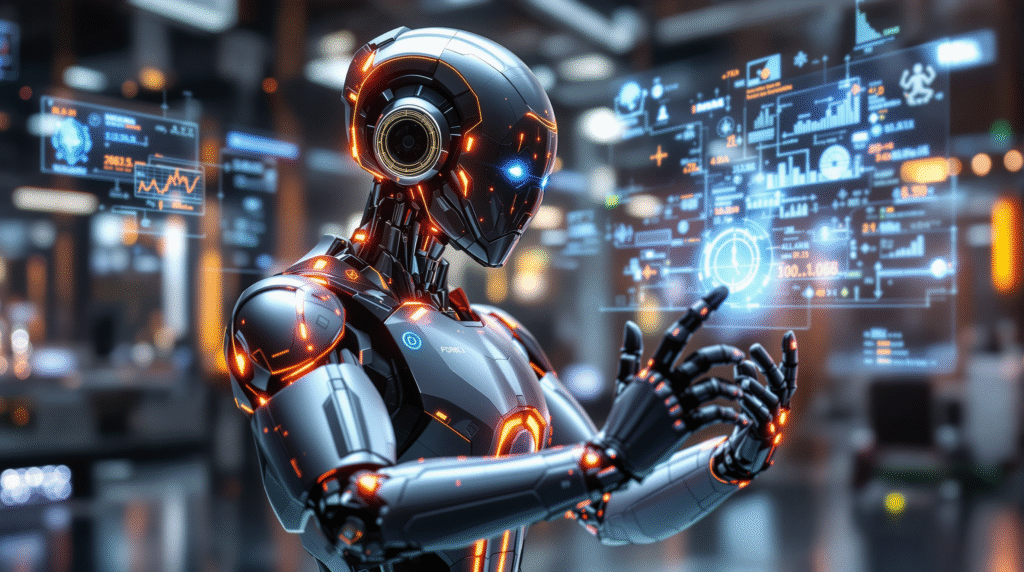

Introduction: The Role of AI Agents in Modern Software Testing

In today’s fast-paced software development landscape, ensuring high-quality, reliable software is more critical than ever. Traditional testing and debugging methods, while effective, often struggle to keep up with the increasing complexity and scale of modern applications. This is where AI agents come into play, revolutionizing the way software testing and debugging are performed.

AI agents are autonomous software entities capable of performing tasks such as analyzing code, generating test cases, detecting bugs, and assisting developers in debugging processes. Their ability to learn from data, adapt to new scenarios, and operate continuously makes them invaluable in enhancing software quality assurance.

The integration of AI agents into testing workflows offers several advantages. They can automate repetitive and time-consuming tasks, reduce human error, and provide insights that might be difficult for human testers to uncover. For example, AI agents can analyze vast codebases to identify potential vulnerabilities or generate diverse test scenarios that cover edge cases often missed by manual testing.

Moreover, AI agents support continuous testing practices essential for agile and DevOps environments. By running tests automatically with every code change and providing immediate feedback, they help maintain code quality throughout the development lifecycle.

In summary, AI agents are transforming software testing and debugging by making these processes more efficient, intelligent, and scalable. Their role is becoming increasingly vital as software systems grow in complexity, demanding smarter tools to ensure robustness and reliability. This article series will explore the various aspects of AI agents in testing and debugging, highlighting their capabilities, challenges, and future potential.

Fundamentals of AI Agents in Testing and Debugging

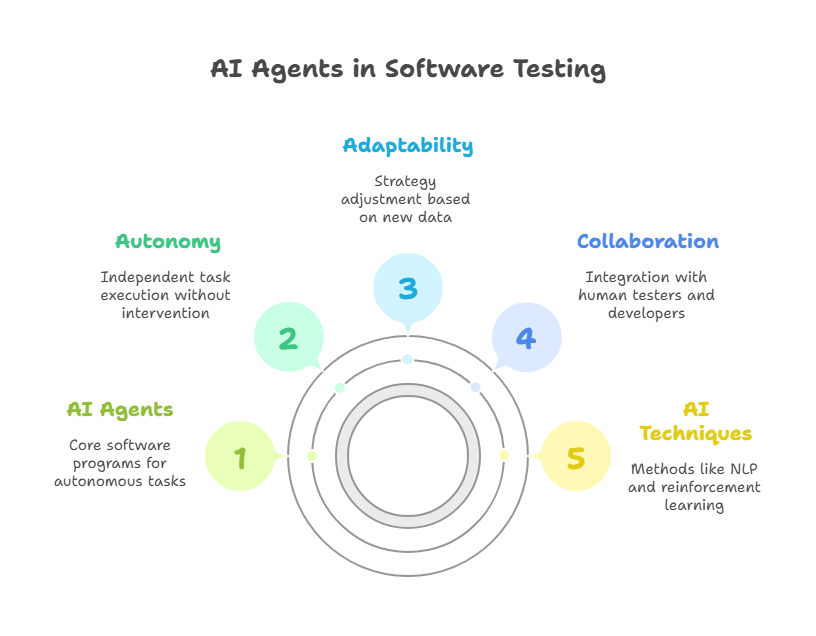

AI agents are specialized software programs designed to perform tasks autonomously by perceiving their environment, reasoning, learning, and acting to achieve specific goals. In the context of software testing and debugging, these agents leverage artificial intelligence techniques to improve the efficiency and effectiveness of quality assurance processes.

At their core, AI agents in testing operate by analyzing source code, execution traces, and test results to identify patterns, anomalies, or potential defects. They use machine learning algorithms to learn from historical data, such as past bugs and test outcomes, enabling them to predict where new issues might arise or which parts of the code require more rigorous testing.
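As a rough illustration of learning from historical data, the sketch below ranks files by a simple risk score built from change frequency and past bug counts. The `FileHistory` fields, the sample data, and the weights are assumptions made for this example; a real agent would more likely learn such weights from project history than hard-code them.

```python
from dataclasses import dataclass


@dataclass
class FileHistory:
    """Hypothetical per-file history an agent might collect from version control."""
    path: str
    recent_commits: int  # how often the file changed recently (churn)
    past_bugs: int       # how many past bug fixes touched the file


def risk_score(history: FileHistory, churn_weight: float = 1.0, bug_weight: float = 2.0) -> float:
    """Combine churn and bug history into one score (weights are illustrative)."""
    return churn_weight * history.recent_commits + bug_weight * history.past_bugs


def rank_by_risk(histories: list[FileHistory]) -> list[str]:
    """Return file paths ordered from highest to lowest estimated risk."""
    ranked = sorted(histories, key=risk_score, reverse=True)
    return [h.path for h in ranked]


histories = [
    FileHistory("auth.py", recent_commits=12, past_bugs=5),
    FileHistory("utils.py", recent_commits=3, past_bugs=0),
    FileHistory("billing.py", recent_commits=4, past_bugs=7),
]
print(rank_by_risk(histories))  # ['auth.py', 'billing.py', 'utils.py']
```

Files at the top of the ranking would be candidates for more rigorous testing.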

Key characteristics of AI agents in this domain include autonomy, adaptability, and collaboration. Autonomy allows agents to perform testing tasks without constant human intervention, such as automatically generating test cases or prioritizing tests based on risk assessment. Adaptability enables them to adjust their strategies as the software evolves, learning from new data to improve accuracy. Collaboration means that agents work with one another, and with human testers and developers, to share insights and coordinate efforts.

Several AI techniques underpin these agents, including natural language processing (NLP) to understand code comments and documentation, reinforcement learning to optimize testing strategies, and anomaly detection to spot unusual behaviors during program execution.

By embedding these capabilities, AI agents help reduce manual workload, accelerate defect detection, and enhance debugging by providing actionable insights. Understanding these fundamentals is essential for effectively leveraging AI agents to improve software quality and streamline testing workflows.

Types of Testing Supported by AI Agents

AI agents have expanded the scope and capabilities of software testing by supporting a wide range of testing types. Their adaptability and intelligence enable them to assist in various testing phases, improving coverage, accuracy, and efficiency. Below are some of the key types of testing where AI agents play a significant role:

Unit Testing

AI agents can automatically generate and execute unit tests for individual components or functions. By analyzing code structure and behavior, they create test cases that cover different input scenarios, including edge cases that might be overlooked by human testers.
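One simple way to picture edge-case generation is boundary-value analysis. The sketch below is a hand-written stand-in for what an agent might automate: it derives inputs around the limits of an integer range and checks an invariant on a function under test. `clamp_percentage` and the 0..100 range are hypothetical and exist only for illustration.

```python
def boundary_values(low: int, high: int) -> list[int]:
    """Return classic boundary-value inputs for an inclusive integer range."""
    candidates = [low - 1, low, low + 1, (low + high) // 2, high - 1, high, high + 1]
    # Remove duplicates while keeping order (small ranges produce repeats).
    return list(dict.fromkeys(candidates))


def clamp_percentage(value: int) -> int:
    """Hypothetical function under test: clamp a value into 0..100."""
    return max(0, min(100, value))


def run_generated_tests() -> list[tuple[int, int]]:
    """Run the function under test on each generated input and check an invariant."""
    results = []
    for value in boundary_values(0, 100):
        output = clamp_percentage(value)
        assert 0 <= output <= 100, f"out of range for input {value}"
        results.append((value, output))
    return results


print(boundary_values(0, 100))  # [-1, 0, 1, 50, 99, 100, 101]
print(run_generated_tests())
```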

Integration Testing

In integration testing, AI agents help verify the interactions between different modules or services. They can simulate complex workflows and detect interface mismatches or data inconsistencies, ensuring that integrated components work together as expected.

Regression Testing

AI agents excel at regression testing by identifying which parts of the software are affected by recent changes and prioritizing test execution accordingly. This targeted approach reduces testing time while maintaining confidence that new updates do not introduce defects.
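The core of change-based test selection can be sketched in a few lines. The example assumes a coverage map from test names to the source files they exercise is already available; the map contents and file names here are made up for illustration.

```python
def select_affected_tests(coverage_map: dict[str, set[str]], changed_files: set[str]) -> list[str]:
    """Return tests whose covered files overlap the changed files, sorted by name."""
    return sorted(
        test for test, files in coverage_map.items() if files & changed_files
    )


# Hypothetical mapping from test name to the source files it exercises.
coverage_map = {
    "test_login": {"auth.py", "session.py"},
    "test_payment": {"billing.py", "session.py"},
    "test_profile_update": {"profile.py"},
}

print(select_affected_tests(coverage_map, {"session.py"}))  # ['test_login', 'test_payment']
```

Only the two tests that touch `session.py` are selected, so `test_profile_update` can be skipped for this change.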

Performance Testing

By monitoring system behavior under various loads, AI agents can detect performance bottlenecks and predict potential scalability issues. They can also generate realistic usage patterns to simulate user behavior during stress tests.

Security Testing

AI agents assist in identifying vulnerabilities by analyzing code for common security flaws and simulating attack scenarios. Their ability to learn from known exploits helps in proactively detecting risks before deployment.

Usability Testing

Through natural language processing and user interaction analysis, AI agents can evaluate user interfaces and workflows, providing feedback on potential usability issues and suggesting improvements.

Exploratory Testing

AI agents can autonomously explore software applications, dynamically generating test cases based on observed behavior. This approach helps uncover unexpected bugs that scripted tests might miss.
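A minimal sketch of autonomous exploration is a random walk over a model of the application. The state model below (`APP_MODEL`) is hypothetical; a real agent would discover states and actions by interacting with the running application, and would usually bias its choices toward unexplored areas rather than pick uniformly at random.

```python
import random

# Hypothetical app model: state -> {action: next_state}.
APP_MODEL = {
    "home": {"open_login": "login", "open_help": "help"},
    "login": {"submit": "dashboard", "cancel": "home"},
    "help": {"back": "home"},
    "dashboard": {"logout": "home", "open_settings": "settings"},
    "settings": {"back": "dashboard"},
}


def explore(model, start, steps, seed):
    """Random-walk the model, recording the action path and the states visited."""
    rng = random.Random(seed)  # seeded so an interesting path can be replayed
    state, path, visited = start, [], {start}
    for _ in range(steps):
        action = rng.choice(sorted(model[state]))
        state = model[state][action]
        path.append(action)
        visited.add(state)
    return path, visited


path, visited = explore(APP_MODEL, "home", steps=20, seed=42)
print(f"Visited {len(visited)} of {len(APP_MODEL)} states in {len(path)} actions")
```

Seeding the random generator keeps each exploration reproducible, which matters when a walk uncovers a failure that has to be replayed.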

Automated Test Case Generation Using AI Agents

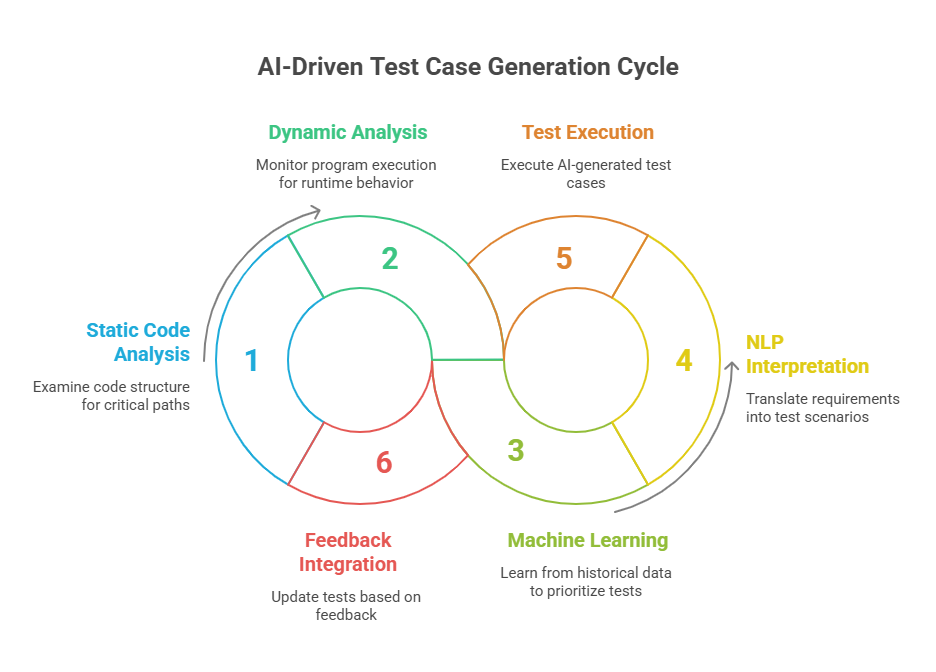

One of the most impactful applications of AI agents in software testing is the automated generation of test cases. Creating effective test cases manually is often time-consuming and prone to human error, especially for large and complex codebases. AI agents address this challenge by leveraging intelligent algorithms to design, optimize, and execute test scenarios with minimal human intervention.

AI agents use various techniques to generate test cases. Static code analysis allows them to examine the source code structure, control flow, and data dependencies to identify critical paths and edge cases. Dynamic analysis, on the other hand, involves monitoring program execution to understand runtime behavior and generate tests that cover real-world usage patterns.
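To make the static-analysis idea concrete, the sketch below uses Python's standard `ast` module to count branching constructs per function, a rough signal of which functions have more paths to cover. The sample source and the choice of node types are assumptions for illustration, not a full complexity metric.

```python
import ast

SOURCE = '''
def classify(n):
    if n < 0:
        return "negative"
    elif n == 0:
        return "zero"
    for d in range(2, n):
        if n % d == 0:
            return "composite"
    return "other"

def identity(x):
    return x
'''

# Node types treated as branch points in this sketch.
BRANCH_NODES = (ast.If, ast.For, ast.While, ast.Try, ast.BoolOp)


def branch_counts(source: str) -> dict[str, int]:
    """Count branching constructs per function as a rough complexity signal."""
    counts = {}
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.FunctionDef):
            # Note: nested functions are also counted toward their parent.
            counts[node.name] = sum(
                isinstance(child, BRANCH_NODES) for child in ast.walk(node)
            )
    return counts


print(branch_counts(SOURCE))  # {'classify': 4, 'identity': 0}
```

A test generator could use such counts to spend more of its budget on `classify` than on `identity`.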

Machine learning models enable AI agents to learn from historical test data, bug reports, and user feedback. This learning helps prioritize test cases that are more likely to detect defects, improving testing efficiency. For example, reinforcement learning can be applied to iteratively refine test generation strategies based on the success of previous tests.

Natural language processing (NLP) techniques allow AI agents to interpret requirements, specifications, and documentation written in human language. This capability helps in translating high-level functional descriptions into concrete test scenarios, bridging the gap between business needs and technical testing.

Automated test case generation by AI agents offers several benefits: it accelerates the testing process, increases test coverage, reduces manual effort, and helps uncover subtle bugs that might be missed by traditional methods. Additionally, AI-generated tests can be continuously updated to reflect changes in the codebase, supporting agile development and continuous integration practices.

AI Agents in Debugging: Enhancing Error Detection and Resolution

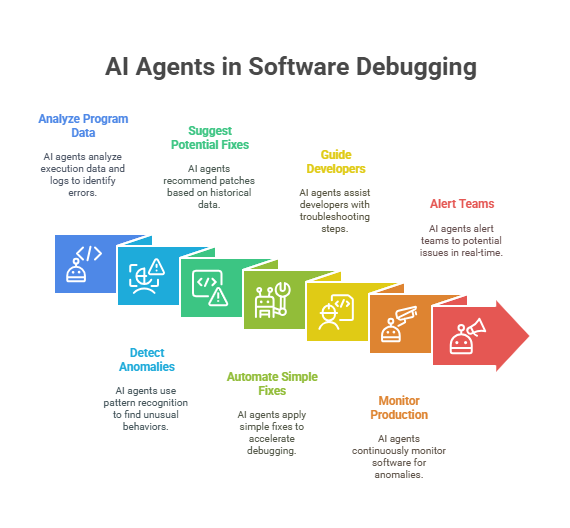

Debugging is a critical phase in software development, often consuming significant time and resources. AI agents are transforming this process by providing intelligent support for detecting, diagnosing, and resolving errors more efficiently.

AI agents assist in debugging by analyzing program execution data, logs, and error reports to identify the root causes of defects. Using pattern recognition and anomaly detection techniques, they can pinpoint unusual behaviors or code segments likely responsible for failures. This reduces the manual effort required to trace bugs through complex codebases.

Moreover, AI agents can suggest potential fixes by learning from historical bug fixes and code changes. By recommending patches or code modifications, they help developers quickly address issues while maintaining code quality. Some agents even automate the application of simple fixes, accelerating the debugging cycle.

Interactive debugging assistants powered by AI can guide developers through the troubleshooting process, answering questions, explaining error contexts, and proposing diagnostic steps. This collaboration enhances developer productivity and reduces the cognitive load associated with complex debugging tasks.

Additionally, AI agents support continuous monitoring of software in production environments, detecting anomalies in real-time and alerting teams before issues escalate. This proactive approach minimizes downtime and improves system reliability.
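As a simplified picture of anomaly detection on production metrics, the sketch below flags samples that lie far from the mean in units of standard deviation (a z-score check). The latency values and the threshold of 2.0 are assumptions for this example; production systems typically use rolling windows and more robust statistics.

```python
from statistics import mean, stdev


def find_anomalies(samples: list[float], threshold: float = 2.0) -> list[int]:
    """Return indexes of samples more than `threshold` standard deviations from the mean."""
    if len(samples) < 2:
        return []  # not enough data to estimate spread
    mu, sigma = mean(samples), stdev(samples)
    if sigma == 0:
        return []  # all samples identical, nothing stands out
    return [i for i, x in enumerate(samples) if abs(x - mu) / sigma > threshold]


# Hypothetical request latencies in milliseconds; one obvious spike.
latencies_ms = [102, 98, 101, 99, 100, 97, 103, 100, 450, 101]
print(find_anomalies(latencies_ms))  # [8]
```

The threshold is kept low on purpose: with only ten samples, a single outlier inflates the standard deviation so much that its own z-score cannot exceed roughly 2.85.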

In summary, AI agents enhance debugging by automating error detection, providing intelligent diagnostics, and facilitating faster resolution. Their integration into development workflows leads to more robust software and a smoother development experience.

Debugging Assistance: How AI Agents Help Developers

Debugging is one of the most time-consuming and critical phases in software development. AI agents are increasingly becoming valuable allies for developers by automating and enhancing the debugging process. These intelligent agents analyze code, runtime behavior, and error logs to detect bugs, suggest fixes, and even predict potential issues before they manifest.

AI agents help developers by identifying patterns in code that commonly lead to errors, such as null pointer exceptions, memory leaks, or race conditions. They can automatically highlight suspicious code segments and provide explanations or recommendations based on learned knowledge from vast codebases and historical bug data.

Moreover, AI-powered debugging assistants can interactively guide developers through complex troubleshooting steps, reducing cognitive load and accelerating problem resolution. In continuous integration environments, these agents monitor builds and tests, quickly pinpointing the root causes of failures.

By integrating natural language processing, AI agents can also interpret error messages and stack traces, translating them into actionable insights. This capability helps both novice and experienced developers understand issues faster and apply effective solutions.
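Before any language model gets involved, a stack trace has to be reduced to its essentials. The sketch below uses the standard `traceback` module to pull out the innermost frame and the error message, which is the kind of structured summary an assistant could then explain. The `parse_port` function is a hypothetical failing function used only to produce a trace.

```python
import traceback


def summarize_exception(exc: BaseException) -> dict:
    """Extract the failing frame and a one-line message from a caught exception."""
    frames = traceback.extract_tb(exc.__traceback__)
    last = frames[-1]  # innermost frame, where the error was raised
    return {
        "error_type": type(exc).__name__,
        "message": str(exc),
        "function": last.name,
        "line": last.lineno,
    }


def parse_port(raw: str) -> int:
    """Hypothetical function that fails on non-numeric input."""
    return int(raw)


try:
    parse_port("eighty")
except ValueError as exc:
    summary = summarize_exception(exc)
    print(f"{summary['error_type']} in {summary['function']}(): {summary['message']}")
```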

Example Python Code: Simple AI-Inspired Error Handling

Here is a basic Python example illustrating how an AI agent might suggest handling a common error—division by zero—gracefully to prevent crashes and improve robustness:

```python
def safe_divide(a, b):
    try:
        return a / b
    except ZeroDivisionError:
        # AI agent suggests handling division by zero gracefully
        print("Warning: Division by zero detected. Returning None.")
        return None


# Example usage
result = safe_divide(10, 0)
print("Result:", result)
```

This simple function demonstrates a pattern where the AI agent encourages proactive error handling, improving software reliability by preventing unhandled exceptions.

Integration of AI Agents with Testing Frameworks and Tools

AI agents are transforming software testing by seamlessly integrating with existing testing frameworks and tools, enhancing automation, accuracy, and efficiency. This integration allows AI agents to assist developers and QA teams in generating test cases, executing tests, analyzing results, and identifying defects more intelligently.

One key benefit of integrating AI agents with testing frameworks is the automation of test generation. AI can analyze code changes and automatically create relevant unit, integration, or end-to-end tests, reducing manual effort and increasing test coverage. For example, AI agents can use techniques like code analysis, natural language processing, and machine learning to understand application behavior and generate meaningful test scenarios.

AI agents also enhance test execution by prioritizing tests based on code changes or historical failure data, optimizing testing time and resources. They can detect flaky tests, suggest test suite optimizations, and even predict potential failure points before running tests.
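Flaky-test detection can be approximated with a simple rule: a test that both passes and fails on the same commit is suspicious, because the code did not change between runs. The sketch below applies that rule to a made-up CI history; the tuple layout and sample data are assumptions for illustration.

```python
from collections import defaultdict


def find_flaky_tests(runs: list[tuple[str, str, bool]]) -> list[str]:
    """Return tests that both passed and failed on the same commit.

    Each run is a (test_name, commit_id, passed) tuple.
    """
    outcomes = defaultdict(set)
    for test_name, commit_id, passed in runs:
        outcomes[(test_name, commit_id)].add(passed)
    flaky = {test for (test, _), results in outcomes.items() if len(results) == 2}
    return sorted(flaky)


# Hypothetical CI history.
runs = [
    ("test_login", "abc123", True),
    ("test_login", "abc123", False),    # same commit, different outcome
    ("test_payment", "abc123", True),
    ("test_payment", "def456", False),  # different commit: possible regression, not flaky
    ("test_logout", "def456", True),
]
print(find_flaky_tests(runs))  # ['test_login']
```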

Integration with popular testing tools such as pytest, JUnit, Selenium, or Jenkins enables AI agents to fit naturally into existing CI/CD pipelines. This allows continuous monitoring and real-time feedback on software quality, accelerating the development lifecycle.

Furthermore, AI agents can analyze test results and logs to provide actionable insights, such as root cause analysis or recommendations for fixing bugs. This reduces the time developers spend interpreting test failures and improves overall software reliability.

Example Python Code: AI Agent Simulating Test Prioritization with pytest

```python
import pytest

# Simulated test cases with associated priority scores (higher means more important)
test_cases = {
    "test_login": 0.9,
    "test_payment": 0.8,
    "test_profile_update": 0.5,
    "test_logout": 0.3,
}


def prioritized_tests():
    # AI agent prioritizes tests based on scores (highest first)
    return sorted(test_cases.items(), key=lambda x: x[1], reverse=True)


@pytest.mark.parametrize("test_name,priority", prioritized_tests())
def test_example(test_name, priority):
    print(f"Running {test_name} with priority {priority}")
    # Here would be the actual test logic
    assert True


if __name__ == "__main__":
    # Run only this file; -s shows the print output
    pytest.main([__file__, "-s"])
```

This example shows how an AI agent might prioritize test execution based on importance scores, helping optimize testing efforts within a pytest framework.

Challenges and Limitations of AI Agents in Testing

While AI agents bring significant advantages to software testing, their adoption also comes with several challenges and limitations that developers and organizations need to consider.

1. Data Quality and Availability:

AI agents rely heavily on large volumes of high-quality data to learn and make accurate predictions. In many projects, especially new or small-scale ones, there may be insufficient historical test data, bug reports, or code changes to train effective AI models.

2. False Positives and Negatives:

AI-driven testing tools can sometimes generate false positives (flagging non-issues as bugs) or false negatives (missing actual defects). This can lead to wasted developer time or undetected critical errors, reducing trust in the AI system.

3. Complexity of Test Scenarios:

Some complex or highly dynamic application behaviors are difficult for AI agents to model accurately. For example, UI tests involving unpredictable user interactions or systems with frequent changes may challenge AI’s ability to generate reliable tests.

4. Integration Overhead:

Integrating AI agents into existing testing frameworks and CI/CD pipelines can require significant effort, including adapting workflows, training teams, and maintaining AI models. This overhead may slow down adoption.

5. Explainability and Trust:

AI agents often operate as black boxes, making it hard for developers to understand why certain tests were generated or why specific defects were flagged. Lack of transparency can hinder trust and acceptance.

6. Maintenance and Model Drift:

AI models need continuous retraining and updating to remain effective as the codebase evolves. Without proper maintenance, model performance can degrade over time, leading to inaccurate testing outcomes.

7. Ethical and Security Concerns:

Automated testing agents may inadvertently expose sensitive data or introduce security risks if not properly managed. Ensuring compliance with privacy and security standards is essential.
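The false positive and false negative trade-off from point 2 is usually tracked with precision and recall. The sketch below computes both for a set of flagged locations against the bugs later confirmed by developers; the location strings are invented for illustration.

```python
def precision_recall(flagged: set[str], actual_bugs: set[str]) -> tuple[float, float]:
    """Compute precision and recall of an agent's bug reports.

    precision: share of flagged items that were real bugs (penalizes false positives)
    recall:    share of real bugs that were flagged (penalizes false negatives)
    """
    true_positives = len(flagged & actual_bugs)
    precision = true_positives / len(flagged) if flagged else 0.0
    recall = true_positives / len(actual_bugs) if actual_bugs else 0.0
    return precision, recall


# Hypothetical results from one analysis run.
flagged = {"auth.py:42", "billing.py:10", "utils.py:7", "cart.py:88"}
actual_bugs = {"auth.py:42", "billing.py:10", "search.py:5"}

precision, recall = precision_recall(flagged, actual_bugs)
print(f"precision={precision:.2f} recall={recall:.2f}")  # precision=0.50 recall=0.67
```

Tracking these two numbers over time is also one way to notice the model drift described in point 6.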

Case Studies: Successful Applications of AI Agents in Debugging

AI agents have proven their value in real-world debugging scenarios across various industries and software projects. Below are some notable case studies demonstrating how AI agents enhance debugging efficiency, accuracy, and developer productivity.

1. Microsoft’s IntelliCode in Visual Studio

Microsoft integrated AI-assisted IntelliCode into Visual Studio to provide context-aware code completions and suggestions. It is a coding aid rather than a debugger, but suggestions ranked by the surrounding code context can steer developers away from API misuse that would otherwise surface later as bugs.

2. Facebook’s Sapienz for Automated Bug Detection

Facebook deployed Sapienz, an automated testing tool that originated in research at University College London, to test its mobile apps. Sapienz uses search-based (evolutionary) test generation to find short input sequences that crash an app, enabling faster identification and resolution of defects in Facebook’s Android applications.

3. Machine Learning for Code Analysis at Google

Teams at Google, including DeepMind, have explored models trained on large volumes of code. One aim of this kind of work is to detect subtle bugs that traditional static analysis tools might miss, helping developers pinpoint problematic code sections and consider corrective actions.

4. Uber’s Michelangelo Platform

Michelangelo is Uber’s internal machine learning platform for building, deploying, and monitoring models at scale. Models of this kind can be applied to production telemetry to detect anomalies and raise real-time alerts, which helps teams maintain high service reliability despite complex distributed architectures.

5. Open Source Projects Using AI Debugging Bots

Several open source projects have integrated AI-powered bots that comment on pull requests with potential bug warnings or code smells. These bots assist maintainers by automating initial code reviews and highlighting areas needing attention, improving code quality and reducing manual effort.

Future Trends: Evolving Capabilities of AI Agents in Software Quality Assurance

The role of AI agents in software quality assurance (QA) is rapidly evolving, driven by advances in artificial intelligence, machine learning, and software engineering practices. Here are some key future trends shaping the capabilities of AI agents in QA:

1. Enhanced Test Automation with Self-Learning Agents

Future AI agents will increasingly use self-learning techniques to automatically generate, execute, and adapt test cases based on continuous feedback from code changes and runtime behavior. This will reduce manual intervention and improve test coverage dynamically.

2. Predictive Quality Analytics

AI agents will leverage predictive analytics to forecast potential defects, performance bottlenecks, or security vulnerabilities before they occur. By analyzing historical data and development patterns, these agents will enable proactive quality management.

3. Integration of Explainable AI (XAI)

To build trust and transparency, AI agents will incorporate explainable AI methods that clarify why certain tests are prioritized or why specific bugs are flagged. This will help developers understand AI decisions and collaborate more effectively.

4. AI-Driven Continuous Testing in DevOps Pipelines

AI agents will become integral to continuous testing frameworks, automatically adjusting test suites and deployment strategies in real-time to maintain high software quality in fast-paced DevOps environments.

5. Cross-Platform and Cross-Domain Testing

Future AI agents will support testing across diverse platforms (web, mobile, IoT) and domains (finance, healthcare, automotive), adapting to domain-specific requirements and regulations.

6. Collaborative AI Agents for Multi-Team Environments

AI agents will facilitate collaboration among distributed development and QA teams by sharing insights, coordinating testing efforts, and harmonizing quality standards across projects.

7. Incorporation of Natural Language Processing (NLP)

NLP capabilities will enable AI agents to understand and generate human-readable test cases, interpret bug reports, and interact with developers through conversational interfaces, making QA more accessible.

8. Security-Focused AI Testing Agents

With growing cybersecurity threats, AI agents specialized in security testing will automatically detect vulnerabilities, simulate attacks, and recommend mitigation strategies.

Tools and Frameworks for Implementing AI Agents in Software Testing

Implementing AI agents in software testing requires leveraging specialized tools and frameworks that facilitate automation, machine learning, and integration with existing development workflows. Below are some popular and emerging tools that support AI-driven testing agents:

1. Test Automation Frameworks with AI Capabilities

Testim: Uses AI to create, execute, and maintain automated tests with self-healing capabilities that adapt to UI changes.

Mabl: An intelligent test automation platform that leverages machine learning to improve test stability and provide actionable insights.

Applitools: Focuses on visual AI testing, detecting UI anomalies using computer vision techniques.

2. Machine Learning Libraries and Platforms

TensorFlow and PyTorch: Widely used ML frameworks for building custom AI models that can analyze code, predict defects, or generate test cases.

scikit-learn: Provides tools for data mining and analysis, useful for building predictive models in testing scenarios.

3. AI-Powered Code Analysis Tools

DeepCode (now part of Snyk): Uses AI to analyze code for bugs and vulnerabilities, integrating with CI/CD pipelines.

SonarQube with AI Plugins: Enhances static code analysis with AI-driven insights.

4. Natural Language Processing (NLP) Tools

spaCy and NLTK: Useful for parsing and understanding bug reports, test documentation, and generating human-readable test cases.

OpenAI GPT APIs: Can be integrated to generate or explain test cases and assist in conversational QA bots.

5. Continuous Integration/Continuous Deployment (CI/CD) Tools

Jenkins, GitLab CI, CircleCI: These platforms can orchestrate AI-driven testing workflows, triggering AI agents during build and deployment stages.

6. Specialized AI Testing Platforms

Functionize: Combines AI and cloud-based testing to automate complex test scenarios with minimal scripting.

ReTest: Uses AI to detect UI changes and automatically update tests accordingly.
