Introduction: The Rise of Autonomous AI Agents

Autonomous AI agents represent a transformative shift in how machines interact with the world and perform tasks independently. Unlike traditional software programs that require explicit instructions for every action, autonomous agents possess the ability to perceive their environment, make decisions, and act without constant human intervention. This autonomy enables them to handle complex, dynamic, and uncertain situations, making them invaluable across a wide range of applications.

The rise of autonomous AI agents is driven by advances in artificial intelligence, machine learning, robotics, and sensor technologies. These agents are increasingly embedded in everyday systems—from self-driving cars navigating busy streets to intelligent virtual assistants managing our schedules. Their ability to learn from experience and adapt to new circumstances allows them to improve over time, enhancing efficiency and effectiveness.

In business, autonomous agents are revolutionizing processes such as supply chain management, customer service automation, and financial trading. In healthcare, they assist in diagnostics, personalized treatment plans, and patient monitoring. The growing deployment of autonomous agents reflects a broader trend toward intelligent automation, where machines augment human capabilities and take on tasks that were previously too complex or time-consuming.

However, the rise of autonomous AI agents also raises important questions about ethics, safety, and control. Ensuring that these agents act reliably and align with human values is a critical area of ongoing research and development.

In this context, understanding the foundations, capabilities, and challenges of autonomous AI agents is essential for harnessing their full potential. This article series will explore these aspects, bridging the gap between theoretical concepts and practical implementations.

Fundamentals of Autonomous AI Agents

Autonomous AI agents are software entities capable of perceiving their environment, making decisions, and acting independently to achieve specific goals. Unlike traditional programs that follow predefined instructions, autonomous agents exhibit self-directed behavior, adapting to changes and uncertainties in their surroundings.

Key Characteristics

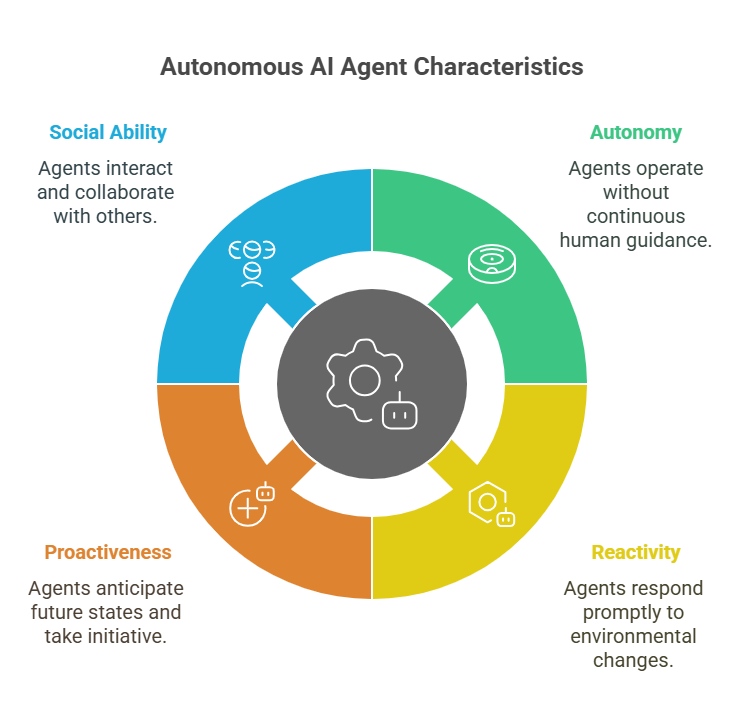

Autonomy: The core feature of these agents is their ability to operate without continuous human guidance. They can set sub-goals, plan actions, and execute tasks on their own.

Reactivity: Autonomous agents perceive changes in their environment and respond promptly to maintain effectiveness.

Proactiveness: Beyond reacting, they anticipate future states and take initiative to achieve objectives.

Social Ability: Many agents interact with other agents or humans, communicating and collaborating to solve complex problems.

Types of Autonomous Agents

Simple Reflex Agents: Act based on current perceptions using condition-action rules, without internal state or learning.

Model-Based Agents: Maintain an internal model of the world to make informed decisions.

Goal-Based Agents: Make decisions aimed at achieving specific goals, often involving planning.

Utility-Based Agents: Evaluate actions based on a utility function to maximize overall satisfaction.

Learning Agents: Improve their performance over time by learning from experience.
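The classic two-square vacuum world shows the difference between the first two categories. This is a minimal illustration, not a reference implementation. The details are assumptions: two squares named A and B, percepts of the form (location, status), and actions Suck, Left, Right and NoOp. The simple reflex agent maps each percept straight to an action. The model-based agent remembers which squares it has seen clean and stops once nothing is left to do.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical two-square world: a percept is (location, status).
Percept = Tuple[str, str]


def simple_reflex_agent(percept: Percept) -> str:
    """Condition-action rules on the current percept only; no memory."""
    location, status = percept
    if status == "Dirty":
        return "Suck"
    return "Right" if location == "A" else "Left"


class ModelBasedVacuumAgent:
    """Keeps an internal model of which squares are known to be clean."""

    def __init__(self) -> None:
        self.known_clean: Dict[str, bool] = {"A": False, "B": False}

    def act(self, percept: Percept) -> str:
        location, status = percept
        # The square is either already clean or will be after sucking.
        self.known_clean[location] = True
        if status == "Dirty":
            return "Suck"
        if all(self.known_clean.values()):
            return "NoOp"  # The model says there is nothing left to clean
        return "Right" if location == "A" else "Left"


def run(agent: Callable[[Percept], str], world: Dict[str, str],
        start: str, steps: int = 6) -> List[str]:
    """Simulate the world for a fixed number of steps; return the actions."""
    location, actions = start, []
    for _ in range(steps):
        action = agent((location, world[location]))
        actions.append(action)
        if action == "Suck":
            world[location] = "Clean"
        elif action == "Right":
            location = "B"
        elif action == "Left":
            location = "A"
    return actions
```

Suppose both squares start dirty. The reflex agent keeps shuttling between clean squares. The model-based agent switches to NoOp once its model says both squares are clean. Goal- and utility-based agents would go further by searching over, or scoring, the future states such a model predicts.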

Encapsulation as Microservices

In modern software architectures, autonomous agents are often designed as microservices—independent, modular components that communicate via APIs. This approach enhances scalability, maintainability, and integration with other systems.

Understanding these fundamentals is crucial for designing, developing, and deploying effective autonomous AI agents that can operate reliably in real-world environments.

Theoretical Foundations

The development of autonomous AI agents is grounded in several key theoretical areas that provide the principles and methods enabling agents to perceive, reason, learn, and act independently.

Artificial Intelligence and Agent Theory

At the heart of autonomous agents lies artificial intelligence (AI), which equips agents with the ability to simulate intelligent behavior. Agent theory formalizes the concept of an agent as an entity that perceives its environment through sensors and acts upon it through actuators to achieve goals. This theory defines properties such as autonomy, reactivity, proactiveness, and social ability, which guide agent design.

Decision Theory and Planning

Decision theory provides a mathematical framework for making rational choices under uncertainty. Autonomous agents use decision-making models to select actions that maximize expected utility or achieve goals efficiently. Planning methods include state-space search and algorithms for solving Markov decision processes (MDPs) and partially observable MDPs (POMDPs). These methods let agents devise sequences of actions, or policies, that reach desired outcomes.
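The value iteration below is a minimal sketch of planning in an MDP. It solves a tiny three-state problem, and its states, transition probabilities, rewards and discount factor are all made-up assumptions. The algorithm repeatedly applies the Bellman optimality update V(s) = max_a sum_s' P(s'|s,a) [R(s,a,s') + gamma V(s')] until the values stop changing. It then reads off the greedy policy.

```python
from typing import Dict, List, Tuple

# transitions[state][action] -> list of (probability, next_state, reward)
Transitions = Dict[str, Dict[str, List[Tuple[float, str, float]]]]

# Hypothetical MDP: from A the agent can walk safely via B, or gamble on a
# jump that reaches the goal only half of the time.
MDP: Transitions = {
    "A": {
        "walk": [(1.0, "B", -1.0)],
        "jump": [(0.5, "goal", 10.0), (0.5, "A", -1.0)],
    },
    "B": {"walk": [(1.0, "goal", 10.0)]},
    "goal": {},  # Terminal state: no actions, value stays 0
}


def q_value(mdp: Transitions, V: Dict[str, float], s: str, a: str, gamma: float) -> float:
    """Expected return of taking action a in state s, then following V."""
    return sum(p * (r + gamma * V[s2]) for p, s2, r in mdp[s][a])


def value_iteration(mdp: Transitions, gamma: float = 0.9, theta: float = 1e-8):
    """Return (values, greedy policy) for a finite MDP."""
    V = {s: 0.0 for s in mdp}
    while True:
        delta = 0.0
        for s, actions in mdp.items():
            if not actions:
                continue  # Terminal states keep V = 0
            best = max(q_value(mdp, V, s, a, gamma) for a in actions)
            delta = max(delta, abs(best - V[s]))
            V[s] = best  # In-place (Gauss-Seidel) update
        if delta < theta:
            break
    policy = {
        s: max(actions, key=lambda a: q_value(mdp, V, s, a, gamma))
        for s, actions in mdp.items()
        if actions
    }
    return V, policy
```

With these numbers the gamble wins: V(A) is about 8.18, against 8.0 for walking, because a failed jump costs only -1. Raising that penalty to -2 makes walking optimal. This is the kind of trade-off that decision theory makes explicit.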

Machine Learning

Machine learning allows agents to improve their performance by learning from data and experience. Techniques such as supervised learning, reinforcement learning, and deep learning empower agents to adapt to changing environments, recognize patterns, and optimize behavior without explicit programming for every scenario.

Control Theory and Robotics

Control theory contributes methods for managing dynamic systems and ensuring stability and responsiveness. In robotics, these principles help autonomous agents interact physically with the world, handling sensor noise, actuator limitations, and real-time constraints.
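A staple of control theory is the PID (proportional-integral-derivative) controller. The sketch below drives a simulated quantity, such as a motor speed or a temperature, toward a setpoint. The gains, time step and first-order "plant" model are illustrative assumptions, not tuned values for any real system.

```python
from typing import Optional


class PIDController:
    """Discrete-time PID: u = kp*e + ki*(integral of e) + kd*(de/dt)."""

    def __init__(self, kp: float, ki: float, kd: float, setpoint: float) -> None:
        self.kp, self.ki, self.kd = kp, ki, kd
        self.setpoint = setpoint
        self.integral = 0.0
        self.prev_error: Optional[float] = None

    def update(self, measurement: float, dt: float) -> float:
        error = self.setpoint - measurement
        self.integral += error * dt
        # No derivative term on the first call, to avoid a spurious "kick".
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(kp: float = 2.0, ki: float = 1.0, kd: float = 0.1,
             steps: int = 500, dt: float = 0.05) -> float:
    """Drive a hypothetical first-order plant dx/dt = -x + u toward x = 1."""
    pid = PIDController(kp, ki, kd, setpoint=1.0)
    x = 0.0
    for _ in range(steps):
        u = pid.update(x, dt)
        x += (-x + u) * dt  # Forward-Euler step of the plant dynamics
    return x
```

Disable the integral and derivative terms (`simulate(ki=0.0, kd=0.0)`) and this plant settles at about 2/3 instead of 1. Proportional control alone leaves a steady-state error, and the integral term removes it.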

Multi-Agent Systems

Theoretical models of multi-agent systems study how multiple autonomous agents interact, cooperate, or compete within shared environments. Concepts from game theory, negotiation, and distributed problem-solving inform the design of agent societies and coordination mechanisms.
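A small game-theoretic computation makes this concrete. The sketch finds the pure-strategy Nash equilibria of a two-player game, meaning the action pairs where neither player gains by deviating alone. The payoffs are the textbook prisoner's dilemma, used here only as an illustration.

```python
from itertools import product
from typing import Dict, List, Tuple

Profile = Tuple[str, str]

# payoffs[(row_action, col_action)] = (row_payoff, col_payoff)
PRISONERS_DILEMMA: Dict[Profile, Tuple[int, int]] = {
    ("cooperate", "cooperate"): (-1, -1),
    ("cooperate", "defect"): (-3, 0),
    ("defect", "cooperate"): (0, -3),
    ("defect", "defect"): (-2, -2),
}


def pure_nash_equilibria(payoffs: Dict[Profile, Tuple[int, int]]) -> List[Profile]:
    """Profiles where no player can improve by unilaterally switching action."""
    row_actions = sorted({r for r, _ in payoffs})
    col_actions = sorted({c for _, c in payoffs})
    equilibria = []
    for r, c in product(row_actions, col_actions):
        row_ok = all(payoffs[(r, c)][0] >= payoffs[(r2, c)][0] for r2 in row_actions)
        col_ok = all(payoffs[(r, c)][1] >= payoffs[(r, c2)][1] for c2 in col_actions)
        if row_ok and col_ok:
            equilibria.append((r, c))
    return equilibria
```

The only equilibrium is mutual defection, even though mutual cooperation pays both players more. This is why coordination mechanisms such as negotiation, repeated interaction or enforceable agreements matter when designing agent societies.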

Formal Verification and Logic

Formal methods use logic and mathematical proofs to verify that autonomous agents behave correctly and safely. Techniques like temporal logic and model checking help ensure reliability, especially in safety-critical applications.
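Industrial model checkers are far more sophisticated. Still, the core of checking a safety property, one of the form "globally, the bad state never occurs", can be sketched as a breadth-first search over every reachable state. The two-agent resource-sharing protocol below is a hypothetical example with idle, waiting and critical phases and an optional lock. If both agents can ever be in the critical section together, the checker returns a shortest counterexample trace.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

State = Tuple[str, str, bool]  # (agent 0 phase, agent 1 phase, lock held?)


def successors(state: State, use_lock: bool) -> List[State]:
    """All states reachable in one step when either agent moves."""
    phases, lock = list(state[:2]), state[2]
    result = []
    for i in range(2):
        new_phases, new_lock = phases.copy(), lock
        if phases[i] == "idle":
            new_phases[i] = "waiting"
        elif phases[i] == "waiting":
            if use_lock and lock:
                continue  # Blocked: the other agent holds the lock
            new_phases[i] = "critical"
            new_lock = True if use_lock else lock
        else:  # "critical": leave and release the lock
            new_phases[i] = "idle"
            new_lock = False if use_lock else lock
        result.append((new_phases[0], new_phases[1], new_lock))
    return result


def check_safety(use_lock: bool) -> Optional[List[State]]:
    """BFS over reachable states; return a counterexample trace or None."""
    start: State = ("idle", "idle", False)
    parent: Dict[State, Optional[State]] = {start: None}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        if state[0] == "critical" and state[1] == "critical":
            trace, node = [], state
            while node is not None:
                trace.append(node)
                node = parent[node]
            return trace[::-1]
        for nxt in successors(state, use_lock):
            if nxt not in parent:
                parent[nxt] = state
                queue.append(nxt)
    return None  # The property holds in every reachable state
```

Without the lock, the search finds a four-step trace that ends with both agents critical. With the lock, it exhausts the state space and returns None, which is a proof (for this model) that the property always holds.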

Understanding these theoretical foundations is essential for building robust autonomous AI agents capable of operating effectively in complex, uncertain, and dynamic environments.

Architectures for Autonomous Agents

Designing effective autonomous AI agents requires choosing the right architecture that supports their capabilities, scalability, and adaptability. Agent architectures define how an agent processes information, makes decisions, and interacts with its environment.

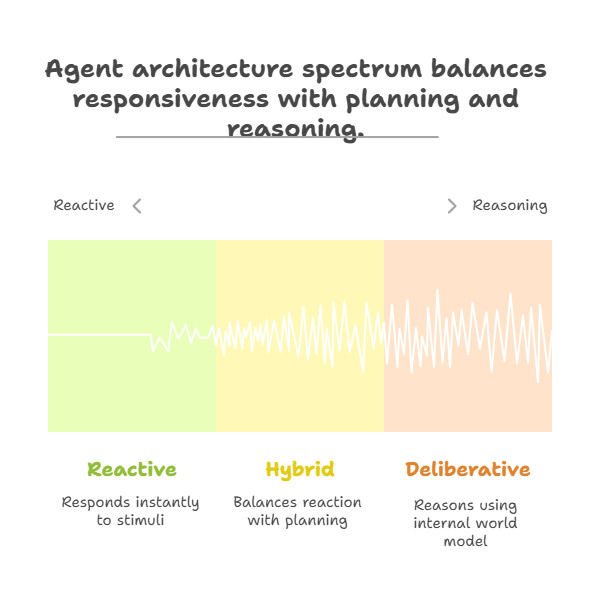

Reactive Architectures

Reactive agents operate on direct stimulus-response mechanisms without internal symbolic representations. They are designed for fast, real-time responses to environmental changes. A classic example is Rodney Brooks's subsumption architecture, in which simple behaviors are layered and higher layers can override lower ones to produce complex actions. While efficient, reactive architectures may lack long-term planning capabilities.
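The stimulus-response idea can be sketched as priority-based arbitration among behaviors: behaviors are checked in a fixed order, and the first one that fires suppresses the rest. This is a simplified illustration in the spirit of subsumption, not a faithful reproduction of Brooks's original design. The behaviors, their ordering and the sensor keys (`obstacle_distance`, `battery`) are all assumptions.

```python
from typing import Callable, Dict, List, Optional

Sensors = Dict[str, float]
Behavior = Callable[[Sensors], Optional[str]]  # Returns an action or None


def avoid_obstacle(s: Sensors) -> Optional[str]:
    return "turn_left" if s["obstacle_distance"] < 0.5 else None


def recharge(s: Sensors) -> Optional[str]:
    return "go_to_dock" if s["battery"] < 0.2 else None


def wander(_s: Sensors) -> Optional[str]:
    return "move_forward"  # Default behavior: always has something to do


# Hypothetical priority order: a behavior that fires suppresses those after it.
BEHAVIORS: List[Behavior] = [avoid_obstacle, recharge, wander]


def reactive_step(sensors: Sensors, behaviors: List[Behavior] = BEHAVIORS) -> str:
    """Map the current sensor readings directly to an action, with no world model."""
    for behavior in behaviors:
        action = behavior(sensors)
        if action is not None:
            return action
    return "stop"
```

No state is kept between calls. This is what makes such agents fast, and also why they cannot plan ahead.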

Deliberative Architectures

Deliberative agents maintain an internal model of the world and use reasoning and planning to decide actions. These architectures involve symbolic representations and search algorithms to evaluate possible future states. Although more flexible and goal-oriented, deliberative agents can be computationally intensive and slower to respond.

Hybrid Architectures

Hybrid architectures combine reactive and deliberative components to balance responsiveness and planning. For example, a reactive layer handles immediate environmental changes, while a deliberative layer manages long-term goals and strategies. This approach is common in robotics and complex AI systems.

Layered Architectures

Layered architectures organize agent functions into hierarchical layers, each responsible for different levels of abstraction—from low-level sensing and actuation to high-level reasoning and learning. This modularity facilitates maintenance and scalability.

BDI (Belief-Desire-Intention) Architecture

The BDI model is inspired by human practical reasoning. Agents maintain beliefs about the world, desires representing objectives, and intentions as committed plans. BDI architectures support dynamic goal management and are widely used in multi-agent systems.
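A highly simplified BDI cycle might look like the sketch below. Each step revises beliefs from a percept, commits to the highest-priority desire whose precondition is believed true, and executes the next action of that intention's plan. Real BDI platforms, such as those based on AgentSpeak, add plan libraries, event handling and more careful intention reconsideration. The delivery-robot desires, beliefs and plans used here are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Desire:
    name: str
    priority: int
    precondition: str  # Belief that must be true for the desire to be pursued
    plan: List[str]    # Sequence of primitive actions


@dataclass
class BDIAgent:
    desires: List[Desire]
    beliefs: Dict[str, bool] = field(default_factory=dict)
    intention: Optional[Desire] = None
    remaining: List[str] = field(default_factory=list)

    def revise_beliefs(self, percept: Dict[str, bool]) -> None:
        self.beliefs.update(percept)

    def deliberate(self) -> None:
        # Commit to the highest-priority desire whose precondition holds.
        options = [d for d in self.desires if self.beliefs.get(d.precondition, False)]
        best = max(options, key=lambda d: d.priority, default=None)
        if best is not None and best is not self.intention:
            self.intention, self.remaining = best, list(best.plan)

    def step(self, percept: Dict[str, bool]) -> str:
        self.revise_beliefs(percept)
        self.deliberate()
        if self.remaining:
            return self.remaining.pop(0)
        self.intention = None  # Plan finished: drop the intention
        return "idle"
```

In this sketch a low battery interrupts a delivery. When the battery recovers, the delivery plan restarts from the beginning. A fuller implementation would suspend and resume intentions instead.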

Service-Oriented and Microservices Architectures

In modern software development, autonomous agents are often implemented as microservices or service-oriented components. This enables modular deployment, scalability, and easy integration with other systems via APIs.
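The sketch below shows this pattern with only Python's standard library: it wraps a trivial decision function in a JSON-over-HTTP endpoint. The `/act` route, the request shape and the threshold rule inside `decide` are hypothetical. A production service would more likely use a web framework, authentication and a container runtime.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def decide(observation: dict) -> dict:
    """Hypothetical agent policy: a single temperature threshold rule."""
    temperature = observation.get("temperature", 0.0)
    return {"action": "cool" if temperature > 25.0 else "idle"}


class AgentHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        if self.path != "/act":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            observation = json.loads(self.rfile.read(length) or b"{}")
        except ValueError:  # Covers invalid JSON and invalid encodings
            observation = None
        if not isinstance(observation, dict):
            self.send_error(400, "Body must be a JSON object")
            return
        body = json.dumps(decide(observation)).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args) -> None:
        pass  # Keep the example's console output quiet


def make_server(port: int = 8080) -> ThreadingHTTPServer:
    """Bind the agent service to localhost; call serve_forever() to run it."""
    return ThreadingHTTPServer(("127.0.0.1", port), AgentHandler)
```

To try it, run `make_server().serve_forever()` and POST `{"temperature": 30}` to `http://127.0.0.1:8080/act`. The reply is `{"action": "cool"}`. The decision logic stays a plain function, so it can be unit-tested without the HTTP layer.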

Choosing the appropriate architecture depends on the application domain, performance requirements, and complexity of tasks. Understanding these architectures helps developers build autonomous agents that are both effective and adaptable.

Learning and Adaptation

A defining feature of autonomous AI agents is their ability to learn from experience and adapt to changing environments. Learning enables agents to improve their performance over time, handle unforeseen situations, and optimize decision-making without explicit reprogramming.

Types of Learning

Supervised Learning: Agents learn from labeled data, mapping inputs to desired outputs. This approach is useful for pattern recognition tasks like image or speech classification.

Unsupervised Learning: Agents identify patterns or structures in unlabeled data, such as clustering or anomaly detection, helping them understand their environment better.

Reinforcement Learning (RL): Agents learn optimal behaviors by interacting with the environment and receiving feedback in the form of rewards or penalties. RL is particularly suited for sequential decision-making and control tasks.

Online Learning: Agents continuously update their models as new data arrives, enabling real-time adaptation.
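To illustrate reinforcement learning, here is a minimal tabular Q-learning sketch. The environment is a made-up five-cell corridor where the agent earns a reward only for reaching the right-hand end. The learning rate, discount, exploration rate and episode count are illustrative choices, not tuned values. Each update nudges Q(s, a) toward r + gamma * max_a' Q(s', a'). Action selection is epsilon-greedy, which also shows the exploration-exploitation balance discussed below.

```python
import random
from typing import List, Tuple

N_STATES = 5        # Hypothetical corridor: cells 0..4, cell 4 is the goal
ACTIONS = [-1, +1]  # Index 0 moves left, index 1 moves right


def step(state: int, action: int) -> Tuple[int, float, bool]:
    """Environment dynamics: returns (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def q_learning(episodes: int = 500, alpha: float = 0.1, gamma: float = 0.9,
               epsilon: float = 0.1, seed: int = 0) -> List[List[float]]:
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: explore at random (or on ties), otherwise exploit.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, ACTIONS[a])
            # Terminal transitions have no future value to bootstrap from.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


def greedy_policy(q: List[List[float]]) -> List[int]:
    """Best action (-1 or +1) in each non-terminal cell."""
    return [ACTIONS[0] if row[0] > row[1] else ACTIONS[1] for row in q[:-1]]
```

After training, the greedy policy moves right in every cell. No rule says "go right"; the agent learns this purely from the reward signal.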

Adaptation Mechanisms

Model Updating: Agents refine their internal models based on new observations to maintain accuracy.

Policy Improvement: In RL, agents improve their action-selection strategies to maximize cumulative rewards.

Transfer Learning: Agents leverage knowledge gained in one task or domain to accelerate learning in another.

Meta-Learning: Also known as “learning to learn,” agents develop the ability to adapt quickly to new tasks with minimal data.

Challenges in Learning

Data Quality and Quantity: Effective learning requires sufficient and representative data, which may be scarce or noisy.

Exploration vs. Exploitation: Balancing the need to try new actions (exploration) with using known successful actions (exploitation) is critical.

Computational Resources: Learning algorithms can be resource-intensive, requiring efficient implementations.

Safety and Ethics: Ensuring learned behaviors align with ethical standards and do not cause harm is essential.

Tools and Frameworks

Popular machine learning libraries such as TensorFlow, PyTorch, and scikit-learn provide robust tools for implementing learning capabilities in autonomous agents. For reinforcement learning, OpenAI Gym (now maintained as Gymnasium) provides standard environments, and Stable Baselines (now Stable-Baselines3) provides reference algorithm implementations. Together they make experimentation easier.

Decision-Making and Planning

Autonomous AI agents rely heavily on effective decision-making and planning to achieve their goals in dynamic and often uncertain environments. These processes enable agents to select appropriate actions, anticipate future states, and adapt strategies to maximize success.

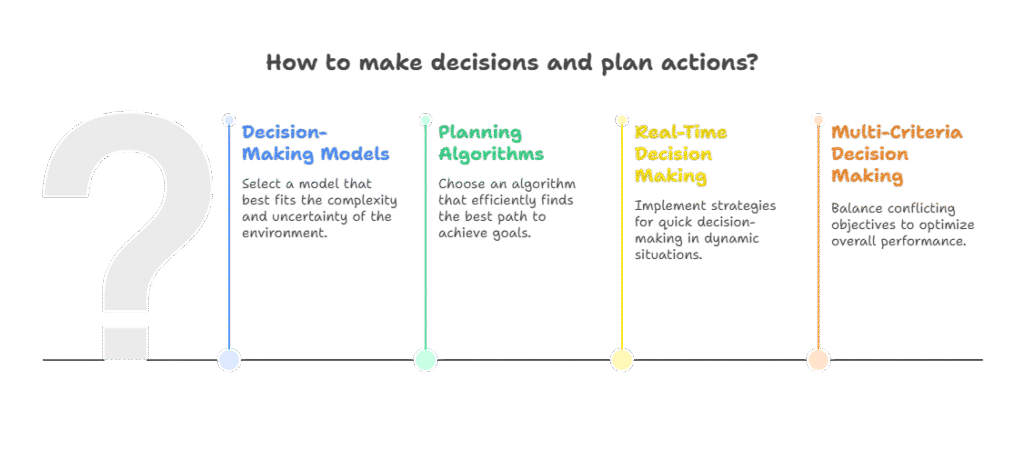

Decision-Making Models

Rule-Based Systems: Agents follow predefined rules mapping conditions to actions. While simple and interpretable, they lack flexibility in complex scenarios.

Utility-Based Decision Making: Agents evaluate possible actions based on a utility function that quantifies preferences, choosing actions that maximize expected utility.

Probabilistic Models: Techniques like Bayesian networks allow agents to reason under uncertainty by modeling probabilities of different outcomes.

Markov Decision Processes (MDPs): MDPs provide a mathematical framework for sequential decision-making where outcomes are partly random and partly under the agent’s control.

Partially Observable MDPs (POMDPs): Extend MDPs to situations where the agent has incomplete information about the environment state.
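Probabilistic reasoning and POMDPs both rest on the same mechanism. The agent maintains a belief, a probability distribution over hidden states, and updates it with Bayes' rule after each action and observation. The sketch below tracks whether a door is open or closed. The transition and sensor probabilities are invented for illustration.

```python
from typing import Dict

Belief = Dict[str, float]

# P(next_state | state, action): a hypothetical "push" opens a closed door 80% of the time.
TRANSITION = {
    "push": {"open": {"open": 1.0, "closed": 0.0},
             "closed": {"open": 0.8, "closed": 0.2}},
    "wait": {"open": {"open": 1.0, "closed": 0.0},
             "closed": {"open": 0.0, "closed": 1.0}},
}
# P(observation | state): a hypothetical noisy door sensor.
SENSOR = {"open": {"sees_open": 0.6, "sees_closed": 0.4},
          "closed": {"sees_open": 0.2, "sees_closed": 0.8}}


def update_belief(belief: Belief, action: str, observation: str) -> Belief:
    # Prediction step: push the belief through the transition model.
    predicted = {
        s2: sum(TRANSITION[action][s][s2] * p for s, p in belief.items())
        for s2 in belief
    }
    # Correction step: weight by the observation likelihood, then normalize.
    unnormalized = {s: SENSOR[s][observation] * p for s, p in predicted.items()}
    total = sum(unnormalized.values())
    return {s: p / total for s, p in unnormalized.items()}
```

Starting from a 50/50 belief, one "sees_open" reading raises P(open) to 0.75. A push followed by another "sees_open" raises it to about 0.98. A POMDP policy chooses actions as a function of this belief rather than of the unknown true state.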

Planning Algorithms

Search Algorithms: Techniques such as A*, depth-first, and breadth-first search help agents find paths or sequences of actions to reach goals.

Heuristic Planning: Uses domain knowledge to guide search efficiently, reducing computational complexity.

Hierarchical Planning: Breaks down complex tasks into simpler subtasks, enabling scalable and modular planning.

Reactive Planning: Combines planning with real-time responses to environmental changes.
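A* shows heuristic search most clearly. It expands nodes in order of f(n) = g(n) + h(n), the cost so far plus an estimate of the remaining cost. The sketch below finds a shortest path on a small grid using Manhattan distance as h. On a 4-connected grid with unit step costs this heuristic never overestimates, so the returned path is optimal. The grid layout is a made-up example.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]

EXAMPLE_GRID = [  # Hypothetical map: '#' marks walls
    "....",
    ".##.",
    "...#",
    "#...",
]


def a_star(grid: List[str], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path from start to goal, or None if unreachable."""
    def h(c: Cell) -> int:
        return abs(c[0] - goal[0]) + abs(c[1] - goal[1])  # Manhattan distance

    open_heap = [(h(start), 0, start)]  # Entries are (f, g, cell)
    came_from: Dict[Cell, Cell] = {}
    best_g: Dict[Cell, int] = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if g > best_g[cell]:
            continue  # Stale heap entry: a cheaper route was already found
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < len(grid) and 0 <= nc < len(grid[0]) and grid[nr][nc] != "#":
                ng = g + 1
                if ng < best_g.get((nr, nc), float("inf")):
                    best_g[(nr, nc)] = ng
                    came_from[(nr, nc)] = cell
                    heapq.heappush(open_heap, (ng + h((nr, nc)), ng, (nr, nc)))
    return None
```

With h set to zero, the same code reduces to Dijkstra's algorithm. The heuristic is what steers the search toward the goal and reduces the number of expanded nodes.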

Real-Time Decision Making

In many applications, agents must make decisions quickly. Anytime algorithms address this: they can return a valid solution whenever they are interrupted and improve it the longer they are allowed to run, trading solution quality against response time.

Multi-Criteria Decision Making

Agents often need to consider multiple, sometimes conflicting objectives. Techniques such as Pareto optimization help balance trade-offs.
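A basic building block of Pareto-based trade-offs is filtering out dominated options. An option is dominated if another option is at least as good on every objective and strictly better on at least one. The sketch below assumes every objective is to be minimized. The route names and (time, cost) figures are made up.

```python
from typing import Dict, Tuple

Scores = Tuple[float, ...]


def dominates(a: Scores, b: Scores) -> bool:
    """True if a is no worse than b everywhere and strictly better somewhere (minimization)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(options: Dict[str, Scores]) -> Dict[str, Scores]:
    """Keep only the options that no other option dominates."""
    return {
        name: s for name, s in options.items()
        if not any(dominates(other, s) for o, other in options.items() if o != name)
    }


ROUTES = {  # Hypothetical data: (travel time in minutes, cost in dollars)
    "highway": (30, 12.0),
    "back_roads": (45, 5.0),
    "toll_bridge": (25, 20.0),
    "scenic": (50, 6.0),
}
```

Here "scenic" is dropped because "back_roads" is both faster and cheaper. Choosing among the remaining three routes still requires a preference, such as a weighted sum or a constraint like "cheapest route under 40 minutes".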

Challenges

Scalability: Planning in large, complex environments can be computationally expensive.

Uncertainty: Dealing with incomplete or noisy information complicates decision-making.

Dynamic Environments: Agents must adapt plans as conditions change.

Tools and Frameworks

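Standard languages such as PDDL (Planning Domain Definition Language), used to describe planning domains and problems, support planning and decision-making in autonomous agents. So do frameworks such as ROSPlan, which integrates PDDL-based task planning into ROS.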

Effective decision-making and planning are critical for autonomous agents to act intelligently, achieve goals efficiently, and respond adaptively to their environments.

Perception and Environment Interaction

For autonomous AI agents to operate effectively, they must accurately perceive and interpret their environment, enabling informed decision-making and appropriate actions. Perception serves as the agent’s sensory system, bridging the gap between the external world and internal processes.

Sensing Modalities

Visual Perception: Using cameras and computer vision techniques, agents recognize objects, track movement, and understand scenes.

Auditory Perception: Microphones and speech recognition allow agents to process sounds and spoken commands.

Tactile and Proprioceptive Sensors: In robotics, these sensors provide feedback on touch, pressure, and the agent’s own position or movement.

Environmental Sensors: Agents may use GPS, temperature, humidity, or other sensors to gather contextual data.

Data Processing and Interpretation

Raw sensor data is often noisy and high-dimensional. Agents employ signal processing, filtering (e.g., Kalman filters), and feature extraction to transform data into meaningful representations.
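As an example of filtering, here is a one-dimensional Kalman filter that estimates a constant quantity, such as a fixed distance to a wall, from noisy readings. The noise variances and initial guess are illustrative assumptions. Real trackers use multidimensional state and motion models.

```python
from typing import Iterable, List


def kalman_1d(measurements: Iterable[float], meas_var: float, process_var: float = 1e-5,
              init_estimate: float = 0.0, init_var: float = 1.0) -> List[float]:
    """Scalar Kalman filter for a (nearly) constant state; returns the estimates."""
    x, p = init_estimate, init_var
    estimates = []
    for z in measurements:
        p += process_var          # Predict: state unchanged, uncertainty grows
        k = p / (p + meas_var)    # Kalman gain: how much to trust the new reading
        x += k * (z - x)          # Update the estimate toward the measurement
        p *= (1 - k)              # Uncertainty shrinks after the update
        estimates.append(x)
    return estimates
```

The gain k starts large, so early readings move the estimate a lot. It then shrinks as the variance p falls, so later readings are averaged in more gently and noise is suppressed.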

Perception Algorithms

Object Detection and Recognition: Identifying and classifying objects within sensory input.

Localization and Mapping: Determining the agent’s position relative to its environment and building maps (e.g., SLAM—Simultaneous Localization and Mapping).

Natural Language Understanding: Interpreting human language inputs for communication and command execution.

Anomaly Detection: Recognizing unusual patterns or events that may require special attention.

Environment Interaction

Agents act upon their environment through actuators or software interfaces. Effective interaction requires understanding the consequences of actions and adapting behavior accordingly.

Challenges

Sensor Limitations: Noise, range, and resolution constraints affect perception quality.

Dynamic Environments: Changing conditions require continuous perception updates.

Multimodal Integration: Combining data from multiple sensors to form a coherent understanding.

Real-Time Processing: Ensuring timely perception and response in fast-changing scenarios.

Tools and Frameworks

Frameworks like ROS (Robot Operating System) provide tools for sensor integration, data processing, and environment interaction in robotic agents. Computer vision libraries such as OpenCV support visual perception tasks.

Mastering perception and environment interaction is essential for autonomous agents to navigate, understand, and influence their surroundings effectively.

Multi-Agent Systems and Collaboration

In many complex applications, multiple autonomous AI agents operate within the same environment, interacting to achieve individual or collective goals. Multi-agent systems (MAS) study these interactions, focusing on collaboration, competition, and coordination among agents.

Overview of Multi-Agent Systems

MAS consist of multiple agents that can be homogeneous or heterogeneous, each with its own capabilities, knowledge, and objectives. These systems enable distributed problem-solving, scalability, and robustness.

Collaboration Among Agents

Agents collaborate by sharing information, dividing tasks, and coordinating actions to achieve common goals more efficiently than isolated agents. Techniques include task allocation algorithms, joint planning, and consensus protocols.
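Auction-based methods are one simple family of task allocation techniques. Each task is announced, agents bid their estimated cost, and the lowest bidder wins. The greedy sequential auction below is a minimal sketch. It assumes hypothetical robot positions and uses Manhattan distance plus already-committed travel as the bid. It is fast but not guaranteed to find the globally optimal assignment.

```python
from typing import Dict, Tuple

Point = Tuple[float, float]


def auction_allocate(agents: Dict[str, Point], tasks: Dict[str, Point]) -> Dict[str, str]:
    """Greedy sequential auction: each task goes to the cheapest bidder.

    An agent's bid is its distance to the task plus the travel it has already
    committed to, which spreads work across agents.
    """
    workload = {name: 0.0 for name in agents}
    position = dict(agents)
    assignment: Dict[str, str] = {}
    for task, (tx, ty) in tasks.items():
        bids = {
            name: workload[name] + abs(px - tx) + abs(py - ty)
            for name, (px, py) in position.items()
        }
        winner = min(bids, key=bids.get)
        assignment[task] = winner
        workload[winner] = bids[winner]
        position[winner] = (tx, ty)  # The winner will end up at the task location
    return assignment
```

Agents only need to compute their own bids, and the auctioneer only compares numbers. This keeps communication small as the team grows.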

Competition and Conflict Resolution

In scenarios where agents have conflicting interests, competition arises. Game theory and negotiation protocols help agents strategize and resolve conflicts to reach acceptable outcomes.

Communication Mechanisms

Effective communication is vital for coordination. Agents use direct messaging, shared blackboards, or publish-subscribe models to exchange data and intentions.
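The publish-subscribe model can be sketched in a few lines. Agents subscribe callbacks to named topics, and publishers send messages without knowing who is listening. This in-process `MessageBus` is a hypothetical illustration. Distributed systems would typically rely on a message broker instead.

```python
from collections import defaultdict
from typing import Any, Callable, DefaultDict, List

Handler = Callable[[Any], None]


class MessageBus:
    """Hypothetical in-process bus mapping topic names to subscriber callbacks."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: Any) -> int:
        """Deliver message to every subscriber of topic; return the delivery count."""
        handlers = list(self._subscribers.get(topic, []))
        for handler in handlers:
            handler(message)
        return len(handlers)


class ObserverAgent:
    """Hypothetical agent that records every obstacle report it hears about."""

    def __init__(self, name: str, bus: MessageBus) -> None:
        self.name = name
        self.inbox: List[Any] = []
        bus.subscribe("obstacle", self.inbox.append)
```

The publisher is decoupled from its audience, so agents can join or leave without changes to the code that sends messages. The cost is that delivery guarantees and ordering become the bus's responsibility.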

Coordination Strategies

Coordination ensures coherent group behavior, avoiding conflicts and redundancies. Approaches include centralized control, distributed coordination, and emergent behavior through local interactions.

Applications of MAS

Multi-agent systems are applied in traffic management, distributed robotics, smart grids, and collaborative filtering, among others.

Challenges

Scalability: Managing communication and coordination as the number of agents grows.

Robustness: Ensuring system reliability despite agent failures or malicious behavior.

Complexity: Designing protocols that handle diverse agent goals and dynamic environments.

Tools and Frameworks

Frameworks like JADE, SPADE, and Mesa support the development and simulation of multi-agent systems.

Understanding MAS and collaboration principles is crucial for building sophisticated autonomous systems capable of tackling large-scale, distributed problems.

Challenges and Limitations of Autonomous AI Agents

While autonomous AI agents offer transformative potential across industries, their development and deployment come with significant challenges and limitations that must be carefully addressed.

Technical Challenges

Complexity of Real-World Environments: Agents must operate in dynamic, uncertain, and often unpredictable settings, making reliable perception, decision-making, and adaptation difficult.

Scalability: As the number of agents or the complexity of tasks grows, ensuring efficient coordination and communication becomes increasingly challenging.

Data Quality and Availability: Effective learning and decision-making depend on high-quality, representative data, which may be scarce or noisy.

Computational Resources: Advanced learning algorithms and real-time processing require substantial computational power, which may limit deployment on resource-constrained devices.

Ethical and Social Concerns

Bias and Fairness: Agents trained on biased data can perpetuate or amplify unfair outcomes.

Transparency and Explainability: Understanding and trusting agent decisions is critical, especially in high-stakes applications.

Privacy: Autonomous agents often process sensitive data, raising concerns about data protection and user consent.

Accountability: Determining who is responsible for an agent's actions, especially when it acts without direct human approval, poses legal and ethical questions.

Safety and Security

Robustness to Failures: Agents must handle hardware malfunctions, software bugs, and unexpected inputs gracefully.

Adversarial Attacks: Malicious actors may exploit vulnerabilities in learning algorithms or communication protocols.

Control and Oversight: Ensuring human operators can monitor, intervene, or override agent behavior when necessary.

Integration Challenges

Interoperability: Combining agents with existing systems and diverse technologies can be complex.

User Acceptance: Resistance from users or stakeholders may hinder adoption.

Maintenance and Upgrades: Continuous updates are needed to keep agents effective and secure.

Addressing the Challenges

Ongoing research focuses on developing robust algorithms, ethical frameworks, explainable AI techniques, and secure architectures to mitigate these challenges.

Recognizing and proactively managing these limitations is essential for the responsible and successful deployment of autonomous AI agents.

Tools and Frameworks for Building Autonomous AI Agents

Developing autonomous AI agents requires a robust set of tools and frameworks that support various stages of the agent lifecycle—from design and training to deployment and monitoring. Leveraging these resources accelerates development, ensures scalability, and enhances reliability.

Programming Languages

Python: The most popular language for AI development, offering extensive libraries and community support.

Java: Widely used in enterprise applications and multi-agent system frameworks.

C++: Preferred for performance-critical applications, especially in robotics.

AI and Machine Learning Libraries

TensorFlow and PyTorch: Leading deep learning frameworks enabling model building, training, and deployment.

scikit-learn: A versatile library for classical machine learning algorithms.

OpenAI Gym: A toolkit for developing and comparing reinforcement learning algorithms, now maintained as Gymnasium.

Multi-Agent System Frameworks

JADE (Java Agent DEvelopment Framework): A popular platform for building agent-based applications with support for communication and lifecycle management.

SPADE (Smart Python Agent Development Environment): A Python-based framework facilitating agent communication and coordination.

Mesa: A Python library for agent-based modeling and simulation, useful for prototyping and research.

Robotics and Perception Frameworks

ROS (Robot Operating System): Provides tools and libraries for robot software development, including sensor integration and control.

OpenCV: A comprehensive computer vision library for image and video processing.

Deployment and Monitoring Tools

Docker and Kubernetes: Facilitate containerization and orchestration of agent services for scalable deployment.

MLflow and TensorBoard: Tools for tracking experiments, model performance, and visualization.

Cloud Platforms

Amazon SageMaker, Google Cloud Vertex AI (formerly AI Platform), and Azure Machine Learning: Provide managed services for training, deploying, and managing AI models at scale.

Best Practices

Selecting appropriate tools depends on the application domain, team expertise, and project requirements. Combining frameworks for AI, multi-agent coordination, and deployment ensures a comprehensive development pipeline.

Utilizing these tools and frameworks empowers developers to build sophisticated autonomous AI agents efficiently and effectively.

Future Trends and Research Directions

The field of autonomous AI agents is rapidly evolving, driven by advances in technology, growing application demands, and emerging research challenges. Understanding future trends helps anticipate the next wave of innovations and prepare for their impact.

As a simple illustration of decentralized learning, the simulation below has three agents. Each one trains a toy model (a single number) on local data and then averages it with its peers' models, with no central coordinator.

```python
import asyncio
import random


class AIAgent:
    """A toy agent whose 'model' is a single number learned from local data."""

    def __init__(self, name):
        self.name = name
        self.model = random.random()  # Simple model represented by a number
        self.data = [random.random() for _ in range(5)]  # Local dataset

    async def train(self):
        # Simulate training the model on local data
        await asyncio.sleep(random.uniform(0.1, 0.5))
        update = sum(self.data) / len(self.data) * random.uniform(0.8, 1.2)
        self.model = (self.model + update) / 2
        print(f"{self.name} trained model to {self.model:.4f}")

    async def share_model(self, agents):
        # Read the peers' models *before* the simulated network delay, so every
        # agent averages against the same snapshot from this round instead of
        # against peers that may already have updated.
        models = [agent.model for agent in agents if agent is not self]
        await asyncio.sleep(random.uniform(0.1, 0.3))  # Simulated network delay
        if models:
            avg_model = sum(models) / len(models)
            # Update model based on average of other agents' models
            self.model = (self.model + avg_model) / 2
            print(f"{self.name} updated model after sharing to {self.model:.4f}")


async def main():
    # Create 3 AI agents
    agents = [AIAgent(f"Agent-{i}") for i in range(1, 4)]

    # Simulate several rounds of training and model sharing
    for round_num in range(1, 6):  # Avoid shadowing the built-in round()
        print(f"\n--- Round {round_num} ---")
        # Train agents concurrently
        await asyncio.gather(*(agent.train() for agent in agents))
        # Share models among agents
        await asyncio.gather(*(agent.share_model(agents) for agent in agents))


if __name__ == "__main__":
    asyncio.run(main())
```

Advances in Distributed and Decentralized Architectures

Future autonomous agents will increasingly operate in distributed environments, leveraging edge computing and decentralized networks to enhance scalability, reduce latency, and improve robustness.

Integration with Blockchain and Secure Protocols

Combining AI agents with blockchain technology is being explored as a way to improve security, transparency, and trustworthiness, especially in multi-agent collaborations and transactions.

Explainability and Trustworthy AI

Research is focusing on making autonomous agents more interpretable and transparent, enabling users to understand, trust, and effectively manage agent decisions.

Self-Adaptive and Meta-Learning Agents

Agents capable of self-adaptation and learning how to learn will become more prevalent, allowing faster adjustment to new tasks and environments with minimal human intervention.

Cross-Domain and Transfer Learning

Improved transfer learning techniques will enable agents to apply knowledge across different domains, reducing training time and data requirements.

Human-Agent Collaboration

Enhancing seamless collaboration between humans and autonomous agents through natural language interfaces, shared goals, and adaptive behaviors is a key research area.

Ethical and Regulatory Frameworks

Developing standards, guidelines, and regulations to ensure ethical deployment, privacy protection, and accountability of autonomous agents will shape future adoption.

Quantum Computing and AI Agents

Exploration of quantum computing’s potential to accelerate AI algorithms may revolutionize agent capabilities in the long term.

Emerging Standards and Protocols

Efforts to standardize communication, interoperability, and security protocols among autonomous agents will facilitate broader integration and cooperation.

Multimodal and Context-Aware Agents

Future agents will better understand and integrate diverse data types (visual, auditory, textual) and contextual information to make more informed decisions.

Staying abreast of these trends is essential for researchers, developers, and organizations aiming to leverage autonomous AI agents effectively and responsibly.

Conclusion: Bridging Theory and Practice

Autonomous AI agents represent a powerful convergence of artificial intelligence, machine learning, robotics, and software engineering. From their theoretical foundations to practical implementations, these agents are transforming how machines perceive, decide, and act independently in complex environments.

Throughout this exploration, we have seen how key concepts such as autonomy, learning, decision-making, and collaboration underpin the design of effective agents. Architectures ranging from reactive to hybrid models enable agents to balance responsiveness with strategic planning. Advances in perception and environment interaction equip agents with the sensory capabilities needed to navigate real-world challenges.

The integration of multi-agent systems expands possibilities for distributed problem-solving, while ongoing research addresses critical challenges related to scalability, ethics, safety, and transparency. A rich ecosystem of tools and frameworks supports developers in building, deploying, and managing autonomous agents across diverse domains.

Looking ahead, emerging trends like decentralized architectures, explainable AI, and human-agent collaboration promise to further enhance agent capabilities and adoption. However, responsible development and thoughtful integration remain paramount to harness the full potential of autonomous AI agents.

By bridging theory and practice, organizations and developers can unlock new opportunities for innovation, efficiency, and intelligent automation, shaping a future where autonomous agents are trusted partners in solving complex problems.
