Introduction

In recent years, the combination of AI agents and microservices architecture has emerged as a powerful approach to building scalable, flexible, and intelligent software systems. AI agents—autonomous software entities capable of perceiving their environment, making decisions, and performing tasks—are transforming how businesses automate processes, analyze data, and interact with users. Meanwhile, microservices architecture breaks down complex applications into smaller, independent services that can be developed, deployed, and scaled separately.

Integrating AI agents with microservices leverages the strengths of both technologies. This integration enables organizations to build modular AI-driven applications that are easier to maintain, update, and scale. AI agents can operate as individual microservices or collaborate across multiple services, enhancing system intelligence and responsiveness.

This article explores the fundamentals of AI agents and microservices, the benefits of their integration, and practical considerations for developers and architects aiming to harness this synergy. Understanding these concepts is essential for designing modern software solutions that meet the demands of dynamic business environments and evolving user expectations.

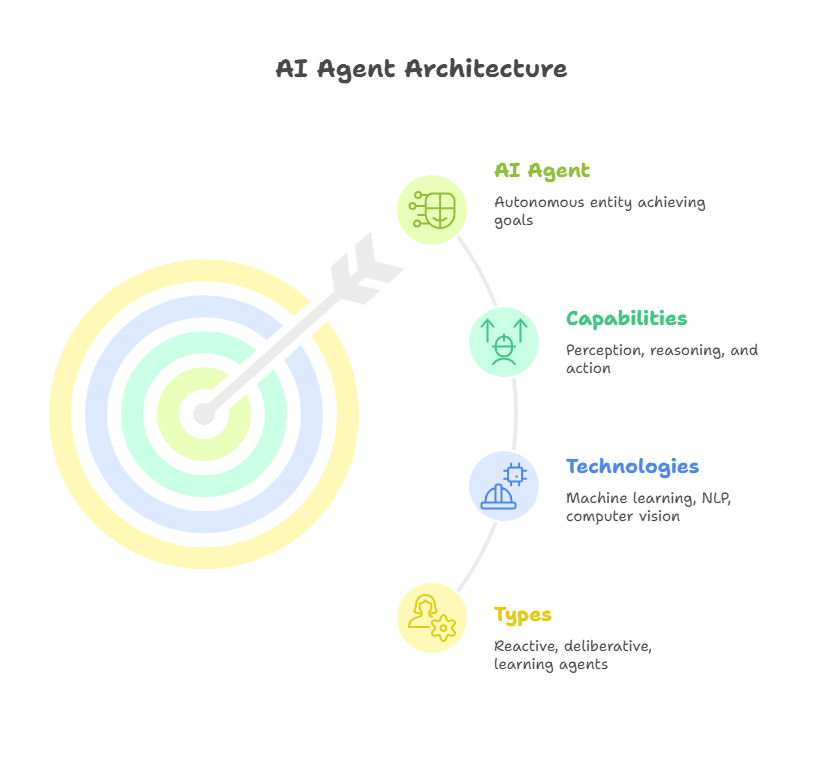

Fundamentals of AI Agents

AI agents are autonomous software entities designed to perceive their environment, reason about it, and take actions to achieve specific goals. Unlike traditional programs that follow predefined instructions, AI agents exhibit adaptive and intelligent behavior, often learning from data and interactions to improve their performance over time.

There are several types of AI agents, ranging from simple reactive agents that respond directly to stimuli to more complex deliberative agents that plan and make decisions based on internal models of the world. Some agents incorporate learning capabilities, enabling them to adapt to changing environments through techniques such as reinforcement learning or the supervised training of neural networks.

Key functionalities of AI agents include perception (gathering data from sensors or inputs), reasoning (processing information and making decisions), and action (executing tasks or communicating with other systems). These capabilities are supported by various AI technologies, including machine learning, natural language processing, and computer vision.

In the context of microservices, AI agents can be encapsulated as independent services that perform specialized tasks—such as data analysis, anomaly detection, or user interaction—while communicating with other services to form a cohesive application. Understanding the core concepts and capabilities of AI agents is crucial for effectively designing and integrating them within modern software architectures.

Microservices Architecture Overview

Microservices architecture is a modern approach to software design that structures an application as a collection of small, independent services. Each microservice focuses on a specific business capability and can be developed, deployed, and scaled independently. This contrasts with traditional monolithic architectures, where all components are tightly integrated into a single codebase.

Key principles of microservices include modularity, loose coupling, and decentralized data management. Each service typically owns its own database and communicates with other services through well-defined APIs, often using lightweight protocols such as HTTP/REST or gRPC. This separation allows teams to work autonomously on different services, accelerating development cycles and improving maintainability.

The benefits of microservices are numerous: improved scalability, as services can be scaled individually based on demand; enhanced fault isolation, since failures in one service do not necessarily impact others; and greater flexibility in technology choices, allowing different services to use the most appropriate programming languages or frameworks.

However, microservices also introduce challenges, such as increased complexity in managing distributed systems, ensuring data consistency across services, and handling inter-service communication. Effective monitoring, logging, and orchestration tools are essential to address these issues.

Understanding microservices architecture is fundamental when integrating AI agents, as it provides the structural foundation for deploying intelligent, autonomous components within a scalable and resilient system.

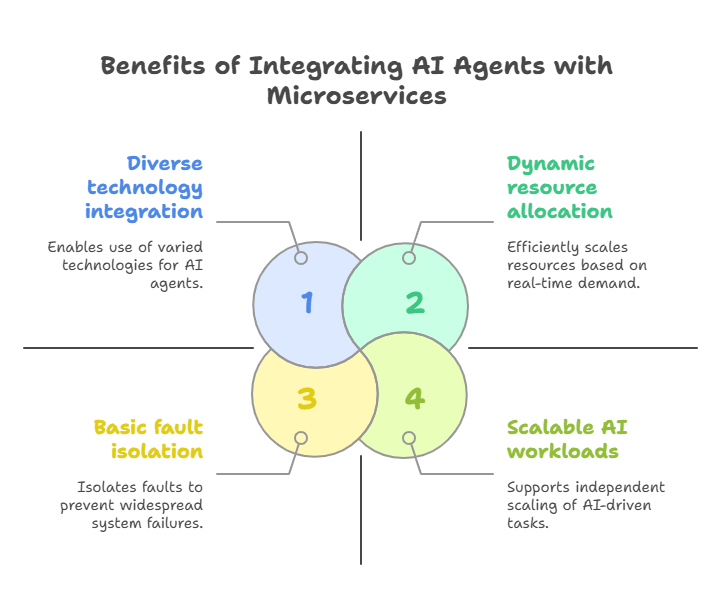

Why Integrate AI Agents with Microservices?

Integrating AI agents with microservices architecture offers a powerful combination that addresses many modern software development challenges while unlocking new opportunities for intelligent automation and scalability.

Use Cases and Business Value

AI agents excel at performing autonomous, intelligent tasks such as data analysis, decision-making, natural language processing, and anomaly detection. When deployed as microservices, these agents can be seamlessly integrated into larger applications, enabling businesses to automate complex workflows, personalize user experiences, and respond dynamically to changing conditions. For example, an AI agent microservice can analyze customer behavior in real-time and trigger personalized marketing actions without disrupting other parts of the system.

Scalability and Modularity Advantages

Microservices architecture inherently supports scalability by allowing individual services to be scaled independently based on demand. By encapsulating AI agents as microservices, organizations can allocate resources efficiently—scaling AI workloads separately from other application components. This modularity also simplifies updates and maintenance; AI models can be retrained and redeployed without affecting unrelated services.

Flexibility and Technology Diversity

AI development often requires specialized tools and frameworks that may differ from those used in other parts of an application. Microservices enable teams to choose the best technologies for AI agents without imposing constraints on the entire system. This flexibility accelerates innovation and allows for continuous improvement of AI capabilities.

Improved Fault Isolation and Resilience

Deploying AI agents as independent microservices enhances system resilience. If an AI agent encounters an error or requires maintenance, it can be isolated and addressed without taking down the entire application, provided that the services calling it handle its absence gracefully, for example with timeouts and fallback responses. This fault isolation is critical for maintaining high availability in production environments.

In summary, integrating AI agents with microservices combines the intelligence and autonomy of AI with the robustness and scalability of microservices. This synergy empowers organizations to build smarter, more adaptable software systems that can evolve with business needs and technological advances.

Designing AI Agents as Microservices

Designing AI agents as microservices requires careful planning to ensure they are autonomous, scalable, and easily maintainable within a distributed system. This approach leverages the modularity of microservices while harnessing the intelligence and adaptability of AI agents.

Defining Clear Responsibilities

Each AI agent microservice should have a well-defined scope and responsibility. For example, one agent might specialize in natural language understanding, while another focuses on predictive analytics. Clear boundaries prevent overlap and simplify development, testing, and deployment.

Statelessness and Scalability

To fully benefit from microservices architecture, AI agents should be designed to be stateless whenever possible. Stateless services do not retain client-specific data between requests, making it easier to scale horizontally by adding more instances. When state is necessary (e.g., session data or model parameters), it should be managed externally using databases or distributed caches.
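As a rough illustration of externalized state, the sketch below keeps per-session data in a store object that is passed to the handler instead of being held inside the agent. The names `InMemoryStore` and `handle_request` are hypothetical, and the dictionary-backed store only stands in for a real database or distributed cache.

```python
class InMemoryStore:
    """Stand-in for an external store (assumption: a cache or database in production)."""

    def __init__(self):
        self._data = {}

    def get(self, key, default=None):
        return self._data.get(key, default)

    def set(self, key, value):
        self._data[key] = value


def handle_request(store, session_id, text):
    """Stateless handler: all session state is read from and written to `store`."""
    turn = store.get(session_id, 0) + 1
    store.set(session_id, turn)
    return {"session_id": session_id, "turn": turn, "echo": text}


store = InMemoryStore()
first = handle_request(store, "s1", "hello")
second = handle_request(store, "s1", "again")
print(first["turn"], second["turn"])
```

Because the handler keeps nothing between calls, any instance of the service could process either request as long as all instances share the same store.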

API Design and Communication

AI agent microservices communicate with other services and clients through well-defined APIs, typically RESTful or gRPC interfaces. Designing intuitive, consistent APIs facilitates integration and allows other components to leverage AI capabilities seamlessly. Additionally, asynchronous communication patterns (e.g., message queues or event streams) can improve system responsiveness and decouple services.
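One way to picture a well-defined API is as an explicit request and response contract. The following framework-agnostic sketch uses only the standard library; `PredictRequest`, `PredictResponse`, `handle_predict`, and the keyword-based `classify` rule are all hypothetical placeholders, not part of any particular framework. In a real service, the same contract would typically sit behind a REST or gRPC endpoint.

```python
import json
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class PredictRequest:
    text: str


@dataclass(frozen=True)
class PredictResponse:
    label: str
    score: float
    model_version: str


def classify(text):
    # Placeholder for a real model call (assumption): a trivial keyword rule.
    return ("positive", 0.9) if "good" in text.lower() else ("neutral", 0.5)


def handle_predict(raw_body):
    """Return (HTTP-style status code, JSON body) for a raw JSON request body."""
    try:
        payload = json.loads(raw_body)
        request = PredictRequest(text=str(payload["text"]))
    except (json.JSONDecodeError, KeyError, TypeError):
        return 400, json.dumps({"error": "body must be JSON with a 'text' field"})
    label, score = classify(request.text)
    response = PredictResponse(label=label, score=score, model_version="v1")
    return 200, json.dumps(asdict(response))


status, body = handle_predict('{"text": "good service"}')
print(status, body)
```

Returning the model version with every response is a small design choice that makes it easier for callers to report which model produced a given prediction.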

Model Management and Updates

AI models powering agents require regular updates and retraining to maintain accuracy and relevance. Designing microservices with a clear model management strategy—such as versioning models, automated deployment pipelines, and rollback mechanisms—ensures smooth updates without disrupting service availability.
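The versioning and rollback idea can be sketched with a small in-memory registry. The `ModelRegistry` class and its methods are hypothetical and only illustrate the bookkeeping; a production setup would more likely rely on a dedicated model registry and a deployment pipeline.

```python
class ModelRegistry:
    """Hypothetical in-memory registry tracking model versions and the active one."""

    def __init__(self):
        self._models = {}    # version string -> callable model
        self._history = []   # versions that have been active, oldest first

    def register(self, version, model):
        if version in self._models:
            raise ValueError(f"version {version!r} already registered")
        self._models[version] = model

    def promote(self, version):
        if version not in self._models:
            raise KeyError(version)
        self._history.append(version)

    def rollback(self):
        if len(self._history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self._history.pop()
        return self._history[-1]

    @property
    def active_version(self):
        return self._history[-1] if self._history else None

    def predict(self, x):
        return self._models[self.active_version](x)


registry = ModelRegistry()
registry.register("1.0.0", lambda x: x * 2)   # toy "models" for illustration
registry.register("1.1.0", lambda x: x * 3)
registry.promote("1.0.0")
registry.promote("1.1.0")
print(registry.active_version, registry.predict(10))
registry.rollback()
print(registry.active_version, registry.predict(10))
```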

Security and Privacy Considerations

Given that AI agents often process sensitive data, security must be integral to their design. Implementing authentication, authorization, encryption, and data anonymization protects both the system and user privacy. Microservices architecture allows applying security policies at the service level, enhancing overall system security.

Monitoring and Logging

Effective monitoring and logging are essential for maintaining AI agent microservices. Tracking performance metrics, error rates, and resource usage helps detect issues early and optimize system behavior. Tools like Prometheus, Grafana, and the ELK stack (Elasticsearch, Logstash, and Kibana) are commonly used for observability in microservices environments.
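To make the tracked quantities concrete, here is a minimal sketch of a decorator that counts calls and errors and accumulates latency for a function. The `monitored` decorator and the `metrics` dictionary are hypothetical; in practice these numbers would be exported to a monitoring system instead of being kept in a local dictionary.

```python
import time
from collections import defaultdict
from functools import wraps

# name -> counters; a local stand-in for a real metrics backend (assumption)
metrics = defaultdict(lambda: {"calls": 0, "errors": 0, "total_seconds": 0.0})


def monitored(name):
    """Record call count, error count, and cumulative latency under `name`."""
    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            try:
                return func(*args, **kwargs)
            except Exception:
                metrics[name]["errors"] += 1
                raise
            finally:
                metrics[name]["calls"] += 1
                metrics[name]["total_seconds"] += time.perf_counter() - start
        return wrapper
    return decorator


@monitored("predict")
def predict(x):
    if x < 0:
        raise ValueError("negative input")
    return x * 2


predict(3)
try:
    predict(-1)
except ValueError:
    pass
print(dict(metrics["predict"]))
```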

By following these design principles, developers can build AI agent microservices that are robust, scalable, and easy to maintain, enabling intelligent features to be integrated smoothly into modern software ecosystems.

Implementing AI Agents with Python: Tools and Frameworks

Python has become the go-to programming language for developing AI agents due to its simplicity, rich ecosystem, and extensive support for machine learning and AI libraries. When building AI agents as microservices, Python offers a variety of tools and frameworks that streamline development, deployment, and integration.

Popular AI and Machine Learning Libraries

Python’s ecosystem includes powerful libraries such as TensorFlow, PyTorch, and scikit-learn, which provide robust tools for building, training, and deploying AI models. These libraries support a wide range of AI techniques, from deep learning and reinforcement learning to classical machine learning algorithms, enabling developers to create intelligent agents tailored to specific tasks.

Microservices Frameworks

For building microservices, Python offers frameworks like FastAPI and Flask. FastAPI is particularly well-suited for AI agent microservices due to its high performance, asynchronous capabilities, and automatic generation of interactive API documentation. Flask is lightweight and flexible, making it a good choice for simpler services or prototypes.

Containerization and Orchestration

To deploy AI agents as microservices, containerization tools like Docker are essential. Docker packages the AI agent and its dependencies into a portable container, ensuring consistency across development, testing, and production environments. For managing multiple containers and scaling services, orchestration platforms like Kubernetes provide automation for deployment, scaling, and monitoring.

Communication and Messaging

Microservices often communicate asynchronously using message brokers such as RabbitMQ or Apache Kafka. Python libraries like Pika (for RabbitMQ) and confluent-kafka enable AI agents to send and receive messages efficiently, facilitating coordination and data exchange between services.

Model Serving and APIs

Specialized tools like TensorFlow Serving or TorchServe allow serving trained AI models via APIs, making it easier to integrate AI capabilities into microservices. Alternatively, custom REST or gRPC endpoints can be built using FastAPI or Flask to expose AI agent functionalities.

Experiment Tracking and Monitoring

Tools like MLflow and Weights & Biases help track experiments, model versions, and performance metrics, which is crucial for maintaining and improving AI agents over time. Integrating these tools into microservices workflows supports continuous learning and deployment.

In summary, Python’s rich set of libraries and frameworks provides a comprehensive toolkit for implementing AI agents as microservices. Leveraging these technologies enables developers to build scalable, maintainable, and intelligent services that can be seamlessly integrated into modern software architectures.

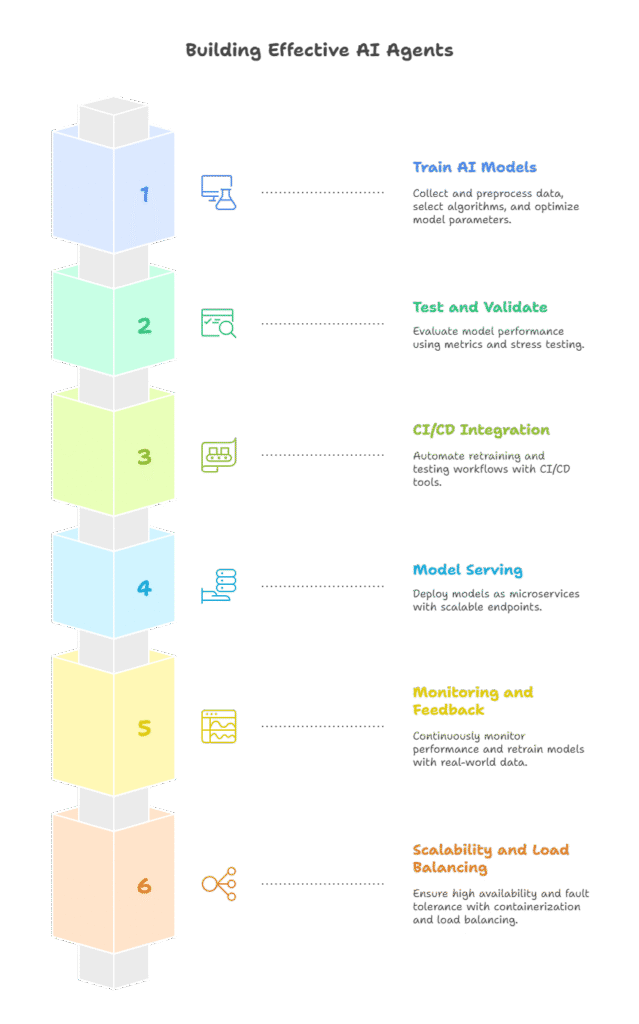

Training, Testing, and Deploying AI Agents

Building effective AI agents as microservices involves a well-structured process of training, testing, and deployment to ensure reliability, accuracy, and scalability.

Training AI Models

The first step is to train the AI models that power the agents. This involves collecting and preprocessing relevant data, selecting appropriate algorithms, and iteratively optimizing model parameters. Training can be done offline using frameworks like TensorFlow or PyTorch, often leveraging GPUs or cloud resources for efficiency. It’s important to maintain version control of datasets and models to track changes and reproduce results.

Testing and Validation

Before deployment, AI models must be rigorously tested to evaluate their performance and generalization capabilities. Common practices include splitting data into training, validation, and test sets, and using metrics such as accuracy, precision, recall, or F1-score depending on the task. Additionally, stress testing the AI agent microservice under realistic workloads helps identify bottlenecks and potential failure points.
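For a binary classifier, the metrics mentioned above follow directly from the counts of true positives, false positives, and false negatives. The sketch below computes them by hand on a small made-up label set; the function name and the data are illustrative only.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall, and F1 for the `positive` class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# Made-up labels: 3 true positives, 1 false positive, 1 false negative
y_true = [1, 0, 1, 1, 0, 1]
y_pred = [1, 0, 0, 1, 1, 1]
precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(precision, recall, f1)  # 0.75 0.75 0.75
```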

Continuous Integration and Continuous Deployment (CI/CD)

Integrating AI model training and testing into CI/CD pipelines automates the process of updating AI agents. Tools like Jenkins, GitHub Actions, or GitLab CI can trigger retraining and testing workflows whenever new data or code changes are introduced. Automated deployment ensures that updated models are rolled out smoothly with minimal downtime.

Model Serving

Once tested, AI models are deployed as part of microservices. Model serving frameworks such as TensorFlow Serving or TorchServe provide scalable endpoints for inference requests. Alternatively, custom APIs built with FastAPI or Flask can expose model predictions. It’s crucial to monitor latency and throughput to maintain responsive service.

Monitoring and Feedback Loops

Post-deployment, continuous monitoring of AI agent performance is essential. Tracking metrics like prediction accuracy, response time, and error rates helps detect model drift or degradation. Implementing feedback loops where real-world data is collected and used to retrain models ensures that AI agents remain effective over time.
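A simple way to approximate drift detection is to track accuracy over a sliding window of recent predictions once the true outcomes are known. The `RollingAccuracyMonitor` class, the window size, and the threshold below are hypothetical choices for illustration; real systems often use more careful statistical tests.

```python
from collections import deque


class RollingAccuracyMonitor:
    """Flag possible model degradation when windowed accuracy drops too low."""

    def __init__(self, window=100, threshold=0.8):
        self.outcomes = deque(maxlen=window)  # True where prediction matched reality
        self.threshold = threshold

    def record(self, prediction, actual):
        self.outcomes.append(prediction == actual)

    @property
    def accuracy(self):
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else None

    def needs_retraining(self):
        # Only judge once the window is full, to avoid noisy early alarms.
        return (len(self.outcomes) == self.outcomes.maxlen
                and self.accuracy < self.threshold)


monitor = RollingAccuracyMonitor(window=5, threshold=0.8)
for pred, actual in [(1, 1), (0, 0), (1, 0), (1, 0), (0, 0)]:
    monitor.record(pred, actual)
print(monitor.accuracy, monitor.needs_retraining())  # 0.6 True
```

A positive result from `needs_retraining()` would be the signal that feeds the retraining loop described above.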

Scalability and Load Balancing

Deploying AI agents in containerized environments with orchestration tools like Kubernetes allows horizontal scaling based on demand. Load balancers distribute inference requests across multiple instances, ensuring high availability and fault tolerance.
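In an orchestrated deployment the platform normally handles request distribution, but the underlying idea can be shown with a round-robin sketch. The `RoundRobinBalancer` class and the instance names are hypothetical.

```python
import itertools


class RoundRobinBalancer:
    """Hand out instances in a fixed rotating order."""

    def __init__(self, instances):
        if not instances:
            raise ValueError("at least one instance is required")
        self._cycle = itertools.cycle(list(instances))

    def next_instance(self):
        return next(self._cycle)


balancer = RoundRobinBalancer(["agent-0", "agent-1", "agent-2"])
assignments = [balancer.next_instance() for _ in range(5)]
print(assignments)
# ['agent-0', 'agent-1', 'agent-2', 'agent-0', 'agent-1']
```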

By following these best practices in training, testing, and deployment, developers can deliver AI agent microservices that are robust, efficient, and capable of adapting to evolving requirements.

Best Practices for Collaboration Between Developers and Data Scientists

Successful development and deployment of AI agents as microservices require close collaboration between software developers and data scientists. Each group brings unique expertise—developers focus on building scalable, maintainable systems, while data scientists specialize in creating accurate and effective AI models. Here are best practices to foster effective teamwork:

Clear Communication and Shared Goals

Establish a common understanding of project objectives, success criteria, and timelines. Regular meetings and documentation help align expectations and clarify responsibilities, reducing misunderstandings.

Integrated Development Environments and Tools

Use shared platforms and tools that support both coding and data experimentation. Version control systems like Git, combined with experiment tracking tools such as MLflow or Weights & Biases, enable transparent collaboration and reproducibility.

Modular Design and API Contracts

Define clear interfaces and API contracts between AI models and application components. This modularity allows developers to integrate AI agents without needing deep knowledge of the underlying algorithms, while data scientists can update models independently.

Automated Testing and Continuous Integration

Implement automated tests for both code and model performance. Integrate these tests into CI pipelines to catch issues early and ensure that changes from either side do not break the system.

Data Management and Governance

Collaborate on data collection, cleaning, and labeling processes. Establish data governance policies to ensure data quality, privacy, and compliance with regulations.

Iterative Development and Feedback Loops

Adopt agile methodologies that encourage iterative improvements. Frequent feedback from developers, data scientists, and end-users helps refine AI agents and adapt to changing requirements.

Cross-Training and Knowledge Sharing

Encourage team members to learn the basics of each other’s domains. Collaboration becomes more efficient when developers understand core AI concepts and data scientists are familiar with software engineering practices.

By embracing these best practices, teams can bridge the gap between AI research and software engineering, delivering AI agent microservices that are both intelligent and production-ready.

Multi-Agent Systems: Collaboration and Competition

Multi-agent systems (MAS) consist of multiple AI agents that interact within a shared environment, either collaborating to achieve common goals or competing to maximize individual objectives. This paradigm extends the capabilities of single AI agents by enabling complex, distributed problem-solving and decision-making.

Collaboration in Multi-Agent Systems

In collaborative MAS, agents share information, coordinate actions, and divide tasks to improve overall system performance. For example, in supply chain management, different AI agents might manage inventory, logistics, and demand forecasting, working together to optimize efficiency. Collaboration requires communication protocols, negotiation strategies, and consensus mechanisms to align agent behaviors.
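One well-known scheme for dividing tasks among cooperating agents is the contract net protocol, in which a manager announces a task, agents respond with bids, and the task is awarded to the best bid. The sketch below is a heavily simplified, single-round version; `run_contract_net`, the agent names, and the cost formulas are all hypothetical.

```python
def run_contract_net(task, bidders):
    """Announce `task`, collect bids, and award it to the cheapest bidder.

    `bidders` maps an agent name to a function that returns a cost,
    or None if the agent declines the task.
    """
    proposals = {}
    for name, make_bid in bidders.items():
        cost = make_bid(task)       # call for proposals
        if cost is not None:        # None means the agent refuses
            proposals[name] = cost
    if not proposals:
        return None
    winner = min(proposals, key=proposals.get)
    return winner, proposals[winner]


# Hypothetical logistics agents bidding on a delivery task.
bidders = {
    "truck_agent": lambda task: 4.0 * task["distance_km"],
    "drone_agent": lambda task: (2.5 * task["distance_km"]
                                 if task["weight_kg"] <= 5 else None),
    "bike_agent": lambda task: None,
}

light = run_contract_net({"distance_km": 10, "weight_kg": 2}, bidders)
heavy = run_contract_net({"distance_km": 10, "weight_kg": 50}, bidders)
print(light, heavy)
```

Here the drone agent wins the light parcel because it is cheaper, while only the truck agent bids on the heavy one.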

Competition and Game-Theoretic Approaches

When agents have conflicting goals, competition arises, leading to strategic interactions modeled by game theory. Competitive MAS are common in scenarios like automated trading or resource allocation, where agents must anticipate and respond to others’ actions. Designing agents that can learn and adapt strategies in such environments is a key research area.
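As a small worked example of the game-theoretic view, the sketch below enumerates the pure-strategy Nash equilibria of a two-player game given as a payoff matrix, using the classic prisoner's dilemma as input. The function name and the specific payoff values are illustrative assumptions.

```python
def pure_nash_equilibria(payoffs):
    """Return all (row, col) action pairs where neither player gains by deviating.

    payoffs[i][j] is a tuple (row player's payoff, column player's payoff).
    """
    rows, cols = len(payoffs), len(payoffs[0])
    equilibria = []
    for i in range(rows):
        for j in range(cols):
            row_best = all(payoffs[i][j][0] >= payoffs[k][j][0] for k in range(rows))
            col_best = all(payoffs[i][j][1] >= payoffs[i][k][1] for k in range(cols))
            if row_best and col_best:
                equilibria.append((i, j))
    return equilibria


# Prisoner's dilemma: action 0 = cooperate, action 1 = defect
prisoners_dilemma = [
    [(-1, -1), (-3, 0)],
    [(0, -3), (-2, -2)],
]
print(pure_nash_equilibria(prisoners_dilemma))  # [(1, 1)]
```

Mutual defection is the only equilibrium even though mutual cooperation pays both agents more, which is the kind of outcome competitive agent designs have to anticipate.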

Design Considerations

Building MAS involves addressing challenges such as coordination complexity, communication overhead, and conflict resolution. Agents must be designed with capabilities for perception, reasoning, and communication tailored to multi-agent dynamics.

Python Tools for MAS

Python libraries like Mesa provide frameworks for simulating and developing multi-agent systems. Mesa supports agent-based modeling with customizable agents and environments, making it suitable for research and prototyping.

Applications

MAS are applied in robotics (e.g., swarm robotics), smart grids, traffic management, and distributed AI systems. By leveraging collaboration and competition, MAS enable scalable and flexible solutions to complex real-world problems.

In summary, multi-agent systems represent a powerful extension of AI agents, enabling sophisticated interactions that enhance problem-solving capabilities in dynamic environments. Understanding MAS principles is essential for designing advanced AI-driven applications.

Communication Between Agents: Protocols and Frameworks

Effective communication is a cornerstone of multi-agent systems (MAS), enabling AI agents to exchange information, coordinate actions, and collaborate or compete within shared environments. Designing robust communication mechanisms is essential for building intelligent, responsive, and scalable agent-based applications.

Communication Protocols

Communication between agents can be direct or indirect. Direct communication involves explicit message exchanges, while indirect communication uses the environment as a medium (e.g., stigmergy). Common protocols include request-response, publish-subscribe, and contract-net, each suited to different interaction patterns. Protocols define message formats, sequencing, and error handling to ensure reliable exchanges.
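The publish-subscribe pattern can be sketched in a few lines as an in-process message bus. The `MessageBus` class, the topic name, and the subscribing agents are hypothetical; a distributed system would place a message broker in this role.

```python
from collections import defaultdict


class MessageBus:
    """Minimal in-process publish-subscribe bus."""

    def __init__(self):
        self._subscribers = defaultdict(list)  # topic -> list of callbacks

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)

    def publish(self, topic, message):
        """Deliver `message` to every subscriber of `topic`; return how many received it."""
        subscribers = self._subscribers.get(topic, [])
        for callback in subscribers:
            callback(message)
        return len(subscribers)


bus = MessageBus()
received = []
bus.subscribe("order.created", lambda msg: received.append(("inventory", msg["order_id"])))
bus.subscribe("order.created", lambda msg: received.append(("logistics", msg["order_id"])))
delivered = bus.publish("order.created", {"order_id": 42})
print(delivered, received)
```

The publisher does not need to know which agents are listening, which is what decouples the services from one another.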

Message Content and Semantics

Agents must agree on the meaning of messages, which requires shared ontologies or data schemas. This semantic alignment ensures that information is interpreted correctly, enabling meaningful cooperation. Languages like FIPA ACL (Foundation for Intelligent Physical Agents Agent Communication Language) provide standardized message structures for agent communication.
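A shared message schema might look like the following sketch, which is loosely modeled on a few FIPA ACL message parameters (performative, sender, receiver, content, ontology, conversation identifier). It is not a full implementation of the standard: the `AgentMessage` class, the JSON encoding, and the example ontology name are assumptions made for illustration.

```python
import json
from dataclasses import dataclass, asdict
from enum import Enum


class Performative(str, Enum):
    INFORM = "inform"
    REQUEST = "request"
    AGREE = "agree"
    REFUSE = "refuse"


@dataclass(frozen=True)
class AgentMessage:
    performative: Performative
    sender: str
    receiver: str
    content: dict
    ontology: str          # names the shared vocabulary used in `content`
    conversation_id: str

    def to_json(self):
        data = asdict(self)
        data["performative"] = self.performative.value
        return json.dumps(data, sort_keys=True)

    @classmethod
    def from_json(cls, raw):
        data = json.loads(raw)
        data["performative"] = Performative(data["performative"])
        return cls(**data)


message = AgentMessage(
    performative=Performative.REQUEST,
    sender="forecast_agent",
    receiver="inventory_agent",
    content={"sku": "A-100", "quantity": 25},
    ontology="supply-chain-v1",   # hypothetical ontology name
    conversation_id="conv-001",
)
wire = message.to_json()
print(wire)
print(AgentMessage.from_json(wire) == message)  # True
```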

Frameworks and Tools

Several frameworks facilitate agent communication. SPADE (Smart Python multi-Agent Development Environment) is a popular Python-based platform that supports asynchronous messaging, agent lifecycle management, and protocol implementation. It leverages XMPP (Extensible Messaging and Presence Protocol) for real-time communication.

Example: Simple Agent Communication in Python

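Within a single process, Python’s queue module provides a thread-safe queue that agents running in separate threads can use to exchange messages asynchronously. For distributed systems, message brokers like RabbitMQ or Kafka enable scalable, reliable communication. In the example below, a sender agent puts five messages on a shared queue, and a receiver agent consumes them until the queue has been empty for two seconds.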

```python
import queue
import threading
import time

# Message queue shared between agents
message_queue = queue.Queue()


def agent_sender():
    # Put five messages on the queue, one per second.
    for i in range(5):
        message = f"Message {i} from sender"
        message_queue.put(message)
        print(f"Sent: {message}")
        time.sleep(1)


def agent_receiver():
    # Consume messages until the queue stays empty for two seconds.
    while True:
        try:
            message = message_queue.get(timeout=2)
            print(f"Received: {message}")
            message_queue.task_done()
        except queue.Empty:
            break


sender_thread = threading.Thread(target=agent_sender)
receiver_thread = threading.Thread(target=agent_receiver)

sender_thread.start()
receiver_thread.start()

# Wait for both agents to finish.
sender_thread.join()
receiver_thread.join()
```

Challenges and Best Practices

Designing agent communication requires handling latency, message loss, and synchronization issues. Employing asynchronous messaging, retries, and acknowledgments improves robustness. Clear protocol definitions and modular communication layers facilitate maintenance and scalability.
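Retries and acknowledgments can be combined in a small helper that resends a message until the receiving side confirms it. In this sketch, `send_with_retry` and `FlakyChannel` are hypothetical names, a return value of `True` from `send` stands in for an acknowledgment, and the delays use simple exponential backoff.

```python
import time


def send_with_retry(send, message, max_attempts=4, base_delay=0.01):
    """Call `send(message)` until it returns True; return the number of attempts used."""
    for attempt in range(1, max_attempts + 1):
        if send(message):
            return attempt
        if attempt < max_attempts:
            time.sleep(base_delay * 2 ** (attempt - 1))  # exponential backoff
    raise TimeoutError(f"no acknowledgment after {max_attempts} attempts")


class FlakyChannel:
    """Test double that drops the first `failures` messages, then acknowledges."""

    def __init__(self, failures):
        self.failures = failures
        self.delivered = []

    def send(self, message):
        if self.failures > 0:
            self.failures -= 1
            return False        # message lost, no acknowledgment
        self.delivered.append(message)
        return True             # acknowledged


channel = FlakyChannel(failures=2)
attempts = send_with_retry(channel.send, "status-update")
print(attempts, channel.delivered)  # 3 ['status-update']
```

Because a retried message can arrive more than once when only the acknowledgment was lost, receivers in a real system usually need to handle duplicates as well.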

In conclusion, well-designed communication protocols and frameworks are vital for enabling AI agents to interact effectively, unlocking the full potential of multi-agent systems in complex applications.

Conclusion: Embracing AI Agents in Modern Software Development

The integration of AI agents within microservices architecture marks a transformative shift in how software systems are designed, developed, and deployed. AI agents bring autonomy, intelligence, and adaptability, while microservices provide modularity, scalability, and resilience. Together, they enable the creation of sophisticated applications that can respond dynamically to complex business needs.

Throughout this exploration, we have seen how understanding the fundamentals of AI agents and microservices lays the groundwork for effective integration. Designing AI agents as microservices requires clear responsibilities, robust communication, and careful management of models and data. Python’s rich ecosystem offers powerful tools to implement, train, and deploy these agents efficiently.

Collaboration between developers and data scientists is crucial to bridge the gap between AI research and production-ready software. Multi-agent systems further expand possibilities by enabling agents to collaborate or compete, solving problems that single agents cannot handle alone. Effective communication protocols and frameworks ensure seamless interaction among agents, enhancing system intelligence and flexibility.

While challenges such as complexity, security, and maintenance exist, following best practices and leveraging modern technologies can mitigate risks and maximize benefits. The future of software development is increasingly intertwined with AI agents, promising smarter automation, improved decision-making, and innovative user experiences.

Embracing AI agents as integral components of microservices architectures empowers organizations to stay competitive and agile in a rapidly evolving technological landscape. By investing in knowledge, tools, and collaboration, teams can unlock the full potential of AI-driven software and shape the future of intelligent applications.

Additional Resources and Further Reading

To deepen your understanding of AI agents and their integration with microservices, here are some valuable resources and references:

Books and Articles

“Multi-Agent Systems: Algorithmic, Game-Theoretic, and Logical Foundations” by Yoav Shoham and Kevin Leyton-Brown — A comprehensive introduction to multi-agent systems theory and applications.

“Designing Data-Intensive Applications” by Martin Kleppmann — Covers modern software architecture principles, including microservices and distributed systems.

Research papers on AI agent communication protocols and multi-agent collaboration available through platforms like arXiv and IEEE Xplore.

Online Courses and Tutorials

Coursera’s “AI For Everyone” by Andrew Ng — A beginner-friendly overview of AI concepts.

Udemy and Pluralsight courses on microservices architecture and Python for AI development.

Tutorials on frameworks like FastAPI, Flask, TensorFlow Serving, and SPADE available on official documentation sites and GitHub repositories.

Open-Source Tools and Libraries

Mesa — Python framework for agent-based modeling and simulation.

SPADE — Python multi-agent system development environment.

TensorFlow and PyTorch — Leading machine learning libraries.

FastAPI — Modern, fast web framework for building APIs with Python.

Communities and Forums

Stack Overflow and Reddit’s r/MachineLearning and r/Microservices — For asking questions and sharing knowledge.

AI and microservices-focused groups on LinkedIn and Meetup for networking and learning from practitioners.

Exploring these resources will help you stay updated with the latest trends, best practices, and tools, empowering you to build effective AI agent microservices and contribute to the evolving landscape of intelligent software systems.
