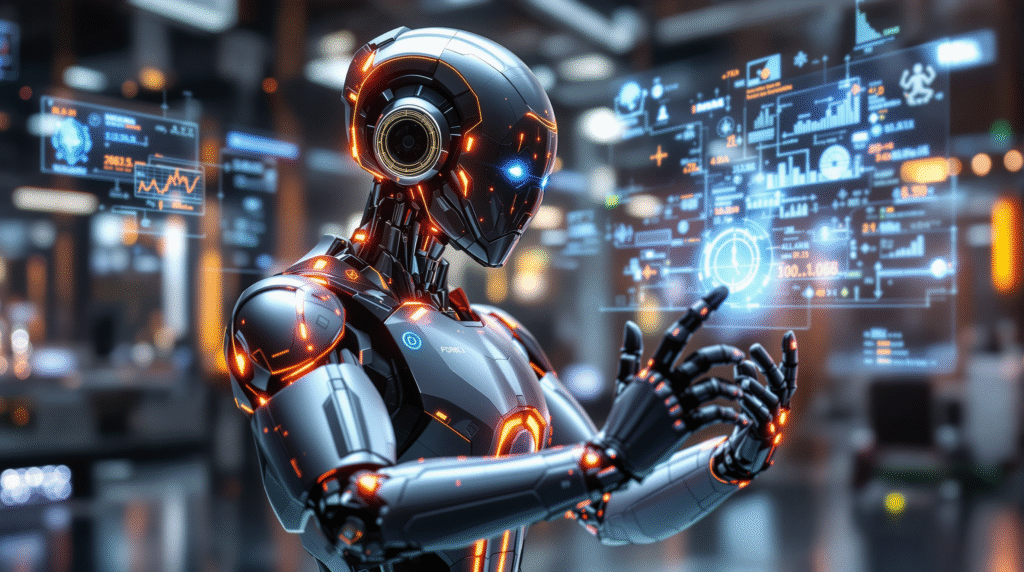

Introduction: Why AI Agents Matter

The modern world of technology increasingly relies on intelligent systems capable of making autonomous decisions, learning from data, and responding to changing conditions. AI agents—programs or systems able to act autonomously within a given environment—are becoming a key element of contemporary applications, from business process automation and computer games to advanced recommendation systems.

The importance of AI agents stems from their ability to solve complex problems in a flexible and scalable way. They relieve people of routine tasks and support quick, accurate decision-making in dynamic situations. As data volumes grow and expectations for software rise, AI agents make it possible to build systems that are more intelligent, adaptive, and efficient.

For developers, this means new opportunities for professional growth and a chance to participate in projects that truly change the way companies and society function. The ability to build AI agents is becoming not just an advantage, but a necessity in the modern developer’s toolkit.

Fundamentals of AI Agents: Concepts and Definitions

To effectively design and implement AI agents, it is essential to start with an understanding of the basic concepts and definitions. An AI agent is a computer program that operates within a specific environment, receives information from it (perception), makes decisions based on that information, and performs appropriate actions. A key feature of an agent is autonomy—it can independently carry out assigned tasks, often in an adaptive manner.

There are different types of agents, depending on their complexity and decision-making methods. The simplest are reactive agents, which respond directly to stimuli from the environment without memory of past states. More advanced are goal-based agents, which strive to achieve specific objectives, and utility-based agents, which choose actions that maximize expected benefit. The most complex are learning agents, which can modify their behavior based on experience.

The environment in which an agent operates can be simple or complex, static or dynamic, and fully or only partially observable. When designing an agent, it is important to consider these characteristics to select the appropriate architecture and algorithms.

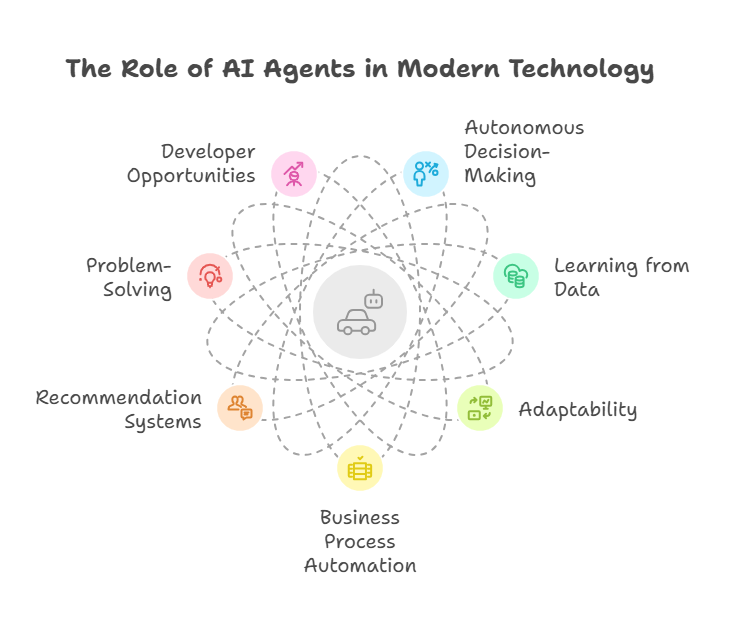

Python Essentials for AI Development

Python has become the language of choice for AI development, thanks to its simplicity, readability, and the vast ecosystem of libraries tailored for data science and machine learning. For developers aiming to build AI agents, mastering Python’s core features is the first step toward effective and efficient implementation.

Key Python skills include understanding data structures such as lists, dictionaries, and sets, which are essential for managing the information that agents process. Proficiency in functions, classes, and object-oriented programming enables developers to design modular and reusable agent architectures. Familiarity with modules and packages allows for the integration of powerful libraries like NumPy for numerical operations, pandas for data manipulation, and scikit-learn for machine learning.

Additionally, working with virtual environments (using tools like venv or conda) helps manage dependencies and maintain clean project structures. Version control with Git is also crucial for tracking changes and collaborating with other developers.

For AI-specific tasks, knowledge of libraries such as TensorFlow, PyTorch, or Keras is invaluable for building and training learning agents. Toolkits like OpenAI Gym (now maintained as Gymnasium) provide ready-made environments for testing and benchmarking agent performance. By combining these Python essentials, developers can quickly prototype, test, and deploy AI agents in a wide range of applications.
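Most of these environment toolkits share one interaction loop: the agent observes, acts, and receives a reward. The sketch below imitates the reset/step interface popularized by Gym and Gymnasium (`reset()` returns `(observation, info)`, `step()` returns `(observation, reward, terminated, truncated, info)`), but the environment itself, `CorridorEnv`, is a hypothetical toy written in plain Python so it runs without third-party packages.

```python
class CorridorEnv:
    """Hypothetical toy environment: walk right along a corridor to reach the exit.

    Mimics the Gymnasium-style interface:
    reset() -> (obs, info) and step(action) -> (obs, reward, terminated, truncated, info).
    """

    def __init__(self, length=5, max_steps=20):
        self.length = length
        self.max_steps = max_steps

    def reset(self, seed=None):
        # `seed` is accepted only for interface parity; this toy environment is deterministic.
        self.position = 0
        self.steps = 0
        return self.position, {}

    def step(self, action):
        # action 0 = move left, action 1 = move right (walls clamp the position)
        move = 1 if action == 1 else -1
        self.position = min(max(self.position + move, 0), self.length - 1)
        self.steps += 1
        terminated = self.position == self.length - 1  # reached the exit
        truncated = self.steps >= self.max_steps        # ran out of time
        reward = 1.0 if terminated else 0.0
        return self.position, reward, terminated, truncated, {}


def run_episode(env, policy, seed=None):
    """Run one episode with `policy(observation) -> action` and return the total reward."""
    obs, info = env.reset(seed=seed)
    total_reward = 0.0
    while True:
        action = policy(obs)
        obs, reward, terminated, truncated, info = env.step(action)
        total_reward += reward
        if terminated or truncated:
            return total_reward


def always_right(obs):
    return 1


print(run_episode(CorridorEnv(), always_right))  # 1.0
```

Because the loop only depends on `reset` and `step`, the same `run_episode` function could drive any environment exposing that interface, which is the main reason such toolkits are convenient for benchmarking.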

Designing Simple AI Agents: From Rules to Actions

The foundation of any AI agent is its ability to perceive its environment and take appropriate actions. Designing simple agents often begins with rule-based systems, where the agent follows predefined instructions in response to specific inputs.

A rule-based agent operates by evaluating a set of “if-then” rules. For example, in a game environment, a simple agent might move left if it detects an obstacle on the right, or pick up an item if it is nearby. This approach is intuitive and easy to implement in Python using conditional statements and functions.

As agents become more complex, developers can introduce state tracking, allowing the agent to remember previous actions or observations. This enables more sophisticated behaviors, such as planning or adapting to changing conditions.

Here is a minimal example of a rule-based agent in Python:

```python
class SimpleAgent:
    def __init__(self):
        self.state = {}  # latest observation; empty until perceive() is called

    def perceive(self, environment):
        # Example: environment is a dictionary with sensor data
        self.state = environment

    def act(self):
        # Rules are checked in priority order; the first match wins
        if self.state.get('obstacle_right'):
            return 'move_left'
        elif self.state.get('item_nearby'):
            return 'pick_up'
        else:
            return 'move_forward'


# Example usage
env = {'obstacle_right': True, 'item_nearby': False}
agent = SimpleAgent()
agent.perceive(env)
action = agent.act()
print(f"Agent action: {action}")  # Agent action: move_left
```

This simple structure can be extended with more rules, memory, or even learning capabilities as needed. By starting with clear rules and gradually increasing complexity, developers can build robust AI agents that serve as the foundation for more advanced, intelligent systems.
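As a sketch of the state tracking mentioned above, the hypothetical `MemoryAgent` below keeps a bounded history of past observations in a `collections.deque` and uses it for one extra rule: if the obstacle on the right has persisted for several consecutive steps, it turns around instead of sidestepping again. The `turn_around` action and the `patience` threshold are illustrative assumptions, not part of any standard design.

```python
from collections import deque


class MemoryAgent:
    """Rule-based agent that also remembers its recent observations."""

    def __init__(self, memory_size=5, patience=3):
        self.memory = deque(maxlen=memory_size)  # oldest observations drop off automatically
        self.patience = patience                 # hypothetical threshold for "stuck"

    def perceive(self, environment):
        self.memory.append(dict(environment))    # store a copy of each observation

    def blocked_for(self):
        """Count how many of the most recent observations in a row report an obstacle."""
        count = 0
        for obs in reversed(self.memory):
            if not obs.get('obstacle_right'):
                break
            count += 1
        return count

    def act(self):
        if not self.memory:
            return 'move_forward'
        current = self.memory[-1]
        if self.blocked_for() >= self.patience:
            return 'turn_around'                 # sidestepping kept failing: change strategy
        if current.get('obstacle_right'):
            return 'move_left'
        if current.get('item_nearby'):
            return 'pick_up'
        return 'move_forward'


agent = MemoryAgent()
for _ in range(3):
    agent.perceive({'obstacle_right': True, 'item_nearby': False})
    print(agent.act())  # prints move_left, move_left, then turn_around
```

The same observation now produces different actions depending on history, which is exactly what a purely reactive agent cannot do.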

State, Environment, and Agent Architectures

A well-designed AI agent must interact intelligently with its environment, which requires a clear understanding of three fundamental concepts: state, environment, and agent architecture.

The state represents all the information the agent needs to make decisions at any given moment. This could include the agent’s current position, recent observations, or internal variables such as goals or energy levels. Managing state effectively allows the agent to remember past actions, adapt to new situations, and plan ahead.

The environment is the external world in which the agent operates. It provides the context for the agent’s actions and delivers feedback through observations or rewards. Environments can be simple (like a grid world) or highly complex (such as a real-time strategy game or a financial market). The environment may be fully observable, where the agent has access to all relevant information, or partially observable, where some aspects are hidden and must be inferred.

Agent architecture refers to the structural design of the agent: how it processes information, makes decisions, and acts. The simplest architecture is a rule-based system, where actions are determined by fixed rules. Common architectural styles include:

Reactive agents, which respond directly to current perceptions without memory.

Deliberative agents, which maintain an internal model of the world and plan actions to achieve specific goals.

Hybrid agents, which combine reactive and deliberative elements for greater flexibility.

Choosing the right architecture depends on the complexity of the task, the nature of the environment, and the desired level of autonomy. By carefully modeling state, environment, and architecture, developers can create agents that are both effective and adaptable.

Reactive agents are among the simplest and most efficient types of AI agents. They operate by mapping current perceptions directly to actions, without relying on memory or internal models. This makes them well suited to tasks where quick, context-dependent responses are required, such as robotics, simple games, or real-time monitoring systems.

To implement a reactive agent in Python, developers typically define a set of rules or policies that determine the agent’s behavior based on sensory input. The agent continuously observes its environment and selects actions accordingly.

Here is a basic example of a reactive agent in Python:

```python
class ReactiveAgent:
    def act(self, perception):
        # Map the current perception directly to an action (no memory)
        if perception == 'danger':
            return 'escape'
        elif perception == 'target':
            return 'approach'
        else:
            return 'wait'


# Example usage
agent = ReactiveAgent()
print(agent.act('danger'))   # Output: escape
print(agent.act('target'))   # Output: approach
print(agent.act('nothing'))  # Output: wait
```

This agent instantly reacts to its current perception: escaping from danger, approaching a target, or waiting if nothing is detected. While reactive agents are limited by their lack of memory and planning, they are highly efficient and robust in environments where rapid, straightforward responses are sufficient.

For more complex scenarios, reactive agents can be extended with additional rules or combined with other architectures to create hybrid systems. However, their simplicity makes them an excellent starting point for developers new to AI agent design.
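One common way to combine architectures is a layered hybrid: a reactive layer handles urgent perceptions immediately, and a deliberative layer follows a plan the rest of the time. The `HybridAgent` below is a hypothetical sketch of that idea; the plan is simply a list of actions supplied by the caller, standing in for the output of a real planner.

```python
from collections import deque


class HybridAgent:
    """Reactive layer for emergencies, deliberative plan otherwise."""

    def __init__(self, plan):
        self.plan = deque(plan)  # actions produced by some (hypothetical) planner

    def reactive_layer(self, perception):
        # Fast, memoryless rules that can override the plan.
        if perception == 'danger':
            return 'escape'
        return None

    def deliberative_layer(self):
        # Follow the plan step by step; wait once it is exhausted.
        if self.plan:
            return self.plan.popleft()
        return 'wait'

    def act(self, perception):
        reflex = self.reactive_layer(perception)
        if reflex is not None:
            return reflex  # the plan is paused, not consumed
        return self.deliberative_layer()


agent = HybridAgent(plan=['north', 'north', 'east'])
for p in ['nothing', 'danger', 'nothing', 'nothing', 'nothing']:
    print(agent.act(p))  # north, escape, north, east, wait
```

Giving the reactive layer priority keeps response time low in emergencies, while the plan resumes where it left off afterwards.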

Building Goal-Based and Utility-Based Agents

As AI agents tackle more complex tasks, simple rule-based or reactive approaches often become insufficient. To address this, developers can design goal-based and utility-based agents, which introduce higher levels of reasoning and adaptability.

A goal-based agent is driven by the pursuit of specific objectives. Instead of merely reacting to the environment, it evaluates possible actions in terms of how well they help achieve its goals. For example, a navigation agent might consider multiple routes and choose the one that leads most efficiently to its destination. This requires the agent to have a representation of its goals and the ability to plan or search for solutions.

Utility-based agents go a step further by quantifying preferences among different outcomes. They assign a utility value—a numerical score—to each possible state or action, allowing the agent to choose the option that maximizes its expected benefit. This approach is especially useful when there are trade-offs or uncertainty, such as balancing speed and safety in autonomous vehicles.
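When outcomes are uncertain, "expected benefit" has a precise meaning: the expected utility of an action is the probability-weighted average of the utilities of its possible outcomes, EU(a) = sum over outcomes s of P(s | a) * U(s). The sketch below assumes each action is described by a hypothetical list of `(probability, outcome)` pairs and uses a simple score-minus-risk utility; the driving numbers are made up to show the speed/safety trade-off.

```python
def utility(outcome):
    # Illustrative utility: reward the score, penalize the risk
    return outcome['score'] - outcome['risk']


def expected_utility(lottery):
    """lottery: list of (probability, outcome) pairs whose probabilities sum to 1."""
    return sum(p * utility(outcome) for p, outcome in lottery)


def choose_action(actions):
    """Return the name of the action with the highest expected utility."""
    return max(actions, key=lambda name: expected_utility(actions[name]))


# Hypothetical example: driving fast usually pays off but is occasionally very costly.
actions = {
    'drive_fast': [(0.9, {'score': 10, 'risk': 2}), (0.1, {'score': 0, 'risk': 50})],
    'drive_safe': [(1.0, {'score': 7, 'risk': 1})],
}
for name, lottery in actions.items():
    print(f"{name}: {expected_utility(lottery):.2f}")
print("chosen:", choose_action(actions))  # chosen: drive_safe
```

Even though `drive_fast` has the better typical outcome (utility 8 versus 6), its rare bad outcome drags its expected utility down to about 2.2, so the agent prefers the safe option.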

In Python, implementing a simple goal-based agent might involve defining a set of possible actions and a function that evaluates progress toward the goal. For a utility-based agent, you would define a utility function and select actions that maximize this value.
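A goal-based agent along these lines can be sketched with three ingredients: a set of actions, a transition model that predicts the next state, and a progress function that measures how far a state is from the goal. The hypothetical `GoalAgent` below uses Manhattan distance on an open grid with no obstacles. With obstacles, a greedy choice like this can get stuck, which is where search-based planning becomes necessary.

```python
ACTIONS = {'up': (0, -1), 'down': (0, 1), 'left': (-1, 0), 'right': (1, 0)}


class GoalAgent:
    def __init__(self, goal):
        self.goal = goal

    def predict(self, state, action):
        # Transition model: where would this action take us?
        dx, dy = ACTIONS[action]
        return (state[0] + dx, state[1] + dy)

    def progress(self, state):
        # Lower is better: Manhattan distance to the goal.
        return abs(state[0] - self.goal[0]) + abs(state[1] - self.goal[1])

    def choose_action(self, state):
        # Pick the action whose predicted result is closest to the goal.
        return min(ACTIONS, key=lambda a: self.progress(self.predict(state, a)))


agent = GoalAgent(goal=(2, 1))
state, path = (0, 0), []
while state != agent.goal:
    action = agent.choose_action(state)
    state = agent.predict(state, action)
    path.append(action)
print(path)  # ['down', 'right', 'right']
```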

Here’s a minimal example of a utility-based agent in Python:

```python
class UtilityAgent:
    def utility(self, state):
        # Example: higher score raises utility, higher risk lowers it
        return state.get('score', 0) - state.get('risk', 0)

    def choose_action(self, possible_states):
        # Select the state with the highest utility
        best_state = max(possible_states, key=self.utility)
        return best_state


# Example usage
states = [
    {'score': 10, 'risk': 2},
    {'score': 7, 'risk': 1},
    {'score': 12, 'risk': 5},
]
agent = UtilityAgent()
best = agent.choose_action(states)
print(f"Best state: {best}")
```

Execution Result:

Best state: {'score': 10, 'risk': 2}

This agent evaluates each possible state and selects the one with the highest utility, balancing score and risk: the utilities are 10 - 2 = 8, 7 - 1 = 6, and 12 - 5 = 7, so the first state wins even though the third has the highest raw score. By adopting goal-based and utility-based approaches, developers can create agents that make more informed, rational decisions in complex environments.

Learning Agents: Integrating Machine Learning

The most advanced AI agents are those that can learn from experience and adapt their behavior over time. Learning agents use machine learning techniques to improve their performance, discover new strategies, and handle environments that are too complex for manual rule design.

A learning agent typically consists of four main components: a learning element (which improves the agent’s performance), a performance element (which selects actions), a critic (which evaluates the agent’s actions), and a problem generator (which suggests exploratory actions). This structure allows the agent to balance exploitation of known strategies with exploration of new possibilities.
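One possible way to map these four components onto code is shown below, on a multi-armed bandit (a single-state problem where each action, or "arm", pays a random reward). In this hypothetical `BanditLearner`, the performance element picks the best-looking arm, the problem generator proposes a random arm, the critic scores each outcome against the current estimate, and the learning element updates that estimate as a running average. The payout probabilities are invented for illustration.

```python
import random


class BanditLearner:
    def __init__(self, n_arms, epsilon=0.1, seed=None):
        self.values = [0.0] * n_arms  # estimated value of each arm
        self.counts = [0] * n_arms    # how often each arm was tried
        self.epsilon = epsilon
        self.rng = random.Random(seed)

    def performance_element(self):
        # Exploit: choose the arm with the highest estimated value.
        return max(range(len(self.values)), key=lambda a: self.values[a])

    def problem_generator(self):
        # Explore: suggest a random arm to gather new experience.
        return self.rng.randrange(len(self.values))

    def select(self):
        if self.rng.random() < self.epsilon:
            return self.problem_generator()
        return self.performance_element()

    def critic(self, arm, reward):
        # Feedback: how much better or worse the outcome was than expected.
        return reward - self.values[arm]

    def learning_element(self, arm, reward):
        self.counts[arm] += 1
        error = self.critic(arm, reward)
        self.values[arm] += error / self.counts[arm]  # incremental mean


# Hypothetical environment: arm i pays 1 with probability PAYOUT[i], else 0.
PAYOUT = [0.2, 0.5, 0.8]
env_rng = random.Random(42)
agent = BanditLearner(n_arms=3, epsilon=0.1, seed=0)
for _ in range(2000):
    arm = agent.select()
    reward = 1.0 if env_rng.random() < PAYOUT[arm] else 0.0
    agent.learning_element(arm, reward)
print([round(v, 2) for v in agent.values], "best arm:", agent.performance_element())
```

The `epsilon` parameter is the exploration/exploitation balance described above: with probability `epsilon` the problem generator overrides the performance element.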

In practice, integrating machine learning into an agent often involves using supervised, unsupervised, or reinforcement learning algorithms. For example, a reinforcement learning agent learns by interacting with its environment, receiving rewards or penalties, and updating its policy to maximize long-term reward.

Here’s a simple example of a learning agent using Q-learning, a popular reinforcement learning algorithm, with Python and NumPy:

```python
import numpy as np


class QLearningAgent:
    def __init__(self, n_states, n_actions, alpha=0.1, gamma=0.9, epsilon=0.1):
        self.q_table = np.zeros((n_states, n_actions))  # Q(s, a) estimates
        self.alpha = alpha      # learning rate
        self.gamma = gamma      # discount factor
        self.epsilon = epsilon  # exploration rate

    def choose_action(self, state):
        # Epsilon-greedy action selection
        if np.random.rand() < self.epsilon:
            return np.random.randint(self.q_table.shape[1])  # Explore
        return np.argmax(self.q_table[state])  # Exploit

    def learn(self, state, action, reward, next_state):
        # Q-learning update: move Q(s, a) toward r + gamma * max_a' Q(s', a')
        predict = self.q_table[state, action]
        target = reward + self.gamma * np.max(self.q_table[next_state])
        self.q_table[state, action] += self.alpha * (target - predict)


# Example usage: a single learning step
agent = QLearningAgent(n_states=5, n_actions=2)
state = 0
action = agent.choose_action(state)
agent.learn(state, action, reward=1, next_state=1)
print(agent.q_table)
```

Repeated over many interactions with an environment, this update lets the agent learn through trial and error which actions maximize cumulative reward. As the Q-table improves, so does the policy derived from it, enabling the agent to handle increasingly complex tasks.

By integrating machine learning, developers empower agents to adapt, generalize, and thrive in dynamic, unpredictable environments—unlocking the full potential of AI in real-world applications.
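The single update in the Q-learning example only nudges one table entry; the policy improves only after many episodes. The sketch below trains tabular Q-learning in plain Python on a hypothetical five-state chain (start at state 0, reward 1 for reaching state 4, action 0 moves left and action 1 moves right). Unlike the one-step example, it stops bootstrapping at the terminal state, so the target there is just the reward, and it breaks ties between equal Q-values randomly so early episodes are not biased toward one direction.

```python
import random

N_STATES, N_ACTIONS, GOAL = 5, 2, 4  # hypothetical chain: states 0..4, goal at 4


def step(state, action):
    """Chain dynamics: action 0 moves left, action 1 moves right."""
    next_state = max(state - 1, 0) if action == 0 else min(state + 1, GOAL)
    done = next_state == GOAL
    return next_state, (1.0 if done else 0.0), done


def train(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0] * N_ACTIONS for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done, steps = 0, False, 0
        while not done and steps < 100:  # step cap keeps every episode finite
            if rng.random() < epsilon:
                action = rng.randrange(N_ACTIONS)  # explore
            else:
                best = max(q[state])
                # exploit, breaking ties between equally good actions at random
                action = rng.choice([a for a in range(N_ACTIONS) if q[state][a] == best])
            next_state, reward, done = step(state, action)
            # No bootstrapping from a terminal state
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state, steps = next_state, steps + 1
    return q


q = train()
policy = [max(range(N_ACTIONS), key=lambda a: q[s][a]) for s in range(GOAL)]
print(policy)  # [1, 1, 1, 1]: "move right" in every non-terminal state
```

After training, the greedy policy read off the Q-table moves right in every state, and the learned values approach the discounted returns 0.729, 0.81, 0.9, and 1.0 for the "right" action in states 0 to 3.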

Multi-Agent Systems: Collaboration and Competition

As tasks become increasingly complex, a single AI agent may not be sufficient to solve them effectively. In such cases, multi-agent systems (MAS) are used, where multiple agents interact or compete within the same environment. Each agent can have its own goals, strategies, and level of knowledge, and their interactions lead to the emergence of complex behaviors at the system level.

Collaboration between agents enables information sharing, coordinated actions, and the achievement of common goals that would be difficult for a single agent to accomplish. Examples include robots working together to build a structure or virtual assistants dividing tasks within a large organization. On the other hand, competition arises when agents have conflicting interests, such as in strategy games or market simulations.

Designing multi-agent systems requires careful consideration of communication mechanisms, negotiation, and group decision-making. In Python, libraries such as Mesa can be used to simulate multi-agent environments, and custom communication protocols can be built on top of network sockets or message queues.
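To make the idea of a simulated multi-agent environment concrete, here is a dependency-free sketch in the spirit of the "Boltzmann wealth" model often used in agent-based modeling tutorials: every agent starts with one unit of wealth, and at each tick the agents are activated in a random order and each gives one unit to a randomly chosen peer. The class names are hypothetical, and this is not the Mesa API; it only mirrors the general model/agent/step structure such frameworks use.

```python
import random


class WealthAgent:
    def __init__(self, unique_id, model):
        self.unique_id = unique_id
        self.model = model
        self.wealth = 1

    def step(self):
        # Interaction rule: give one unit to a random other agent, if we have any.
        if self.wealth > 0:
            others = [a for a in self.model.agents if a is not self]
            partner = self.model.rng.choice(others)
            partner.wealth += 1
            self.wealth -= 1


class WealthModel:
    def __init__(self, n_agents, seed=None):
        self.rng = random.Random(seed)
        self.agents = [WealthAgent(i, self) for i in range(n_agents)]

    def step(self):
        # Activate every agent once per tick, in a freshly shuffled order.
        order = self.agents[:]
        self.rng.shuffle(order)
        for agent in order:
            agent.step()


model = WealthModel(n_agents=10, seed=1)
for _ in range(100):
    model.step()
print(sorted(a.wealth for a in model.agents))
```

Every agent follows the same simple, fair-looking rule, yet the wealth distribution tends to become unequal over time: a small example of system-level behavior emerging from local interactions.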

Properly designed agent interactions allow for the creation of systems that are fault-tolerant, scalable, and capable of solving problems beyond the reach of individual agents. Multi-agent systems are used in logistics, smart cities, computer games, and social research.

Communication Between Agents: Protocols and Frameworks

Effective communication is a key element of multi-agent systems. Agents must exchange information, coordinate actions, and negotiate decisions to achieve their individual or group goals. Communication can be direct (agent-to-agent) or indirect, for example through a shared environment or a shared "blackboard" that all agents can read from and write to.

In practice, agent communication is based on established protocols—sets of rules that define how to send messages, interpret them, and respond. Example protocols include request-response, publish-subscribe, and auctions for resource allocation.
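As an illustration of the auction protocol, the sketch below allocates tasks with a single-round sealed-bid auction, loosely in the style of the contract-net idea: a manager announces a task, each agent submits one bid with its estimated cost, and the lowest bidder wins. The agents, their positions, and the cost model (distance to the task plus a penalty for tasks already won) are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Bidder:
    name: str
    position: int
    tasks: list = field(default_factory=list)

    def bid(self, task_location):
        # Cost estimate: distance to the task plus a load penalty for tasks already won.
        return abs(self.position - task_location) + 2 * len(self.tasks)


def run_auction(task_location, bidders):
    """Announce a task, collect one sealed bid per agent, award it to the lowest bid."""
    bids = {b.name: b.bid(task_location) for b in bidders}
    # Ties go to the alphabetically first name so the outcome is deterministic.
    winner = min(bidders, key=lambda b: (bids[b.name], b.name))
    winner.tasks.append(task_location)
    return winner.name, bids


agents = [Bidder('A', position=0), Bidder('B', position=5), Bidder('C', position=9)]
for task in [1, 6, 2, 8]:
    winner, bids = run_auction(task, agents)
    print(f"task at {task}: bids={bids} -> {winner}")
```

The load penalty is what spreads work across agents: without it, a single well-placed agent could win every nearby task.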

In Python, agent communication can be implemented in many ways—from simple queues (such as queue.Queue), through network communication (such as socket), to advanced frameworks like SPADE (Smart Python Agent Development Environment), which provides ready-made tools for building agents that communicate using the FIPA-ACL standard.

Here is a simple example of communication between two agents in Python using a queue:

```python
import queue


class Agent:
    def __init__(self, name, inbox):
        self.name = name
        self.inbox = inbox  # queue.Queue holding (sender, message) tuples

    def send(self, message, recipient):
        recipient.inbox.put((self.name, message))

    def receive(self):
        # Non-blocking read: do nothing if the inbox is empty
        try:
            sender, message = self.inbox.get_nowait()
            print(f"{self.name} received from {sender}: {message}")
        except queue.Empty:
            pass


# Creating agents and communication queues
inbox_a = queue.Queue()
inbox_b = queue.Queue()
agent_a = Agent('AgentA', inbox_a)
agent_b = Agent('AgentB', inbox_b)

# AgentA sends a message to AgentB
agent_a.send("Hello, AgentB!", agent_b)
agent_b.receive()  # AgentB received from AgentA: Hello, AgentB!
```

With the right protocols and frameworks, agents can efficiently collaborate, share knowledge, and make decisions in dynamic, distributed environments. This paves the way for building advanced, scalable AI systems that can operate in real-world applications and simulations.
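The queue example is synchronous: `receive` is called by hand right after `send`. In a running system each agent usually has its own loop. The sketch below assumes each agent runs in its own thread and implements a simple request-response exchange over `queue.Queue`, using a blocking `get` with a timeout and a `None` sentinel to shut the responder down. The message format (a dict with `sender`, `type`, and `content` keys) is an illustrative assumption, not a standard such as FIPA-ACL.

```python
import queue
import threading


def responder(inbox, outboxes):
    """Answer 'request' messages until a None sentinel arrives."""
    while True:
        msg = inbox.get()
        if msg is None:
            break
        if msg['type'] == 'request':
            reply = {'sender': 'Responder', 'type': 'inform',
                     'content': msg['content'].upper()}  # trivial "service"
            outboxes[msg['sender']].put(reply)


def requester(name, my_inbox, responder_inbox, text, results):
    responder_inbox.put({'sender': name, 'type': 'request', 'content': text})
    try:
        reply = my_inbox.get(timeout=2.0)  # don't wait forever for an answer
        results[name] = reply['content']
    except queue.Empty:
        results[name] = None


responder_inbox = queue.Queue()
inboxes = {'AgentA': queue.Queue(), 'AgentB': queue.Queue()}
results = {}

server = threading.Thread(target=responder, args=(responder_inbox, inboxes))
server.start()
clients = [
    threading.Thread(target=requester,
                     args=(name, inboxes[name], responder_inbox, f"hello from {name}", results))
    for name in inboxes
]
for t in clients:
    t.start()
for t in clients:
    t.join()
responder_inbox.put(None)  # tell the responder to stop
server.join()
print(results)
```

The timeout on the requester side matters in practice: a request-response protocol needs a defined behavior for the case where no response ever arrives.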

Testing and Debugging AI Agents

Testing and debugging are essential steps in the development of reliable AI agents. Unlike traditional software, AI agents often operate in dynamic, unpredictable environments and may exhibit emergent behaviors that are difficult to anticipate. This makes systematic testing and robust debugging practices especially important.

The first step is to design comprehensive test scenarios that reflect both typical and edge-case situations the agent might encounter. For rule-based or reactive agents, this might involve simulating various environmental inputs and verifying that the agent’s responses match expectations. For learning agents, it’s crucial to monitor not only their performance metrics (such as accuracy or reward) but also their behavior over time to detect issues like overfitting, bias, or unintended strategies.

Debugging AI agents often requires specialized tools. Logging is invaluable: by recording the agent’s decisions, state transitions, and received rewards, developers can trace the source of unexpected behaviors. Visualization tools, such as plotting learning curves or agent trajectories, help identify patterns and anomalies.
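A lightweight way to get such a decision trace is Python's standard `logging` module. The sketch below re-expresses the earlier `ReactiveAgent` rules as a lookup table in a hypothetical `LoggedAgent` that logs every perception and chosen action, plus a warning whenever no rule matched. The in-memory `ListHandler` is an assumption made so the trace is easy to inspect; a real project would more likely log to a file or a structured log sink.

```python
import logging


class ListHandler(logging.Handler):
    """Keeps formatted log lines in memory (handy for tests and notebooks)."""

    def __init__(self):
        super().__init__()
        self.lines = []

    def emit(self, record):
        self.lines.append(self.format(record))


logger = logging.getLogger("agent.demo")
logger.setLevel(logging.DEBUG)
logger.propagate = False  # keep the demo output out of the root logger
handler = ListHandler()
handler.setFormatter(logging.Formatter("%(levelname)s step=%(step)d %(message)s"))
logger.addHandler(handler)


class LoggedAgent:
    RULES = {'danger': 'escape', 'target': 'approach'}

    def __init__(self):
        self.step = 0

    def act(self, perception):
        action = self.RULES.get(perception, 'wait')
        self.step += 1
        # `extra` attaches the step number to the log record for the formatter.
        logger.debug("perception=%r action=%r", perception, action, extra={'step': self.step})
        if perception not in self.RULES:
            logger.warning("no rule matched %r, fell back to 'wait'", perception,
                           extra={'step': self.step})
        return action


agent = LoggedAgent()
for p in ['target', 'danger', 'smoke']:
    agent.act(p)
print("\n".join(handler.lines))
```

Logging the fallback case separately at `WARNING` level makes it easy to filter for perceptions the rule set does not cover, which is often where unexpected behavior starts.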

In Python, frameworks like unittest or pytest can be used for automated testing, while libraries such as TensorBoard or Matplotlib support visualization and monitoring. Here’s a simple example of testing a reactive agent’s behavior:

```python
import unittest


class ReactiveAgent:
    def act(self, perception):
        if perception == 'danger':
            return 'escape'
        elif perception == 'target':
            return 'approach'
        else:
            return 'wait'


class TestReactiveAgent(unittest.TestCase):
    def setUp(self):
        # A fresh agent for every test
        self.agent = ReactiveAgent()

    def test_escape(self):
        self.assertEqual(self.agent.act('danger'), 'escape')

    def test_approach(self):
        self.assertEqual(self.agent.act('target'), 'approach')

    def test_wait(self):
        self.assertEqual(self.agent.act('nothing'), 'wait')


if __name__ == '__main__':
    unittest.main()
```

By combining automated tests, thorough logging, and visualization, developers can ensure that AI agents behave as intended and are robust to unexpected situations. This is especially important as agents are deployed in increasingly complex and critical applications.

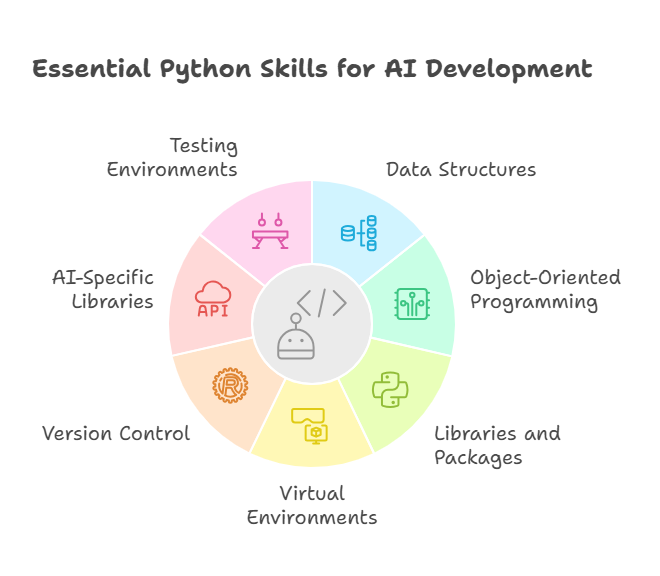

Ethics and Responsibility in AI Agent Development

As AI agents become more autonomous and influential in society, ethical considerations and responsible development practices are paramount. Developers must ensure that agents act in ways that are safe, fair, and aligned with human values.

One of the primary concerns is transparency—understanding how and why an agent makes its decisions. This is especially important for learning agents, whose behavior may not be easily interpretable. Providing clear documentation, explainable models, and audit trails helps build trust and accountability.

Fairness and bias are also critical. AI agents trained on biased data can perpetuate or even amplify existing inequalities. Developers should use diverse, representative datasets and regularly audit agent behavior for signs of discrimination or unfair treatment.

Privacy and security must be safeguarded, especially when agents handle sensitive data or operate in critical systems. This includes implementing robust data protection measures, access controls, and monitoring for misuse.

Finally, developers should consider the broader social and economic impacts of deploying AI agents. This includes anticipating potential job displacement, ensuring agents augment rather than replace human capabilities, and engaging stakeholders in the design process.

Case Study: Building a Simple AI Agent in Python

To illustrate the practical aspects of AI agent development, let’s walk through a case study of building a simple agent in Python. Suppose we want to create an agent that navigates a grid world to reach a goal while avoiding obstacles. This example demonstrates the core steps: defining the environment, implementing the agent’s logic, and running a simulation.

First, we define a minimal grid environment. The agent can move up, down, left, or right, and its objective is to reach a specific cell. Obstacles are represented as blocked cells.

Here’s a basic implementation:

```python
import random


class GridWorld:
    def __init__(self, size, goal, obstacles):
        self.size = size
        self.goal = goal
        self.obstacles = set(obstacles)
        self.agent_pos = (0, 0)  # the agent starts in the top-left corner

    def is_valid(self, pos):
        x, y = pos
        return 0 <= x < self.size and 0 <= y < self.size and pos not in self.obstacles

    def move_agent(self, action):
        x, y = self.agent_pos
        moves = {'up': (x, y - 1), 'down': (x, y + 1), 'left': (x - 1, y), 'right': (x + 1, y)}
        new_pos = moves.get(action, self.agent_pos)
        # Moves into walls or obstacles are ignored; the agent stays put
        if self.is_valid(new_pos):
            self.agent_pos = new_pos
        return self.agent_pos

    def is_goal(self):
        return self.agent_pos == self.goal


class SimpleAgent:
    def __init__(self, actions):
        self.actions = actions

    def choose_action(self):
        return random.choice(self.actions)  # random policy: ignores the state entirely


# Example usage
env = GridWorld(size=5, goal=(4, 4), obstacles=[(1, 1), (2, 2), (3, 3)])
agent = SimpleAgent(actions=['up', 'down', 'left', 'right'])
steps = 0
while not env.is_goal() and steps < 50:
    action = agent.choose_action()
    env.move_agent(action)
    steps += 1

# The loop may stop at the 50-step limit before the goal is reached
status = "reached the goal" if env.is_goal() else "stopped"
print(f"Agent {status} at position {env.agent_pos} after {steps} steps.")
```

This simple agent selects random actions and tries to reach the goal within a 50-step budget. While not optimal, this example provides a foundation for experimenting with more advanced strategies, such as pathfinding algorithms or reinforcement learning. By iteratively improving the agent's logic, developers can observe how different approaches affect performance and robustness.
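As a first step beyond random moves, the agent can plan a shortest path before moving. The sketch below uses breadth-first search, one classic pathfinding algorithm (A* would be a natural next step), with the same grid conventions as the `GridWorld` example: `(x, y)` cells, `up` decreasing `y`, and blocked cells in a set. It is written as a standalone, hypothetical `plan_path` function so it does not depend on the `GridWorld` class.

```python
from collections import deque

MOVES = {'up': (0, -1), 'down': (0, 1), 'left': (-1, 0), 'right': (1, 0)}


def plan_path(size, start, goal, obstacles):
    """Breadth-first search; returns a shortest list of actions, or None if unreachable."""
    blocked = set(obstacles)
    frontier = deque([start])
    came_from = {start: None}  # cell -> (previous cell, action taken)
    while frontier:
        cell = frontier.popleft()
        if cell == goal:
            # Walk back to the start, collecting actions in reverse order
            actions = []
            while came_from[cell] is not None:
                cell, action = came_from[cell]
                actions.append(action)
            return actions[::-1]
        x, y = cell
        for action, (dx, dy) in MOVES.items():
            nxt = (x + dx, y + dy)
            if (0 <= nxt[0] < size and 0 <= nxt[1] < size
                    and nxt not in blocked and nxt not in came_from):
                came_from[nxt] = (cell, action)
                frontier.append(nxt)
    return None


plan = plan_path(size=5, start=(0, 0), goal=(4, 4), obstacles=[(1, 1), (2, 2), (3, 3)])
print(len(plan), plan)  # 8 moves; the exact order depends on the search order of MOVES
```

Because every planned move stays on the grid and avoids obstacles, replaying these actions through `GridWorld.move_agent` reaches the goal in eight moves, compared with the open-ended random walk of `SimpleAgent`.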

Resources and Further Learning

The field of AI agent development is vast and rapidly evolving. For those who want to deepen their knowledge and skills, a wealth of resources is available—ranging from online courses and textbooks to open-source projects and research papers.

For foundational knowledge, books like “Artificial Intelligence: A Modern Approach” by Russell and Norvig offer comprehensive coverage of agent architectures, search algorithms, and learning methods. Online platforms such as Coursera, edX, and Udacity provide hands-on courses in AI, machine learning, and reinforcement learning, often with practical coding assignments.

Open-source libraries and frameworks are invaluable for experimentation. Python packages like gymnasium (formerly OpenAI Gym), Mesa for agent-based modeling, and SPADE for multi-agent systems allow developers to build, test, and deploy agents in a variety of environments. Exploring repositories on GitHub can also provide inspiration and real-world examples.

Staying up to date with the latest research is important, as the field is constantly advancing. Reading papers from conferences like NeurIPS, ICML, or AAMAS, and following blogs or newsletters from leading AI labs, helps developers stay informed about new techniques and best practices.

Finally, engaging with the AI community—through forums, meetups, or online groups—can accelerate learning, provide support, and open doors to collaboration. By leveraging these resources, developers can continually expand their expertise and contribute to the exciting future of AI agent technology.

Conclusion: The Future of AI Agents in Software Development

AI agents are rapidly transforming the landscape of software development, offering new levels of automation, intelligence, and adaptability. As we have seen throughout this guide, agents can take on a variety of roles—from simple rule-based assistants to sophisticated, learning-driven collaborators capable of navigating complex environments and making autonomous decisions.

The integration of AI agents into development workflows is not just a technical upgrade; it represents a fundamental shift in how software is designed, built, and maintained. Agents can automate repetitive tasks, assist in debugging, optimize code, and even suggest innovative solutions that might escape human attention. This allows developers to focus on higher-level problem-solving and creativity, while routine or data-intensive tasks are handled by intelligent systems.

Looking ahead, the future of AI agents in software development is bright and full of potential. Advances in machine learning, natural language processing, and multi-agent systems will enable agents to become even more capable, context-aware, and collaborative. We can expect to see agents that not only support developers but also work alongside them as true partners—learning from feedback, adapting to new challenges, and contributing to the evolution of software engineering practices.

However, this future also brings new responsibilities. Developers must remain vigilant about the ethical implications of autonomous systems, ensuring transparency, fairness, and security in every stage of agent development and deployment. By embracing best practices and fostering a culture of responsible innovation, the software community can harness the power of AI agents to build better, safer, and more inclusive technologies.
