Introduction to Model Optimization and Quantization

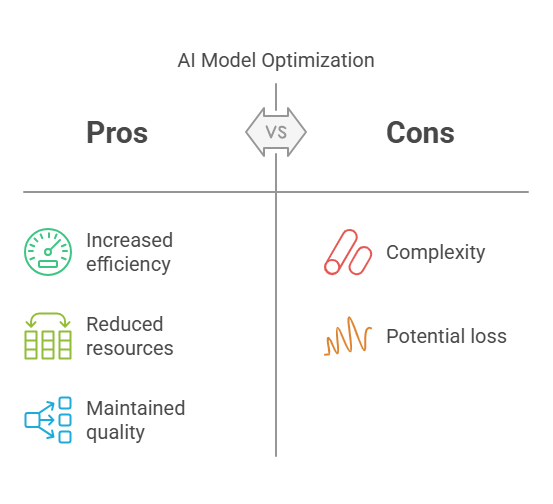

Optimization and quantization of artificial intelligence models are key topics that enable increased computational efficiency and reduced hardware resource requirements. In the era of growing AI popularity, both in industrial and consumer applications, the ability to run advanced models on devices with limited computational power—such as smartphones, IoT devices, or embedded systems—has become increasingly important. Optimization and quantization make it possible to deploy AI models in such environments without significant loss of prediction quality.

1.1. The Importance of Computational Efficiency in AI

The computational efficiency of AI models has a direct impact on their practical application. Models with a large number of parameters and high computational complexity require powerful hardware resources, which can be a barrier to their deployment on end devices or in resource-constrained environments. Computational efficiency translates into shorter inference times, lower energy consumption, and reduced operational costs. Through optimization and quantization, it is possible to significantly reduce model size and speed up their operation, opening up new possibilities for real-world AI applications.

1.2. Overview of Optimization and Quantization Techniques

AI model optimization encompasses a variety of techniques aimed at reducing the number of mathematical operations, decreasing the number of parameters, and simplifying neural network architectures. The most popular methods include pruning, knowledge distillation, weight sharing, and designing models with specific hardware in mind (hardware-aware design).

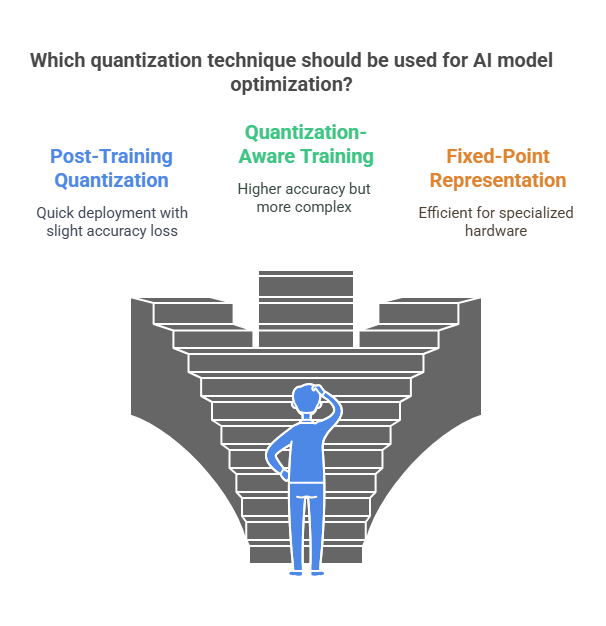

Quantization, on the other hand, involves representing model weights and activations with fewer bits, which further reduces memory and computational requirements. There are two main types: post-training quantization and quantization-aware training. Both techniques aim to enable AI models to run on a wide range of devices while maintaining high prediction quality.

Fundamentals of Model Optimization

Model optimization is a crucial aspect of deploying artificial intelligence solutions efficiently, especially when targeting environments with limited computational resources. By applying various optimization techniques, developers can significantly reduce the size and complexity of neural networks, making them faster and more cost-effective without sacrificing much accuracy. Below, we explore the most important optimization strategies used in modern AI development.

2.1. Pruning Neural Networks

Pruning is a technique that involves removing unnecessary or less important parameters (such as weights or neurons) from a neural network. The main goal is to simplify the model by eliminating redundancy, which leads to a smaller and faster network. There are several approaches to pruning, including weight pruning (removing individual connections with small weights), neuron pruning (removing entire neurons or filters), and structured pruning (removing groups of parameters in a systematic way). Pruned models often require fine-tuning to recover any lost accuracy, but the resulting networks are typically much more efficient and suitable for deployment on edge devices.

2.2. Knowledge Distillation

Knowledge distillation is an optimization method where a smaller, simpler model (the „student”) is trained to replicate the behavior of a larger, more complex model (the „teacher”). The teacher model is first trained on the original dataset, and then the student model learns not only from the ground truth labels but also from the teacher’s predictions. This process allows the student model to capture the essential knowledge and generalization capabilities of the teacher, resulting in a lightweight model that performs comparably well but is much more efficient in terms of computation and memory usage.

2.3. Weight Sharing and Parameter Reduction

Weight sharing is a technique that reduces the number of unique parameters in a neural network by forcing certain weights to share the same value. This can be achieved through methods such as hashing or clustering, where multiple connections in the network are assigned to the same parameter group. Parameter reduction can also involve techniques like low-rank factorization, where large weight matrices are decomposed into smaller, more manageable components. These strategies help to compress the model, decrease memory usage, and speed up inference, all while maintaining acceptable levels of accuracy.

2.4. Hardware-Aware Model Design

Designing models with specific hardware constraints in mind is an increasingly important aspect of optimization. Hardware-aware model design involves tailoring neural network architectures to leverage the strengths and mitigate the limitations of the target deployment platform, such as CPUs, GPUs, FPGAs, or specialized AI accelerators. This can include using operations that are more efficiently executed on the chosen hardware, minimizing memory access, and optimizing data flow. By considering hardware characteristics during the design phase, developers can create models that achieve optimal performance and efficiency in real-world applications.

Quantization Techniques in AI

Quantization is a powerful set of techniques used to reduce the computational and memory requirements of artificial intelligence models. By representing numbers with lower precision, quantization enables models to run efficiently on a wide range of hardware, including mobile devices and embedded systems. While quantization can introduce some loss of accuracy, careful application of these methods often results in models that are both lightweight and highly effective.

3.1. Post-Training Quantization

Post-training quantization is a technique applied after a model has been fully trained. In this approach, the weights and activations of the neural network are converted from high-precision formats, such as 32-bit floating-point, to lower-precision formats, like 8-bit integers. This process can be performed quickly and does not require retraining the model, making it an attractive option for rapid deployment. Although post-training quantization may lead to a slight drop in accuracy, it significantly reduces model size and speeds up inference, especially on hardware that supports low-precision arithmetic.

3.2. Quantization-Aware Training

Quantization-aware training (QAT) is a more advanced technique that incorporates quantization effects during the training process itself. In QAT, the model is trained to anticipate and compensate for the reduced precision of weights and activations. This is achieved by simulating quantization during forward and backward passes, allowing the model to adapt its parameters accordingly. As a result, quantization-aware training typically yields models that maintain higher accuracy after quantization compared to post-training methods. QAT is especially useful for applications where maintaining model performance is critical.

3.3. Fixed-Point vs. Floating-Point Representations

The choice between fixed-point and floating-point representations is central to quantization. Floating-point formats, such as FP32 or FP16, offer a wide dynamic range and are commonly used during model training. Fixed-point formats, like INT8 or INT4, use a fixed number of bits to represent values, which greatly reduces memory usage and computational complexity. Fixed-point arithmetic is often faster and more energy-efficient, making it ideal for deployment on specialized hardware. However, it requires careful calibration to ensure that the reduced precision does not significantly impact model accuracy.

3.4. Precision Trade-offs and Their Impact

Quantization involves balancing the trade-off between model efficiency and accuracy. Lowering the precision of weights and activations can lead to faster inference and smaller model sizes, but it may also introduce quantization errors that degrade performance. The impact of these trade-offs depends on the specific model architecture, the data it processes, and the target hardware. Developers must carefully evaluate the effects of different quantization strategies, often using validation datasets to measure accuracy loss and determine the optimal precision level for their application.

Practical Implementation

The practical implementation of optimization and quantization techniques is a crucial step in bringing efficient AI models from research into real-world applications. This process involves not only applying the right methods but also selecting appropriate tools, integrating optimized models into production environments, and learning from real-world case studies. Below, we explore the key aspects of practical implementation.

4.1. Tools and Frameworks for Optimization and Quantization

A wide range of tools and frameworks are available to support the optimization and quantization of AI models. Popular deep learning libraries such as TensorFlow, PyTorch, and ONNX provide built-in functionalities and extensions for model compression. For example, TensorFlow Lite and PyTorch Mobile are designed specifically for deploying lightweight models on mobile and embedded devices, offering features like post-training quantization and quantization-aware training. ONNX Runtime supports model optimization across different platforms and hardware accelerators, making it easier to deploy models in diverse environments.

In addition to these mainstream libraries, there are specialized tools such as NVIDIA TensorRT, which focuses on optimizing models for GPU inference, and OpenVINO, which is tailored for Intel hardware. These tools often include advanced features like layer fusion, operator reordering, and hardware-specific optimizations that further enhance model performance. By leveraging these frameworks, developers can automate many aspects of the optimization and quantization process, ensuring that their models are both efficient and compatible with the target deployment platform.

4.2. Integrating Optimized Models into Production

Integrating optimized and quantized models into production systems requires careful planning and testing. The process typically starts with exporting the trained and optimized model to a format supported by the target environment, such as TensorFlow Lite’s .tflite or ONNX’s .onnx format. Next, developers must ensure that the deployment pipeline supports the necessary runtime libraries and hardware accelerators to fully utilize the benefits of optimization.

Testing is a critical phase, as it is essential to verify that the optimized model maintains acceptable accuracy and performance under real-world conditions. This may involve running inference on representative datasets, monitoring latency and throughput, and checking for any discrepancies in predictions compared to the original model. In some cases, additional fine-tuning or calibration may be required to address issues that arise during deployment.

4.3. Case Studies: Real-World Applications

Numerous real-world applications demonstrate the effectiveness of optimization and quantization techniques. For instance, in mobile image recognition, quantized models enable real-time inference on smartphones without draining battery life. In industrial IoT, optimized models allow for predictive maintenance and anomaly detection directly on edge devices, reducing the need for constant cloud connectivity. Healthcare applications benefit from efficient models that can run on portable diagnostic devices, providing rapid results in remote or resource-limited settings.

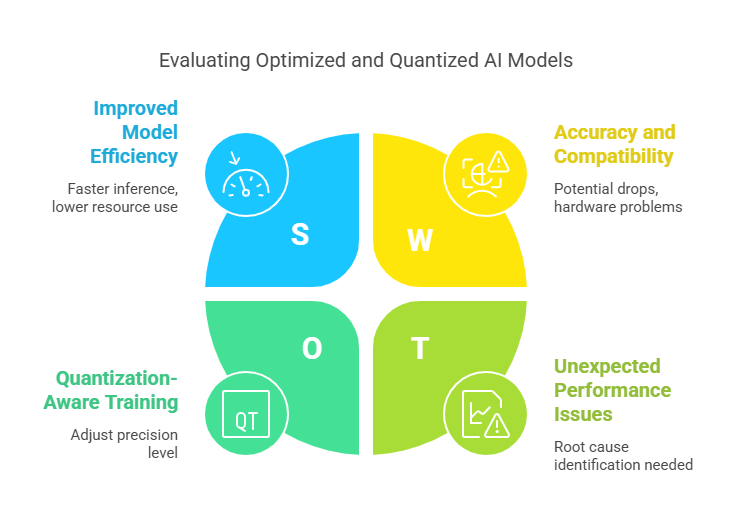

Evaluating Performance and Accuracy

Evaluating the performance and accuracy of optimized and quantized AI models is a critical step in the deployment process. While optimization and quantization can significantly improve efficiency, they may also introduce changes that affect the model’s predictive quality. Careful assessment ensures that the benefits of these techniques do not come at the cost of unacceptable accuracy loss or operational issues. This section explores the main aspects of evaluating optimized models.

5.1. Benchmarking Optimized and Quantized Models

Benchmarking involves systematically measuring the performance of AI models under various conditions. For optimized and quantized models, key metrics include inference speed (latency), throughput (number of inferences per second), and resource usage (such as memory and power consumption). These metrics are compared to those of the original, unoptimized model to quantify the improvements. Benchmarking should be performed on the target hardware, as results can vary significantly depending on the device’s capabilities. It is also important to use representative datasets that reflect real-world usage, ensuring that the benchmarks are meaningful and relevant.

5.2. Balancing Efficiency and Model Quality

One of the main challenges in model optimization and quantization is finding the right balance between efficiency and predictive quality. Reducing model size and precision can lead to faster inference and lower resource consumption, but may also introduce quantization errors or loss of information. Developers must evaluate how these changes affect accuracy, precision, recall, and other relevant metrics. In some cases, a small drop in accuracy may be acceptable if it results in significant gains in efficiency, especially for applications where speed and resource constraints are critical. The decision should be guided by the specific requirements and constraints of the intended application.

5.3. Troubleshooting Common Issues

During the evaluation process, developers may encounter issues such as unexpected drops in accuracy, increased latency, or compatibility problems with certain hardware. Troubleshooting involves identifying the root causes of these issues and applying targeted solutions. For example, if quantization leads to significant accuracy loss, it may be necessary to use quantization-aware training or adjust the precision level. If performance gains are not as expected, further optimization or hardware-specific tuning may be required. Comprehensive testing and iterative refinement are essential to ensure that the final model meets both efficiency and quality standards.

Future Trends and Challenges

The field of model optimization and quantization is rapidly evolving, driven by the growing demand for efficient AI solutions across diverse industries. As artificial intelligence becomes more deeply integrated into everyday devices and critical systems, new trends and challenges are emerging that shape the future of efficient AI deployment. Understanding these directions is essential for developers, researchers, and organizations aiming to stay at the forefront of AI technology.

6.1. Advances in Hardware for Efficient AI

One of the most significant trends is the development of specialized hardware designed to accelerate AI workloads while minimizing energy consumption and latency. Modern AI accelerators, such as Tensor Processing Units (TPUs), Graphics Processing Units (GPUs), and dedicated edge AI chips, are increasingly optimized for low-precision arithmetic and parallel processing. These advancements enable the deployment of highly optimized and quantized models on a wide range of devices, from powerful data centers to tiny edge sensors.

Emerging hardware platforms are also introducing support for novel data types and computation paradigms, such as mixed-precision arithmetic and in-memory computing. These innovations allow for even greater efficiency gains, making it possible to run complex AI models in real time on devices with strict power and space constraints. As hardware continues to evolve, close collaboration between hardware designers and AI researchers will be crucial to fully exploit these capabilities and ensure that software frameworks can take advantage of new features.

6.2. Emerging Techniques in Model Compression

Beyond traditional optimization and quantization, new model compression techniques are being developed to further reduce the size and complexity of AI models. Methods such as neural architecture search (NAS), automated pruning, and advanced knowledge distillation are enabling the creation of highly efficient models tailored to specific tasks and hardware. These techniques often leverage machine learning itself to discover optimal model structures and compression strategies, leading to solutions that outperform manually designed models in both efficiency and accuracy.

6.3. Ethical and Practical Considerations

As AI models become more efficient and widely deployed, ethical and practical challenges also come to the forefront. Ensuring that optimized models do not inadvertently introduce bias, compromise privacy, or reduce transparency is a growing concern. Developers must consider the implications of deploying AI in sensitive environments, such as healthcare or security, where the consequences of errors or unfairness can be significant. Additionally, maintaining the security and integrity of models on edge devices, where they may be more vulnerable to attacks, is an ongoing challenge.

Conclusion

The journey through optimization and quantization of AI models reveals how essential these techniques are for making artificial intelligence accessible, efficient, and practical in real-world scenarios. As AI continues to expand into new domains, the ability to deploy high-performing models on a variety of hardware platforms—ranging from powerful servers to tiny edge devices—depends on the thoughtful application of these methods. The following summary highlights the key techniques and best practices that have emerged as foundational in this field.

7.1. Summary of Key Techniques

Throughout this exploration, several core techniques have proven to be especially impactful. Pruning reduces model complexity by eliminating unnecessary parameters, resulting in smaller and faster networks. Knowledge distillation enables the transfer of knowledge from large, complex models to smaller, more efficient ones without significant loss of accuracy. Weight sharing and parameter reduction further compress models, making them suitable for deployment in resource-constrained environments. Quantization, both post-training and quantization-aware training, allows models to operate with lower numerical precision, dramatically improving speed and reducing memory usage. Hardware-aware model design ensures that models are tailored to the strengths and limitations of the target platform, maximizing performance and efficiency.

7.2. Best Practices for Model Optimization and Quantization

To achieve the best results, developers should follow a set of best practices when optimizing and quantizing AI models. It is important to start with a clear understanding of the deployment environment and its constraints, as this will guide the choice of techniques and tools. Benchmarking and validation on representative datasets are essential to ensure that efficiency gains do not come at the expense of unacceptable accuracy loss. Iterative testing and refinement, including the use of quantization-aware training and hardware-specific optimizations, help to address any issues that arise during deployment. Finally, staying informed about the latest advancements in both software frameworks and hardware accelerators enables developers to leverage new opportunities for further efficiency and performance improvements.

python

import tensorflow as tf

# Load a pre-trained Keras model (for example purposes, MobileNetV2)

model = tf.keras.applications.MobileNetV2(weights="imagenet", input_shape=(224, 224, 3))

# Convert the model to TensorFlow Lite with post-training quantization

converter = tf.lite.TFLiteConverter.from_keras_model(model)

converter.optimizations = [tf.lite.Optimize.DEFAULT]

tflite_quant_model = converter.convert()

# Save the quantized model to a file

with open("mobilenet_v2_quantized.tflite", "wb") as f:

f.write(tflite_quant_model)

print("mobilenet_v2_quantized.tflite")Advanced Deep Learning Techniques: From Transformers to Generative Models